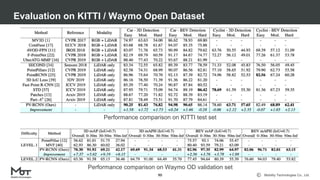

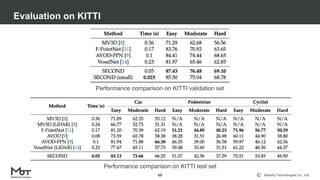

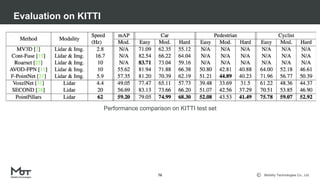

This document summarizes several 3D perception datasets and algorithms for autonomous driving. It begins with an introduction of Kazuyuki Miyazawa of Mobility Technologies Co., Ltd., and then covers popular datasets such as KITTI, ApolloScape, nuScenes, and the Waymo Open Dataset, describing their sensor setups, data formats, and licenses. It also summarizes seminal algorithms that take point cloud data as input, such as PointNet, VoxelNet, and SECOND.

![Mobility Technologies Co., Ltd.

KITTI [2012]

Sensor Setup

● GPS/IMU x 1

● LiDAR (64ch) x 1

● Grayscale Camera (1.4M) x 2

● Color Camera (1.4M) x 2

http://www.cvlibs.net/datasets/kitti/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-6-320.jpg)

![KITTI [2012]](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-7-320.jpg)

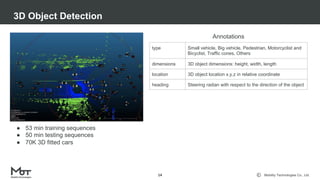

![3D Object Detection

● 7,481 training images / point clouds

● 7,518 test images / point clouds

● 80,256 labeled objects

type: Car, Van, Truck, Pedestrian, Person_sitting, Cyclist, Tram, Misc, or DontCare

truncated: float from 0 (non-truncated) to 1 (truncated), where truncated refers to the object leaving image boundaries

occluded: 0 = fully visible, 1 = partly occluded, 2 = largely occluded, 3 = unknown

alpha: observation angle of the object, ranging [-pi..pi]

bbox: 2D bounding box of the object in the image (left, top, right, bottom pixel coordinates)

dimensions: 3D object height, width, length (in meters)

location: 3D object location x, y, z in camera coordinates (in meters)

rotation_y: rotation ry around the Y-axis in camera coordinates [-pi..pi]

Annotations](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-8-320.jpg)
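Each object in a KITTI label file is one whitespace-separated line holding the fields above in order: type, truncated, occluded, alpha, four bbox values, three dimensions, three location values, and rotation_y (result files append a score). As a minimal sketch, assuming exactly that field order, a parser could look like the following; the class and function names are hypothetical, and the sample line is illustrative rather than quoted from the dataset.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KittiObject:
    """One object from a KITTI 3D object detection label file."""
    type: str                                # Car, Van, ..., DontCare
    truncated: float                         # 0 (non-truncated) .. 1 (truncated)
    occluded: int                            # 0, 1, 2 or 3
    alpha: float                             # observation angle [-pi, pi]
    bbox: Tuple[float, float, float, float]  # left, top, right, bottom (pixels)
    dimensions: Tuple[float, float, float]   # height, width, length (meters)
    location: Tuple[float, float, float]     # x, y, z in camera coordinates (meters)
    rotation_y: float                        # rotation around camera Y-axis [-pi, pi]


def parse_kitti_label_line(line: str) -> KittiObject:
    """Parse one label line (15 fields; an optional 16th score field is ignored)."""
    fields = line.split()
    if len(fields) < 15:
        raise ValueError(f"expected at least 15 fields, got {len(fields)}")
    v = [float(x) for x in fields[1:15]]
    return KittiObject(
        type=fields[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=(v[3], v[4], v[5], v[6]),
        dimensions=(v[7], v[8], v[9]),
        location=(v[10], v[11], v[12]),
        rotation_y=v[13],
    )


def parse_kitti_label_file(text: str) -> List[KittiObject]:
    """Parse every non-empty line of a label file's contents."""
    return [parse_kitti_label_line(line) for line in text.splitlines() if line.strip()]


# Illustrative line (not claimed to be a real KITTI annotation).
obj = parse_kitti_label_line(
    "Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 1.89 0.48 1.20 1.84 1.47 8.41 0.01"
)
print(obj.type, obj.dimensions, obj.location)
```

Keeping dimensions and location as separate tuples mirrors the annotation table, which makes it easy to feed them into whatever box representation a detector expects.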

![ApolloScape [2017]

Sensor Setup

● GPS/IMU x 1

● LiDAR x 2

● Color Camera (9.2M) x 2

http://apolloscape.auto/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-11-320.jpg)

![ApolloScape [2017]

Scene Parsing

3D Car Instance

Lane Segmentation](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-12-320.jpg)

![ApolloScape [2017]

Self Localization

Stereo](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-13-320.jpg)

![nuScenes [2019]

Sensor Setup

● GPS/IMU x 1

● LiDAR (32ch) x 1

● RADAR x 5

● Color Camera (1.4M) x 6

https://www.nuscenes.org/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-16-320.jpg)

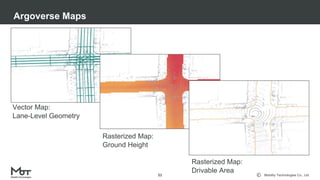

![Argoverse [2019]

Sensor Setup

● GPS x 1

● LiDAR (32ch) x 2

● Color Camera (4.8M) x 2

● Color Camera (2M) x 7

https://www.argoverse.org/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-20-320.jpg)

![Lyft Level 5 [2019]

Sensor Setup (BETA_V0)

● LiDAR (40ch) x 3

● WFOV Camera (1.2M) x 6

● Long-focal-length Camera (1.7M) x 1

Sensor Setup (BETA_++)

● LiDAR (64ch) x 1

● LiDAR (40ch) x 2

● WFOV Camera (2M) x 6

● Long-focal-length Camera (2M) x 1

https://level5.lyft.com/dataset/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-24-320.jpg)

![Audi Autonomous Driving Dataset (A2D2) [2020]

Sensor Setup

● GPS/IMU x 1

● LiDAR (16ch) x 5

● Color Camera (2.3M) x 6

https://www.a2d2.audi/a2d2/en.html](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-28-320.jpg)

![Audi Autonomous Driving Dataset (A2D2) [2020]](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-29-320.jpg)

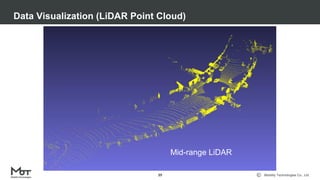

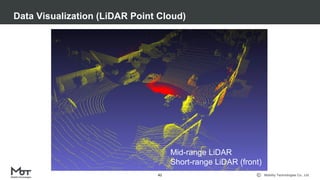

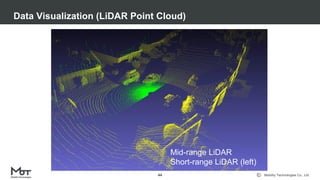

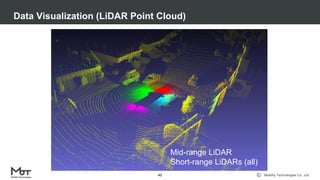

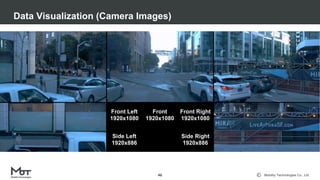

![Waymo Open Dataset [2019]

Sensor Setup

● Mid-Range (~75m) LiDAR x 1

● Short-Range (~20m) LiDAR x 4

● Color Camera (2M) x 3

● Color Camera (1.6M) x 2

https://waymo.com/open/](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-34-320.jpg)

![Mobility Technologies Co., Ltd.

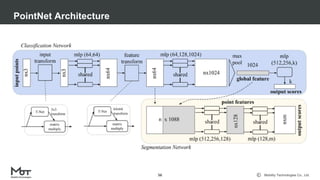

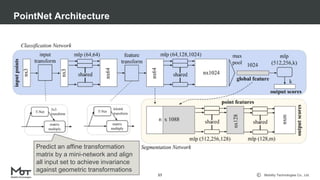

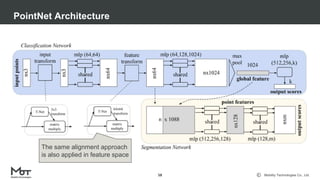

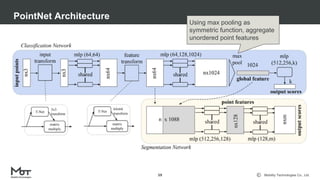

■ Design a novel type of neural network that directly consumes point clouds, which well respects

the permutation invariance of points in the input

■ Provide a unified architecture for applications ranging from object classification, part

segmentation, to scene semantic parsing

PointNet [C. Qi+, CVPR2017]

55

https://arxiv.org/abs/1612.00593](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-55-320.jpg)
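The permutation-invariance idea can be illustrated with a deliberately tiny sketch: apply the same function to every point, then aggregate with an element-wise max, which gives the same result for any ordering of the inputs. This assumes a single random, untrained layer in place of PointNet's deeper shared MLPs and omits its input and feature transform networks; the function names are hypothetical.

```python
import random
from typing import List, Sequence

Point = Sequence[float]


def shared_point_mlp(p: Point, weights: List[List[float]], bias: List[float]) -> List[float]:
    """One shared layer h(p) = ReLU(W p + b); the same W and b are applied to every point."""
    return [max(0.0, sum(w * x for w, x in zip(row, p)) + b) for row, b in zip(weights, bias)]


def global_feature(points: List[Point], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Per-point features followed by an element-wise max over points (a symmetric function)."""
    per_point = [shared_point_mlp(p, weights, bias) for p in points]
    return [max(channel) for channel in zip(*per_point)]


# Toy check with random, untrained weights: 3D input -> 8 feature channels.
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(8)]
b = [rng.uniform(-0.1, 0.1) for _ in range(8)]
cloud = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(100)]
shuffled = cloud[:]
rng.shuffle(shuffled)
print(global_feature(cloud, W, b) == global_feature(shuffled, W, b))  # True: order does not matter
```

Max is used here because it is cheap and exactly order-independent; any symmetric aggregation (sum, mean) would also make the output invariant to point order.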

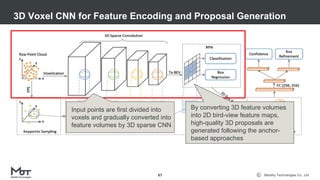

![Mobility Technologies Co., Ltd.

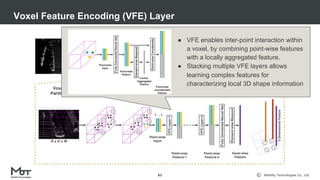

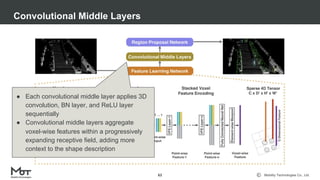

■ Divide a point cloud into 3D voxels and transform them into a unified feature representation

■ Descriptive volumetric representation is then connected to a RPN to generate detections

VoxelNet [Y, Zhou+, CVPR2018]

60

A voxel represents a value

on a regular grid in three-

dimensional space

https://en.wikipedia.org/wiki/Voxel

LiDAR ONLY

https://arxiv.org/abs/1711.06396](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-60-320.jpg)
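The voxel partitioning step can be sketched as grouping points by the integer index of the grid cell they fall in, then augmenting each point with its offset from the voxel centroid before a learned encoding layer (not shown). This is a minimal sketch under several assumptions: points carry only (x, y, z) with no reflectance, the per-voxel cap of 35 is a placeholder, points beyond the cap are simply dropped rather than randomly sampled, and the function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]


def voxelize(points: List[Point], voxel_size: Tuple[float, float, float],
             max_points_per_voxel: int = 35) -> Dict[VoxelIndex, List[Point]]:
    """Group points by the integer index of the equally spaced voxel they fall in.

    Keeps at most max_points_per_voxel points per voxel (first come, first kept).
    """
    voxels: Dict[VoxelIndex, List[Point]] = defaultdict(list)
    for p in points:
        idx = tuple(math.floor(c / s) for c, s in zip(p, voxel_size))
        if len(voxels[idx]) < max_points_per_voxel:
            voxels[idx].append(p)
    return dict(voxels)


def augment_with_centroid_offsets(voxel_points: List[Point]) -> List[Tuple[float, ...]]:
    """Append each point's offset from the voxel's centroid: (x, y, z, dx, dy, dz)."""
    n = len(voxel_points)
    cx, cy, cz = (sum(p[i] for p in voxel_points) / n for i in range(3))
    return [(x, y, z, x - cx, y - cy, z - cz) for x, y, z in voxel_points]


cloud = [(0.1, 0.1, 0.1), (0.3, 0.1, 0.1), (1.5, 0.2, 0.1), (-0.5, 0.0, 0.0)]
grid = voxelize(cloud, voxel_size=(1.0, 1.0, 1.0))
print(sorted(grid))  # [(-1, 0, 0), (0, 0, 0), (1, 0, 0)]
print(augment_with_centroid_offsets(grid[(0, 0, 0)]))
```

Only non-empty voxels are stored, which is why LiDAR voxel grids are naturally sparse; that sparsity is what SECOND later exploits with sparse convolution.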

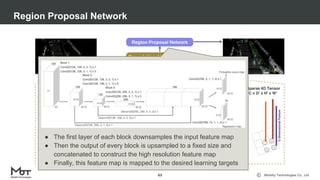

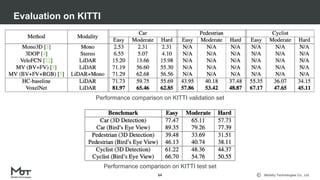

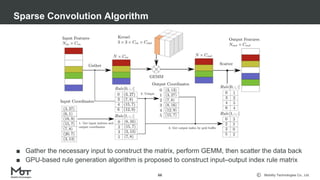

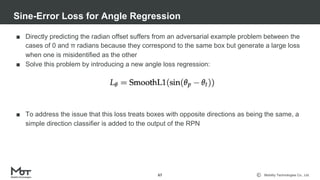

![SECOND (Sparsely Embedded CONvolutional Detection) [Y. Yan+, Sensors2018]

■ Apply sparse convolution to greatly increase the speed of training and inference

■ Introduce a novel angle loss regression approach that avoids the large loss generated when the angle prediction error equals π, even though a box rotated by π occupies exactly the same region

LiDAR ONLY

https://pdfs.semanticscholar.org/5125/a16039cabc6320c908a4764f32596e018ad3.pdf](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-65-320.jpg)
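SECOND's angle loss applies smooth L1 to sin(θp − θt) instead of to the raw residual, so an error of π costs nothing; SECOND then relies on a separate direction classifier to tell the two opposite headings apart. A minimal sketch comparing the two losses, assuming a smooth L1 with β = 1 (an assumption, not taken from the slide); the function names are hypothetical.

```python
import math


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Smooth L1 loss of a scalar residual (quadratic near 0, linear beyond beta)."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def naive_angle_loss(theta_pred: float, theta_true: float) -> float:
    """Smooth L1 directly on the angle residual: large when the error is pi."""
    return smooth_l1(theta_pred - theta_true)


def sine_error_angle_loss(theta_pred: float, theta_true: float) -> float:
    """Smooth L1 on sin(theta_pred - theta_true): (near) zero for errors of 0 or pi."""
    return smooth_l1(math.sin(theta_pred - theta_true))


for err in (0.1, math.pi / 2, math.pi):
    print(f"error={err:.3f}  naive={naive_angle_loss(err, 0.0):.3f}  "
          f"sine={sine_error_angle_loss(err, 0.0):.3f}")
```

The trade-off is that the sine form cannot distinguish a heading from its opposite, which is exactly the ambiguity the direction classifier resolves.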

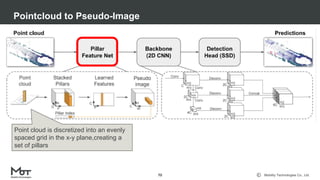

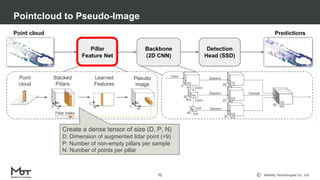

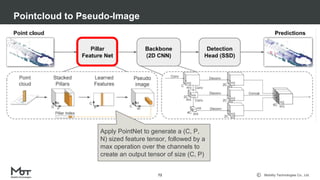

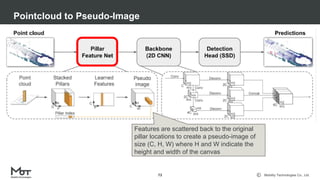

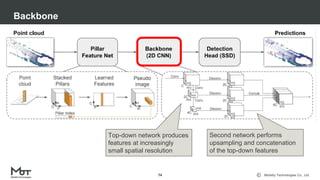

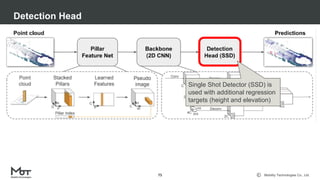

![PointPillars [A. Lang+, CVPR2019]

■ Propose an encoder that learns a representation of point clouds organized in vertical columns (pillars) and generates a pseudo-2D image

■ The encoded features can be used with any standard 2D convolutional detection architecture, avoiding computationally expensive 3D ConvNets

LiDAR ONLY

https://arxiv.org/abs/1812.05784](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-69-320.jpg)
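The pillar idea can be sketched in two steps: bucket points into columns on an x-y grid (z is not discretized), then scatter one feature vector per non-empty pillar into a dense C x H x W canvas that a 2D CNN can consume. In PointPillars the per-pillar feature comes from a learned encoder; here `pillar_feature` is a hypothetical hand-crafted stand-in (max z, min z, point count), and the pillar size and ranges are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
PillarIndex = Tuple[int, int]  # (row iy, column ix) in the bird's-eye-view grid


def pillarize(points: List[Point], pillar_size: float,
              x_range: Tuple[float, float], y_range: Tuple[float, float]):
    """Bucket points into vertical columns on an x-y grid; return (pillars, (H, W))."""
    nx = int(round((x_range[1] - x_range[0]) / pillar_size))
    ny = int(round((y_range[1] - y_range[0]) / pillar_size))
    pillars: Dict[PillarIndex, List[Point]] = {}
    for x, y, z in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the detection range
        ix = min(int((x - x_range[0]) // pillar_size), nx - 1)
        iy = min(int((y - y_range[0]) // pillar_size), ny - 1)
        pillars.setdefault((iy, ix), []).append((x, y, z))
    return pillars, (ny, nx)


def pillar_feature(pts: List[Point]) -> List[float]:
    """HYPOTHETICAL hand-crafted stand-in for the learned per-pillar encoder."""
    zs = [p[2] for p in pts]
    return [max(zs), min(zs), float(len(pts))]


def scatter_to_pseudo_image(pillars: Dict[PillarIndex, List[Point]],
                            grid_shape: Tuple[int, int],
                            num_channels: int = 3) -> List[List[List[float]]]:
    """Write each pillar's feature vector into a dense C x H x W canvas; empty cells stay 0."""
    ny, nx = grid_shape
    canvas = [[[0.0] * nx for _ in range(ny)] for _ in range(num_channels)]
    for (iy, ix), pts in pillars.items():
        for c, value in enumerate(pillar_feature(pts)):
            canvas[c][iy][ix] = value
    return canvas


cloud = [(0.5, 0.5, 1.0), (0.7, 0.2, -1.0), (2.5, 1.5, 0.3), (10.0, 10.0, 0.0)]
pillars, shape = pillarize(cloud, pillar_size=1.0, x_range=(0.0, 4.0), y_range=(0.0, 2.0))
image = scatter_to_pseudo_image(pillars, shape)
print(shape, image[2])  # channel 2 holds the point count per cell
```

Because the canvas is an ordinary 2D array, everything after the scatter step can reuse standard 2D detection backbones, which is the point the slide makes.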

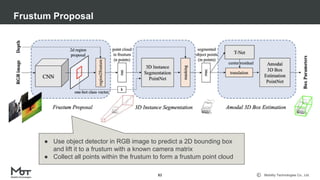

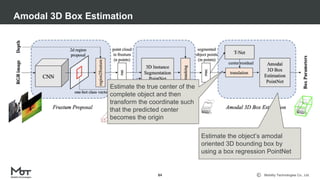

![Frustum PointNets [C. Qi+, CVPR2018]

■ First generate 2D object region proposals in the RGB image using a CNN; each 2D region is then extruded into a 3D viewing frustum to obtain the point cloud inside it

■ PointNet predicts a 3D bounding box for the object from the points in the frustum

LiDAR + Camera

https://arxiv.org/abs/1711.08488](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-81-320.jpg)
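Extruding a 2D box into a viewing frustum amounts to keeping the 3D points whose image projection lands inside that box. A minimal sketch, assuming the points are already expressed in camera coordinates (with KITTI this requires the LiDAR-to-camera calibration) and a simple pinhole camera with hypothetical intrinsics fx, fy, cx, cy; the later steps, such as normalizing the frustum orientation and running PointNet on the selected points, are not shown.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in camera coordinates, z pointing forward


def points_in_frustum(points_cam: List[Point],
                      box2d: Tuple[float, float, float, float],
                      fx: float, fy: float, cx: float, cy: float,
                      min_depth: float = 0.1) -> List[Point]:
    """Keep points whose pinhole projection lands inside box2d = (left, top, right, bottom)."""
    left, top, right, bottom = box2d
    kept = []
    for x, y, z in points_cam:
        if z <= min_depth:
            continue  # behind (or too close to) the camera: cannot be in the frustum
        u = fx * x / z + cx  # image column
        v = fy * y / z + cy  # image row
        if left <= u <= right and top <= v <= bottom:
            kept.append((x, y, z))
    return kept


cloud = [(0.0, 0.0, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -5.0), (0.5, 0.5, 5.0)]
print(points_in_frustum(cloud, (40, 40, 60, 60), fx=100, fy=100, cx=50, cy=50))
```

Restricting the search to one frustum per 2D proposal shrinks the 3D problem to a small point set, at the cost of depending on the 2D detector's recall.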

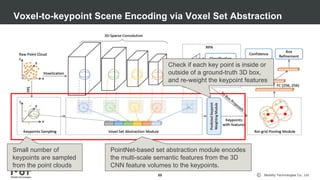

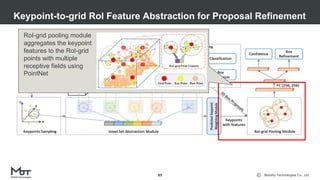

![PV-RCNN [S. Shi+, CVPR2020]

https://arxiv.org/abs/1912.13192

■ Voxel-based operation efficiently encodes multi-scale feature representations and can generate high-quality 3D proposals, while the PointNet-based set abstraction operation preserves accurate location information with flexible receptive fields

■ Integrate the two operations via the voxel-to-keypoint 3D scene encoding and the keypoint-to-grid RoI feature abstraction

LiDAR ONLY](https://image.slidesharecdn.com/202005213d-perception-for-autonomous-drivingpublish-200521101605/85/3D-Perception-for-Autonomous-Driving-Datasets-and-Algorithms-86-320.jpg)
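The voxel-to-keypoint encoding starts from a small set of keypoints sampled from the raw point cloud with farthest point sampling (FPS), around which voxel features are then aggregated. A minimal sketch of FPS alone, assuming a plain list of 3D points and a hypothetical function name; it is O(n·k) pure Python, and the feature aggregation itself is not shown.

```python
import math
from typing import List, Sequence


def farthest_point_sampling(points: List[Sequence[float]], k: int, start: int = 0) -> List[int]:
    """Return indices of up to k points, each chosen greedily to be farthest from those already chosen."""
    n = len(points)
    if n == 0 or k <= 0:
        return []
    k = min(k, n)
    chosen = [start]
    chosen_set = {start}
    # dist[i] = distance from point i to its nearest already-chosen point
    dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < k:
        nxt = max((i for i in range(n) if i not in chosen_set), key=dist.__getitem__)
        chosen.append(nxt)
        chosen_set.add(nxt)
        for i, p in enumerate(points):
            dist[i] = min(dist[i], math.dist(p, points[nxt]))
    return chosen


line = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
print(farthest_point_sampling(line, 3))  # [0, 3, 2]
```

FPS spreads keypoints evenly over occupied space rather than over dense regions, so a few thousand keypoints can still cover the whole scene.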