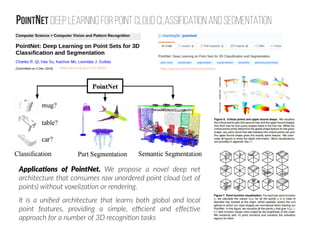

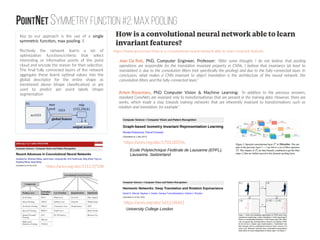

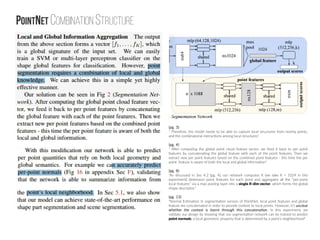

The document discusses the application of deep learning to 3D point cloud data, emphasizing the importance of such data in fields such as augmented reality and autonomous driving. It highlights ongoing research efforts, such as workshops that foster interdisciplinary collaboration on 3D data processing, and introduces PointNet, an architecture that performs classification and segmentation directly on unordered point clouds. It also outlines PointNet's architectural components, including its pooling operation, along with its performance results and potential applications in other domains.
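PointNet handles unordered input in two steps. It first applies the same per-point function to every point, then aggregates the results with a symmetric function (max pooling), so reordering the points cannot change the global feature. The following minimal pure-Python sketch illustrates that idea. It assumes a single shared layer and a linear classifier. The layer sizes, random weights, and helper names (`shared_mlp`, `global_feature`, `classify`) are hypothetical and far simpler than the real network, which stacks several shared layers and also learns input and feature transforms.

```python
import random

def relu(x):
    """Rectified linear unit on a scalar."""
    return x if x > 0.0 else 0.0

def shared_mlp(point, weights, biases):
    """Hypothetical one-layer h applied to a single (x, y, z) point.
    The same weights are reused for every point ("shared" MLP)."""
    return [relu(sum(w * c for w, c in zip(row, point)) + b)
            for row, b in zip(weights, biases)]

def max_pool(features):
    """Element-wise max over per-point feature vectors.
    max is symmetric, so the result does not depend on point order."""
    return [max(column) for column in zip(*features)]

def global_feature(points, weights, biases):
    """Per-point features followed by max pooling -> one global descriptor."""
    return max_pool([shared_mlp(p, weights, biases) for p in points])

def classify(points, weights, biases, cls_weights):
    """Linear classifier on the global feature; returns the arg-max class index."""
    g = global_feature(points, weights, biases)
    scores = [sum(w * f for w, f in zip(row, g)) for row in cls_weights]
    return max(range(len(scores)), key=lambda k: scores[k])

def random_matrix(rows, cols, rng):
    """Toy weights drawn uniformly from [-1, 1]; stand-ins for trained parameters."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

if __name__ == "__main__":
    rng = random.Random(0)
    hidden, classes = 16, 4  # hypothetical sizes, not PointNet's real ones
    weights = random_matrix(hidden, 3, rng)
    biases = [rng.uniform(-0.1, 0.1) for _ in range(hidden)]
    cls_weights = random_matrix(classes, hidden, rng)

    cloud = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(128)]
    shuffled = cloud[:]
    rng.shuffle(shuffled)  # same points, different order

    print(global_feature(cloud, weights, biases) == global_feature(shuffled, weights, biases))  # True
    print(classify(cloud, weights, biases, cls_weights) == classify(shuffled, weights, biases, cls_weights))  # True
```

Both comparisons print `True`. Each point's feature is computed independently, and `max` only selects among those values, so the pooled vector is bit-for-bit identical for any permutation of the input.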

![PointNet Modifications Architecture #1: Uncertainty estimation? (in the classification pipeline only, not in the segmentation part)](https://image.slidesharecdn.com/3dv2017initreport-170503031811/85/PointNet-11-320.jpg)

https://arxiv.org/pdf/1703.04977.pdf

http://mlg.eng.cam.ac.uk/yarin/blog_3d801aa532c1ce.html
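The slide proposes adding uncertainty estimation to PointNet's classification pipeline and points to the linked paper and blog. One technique associated with that line of work is Monte Carlo (MC) dropout: keep dropout active at test time, average the softmax outputs of several stochastic forward passes, and use the predictive entropy as an uncertainty score. The sketch below assumes that approach. It applies dropout only to a precomputed global feature before a linear classifier, matching the "classification pipeline only" note. `mc_dropout_predict`, its parameters, and the toy weights are hypothetical illustrations, not PointNet's code.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dropout(vec, p, rng):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in vec]

def mc_dropout_predict(global_feat, cls_weights, p=0.5, n_samples=50, seed=0):
    """Hypothetical MC-dropout classifier head.
    Returns the mean class probabilities over n_samples stochastic passes
    and the predictive entropy of that mean (higher = more uncertain)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("dropout probability p must be in [0, 1)")
    rng = random.Random(seed)
    num_classes = len(cls_weights)
    mean_probs = [0.0] * num_classes
    for _ in range(n_samples):
        dropped = dropout(global_feat, p, rng)  # dropout stays on at test time
        scores = [sum(w * f for w, f in zip(row, dropped)) for row in cls_weights]
        probs = softmax(scores)
        for k in range(num_classes):
            mean_probs[k] += probs[k] / n_samples
    entropy = -sum(q * math.log(q) for q in mean_probs if q > 0.0)
    return mean_probs, entropy

if __name__ == "__main__":
    rng = random.Random(42)
    feat = [rng.uniform(0.0, 1.0) for _ in range(16)]  # stand-in for a max-pooled feature
    cls_w = [[rng.uniform(-1.0, 1.0) for _ in range(16)] for _ in range(4)]
    probs, entropy = mc_dropout_predict(feat, cls_w, p=0.5, n_samples=100)
    print("mean probabilities:", [round(q, 3) for q in probs])
    print("predictive entropy:", round(entropy, 3), "max possible:", round(math.log(4), 3))
```

Restricting dropout to the classifier head keeps the per-point feature extractor deterministic, so the extra cost is only the repeated linear layer and softmax. Whether this matches what the slide's authors intended is an assumption.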