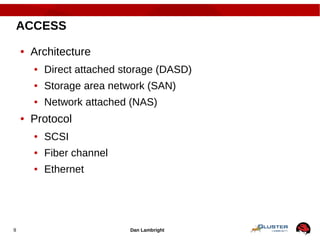

This document summarizes a presentation about software-defined storage using the open-source Gluster file system. It begins with an overview of storage concepts such as reliability, performance, and scaling. It then discusses the history and types of storage and provides case studies of proprietary storage systems. The presentation introduces software-defined storage and Gluster, describing Gluster's modular design, its use in cloud computing, and its pros and cons. Key Gluster concepts are defined, and its distributed and replicated volume types are explained. The presentation concludes with instructions for setting up and using Gluster.