This document discusses performance optimization techniques for all-flash storage systems built on ARM-based processors. It provides details on:

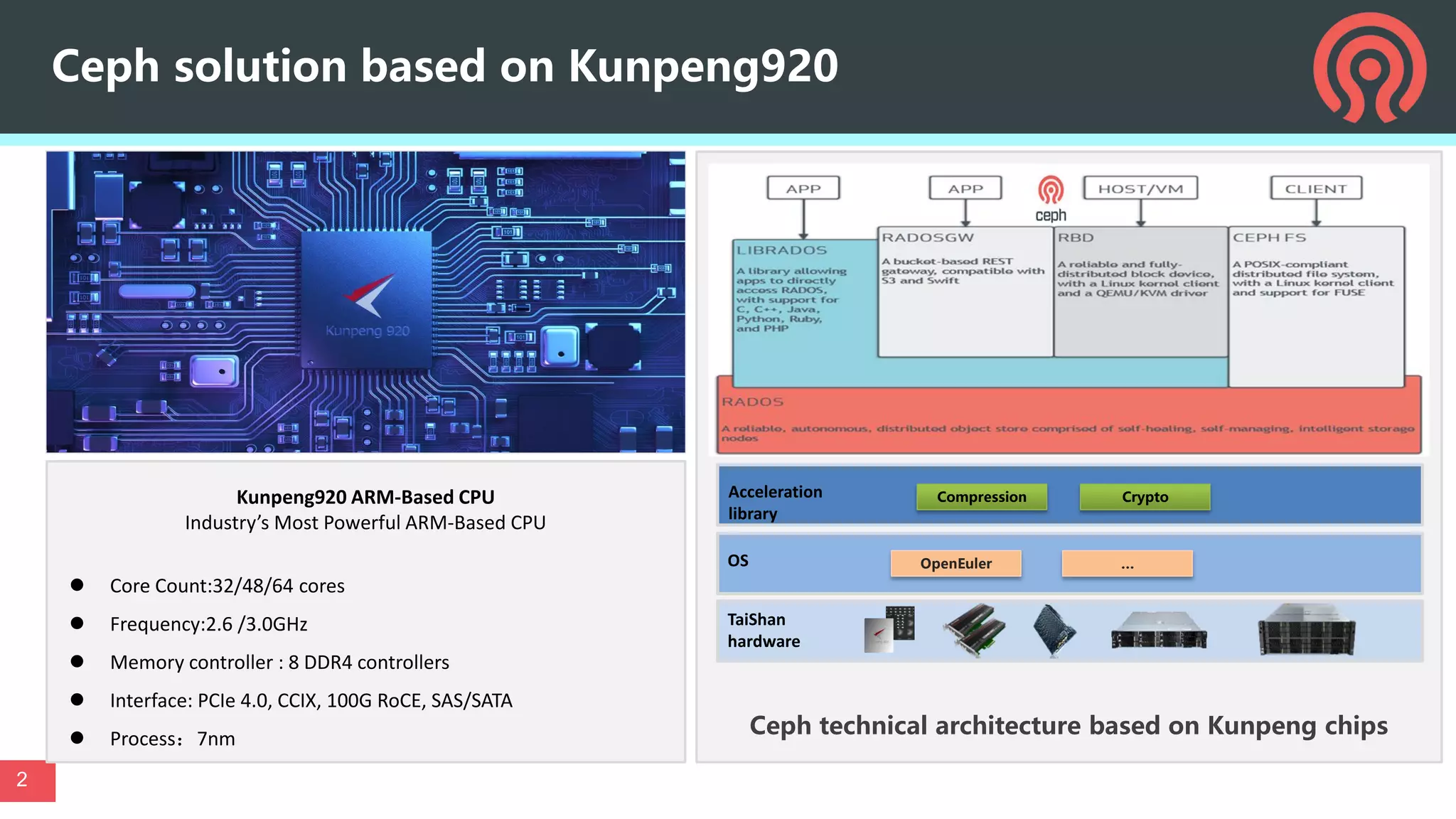

- The processor used: the Kunpeng 920, an ARM-based CPU with 32-64 cores running at 2.6-3.0 GHz, along with its memory and I/O controllers.

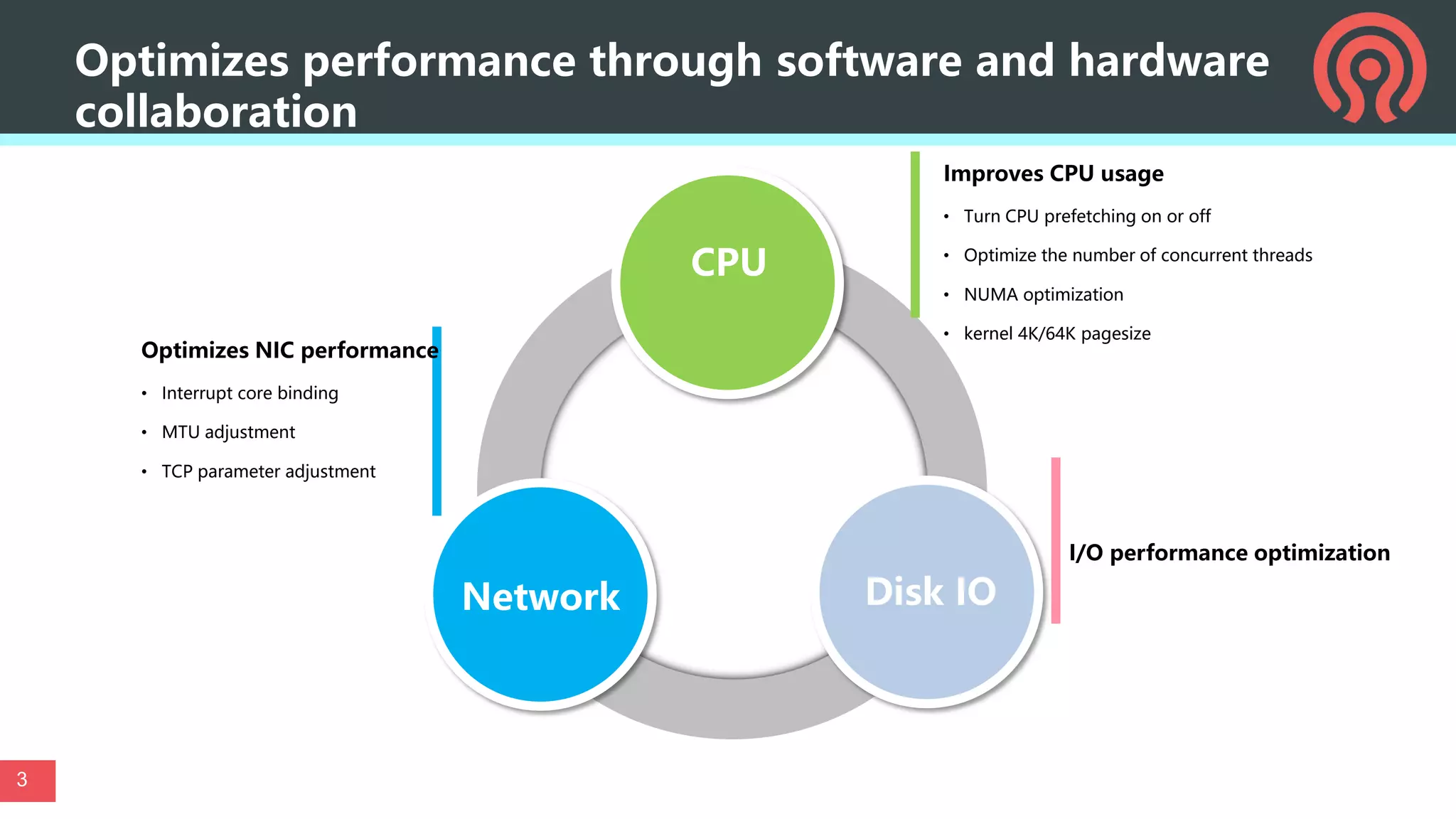

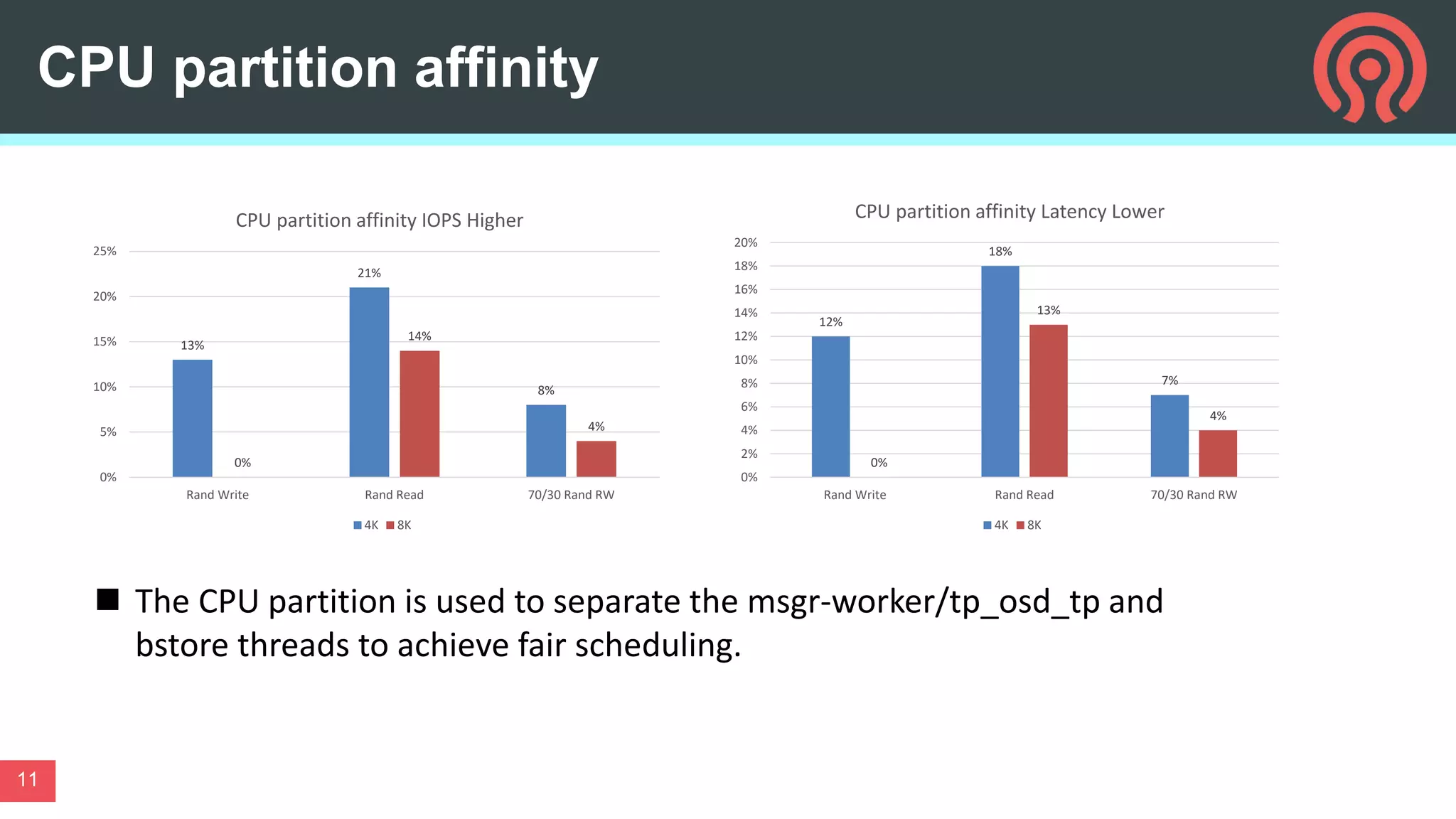

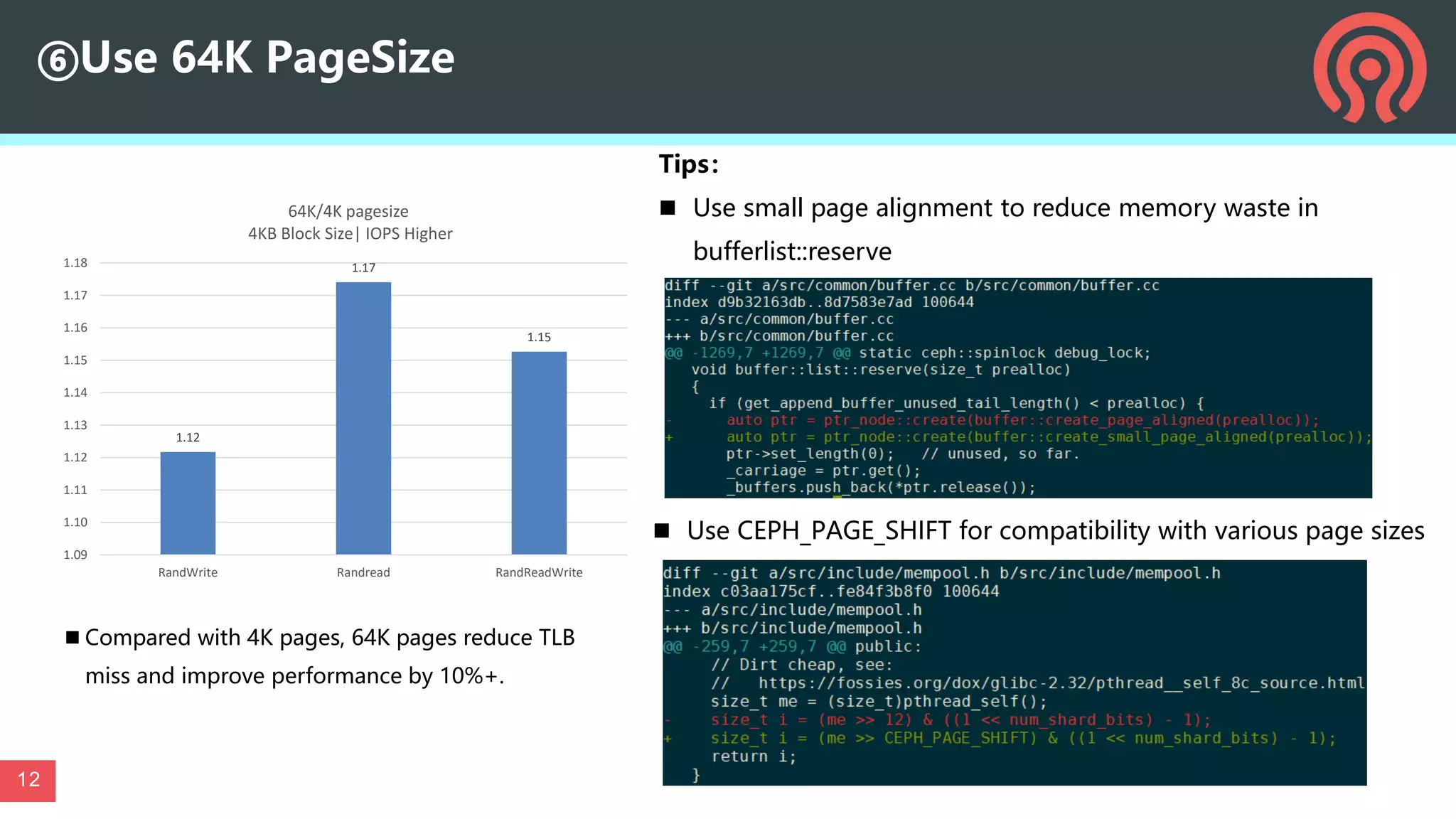

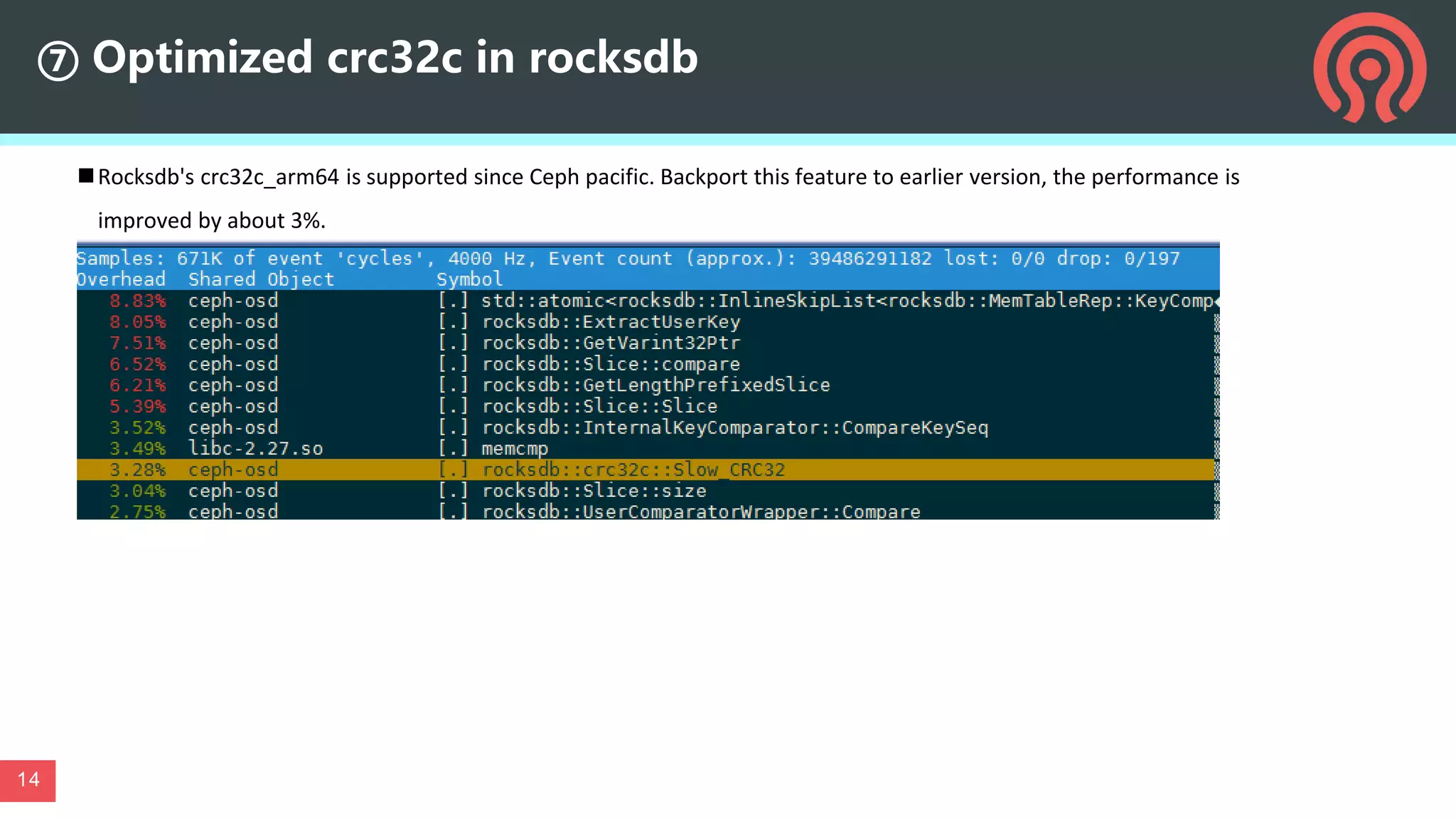

- Performance optimization through both software and hardware techniques, targeting CPU utilization, I/O performance, and network performance.

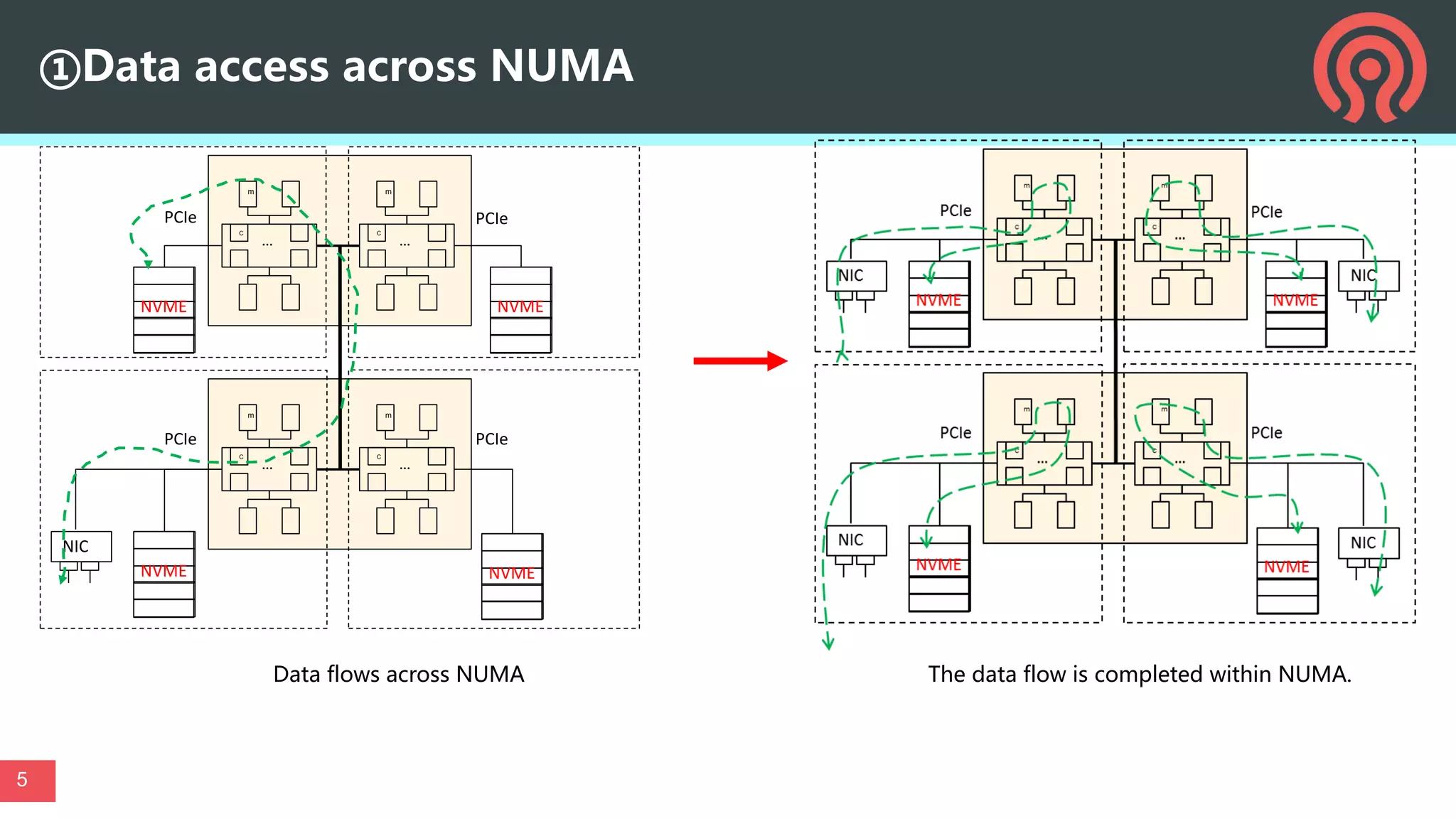

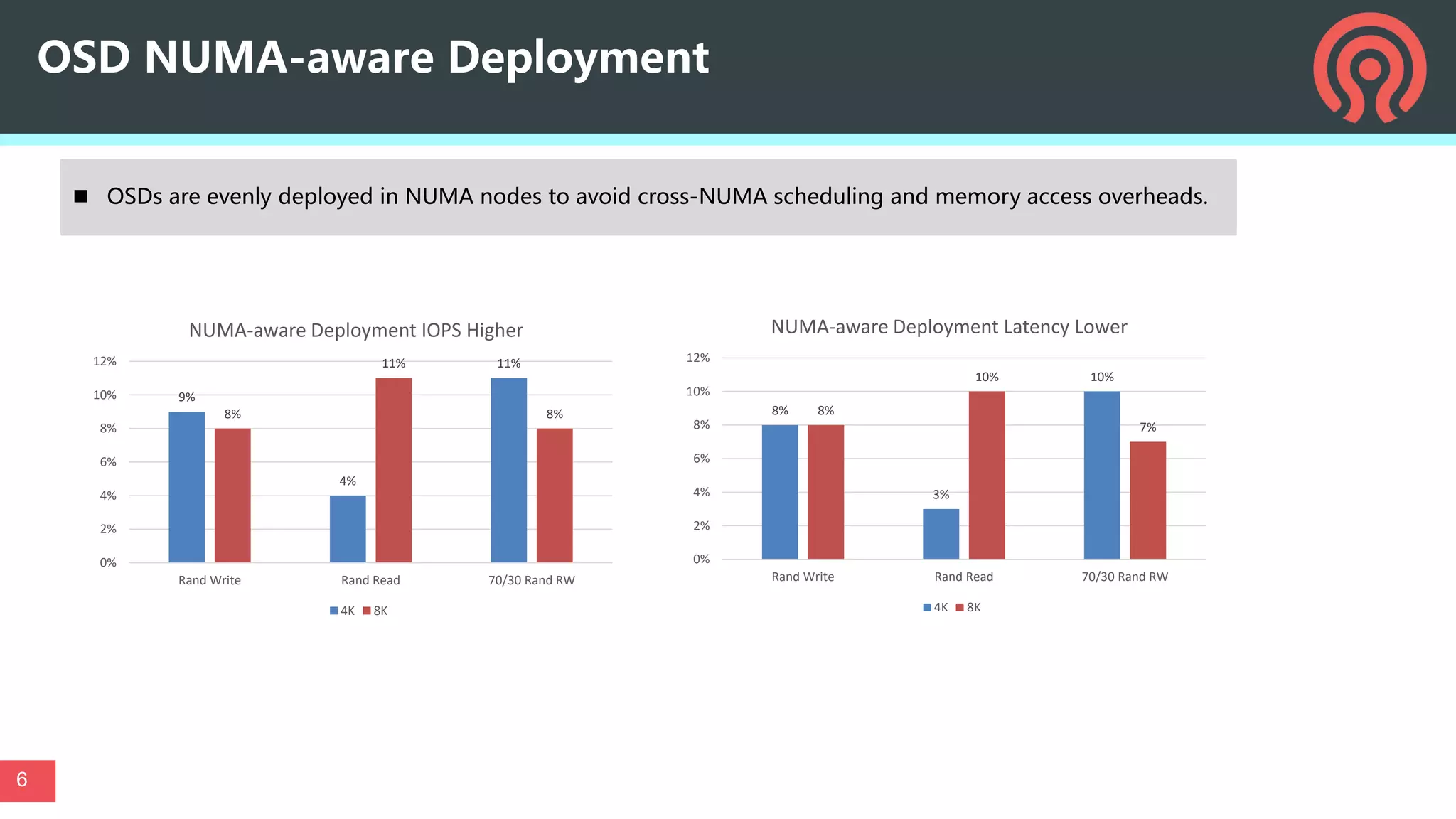

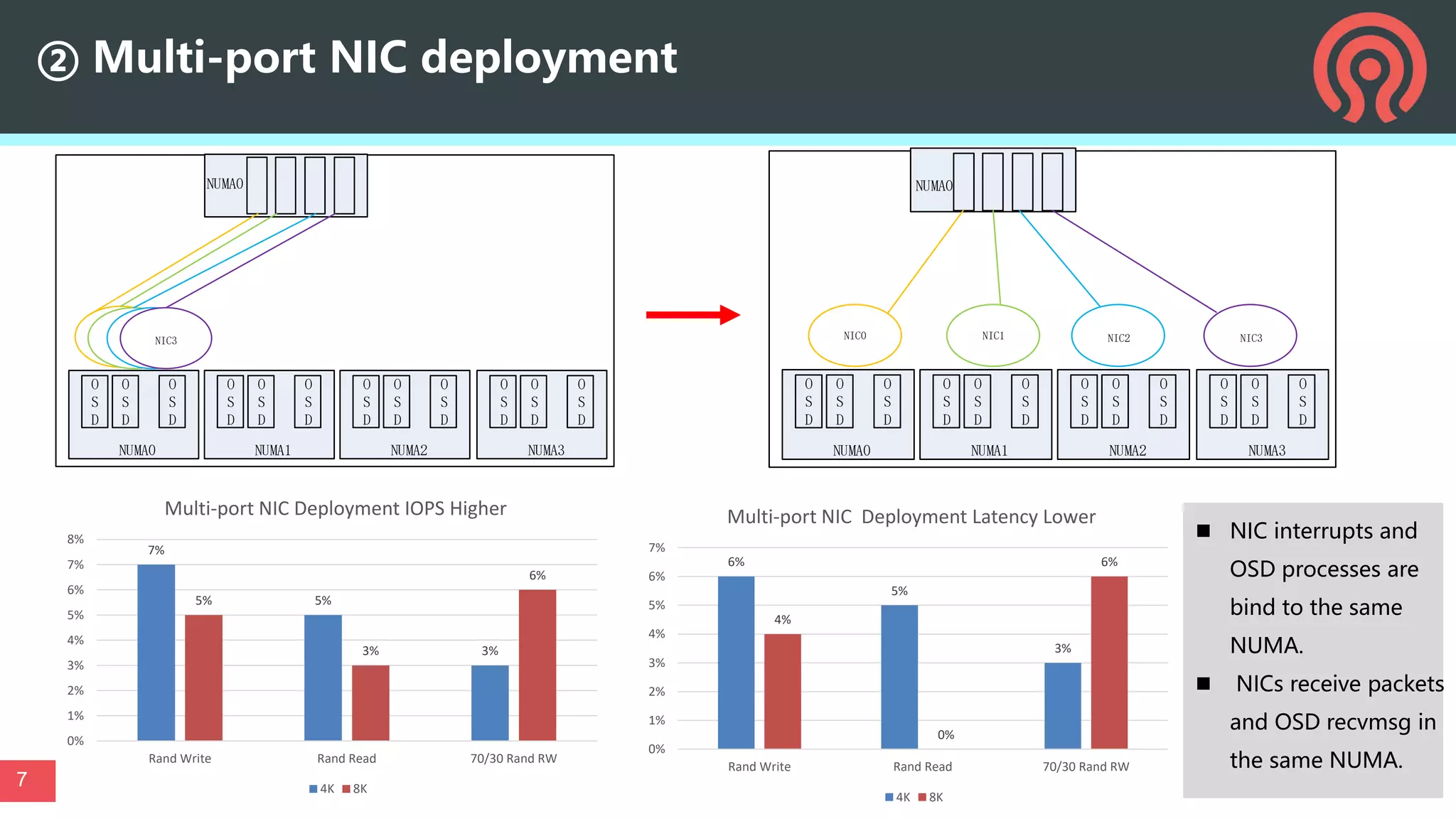

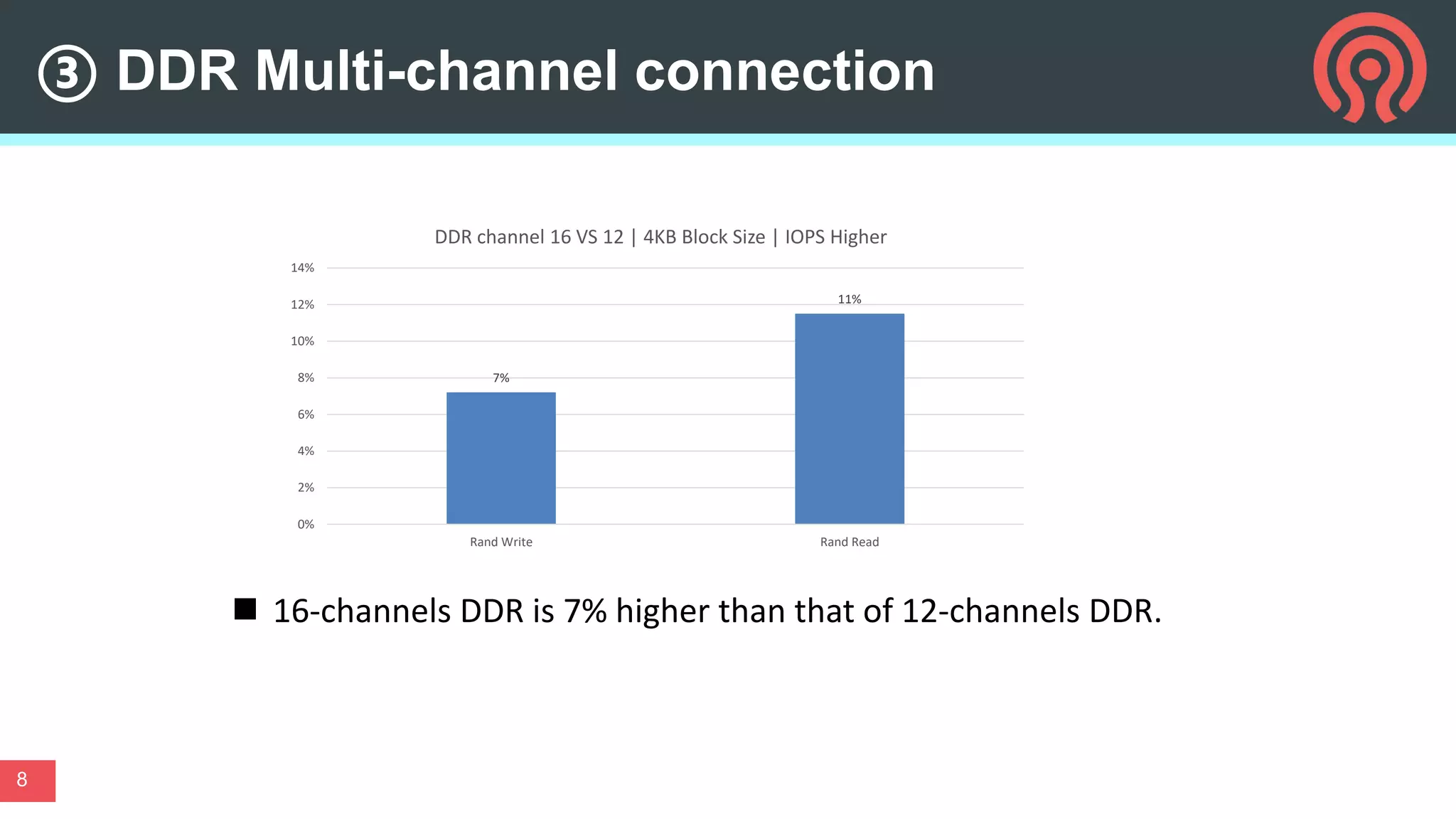

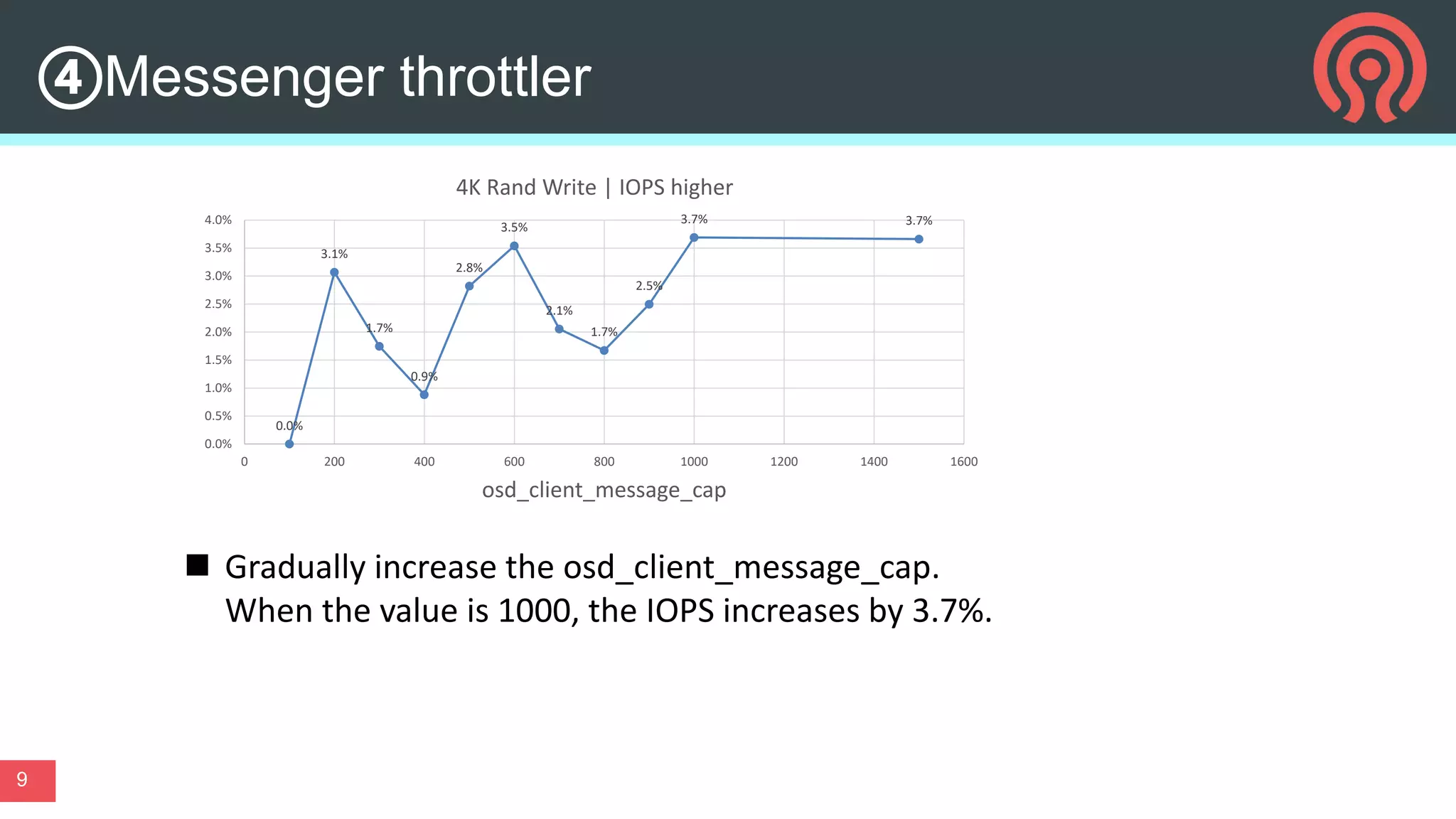

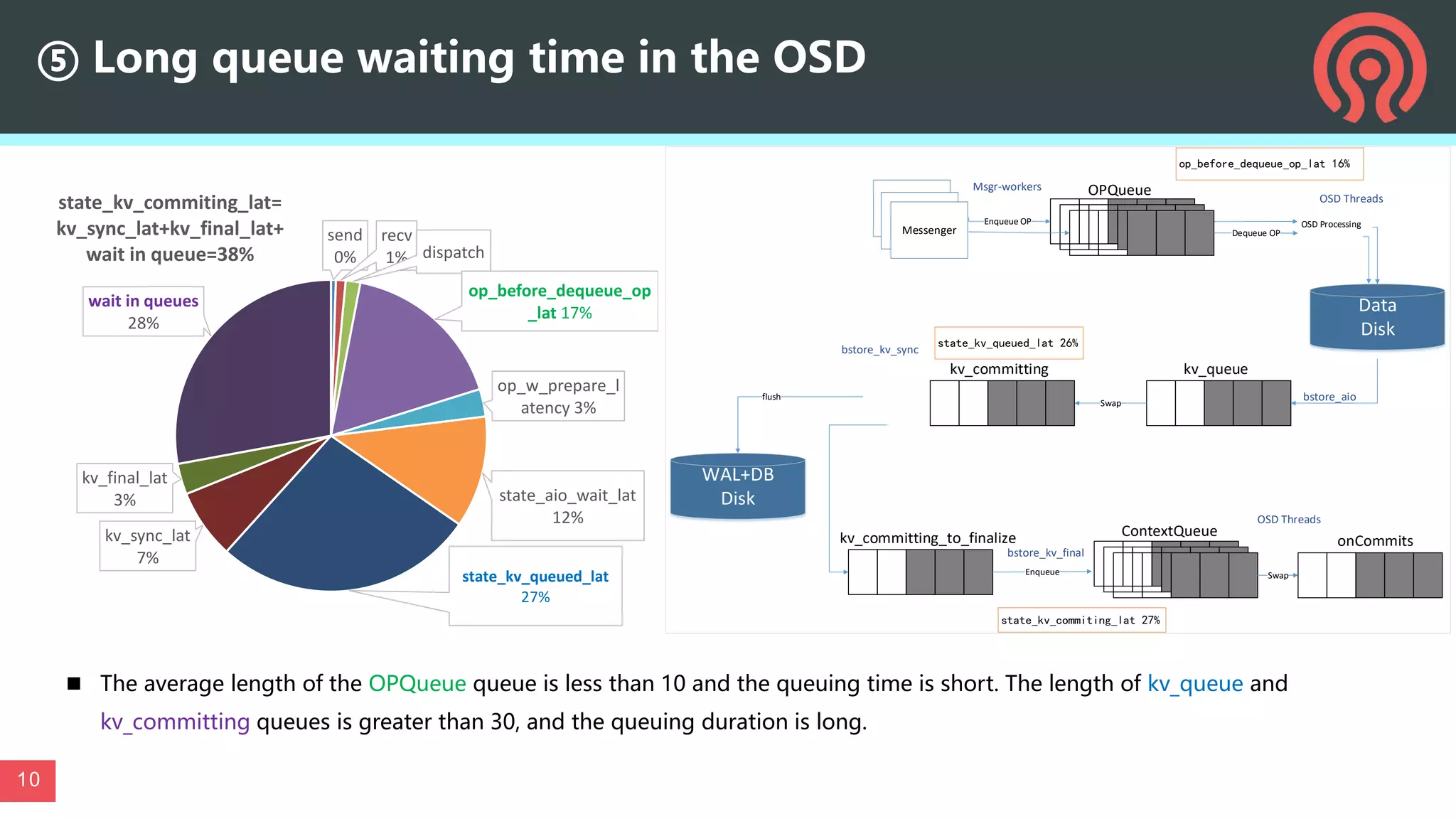

- Specific optimization techniques, such as placing data to reduce cross-NUMA access, deploying multi-port NICs, using multiple DDR channels, adjusting message throttling, and reducing queue wait times in the object storage daemon (OSD).

- Other
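
The data placement and multi-port NIC items above share one idea: keep each OSD's CPU cores, disk, and NIC port on the same NUMA node so that I/O does not cross nodes. The sketch below illustrates that idea only; it is not taken from the source. The `Device` class, the `plan_placement` function, the device names, and the assumption that cores are numbered contiguously per NUMA node are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str       # hypothetical device name, e.g. "nvme0" or "eth0"
    numa_node: int  # NUMA node the device is attached to


def plan_placement(osd_disks, nic_ports, cores_per_node):
    """Return {osd_id: (numa_node, nic_name, cpu_list)}.

    Each OSD is assigned the cores of the NUMA node that owns its disk,
    plus a NIC port on the same node when one exists.
    Assumption: cores are numbered contiguously per node
    (node 0 owns cores 0..cores_per_node-1, and so on).
    """
    # Index NIC ports by NUMA node for a quick local lookup.
    nic_by_node = {}
    for nic in nic_ports:
        nic_by_node.setdefault(nic.numa_node, []).append(nic.name)

    plan = {}
    for osd_id, disk in enumerate(osd_disks):
        node = disk.numa_node
        first = node * cores_per_node
        cpus = list(range(first, first + cores_per_node))
        local_nics = nic_by_node.get(node, [])
        # None signals that no node-local NIC port is available.
        nic = local_nics[osd_id % len(local_nics)] if local_nics else None
        plan[osd_id] = (node, nic, cpus)
    return plan


# Hypothetical two-node topology with one disk and one NIC port per node.
disks = [Device("nvme0", 0), Device("nvme1", 1)]
nics = [Device("eth0", 0), Device("eth1", 1)]
example_plan = plan_placement(disks, nics, cores_per_node=4)
```

On Linux, a CPU list from such a plan could then be applied to a running process with `os.sched_setaffinity(pid, cpus)`. How the actual system discovers its topology and pins OSDs is not described in this summary.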