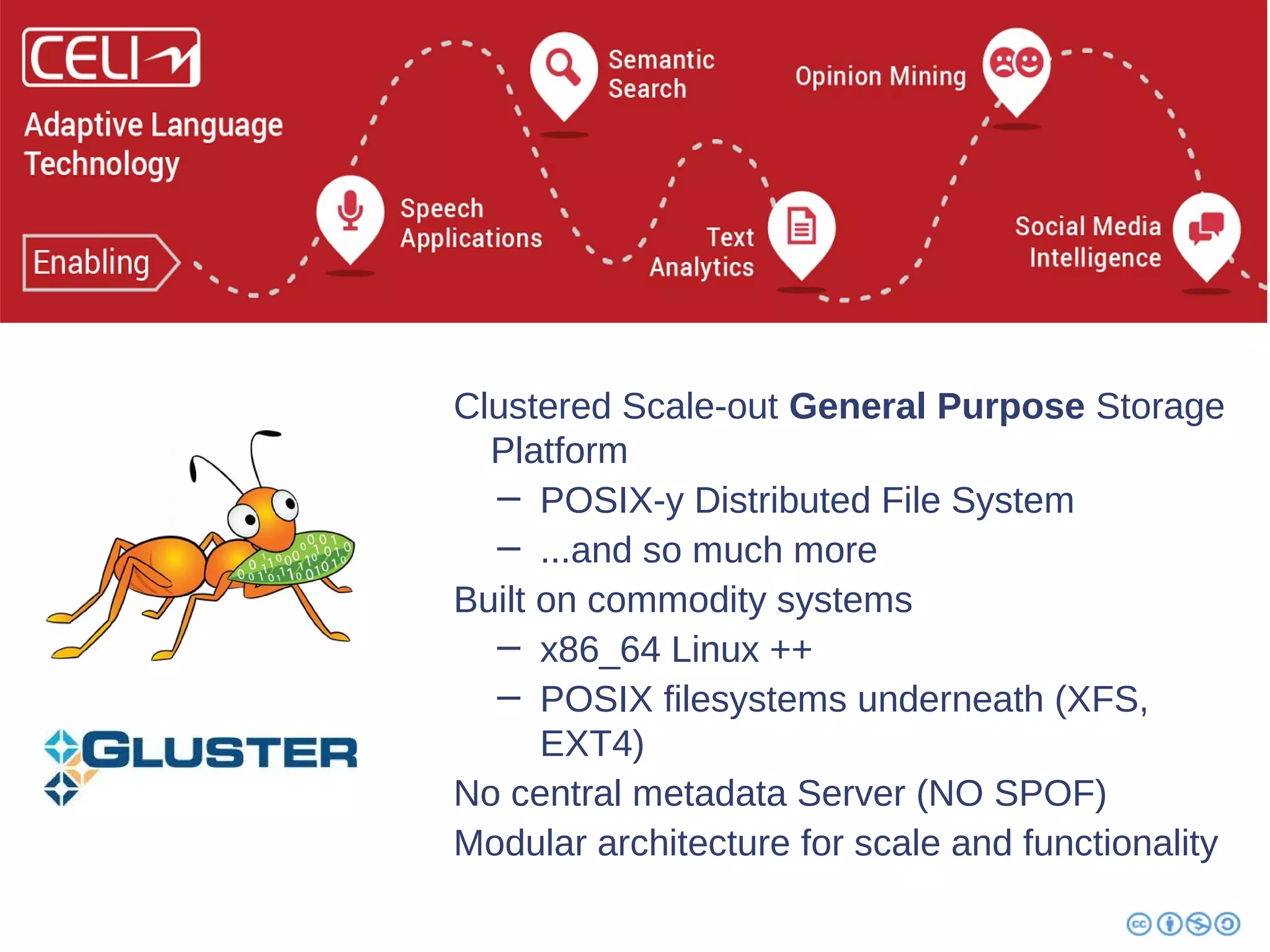

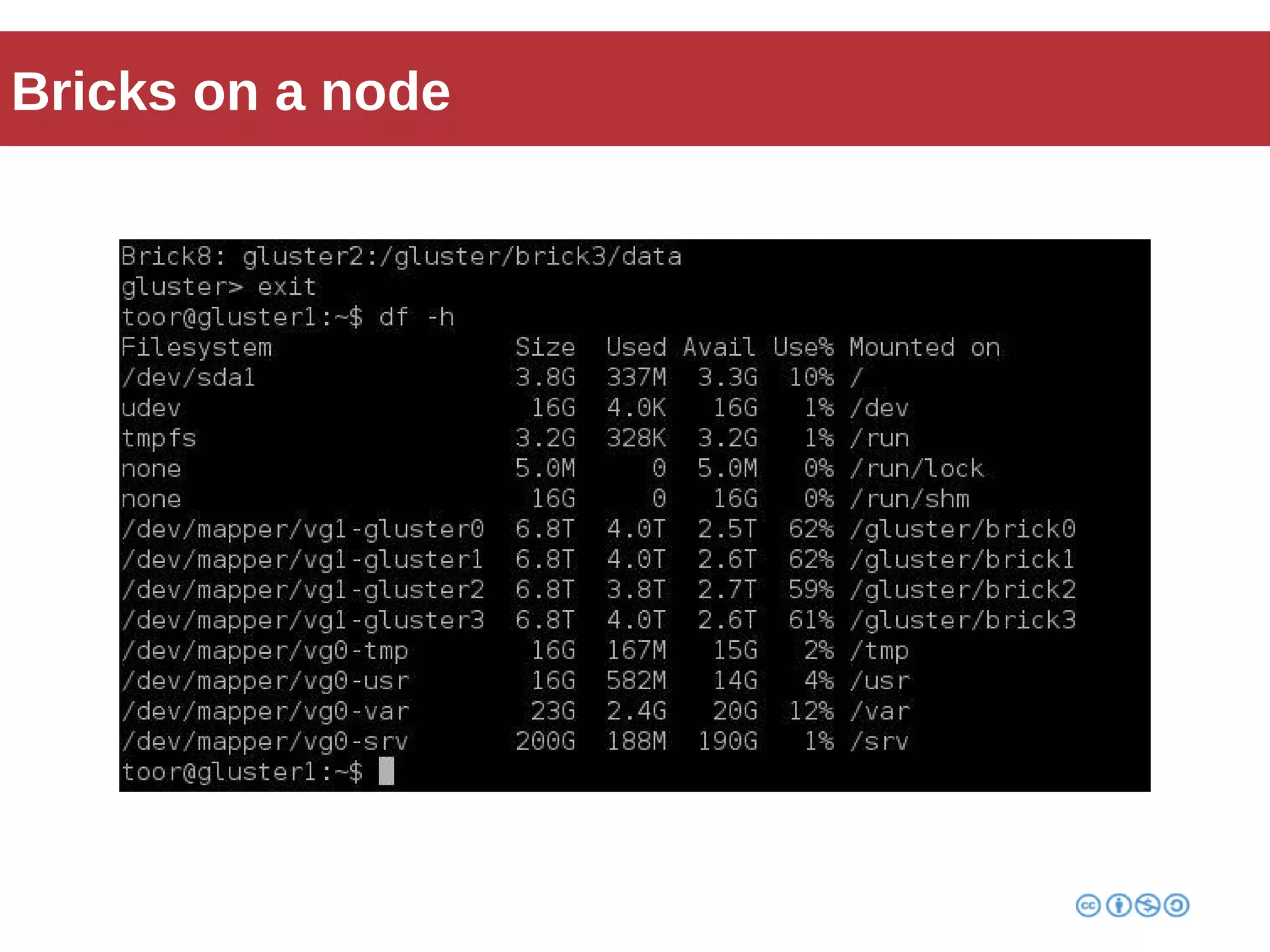

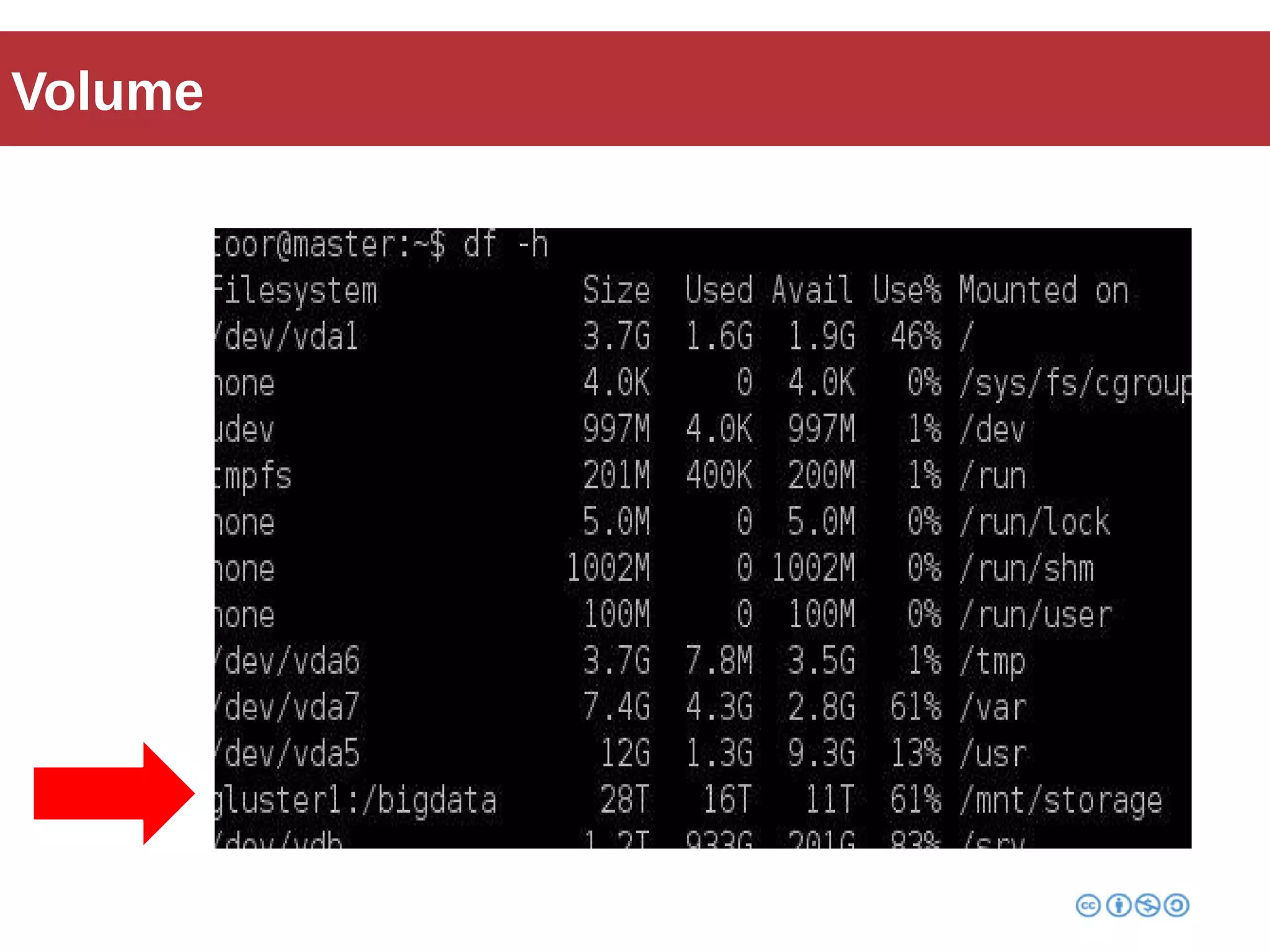

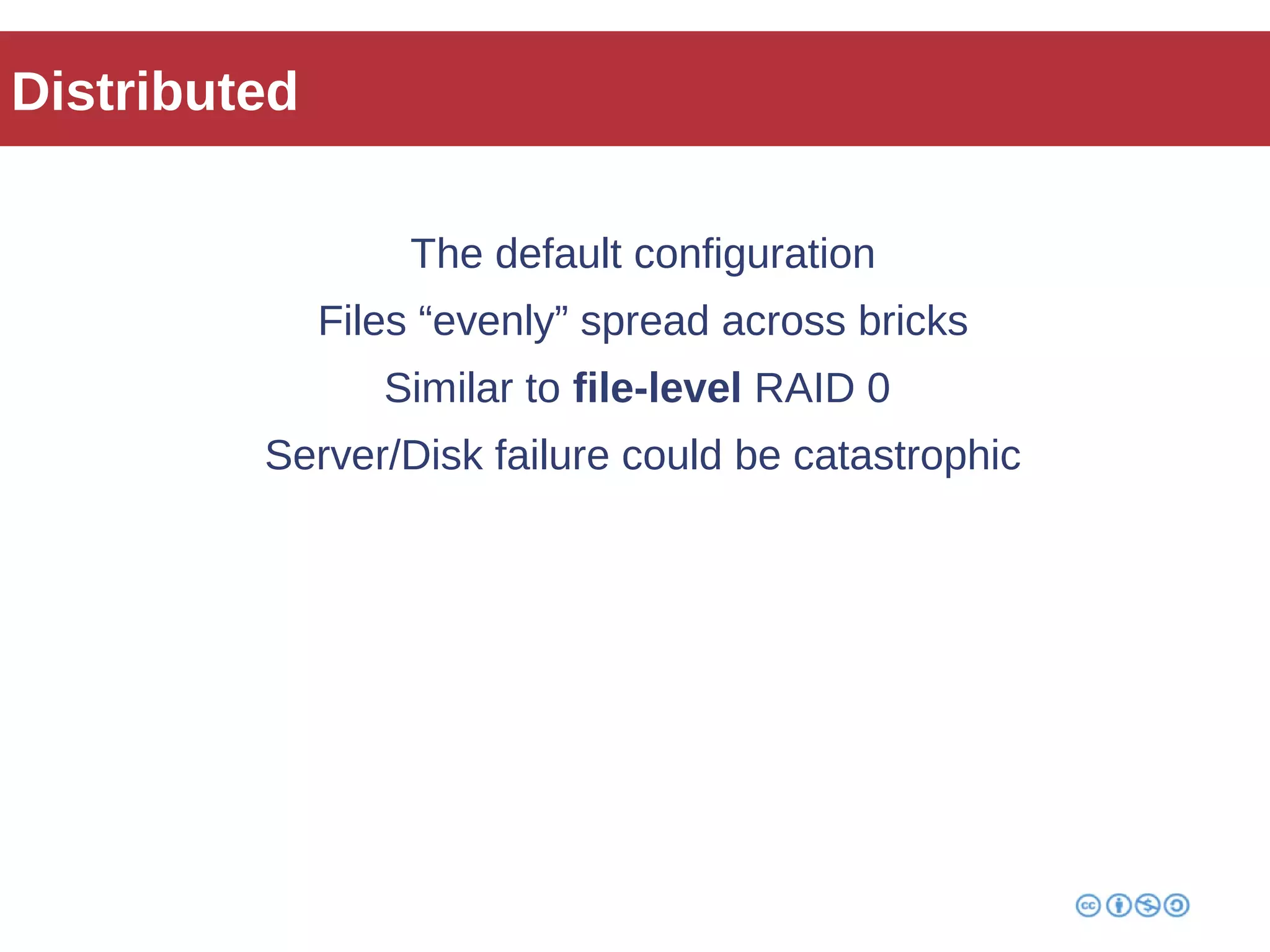

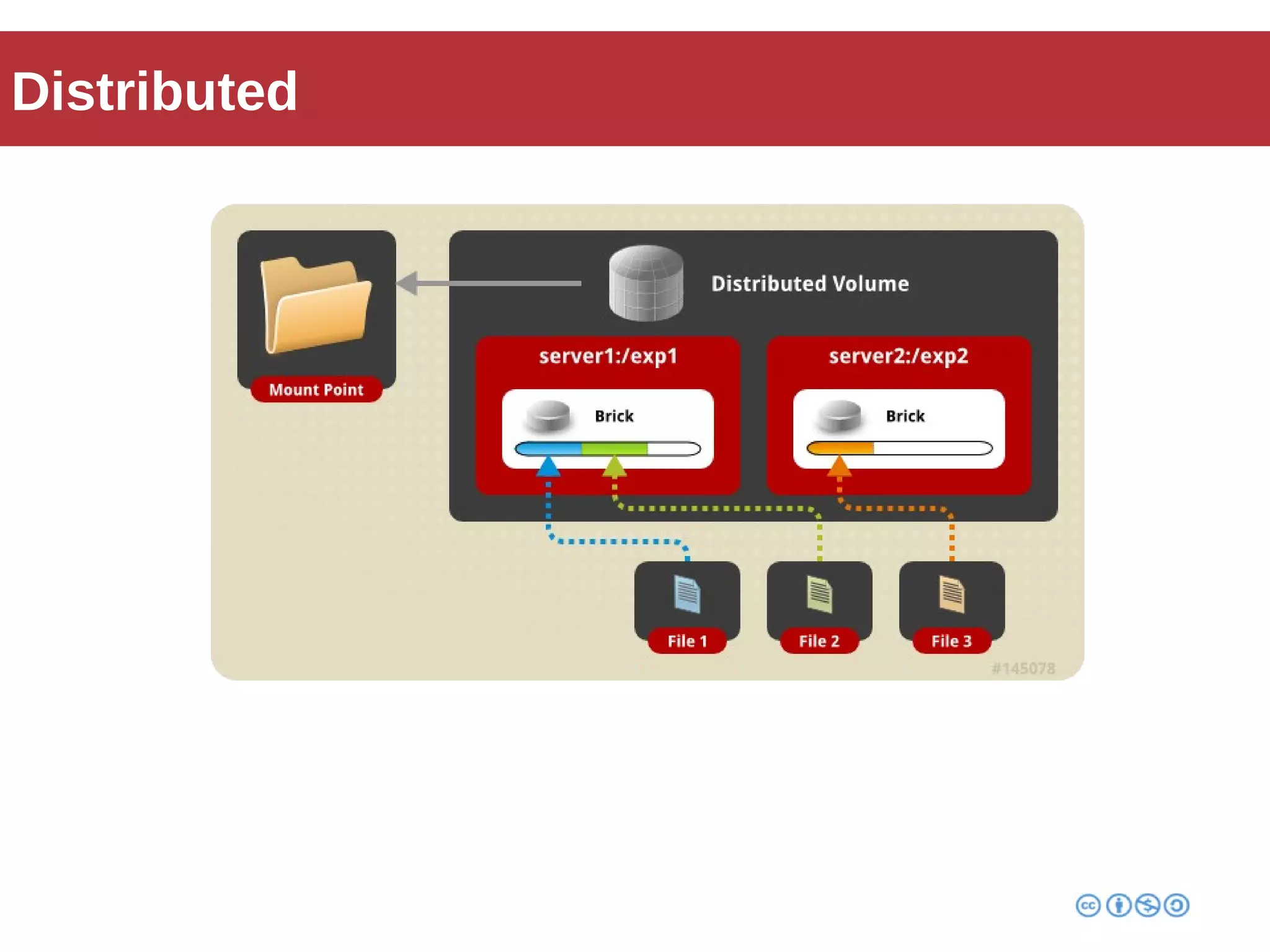

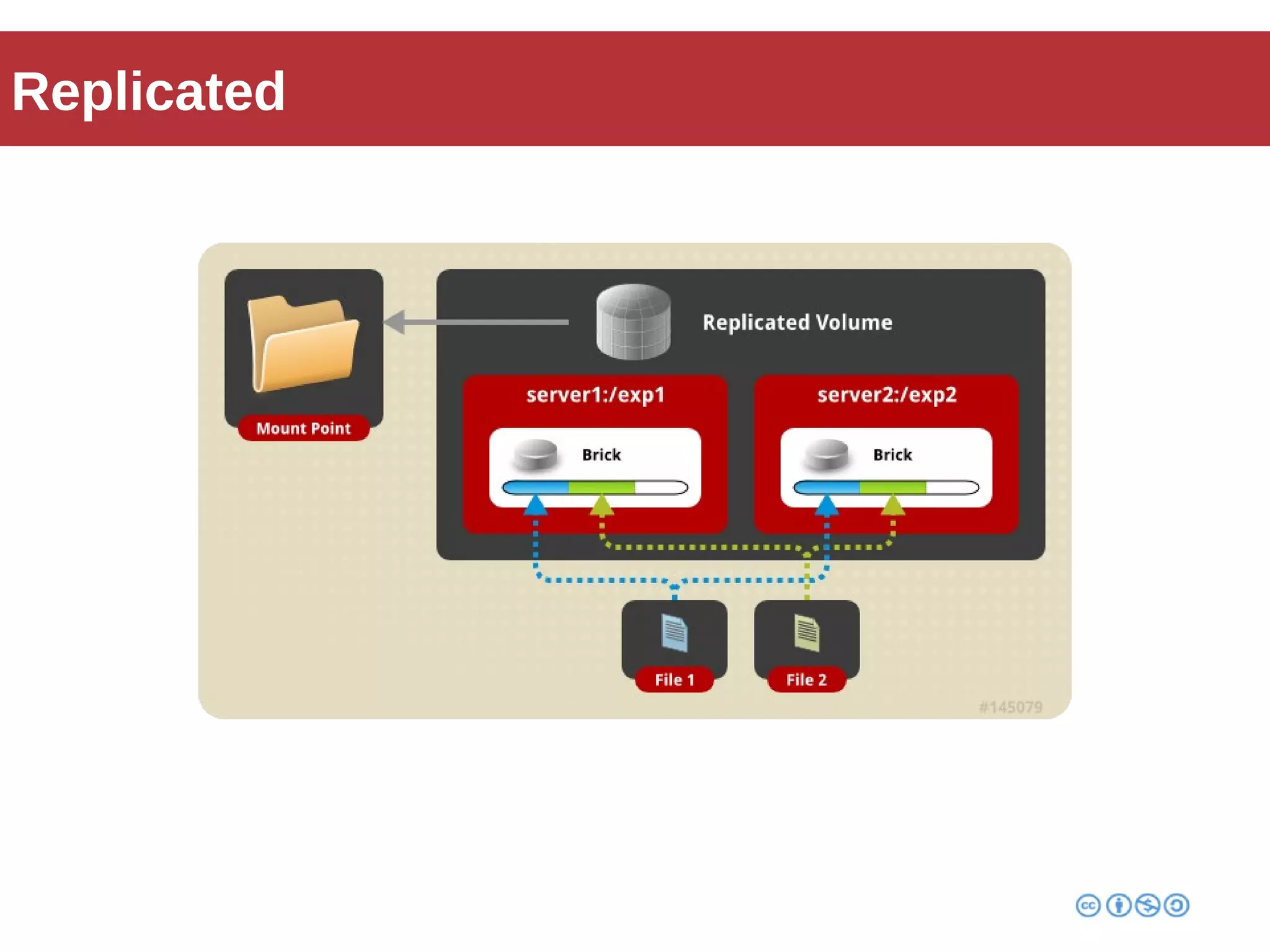

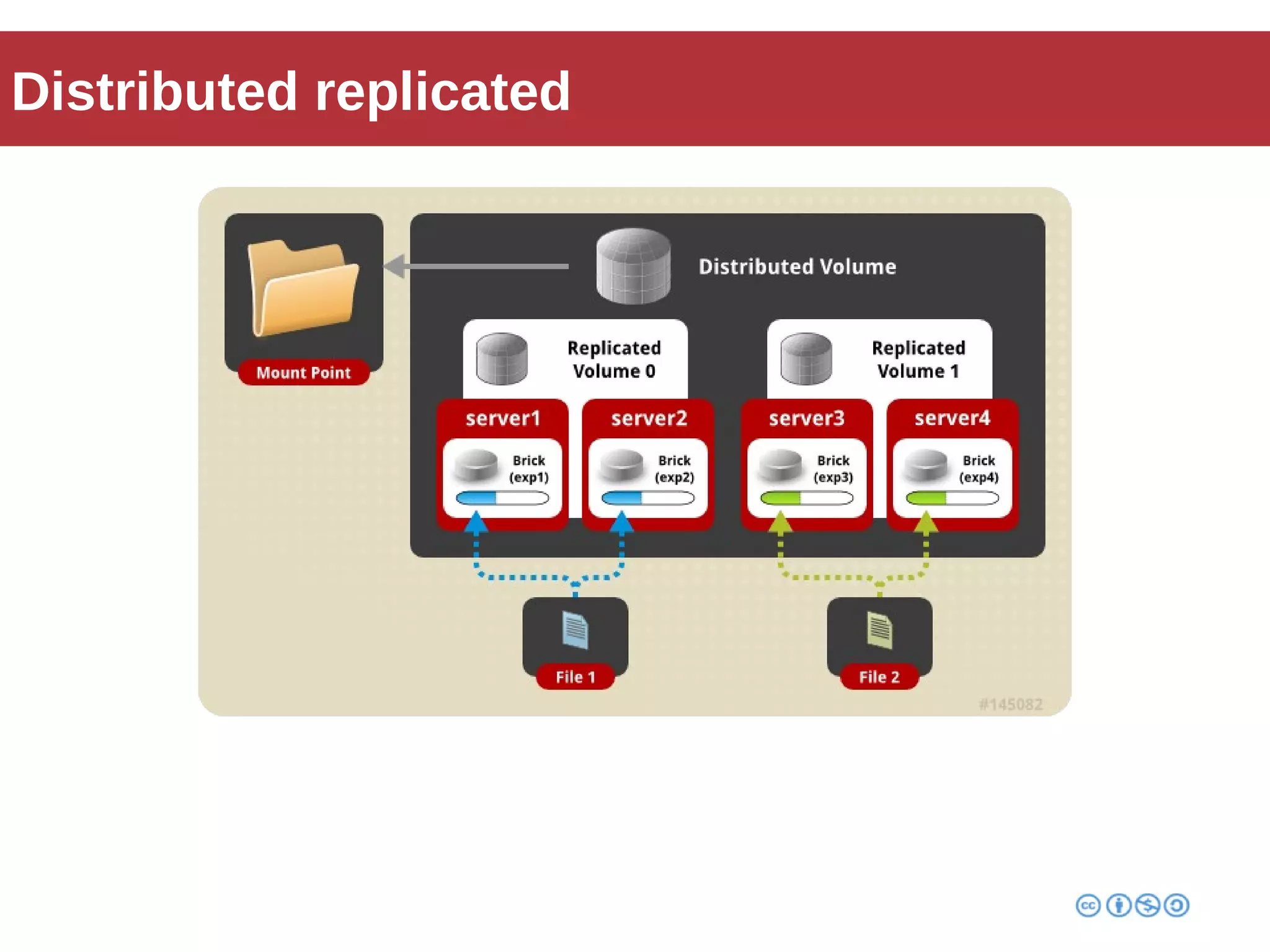

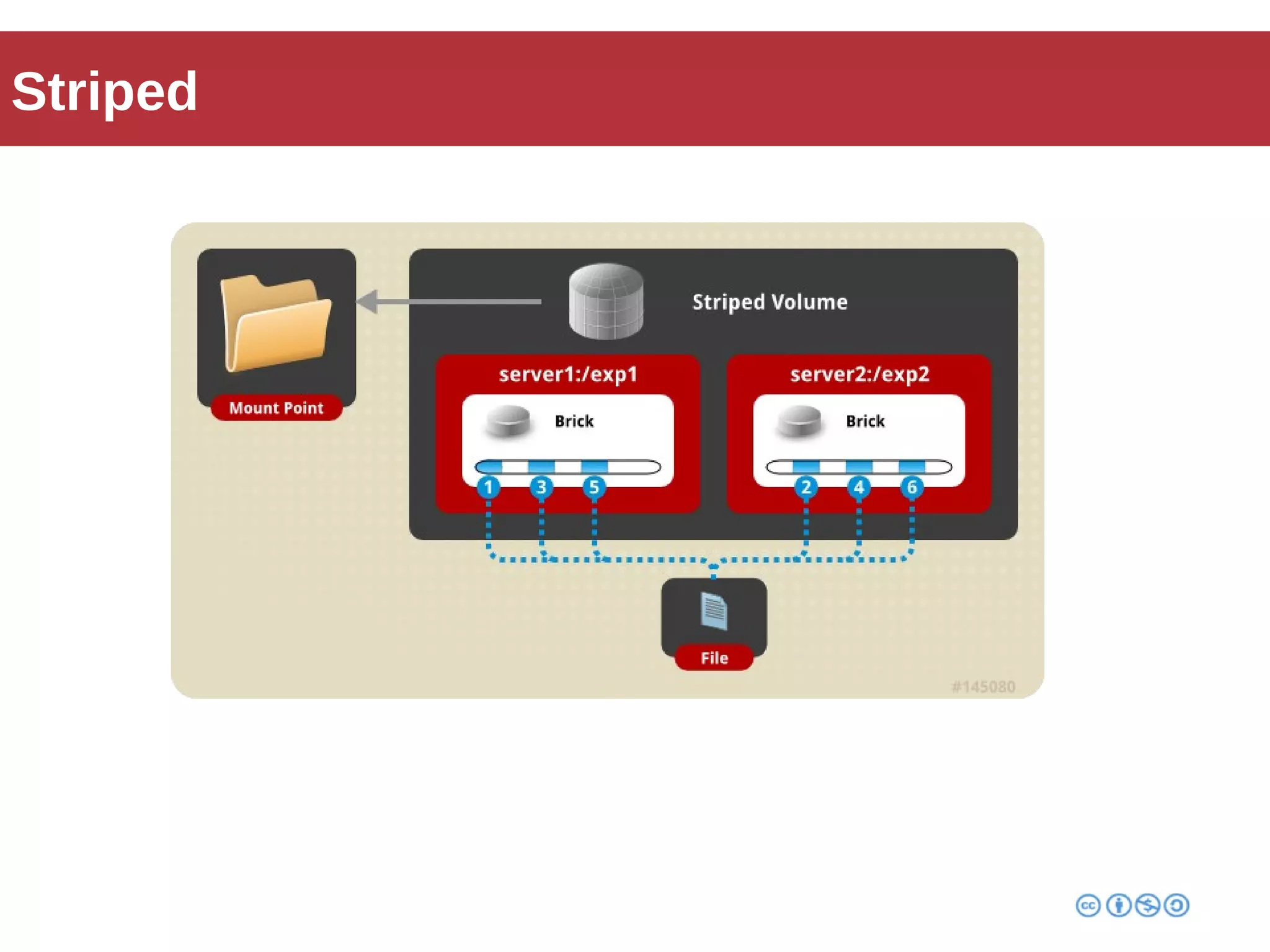

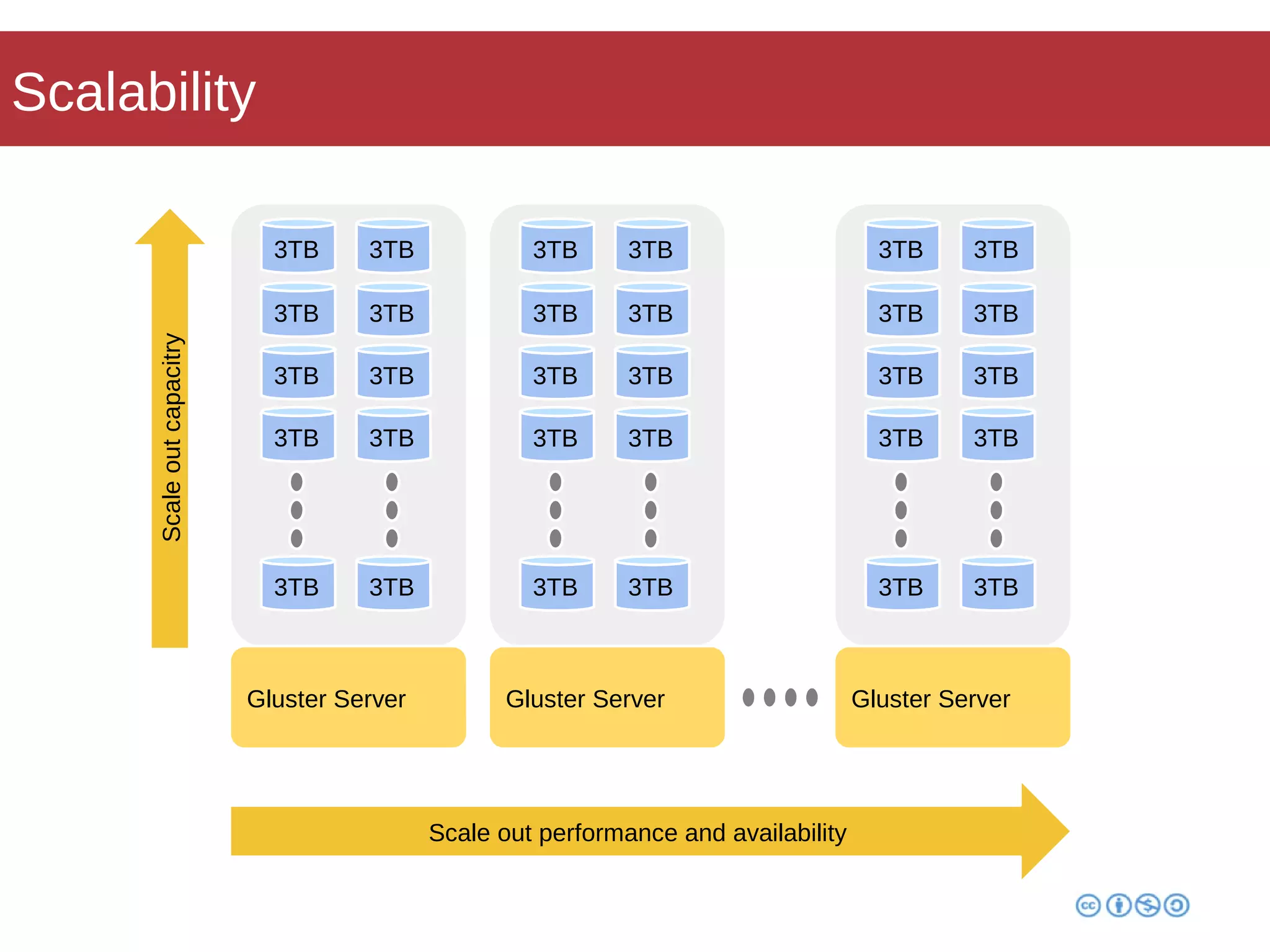

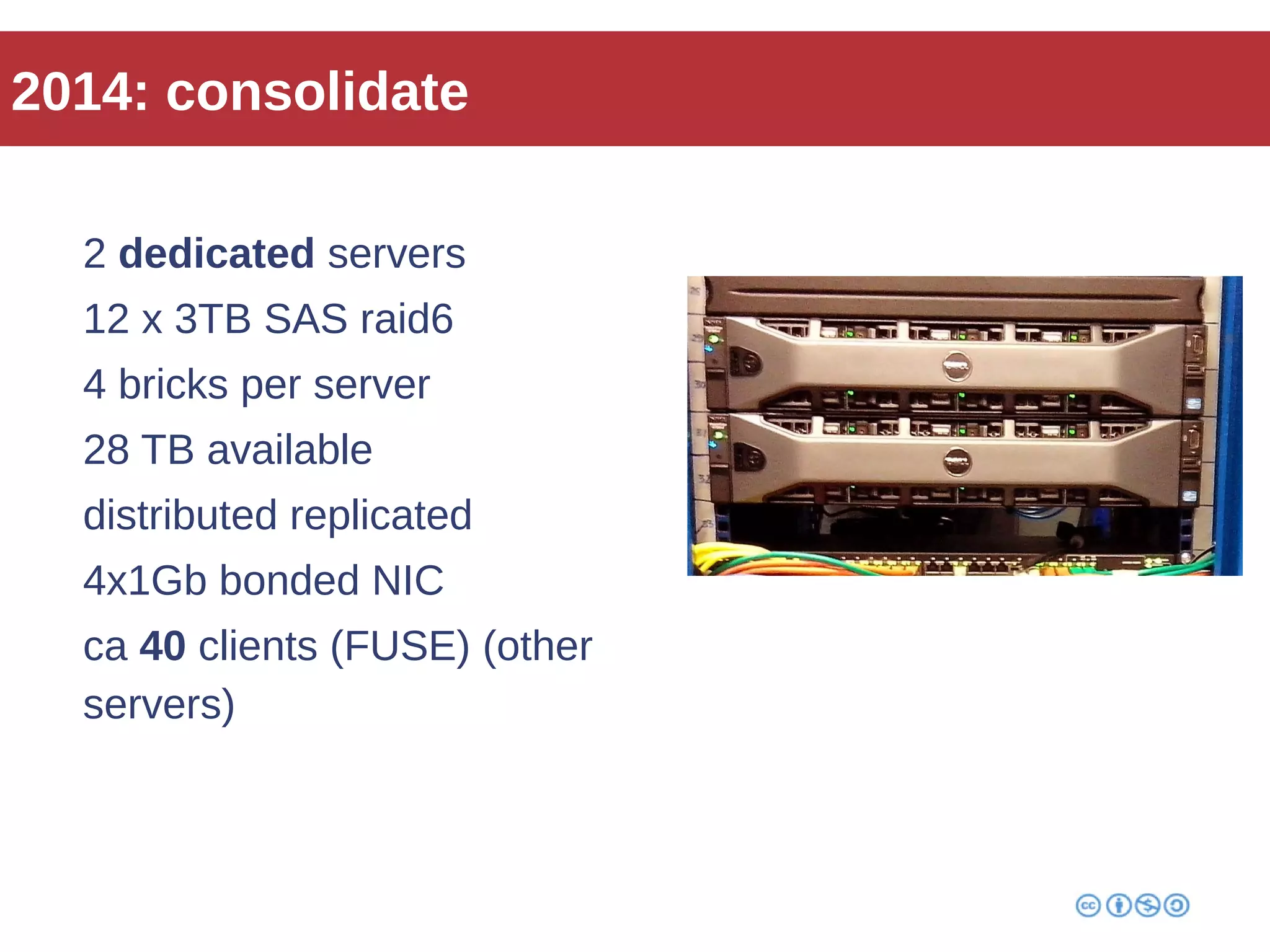

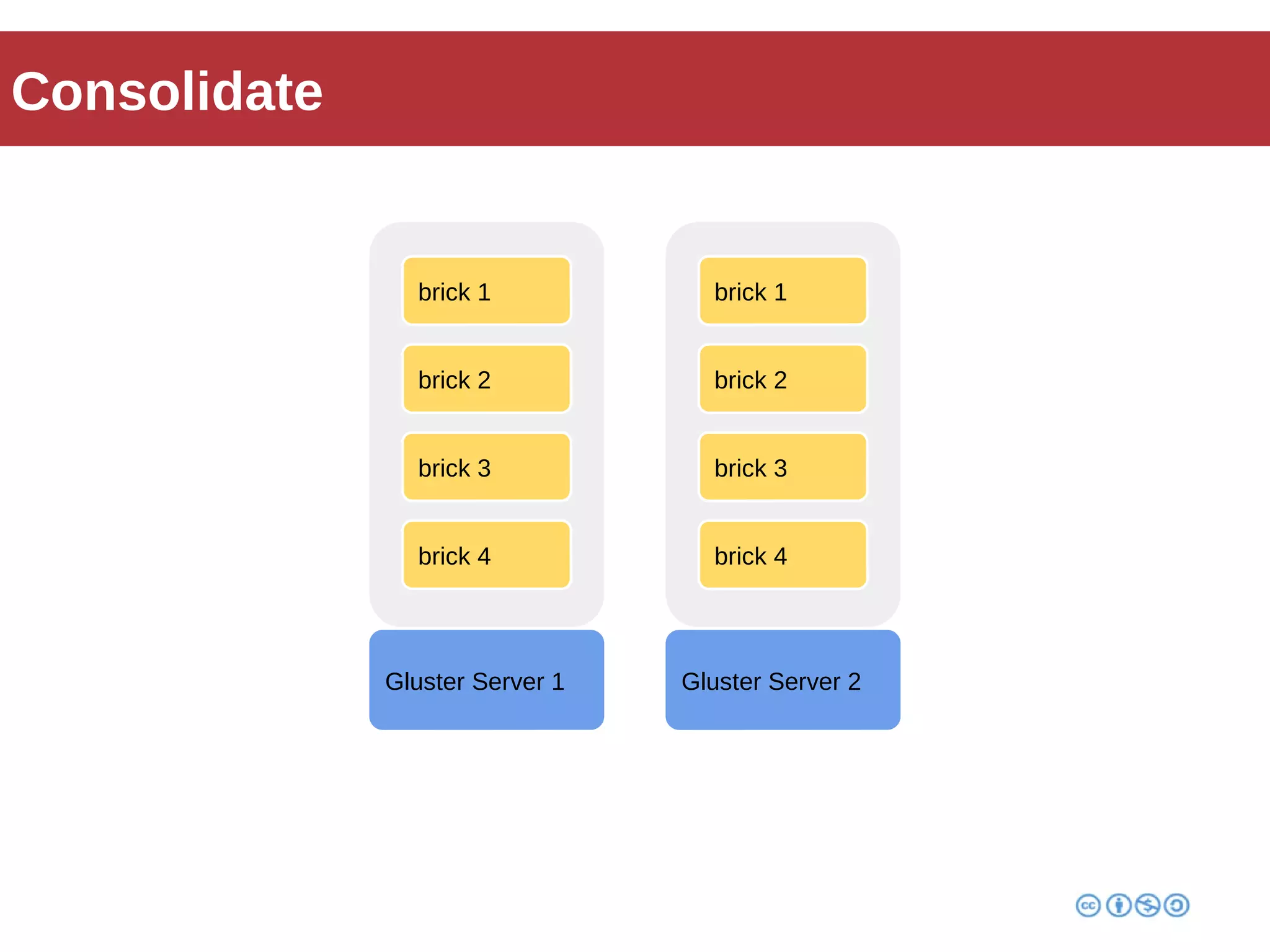

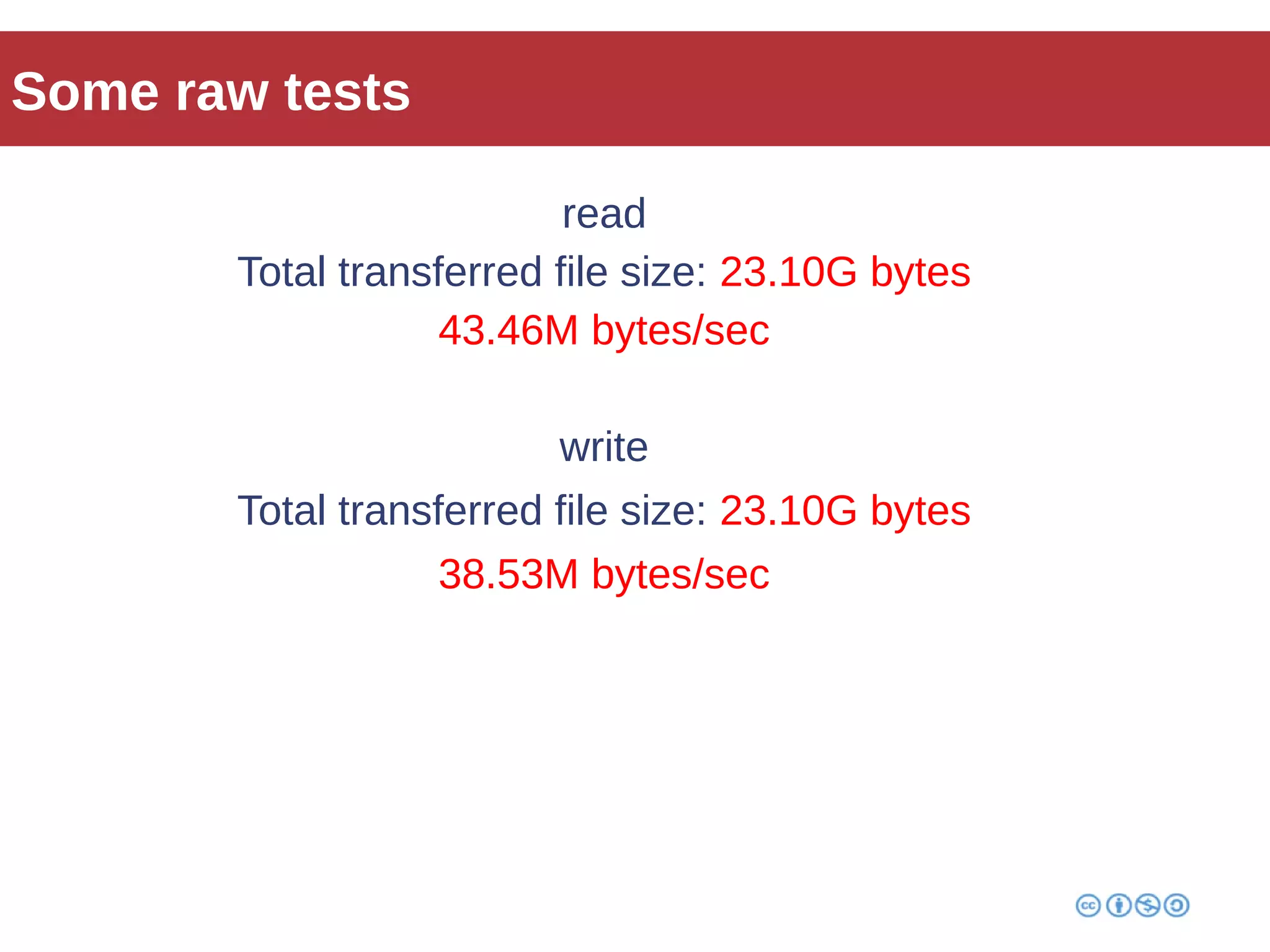

Roberto Franchini discusses GlusterFS, a scalable distributed file system that manages large amounts of data without a centralized metadata server and suits use cases such as backup, media distribution, and high-performance computing. He details its architecture, including peer nodes, volumes, and volume types such as distributed and replicated, and highlights its modular, fault-tolerant design. The presentation also covers how GlusterFS deployments evolved in production environments, showing how scalability and performance improved over time.
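
To make the difference between the distributed and replicated volume types concrete, the following is a minimal sketch, not GlusterFS code. It assumes a toy placement rule (an MD5 hash of the file name, taken modulo the number of bricks) as a stand-in for the hashing GlusterFS performs internally; the brick names and the functions `pick_brick`, `place_distributed`, and `place_replicated` are hypothetical and exist only for illustration. The point it shows is that a client can compute where a file lives from its name alone, so no central metadata lookup is needed.

```python
import hashlib


def pick_brick(filename, bricks):
    """Pick one brick for a file using only a hash of its name.

    Assumption: MD5 modulo the brick count is a simplified stand-in,
    not the algorithm GlusterFS actually uses.
    """
    digest = hashlib.md5(filename.encode("utf-8")).digest()
    index = int.from_bytes(digest[:4], "big") % len(bricks)
    return bricks[index]


def place_distributed(filename, bricks):
    """Distributed volume: each file is stored on exactly one brick."""
    return [pick_brick(filename, bricks)]


def place_replicated(filename, bricks):
    """Replicated volume: each file is stored on every brick in the set."""
    return list(bricks)


# Hypothetical bricks, one per peer node.
bricks = [
    "node1:/export/brick1",
    "node2:/export/brick1",
    "node3:/export/brick1",
]

for name in ["backup-2024.tar", "video.mp4", "results.csv"]:
    print(name, "distributed ->", place_distributed(name, bricks))
    print(name, "replicated  ->", place_replicated(name, bricks))
```

In this sketch a distributed volume adds capacity but loses the file if its single brick fails, while a replicated volume survives a brick failure at the cost of storing every file multiple times.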