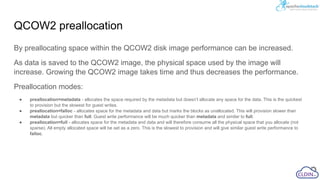

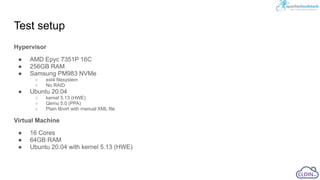

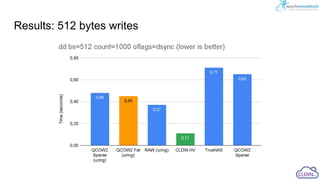

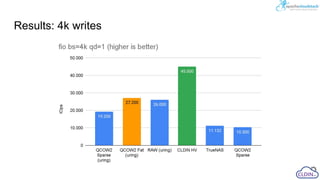

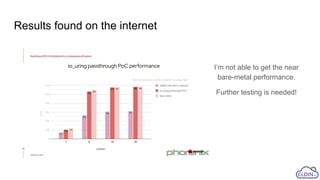

The document examines KVM storage performance with io_uring in a cloud infrastructure environment. Bare metal delivers the best disk I/O performance, while virtual machines add overhead that reduces disk I/O efficiency. The document covers the preallocation methods available for qcow2 disk images, describes the test setup, and notes that the remaining performance limitations need further investigation. It concludes that io_uring brings measurable improvements and suggests there is substantial room to improve storage performance in cloud deployments.
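The summary mentions qcow2 preallocation and io_uring but does not show how either is configured. The sketch below is illustrative only, not the document's actual test setup. It builds, without running, the `qemu-img` and QEMU `-drive` arguments involved. The helper names, file name, image size, and the choice of `cache=none` are assumptions made for this example.

```python
from typing import List

# Preallocation modes accepted by `qemu-img create -f qcow2 -o preallocation=...`:
#   off      - no preallocation (sparse image)
#   metadata - preallocate qcow2 metadata only; data stays sparse
#   falloc   - metadata plus posix_fallocate() for the data area
#   full     - metadata plus writing zeros to the whole data area
QCOW2_PREALLOC_MODES = ("off", "metadata", "falloc", "full")


def qemu_img_create_cmd(path: str, size: str, prealloc: str = "metadata") -> List[str]:
    """Build (but do not run) a qemu-img command that creates a qcow2 image.

    Hypothetical helper for illustration; pass the result to subprocess.run().
    """
    if prealloc not in QCOW2_PREALLOC_MODES:
        raise ValueError(f"unsupported qcow2 preallocation mode: {prealloc!r}")
    return ["qemu-img", "create", "-f", "qcow2",
            "-o", f"preallocation={prealloc}", path, size]


def qemu_drive_arg(path: str, aio: str = "io_uring") -> str:
    """Build a value for QEMU's -drive option using the given AIO backend.

    Hypothetical helper. cache=none opens the image with O_DIRECT, bypassing
    the host page cache, which is an assumed (common) benchmarking choice.
    """
    return f"file={path},format=qcow2,if=virtio,cache=none,aio={aio}"


if __name__ == "__main__":
    # Print one creation command per preallocation mode for comparison runs.
    for mode in QCOW2_PREALLOC_MODES:
        print(" ".join(qemu_img_create_cmd("vm-disk.qcow2", "20G", mode)))
    print("-drive", qemu_drive_arg("vm-disk.qcow2"))
```

Building argument lists instead of invoking the tools keeps the sketch side-effect free. To compare io_uring with the older backends, you could run the same image once with `aio="native"` and once with `aio="threads"`.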