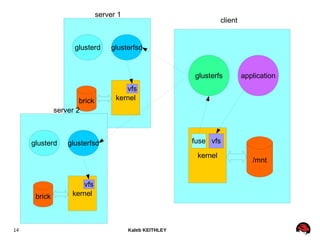

Gluster is a software-defined distributed storage system. It uses translators to implement storage semantics such as distribution and replication across server bricks. Clients can mount volumes using FUSE or access them via NFS or REST APIs. Translators are implemented as shared libraries; each one defines file operation (fop) methods and callbacks that handle storage operations across the cluster. Errors are handled by unwinding the call stack and returning failure codes to the client.
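The error path described above can be sketched with a toy stack of layers. This is a minimal illustration, not GlusterFS code: the `toy_` types, the layer names, and the use of plain function returns in place of asynchronous wind/unwind callbacks are all assumptions made for brevity.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical stand-in for a translator; not the real xlator_t. */
typedef struct toy_layer toy_layer_t;

/* Reply passed back up: op_ret < 0 signals failure, op_errno says why. */
typedef struct {
    int op_ret;
    int op_errno;
} toy_result_t;

struct toy_layer {
    const char  *name;
    toy_layer_t *child;      /* NULL for the bottom (storage) layer */
    int          fail_errno; /* nonzero: this layer fails with this errno */
    int          unwound;    /* set when the reply passes back through */
};

/* Pass a write down the stack, then hand the result back up unchanged. */
toy_result_t toy_write(toy_layer_t *layer, size_t len)
{
    toy_result_t res;

    if (layer->fail_errno != 0) {
        res.op_ret = -1;                    /* failure originates here */
        res.op_errno = layer->fail_errno;
    } else if (layer->child != NULL) {
        res = toy_write(layer->child, len); /* "wind" to the child */
    } else {
        res.op_ret = (int)len;              /* bottom layer succeeded */
        res.op_errno = 0;
    }
    layer->unwound = 1;                     /* "unwind" through this layer */
    return res;
}

/* Three-layer stack whose bottom layer reports ENOSPC.
 * Returns the errno seen at the top, or 0 if the write succeeded. */
int toy_unwind_demo(void)
{
    toy_layer_t storage   = { "storage",   NULL,       ENOSPC, 0 };
    toy_layer_t replicate = { "replicate", &storage,   0,      0 };
    toy_layer_t client    = { "client",    &replicate, 0,      0 };

    toy_result_t res = toy_write(&client, 4096);

    /* Every layer saw the reply on its way back up. */
    assert(client.unwound && replicate.unwound && storage.unwound);
    return (res.op_ret == -1) ? res.op_errno : 0;
}
```

Calling `toy_unwind_demo()` from a `main` shows the failure code from the lowest layer reaching the top of the stack intact.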

![Kaleb KEITHLEY, slide 25

Translator basics

● Translators are shared objects (shlibs)

● Methods

● int32_t init(xlator_t *this);

● void fini(xlator_t *this);

● Data

● struct xlator_fops fops = { ... };

● struct xlator_cbks cbks = { };

● struct volume_options options [] = { ... };

● Client, Server, Client/Server

● Threads: write MT-SAFE

● Portability: GlusterFS != Linux only

● License: GPLv2 or LGPLv3+](https://image.slidesharecdn.com/xg1wlbgyspeshbynfktb-signature-1e22e3c02f143bdbaade1033e41083e3186e10cee46774b782105433d60d093c-poli-160517072541/85/Gluster-technical-overview-25-320.jpg)
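The shape listed on the slide (an `init`/`fini` pair plus a table of fop function pointers) can be sketched as follows. The `toy_` types are simplified stand-ins and not the real `xlator_t` or `struct xlator_fops`; the byte counter is a hypothetical piece of per-instance state, and the sketch makes no attempt at the thread safety the slide asks for.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Simplified stand-ins, NOT the real GlusterFS definitions. */
typedef struct toy_xlator toy_xlator_t;

struct toy_fops {
    /* One file operation; a real fop table has many more entries. */
    int32_t (*writev)(toy_xlator_t *this, const char *buf, size_t len);
};

struct toy_xlator {
    const char      *name;
    void            *private; /* per-instance state, set by init */
    struct toy_fops *fops;
};

/* Hypothetical private data: counts bytes seen (not thread-safe). */
typedef struct {
    uint64_t bytes_written;
} toy_priv_t;

static int32_t toy_writev(toy_xlator_t *this, const char *buf, size_t len)
{
    toy_priv_t *priv = this->private;

    (void)buf; /* the payload itself is ignored in this sketch */
    priv->bytes_written += len;
    return (int32_t)len;
}

/* Data: the method table, mirroring the slide's "fops" object. */
struct toy_fops toy_fops_table = {
    .writev = toy_writev,
};

/* Method: allocate private state and attach the fop table. */
int32_t toy_init(toy_xlator_t *this)
{
    toy_priv_t *priv = calloc(1, sizeof(*priv));

    if (priv == NULL)
        return -1;
    this->private = priv;
    this->fops = &toy_fops_table;
    return 0;
}

/* Method: release whatever init allocated. */
void toy_fini(toy_xlator_t *this)
{
    free(this->private);
    this->private = NULL;
}

/* Runs init, two writes, and fini; returns the bytes counted. */
uint64_t toy_demo(void)
{
    toy_xlator_t xl = { .name = "toy" };
    uint64_t total;

    if (toy_init(&xl) != 0)
        return 0;
    assert(xl.fops != NULL);
    xl.fops->writev(&xl, "hello", 5);
    xl.fops->writev(&xl, "world!", 6);
    total = ((toy_priv_t *)xl.private)->bytes_written;
    toy_fini(&xl);
    return total;
}
```

Keeping state behind the `private` pointer rather than in globals is what lets one shared object back several translator instances in the same process.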

![Kaleb KEITHLEY, slide 33

Calling multiple children (fan out)

afr_writev_wind (...)

{

...

for (i = 0; i < priv->child_count; i++) {

if (local->transaction.pre_op[i]) {

STACK_WIND_COOKIE (frame, afr_writev_wind_cbk,

(void *) (long) i,

priv->children[i],

priv->children[i]->fops->writev,

local->fd, ...);

}

}

return 0;

}](https://image.slidesharecdn.com/xg1wlbgyspeshbynfktb-signature-1e22e3c02f143bdbaade1033e41083e3186e10cee46774b782105433d60d093c-poli-160517072541/85/Gluster-technical-overview-33-320.jpg)
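The fan-out pattern on this slide can be imitated in a self-contained sketch: the parent sends one write to each eligible child and passes the child index as a cookie, so a single callback can tell the replies apart. Everything prefixed `toy_` is hypothetical and merely stands in for the real frame, child, and `STACK_WIND_COOKIE` machinery; the children here reply synchronously, which is a simplification.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_MAX_CHILDREN 8

typedef struct toy_child toy_child_t;
typedef struct toy_frame toy_frame_t;

/* Callback signature: the cookie identifies which child answered. */
typedef void (*toy_cbk_t)(toy_frame_t *frame, void *cookie, int32_t op_ret);

struct toy_child {
    int healthy; /* 0 makes this child's writev fail */
    void (*writev)(toy_child_t *self, toy_frame_t *frame,
                   toy_cbk_t cbk, void *cookie, size_t len);
};

struct toy_frame {
    int call_count;               /* replies still outstanding */
    int success_count;            /* children that wrote successfully */
    int failed[TOY_MAX_CHILDREN]; /* per-child failure flags */
    int done;                     /* set once every reply has arrived */
};

/* Toy child: replies immediately through the callback. */
static void toy_child_writev(toy_child_t *self, toy_frame_t *frame,
                             toy_cbk_t cbk, void *cookie, size_t len)
{
    cbk(frame, cookie, self->healthy ? (int32_t)len : -1);
}

/* One callback serves all children; the cookie carries the index. */
static void toy_writev_cbk(toy_frame_t *frame, void *cookie, int32_t op_ret)
{
    int i = (int)(long)cookie;

    if (op_ret < 0)
        frame->failed[i] = 1;
    else
        frame->success_count++;
    if (--frame->call_count == 0)
        frame->done = 1; /* last reply: the parent could now unwind */
}

/* Fan out a write to every child whose pre_op flag is set. */
int toy_writev_wind(toy_frame_t *frame, toy_child_t *children,
                    const int *pre_op, int child_count, size_t len)
{
    int i;

    assert(child_count <= TOY_MAX_CHILDREN);
    /* Count expected replies first, so an early reply cannot hit zero. */
    frame->call_count = 0;
    for (i = 0; i < child_count; i++)
        if (pre_op[i])
            frame->call_count++;

    for (i = 0; i < child_count; i++)
        if (pre_op[i])
            children[i].writev(&children[i], frame, toy_writev_cbk,
                               (void *)(long)i, len);
    return 0;
}

/* Three children, the middle one failing; returns the success count. */
int toy_fanout_demo(void)
{
    toy_child_t children[3] = {
        { 1, toy_child_writev },
        { 0, toy_child_writev },
        { 1, toy_child_writev },
    };
    const int pre_op[3] = { 1, 1, 1 };
    toy_frame_t frame = { 0 };

    toy_writev_wind(&frame, children, pre_op, 3, 512);
    if (!frame.done || !frame.failed[1])
        return -1;
    return frame.success_count;
}
```

Passing the index as the cookie, as the slide does with `(void *) (long) i`, avoids allocating a per-call context just to remember which child a reply came from.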