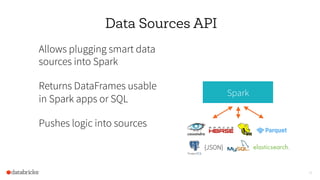

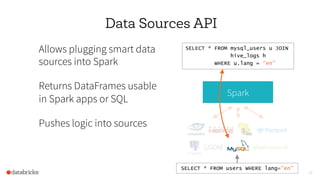

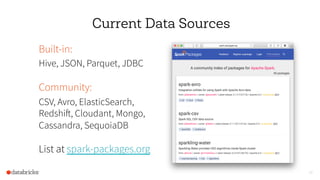

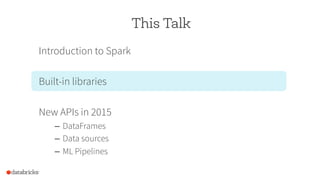

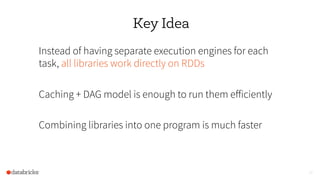

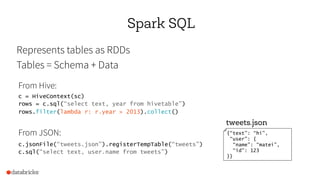

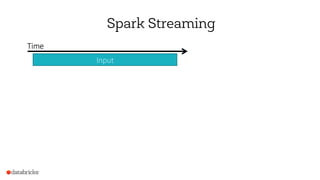

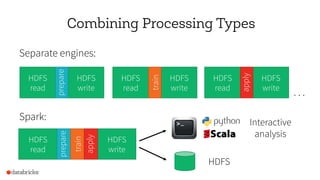

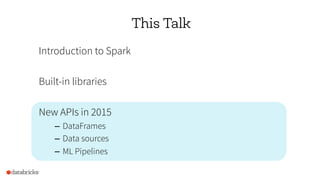

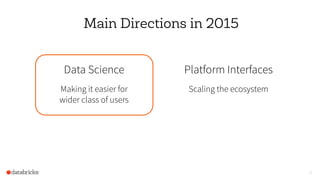

Apache Spark is a fast and general-purpose cluster computing system for large-scale data processing. It improves on MapReduce by allowing data to be kept in memory across jobs, enabling faster iterative jobs. Spark consists of a core engine along with libraries for SQL, streaming, machine learning, and graph processing. The document discusses new APIs in Spark including DataFrames, which provide a tabular interface like in R/Python, and data sources, which allow plugging external data systems into Spark. These changes aim to make Spark easier for data scientists to use at scale.

![Example: Log Mining

Load error messages from a log into memory, then interactively

search for various patterns

lines = spark.textFile(“hdfs://...”)

errors = lines.filter(lambda s: s.startswith(“ERROR”))

messages = errors.map(lambda s: s.split(‘t’)[2])

messages.cache()

Block 1

Block 2

Block 3

Worker

Worker

Worker

Driver

messages.filter(lambda s: “foo” in s).count()

messages.filter(lambda s: “bar” in s).count()

. . .

tasks

results

Cache 1

Cache 2

Cache 3

Base RDDTransformed RDD

Action

Full-text search of Wikipedia in <1 sec

(vs 20 sec for on-disk data)](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-11-320.jpg)

![Fault Tolerance

RDDs track the transformations used to build them (their lineage)

to recompute lost data

messages = textFile(...).filter(lambda s: “ERROR” in s)

.map(lambda s: s.split(“t”)[2])

HadoopRDD

path = hdfs://…

FilteredRDD

func = lambda s: …

MappedRDD

func = lambda s: …](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-12-320.jpg)

![Vectors, Matrices = RDD[Vector]

Iterative computation

MLlib

points = sc.textFile(“data.txt”).map(parsePoint)

model = KMeans.train(points, 10)

model.predict(newPoint)](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-24-320.jpg)

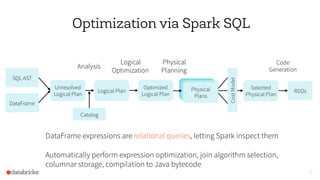

![36

Spark DataFrames

Collections of structured

data similar to R, pandas

Automatically optimized

via Spark SQL

– Columnar storage

– Code-gen. execution

df = jsonFile(“tweets.json”)

df[df[“user”] == “matei”]

.groupBy(“date”)

.sum(“retweets”)

0

5

10

Python Scala DataFrame

RunningTime](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-36-320.jpg)

![38

Machine Learning Pipelines

tokenizer = Tokenizer()

tf = HashingTF(numFeatures=1000)

lr = LogisticRegression()

pipe = Pipeline([tokenizer, tf, lr])

model = pipe.fit(df)

tokenizer TF LR

modelDataFrame

High-level API similar to

SciKit-Learn

Operates on DataFrames

Grid search to tune params

across a whole pipeline](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-38-320.jpg)

![39

Spark R Interface

Exposes DataFrames and

ML pipelines in R

Parallelize calls to R code

df = jsonFile(“tweets.json”)

summarize(

group_by(

df[df$user == “matei”,],

“date”),

sum(“retweets”))

Target: Spark 1.4 (June)](https://image.slidesharecdn.com/bdtc2-150428121916-conversion-gate02/85/Simplifying-Big-Data-Analytics-with-Apache-Spark-39-320.jpg)