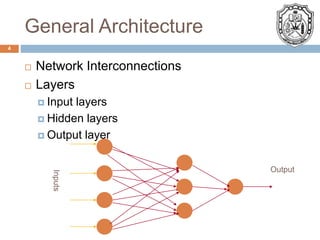

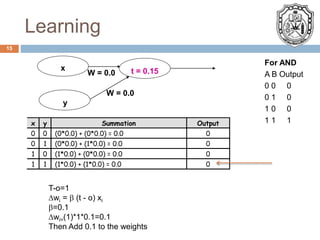

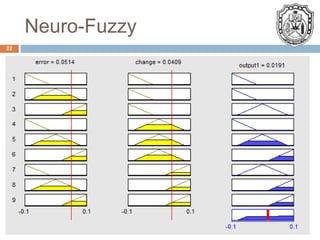

This presentation provides an overview of artificial neural networks. It covers their general architecture, including layers and activation functions, as well as learning methods such as supervised learning. The applications mentioned include control systems, engine control units, and neuro-fuzzy hybrid systems. The main strength of neural networks is their ability to solve complex problems by learning from large amounts of input data and adapting to it. The shortfalls discussed include the time required for learning and for processing.
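The presentation does not give a concrete network, so the following is only a minimal sketch under stated assumptions. It shows how layers, an activation function, and supervised learning fit together. It assumes a small fully connected network with one hidden layer and sigmoid activations, trained by backpropagation on squared error. The names `TinyNetwork`, `train_step`, `total_loss`, and `DATA` are hypothetical, and the logical AND task is an illustrative choice. The thousands of passes over even this four-example dataset hint at why training time is listed as a shortfall.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic activation function: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Hypothetical fully connected net: inputs -> one hidden layer -> one output neuron."""

    def __init__(self, n_inputs: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)  # fixed seed so runs are reproducible
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, x):
        """Propagate one input vector through both layers; return hidden activations and output."""
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
             for ws, b in zip(self.w_hidden, self.b_hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w_out, h)) + self.b_out)
        return h, y

    def train_step(self, x, target: float, lr: float) -> float:
        """One supervised update via backpropagation on the error 0.5 * (y - target)^2."""
        h, y = self.forward(x)
        delta_out = (y - target) * y * (1.0 - y)  # error signal at the output neuron
        # Hidden error signals use the output weights *before* they are updated.
        delta_hidden = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(self.w_out, h)]
        for j, hj in enumerate(h):
            self.w_out[j] -= lr * delta_out * hj
        self.b_out -= lr * delta_out
        for j, dj in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w_hidden[j][i] -= lr * dj * xi
            self.b_hidden[j] -= lr * dj
        return 0.5 * (y - target) ** 2


# Labelled examples (supervised learning): the logical AND function.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def total_loss(net: TinyNetwork) -> float:
    """Sum of squared errors over the whole training set."""
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in DATA)


if __name__ == "__main__":
    net = TinyNetwork(n_inputs=2, n_hidden=3)
    before = total_loss(net)
    for epoch in range(5000):  # many passes over the data: learning is the slow part
        for x, t in DATA:
            net.train_step(x, t, lr=0.5)
    print(f"loss before: {before:.4f}, after: {total_loss(net):.4f}")
    for x, t in DATA:
        print(x, "->", round(net.forward(x)[1], 3), "target", t)
```

Once trained, a prediction is a single cheap `forward` call, while learning required 20,000 weight updates. Real networks and datasets are far larger, which is where the training and processing costs become significant.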