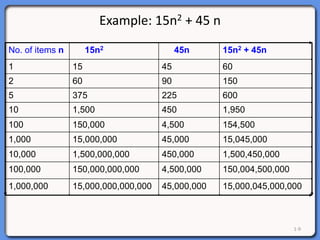

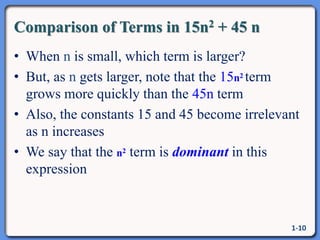

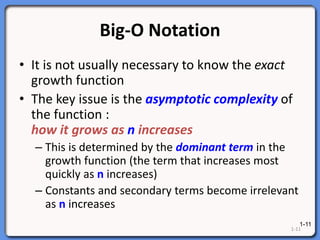

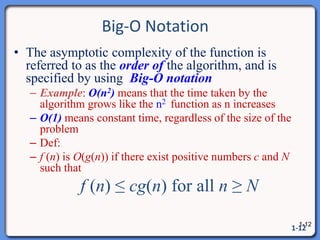

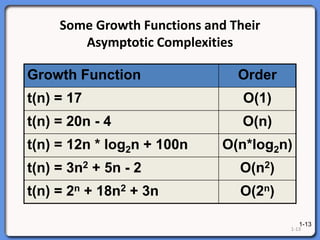

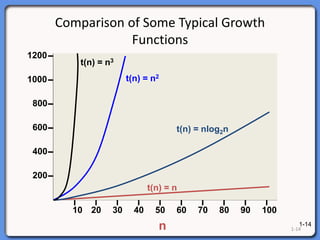

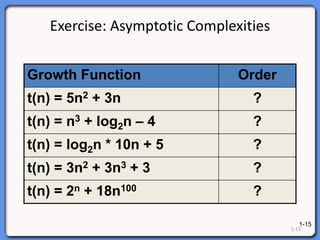

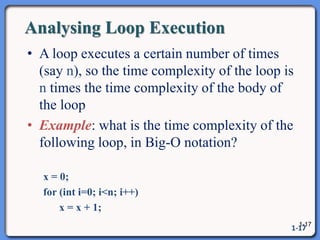

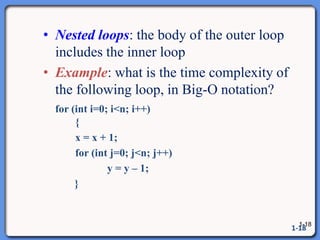

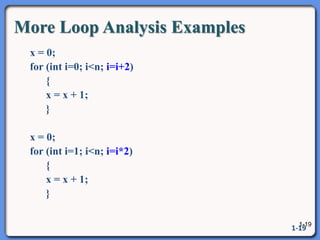

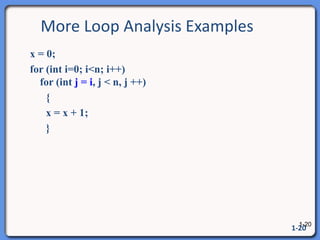

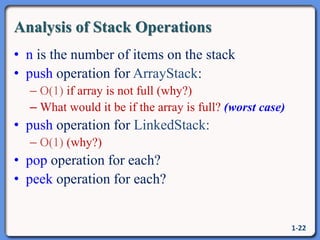

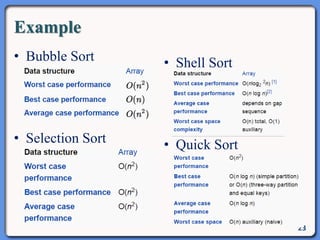

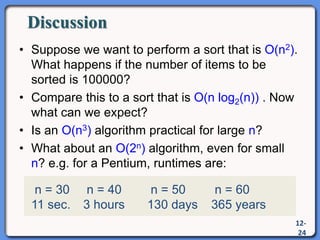

The document outlines a syllabus on data structures and algorithms, focusing on the analysis of algorithms and, in particular, their time complexity. It discusses worst-case, average-case, and best-case behavior, and uses growth functions and big-O notation to classify algorithm efficiency. It also covers how to analyze the number of times a loop executes and compares the time complexities of several algorithms, including sorting methods.
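As a rough illustration of the loop analysis mentioned above, the sketch below counts how many times a loop body runs for three common loop shapes. The function names are hypothetical and do not come from the slides; the counts are meant only to show how a growth function such as n, n², or log₂ n arises from the structure of a loop.

```python
def count_single_loop(n):
    """One loop over n items: the body runs n times, so growth is O(n)."""
    count = 0
    for _ in range(n):
        count += 1
    return count


def count_nested_loop(n):
    """Two nested loops over n items: the body runs n * n times, so O(n^2)."""
    count = 0
    for _ in range(n):
        for _ in range(n):
            count += 1
    return count


def count_halving_loop(n):
    """Loop that halves n each pass: the body runs about log2(n) times, so O(log n)."""
    count = 0
    while n > 1:
        n //= 2
        count += 1
    return count


if __name__ == "__main__":
    # Print the three counts side by side for a few input sizes.
    for size in (8, 64, 512):
        print(size, count_single_loop(size), count_nested_loop(size), count_halving_loop(size))
```

Doubling the input size doubles the first count, quadruples the second, and adds only one to the third, which is the kind of comparison big-O notation is used to summarize.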