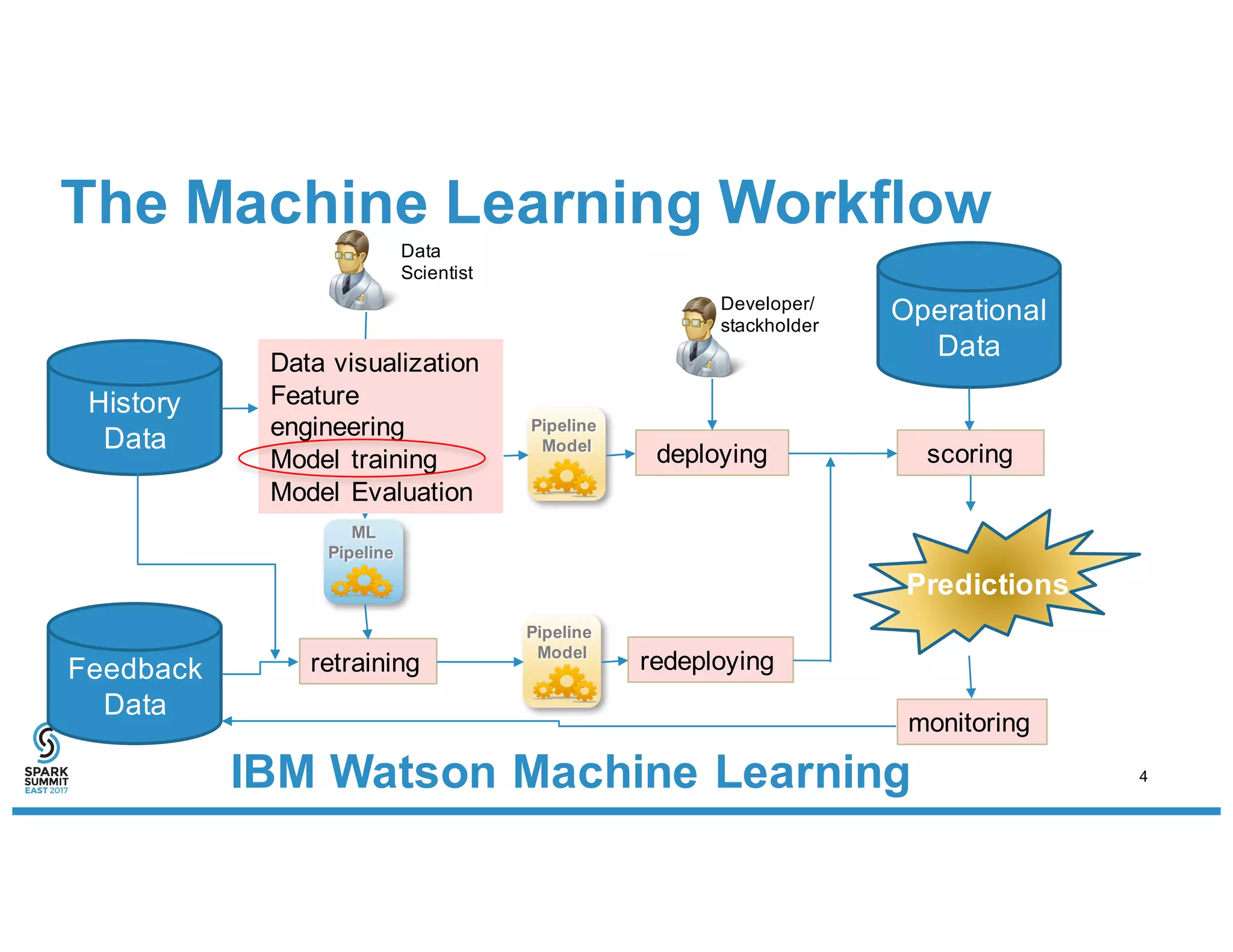

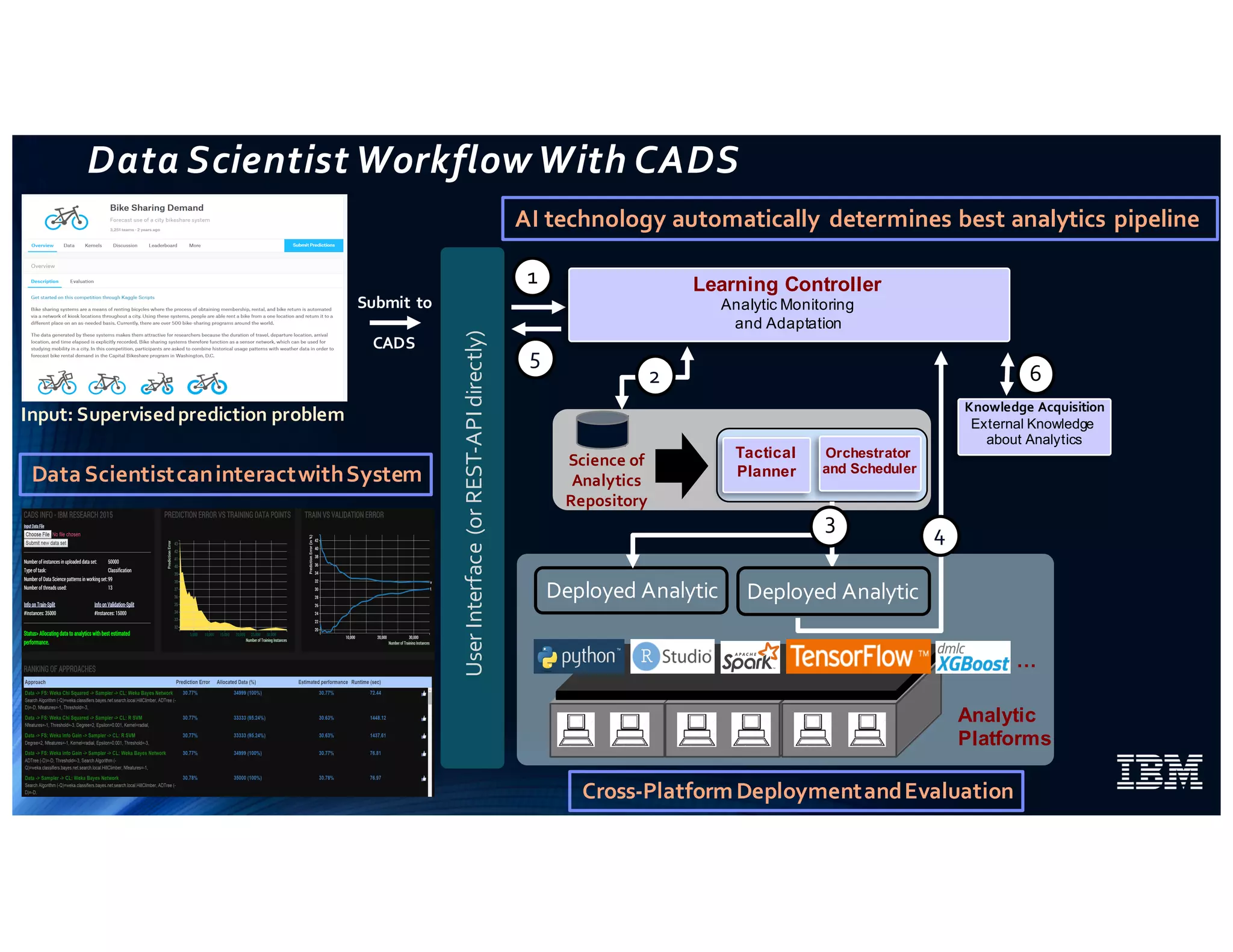

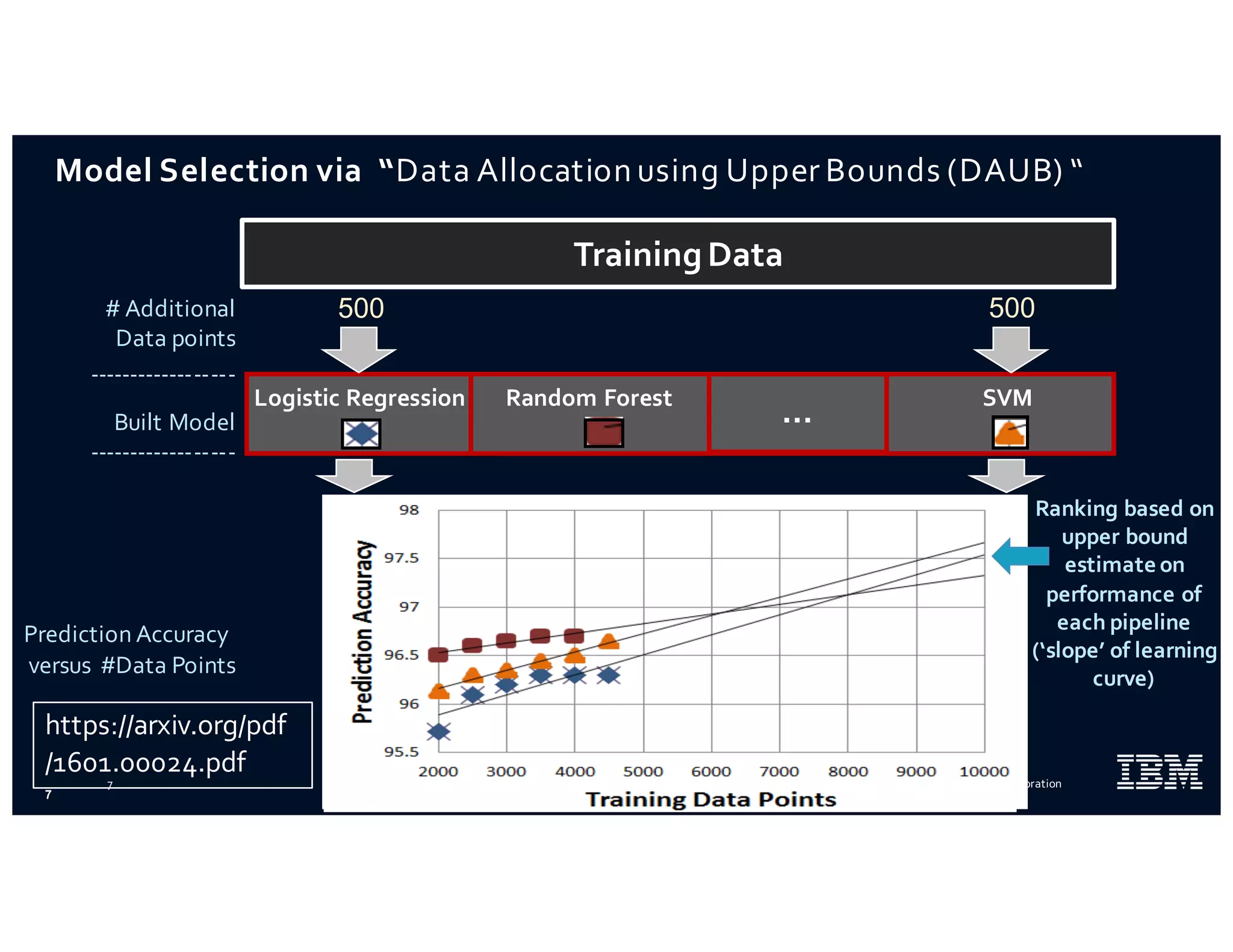

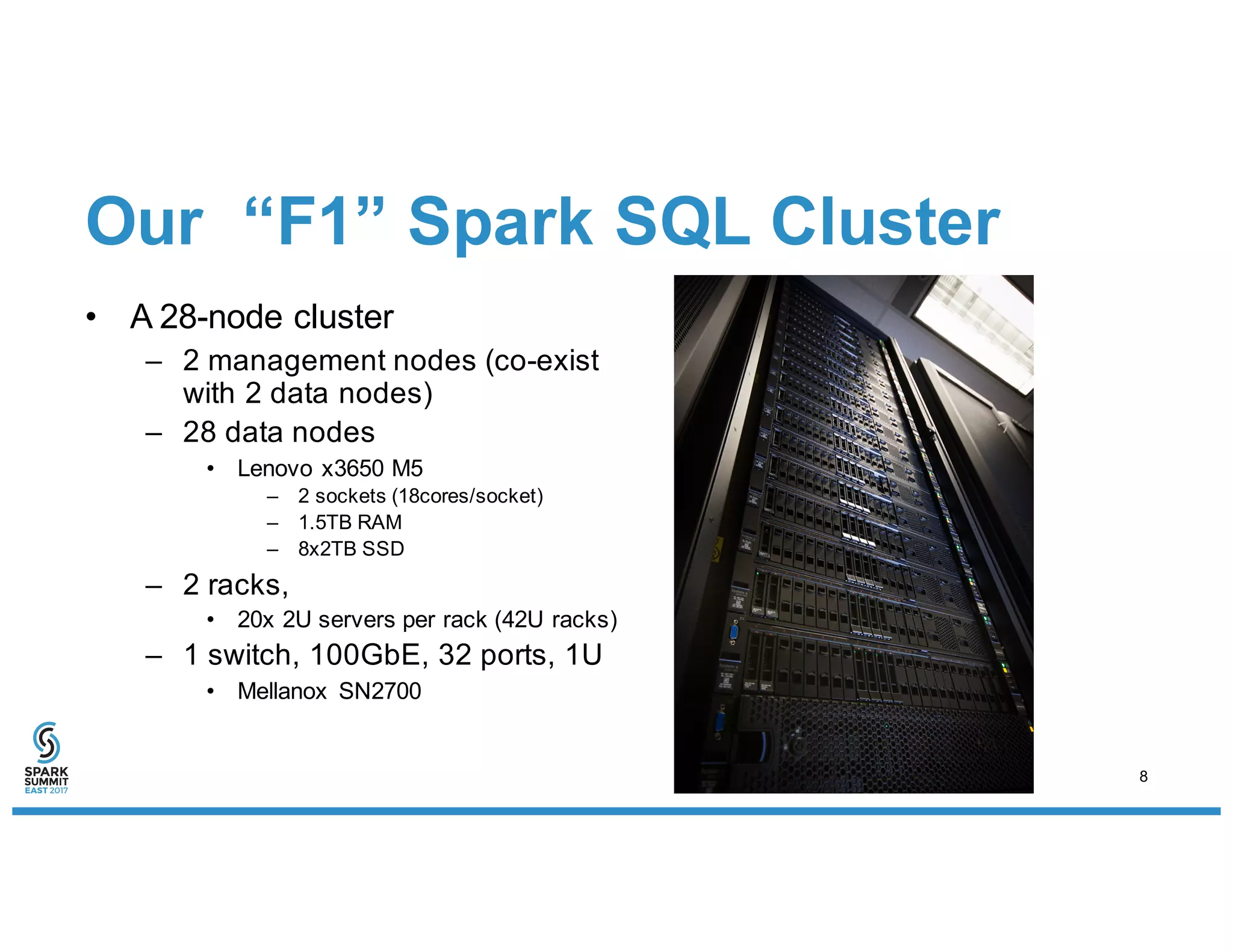

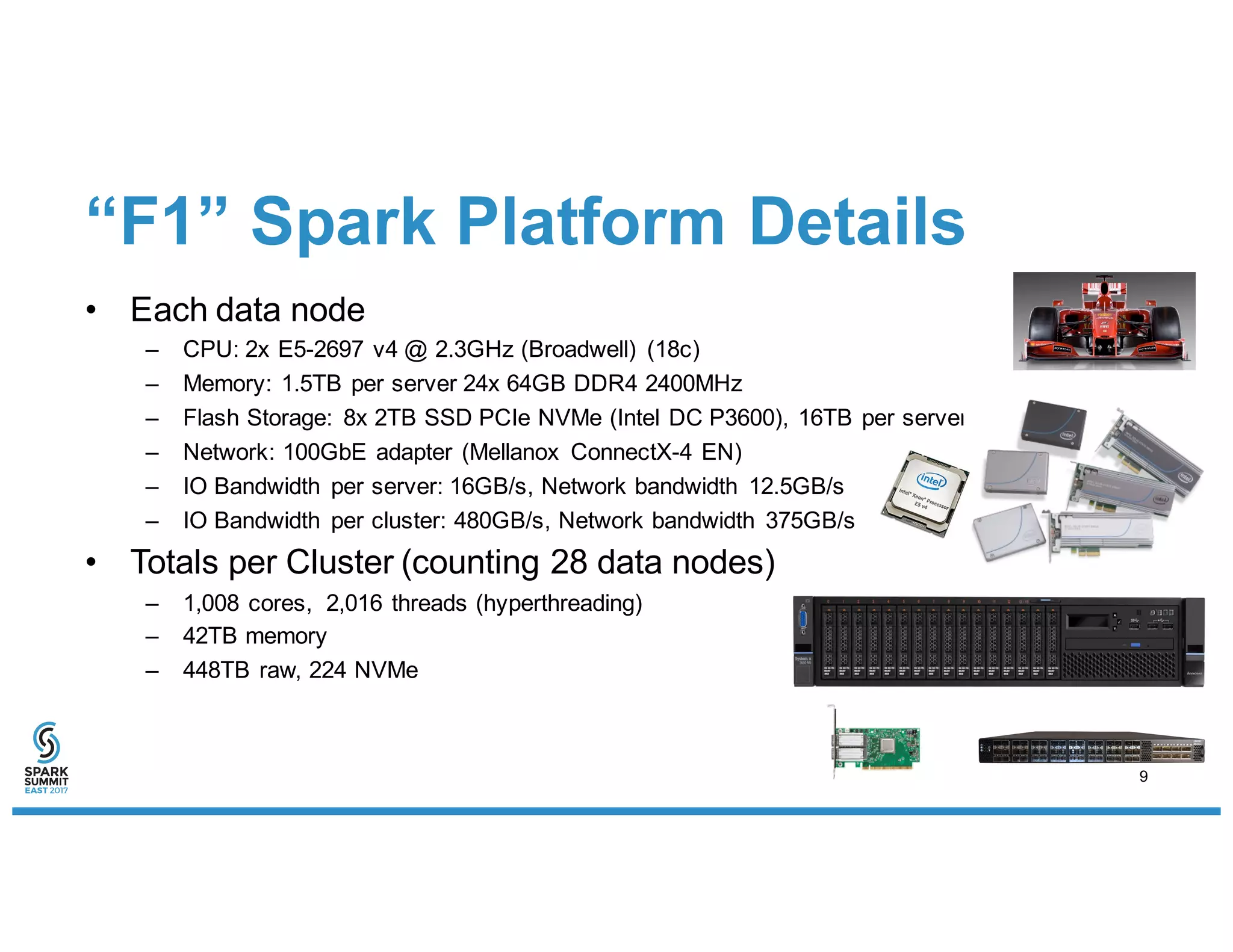

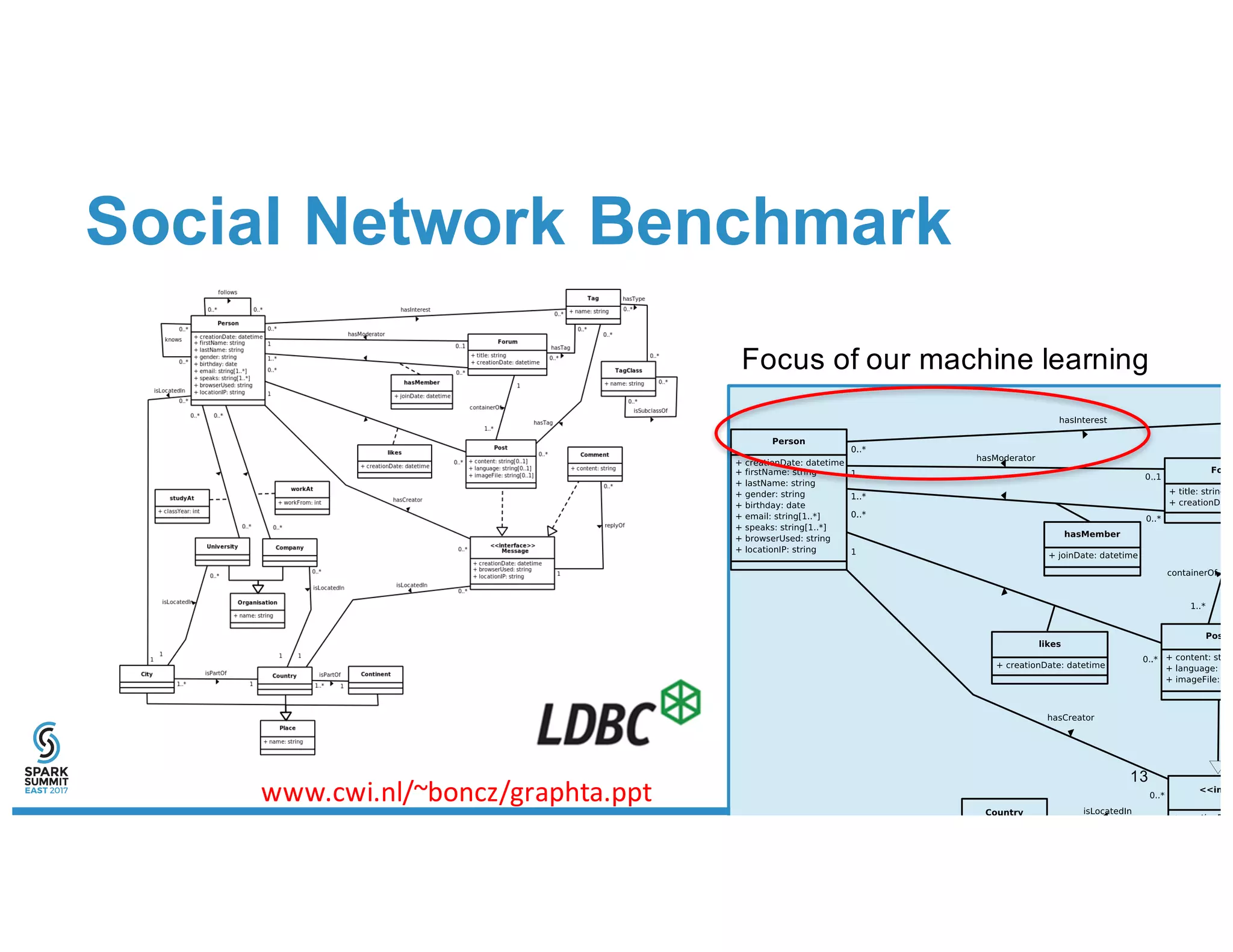

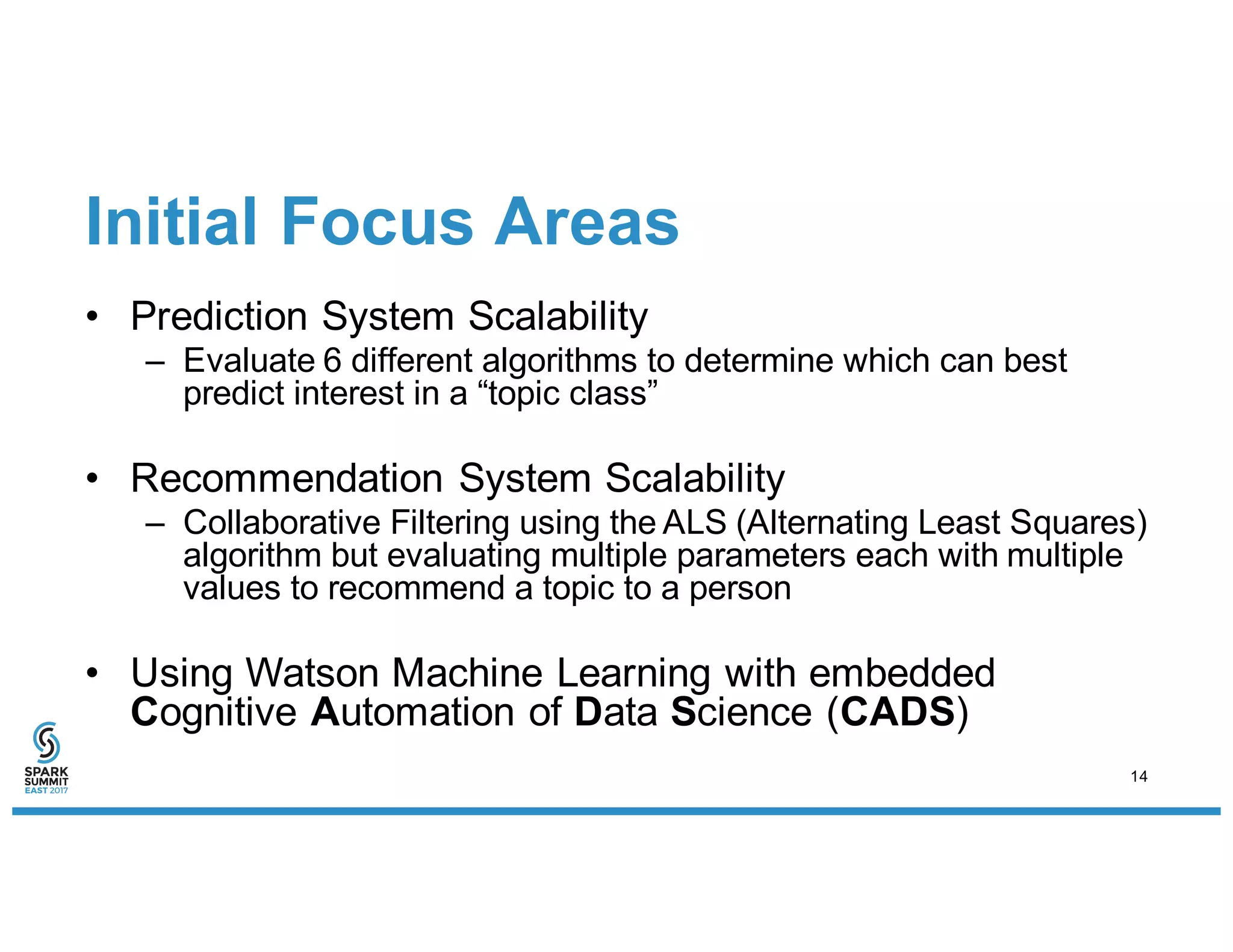

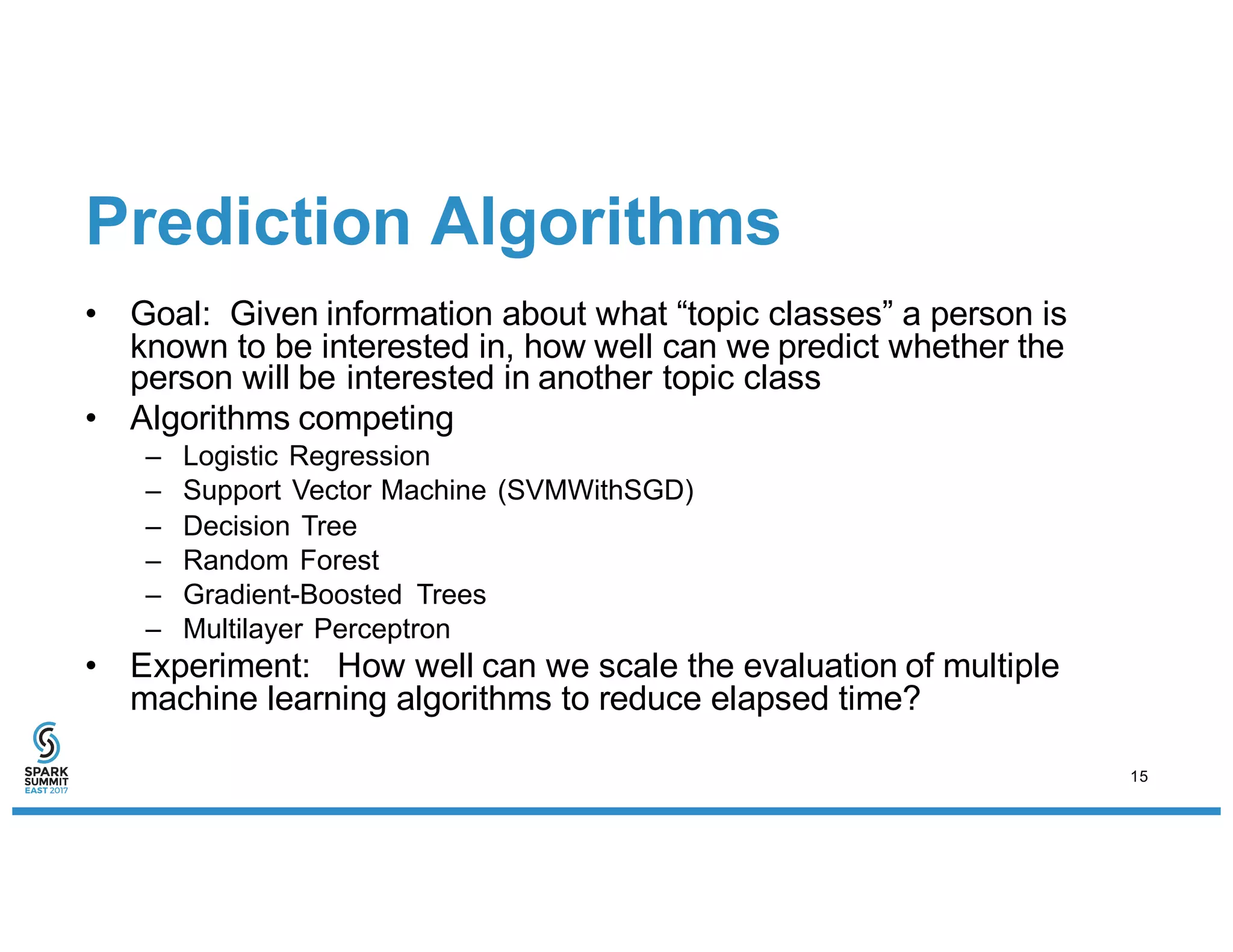

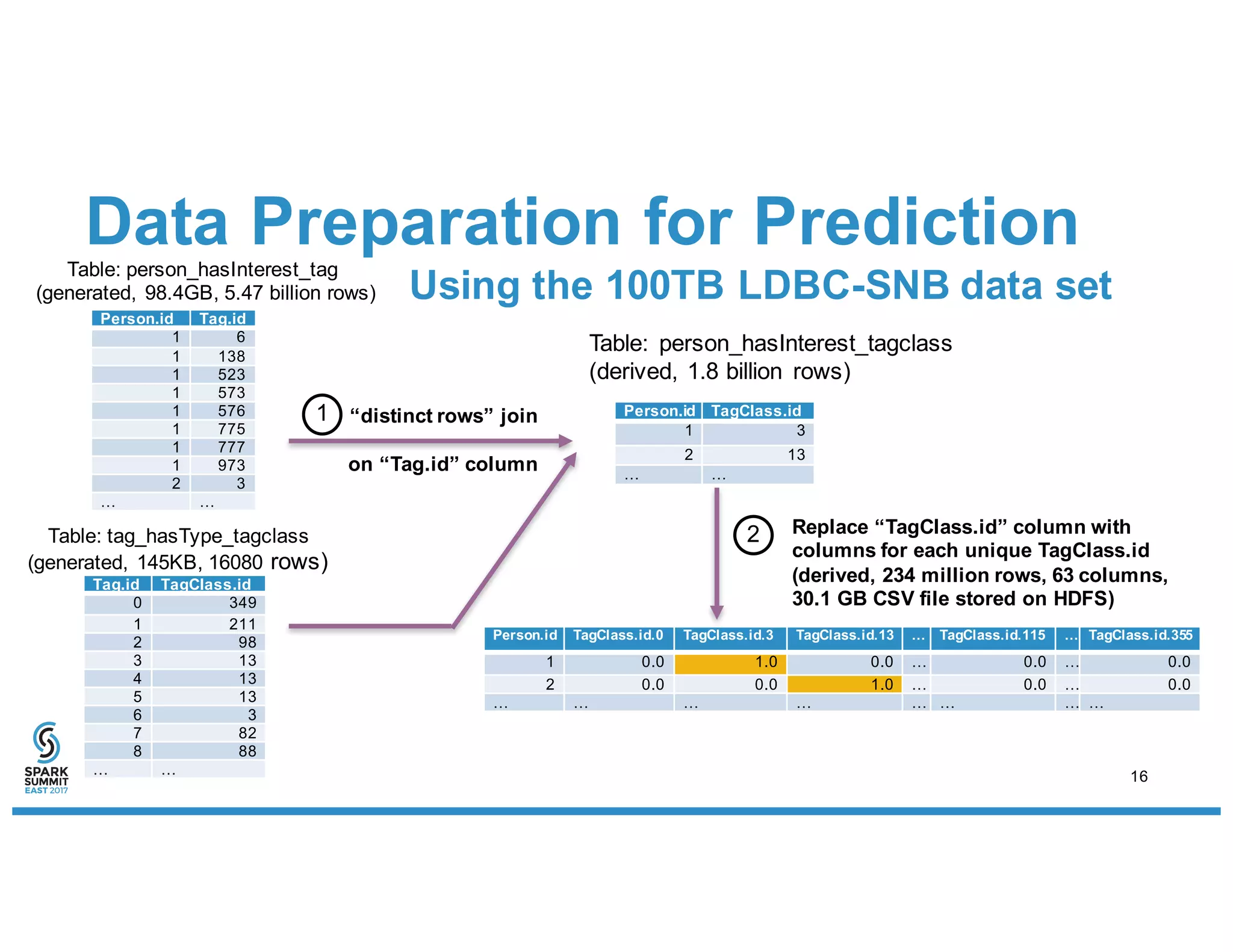

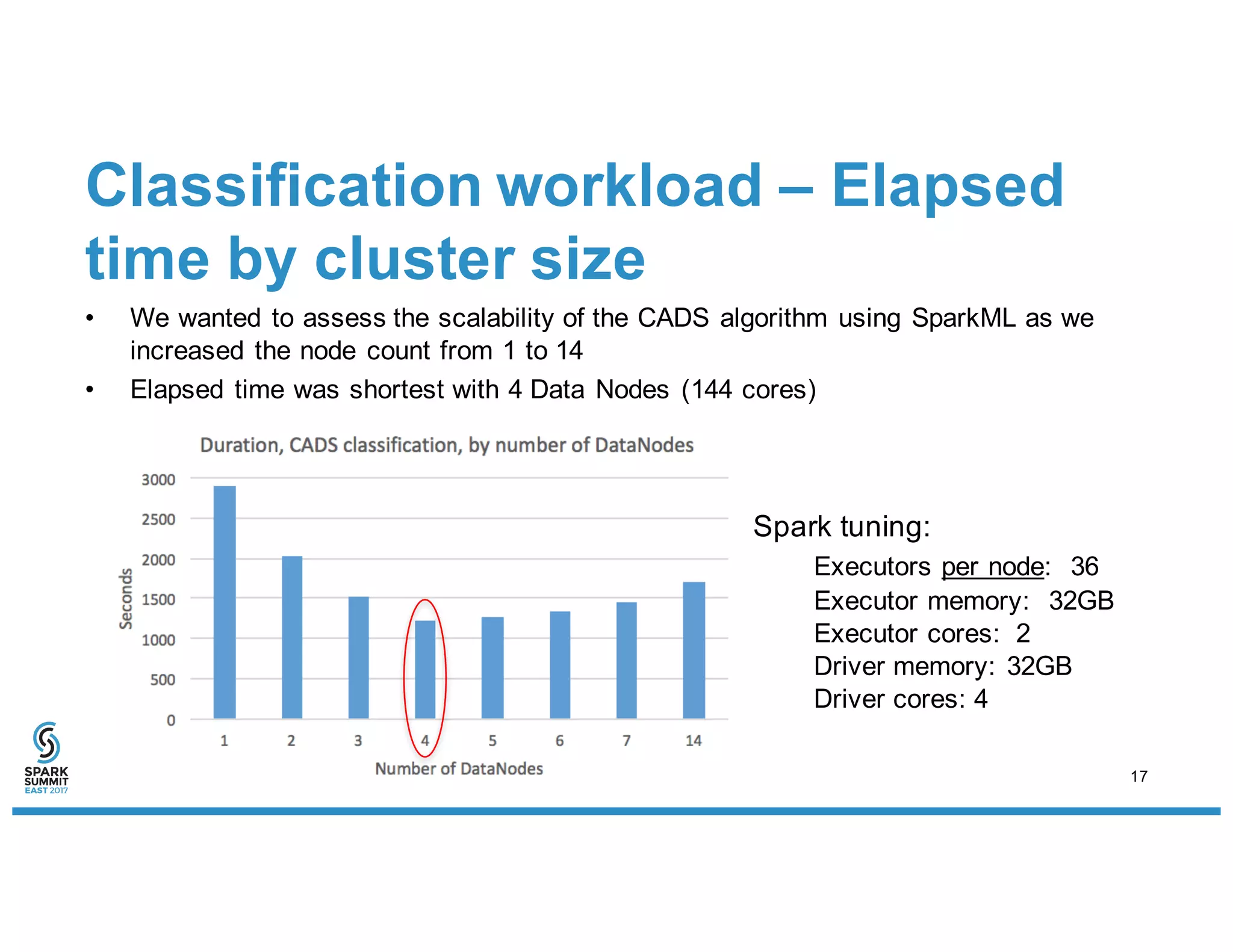

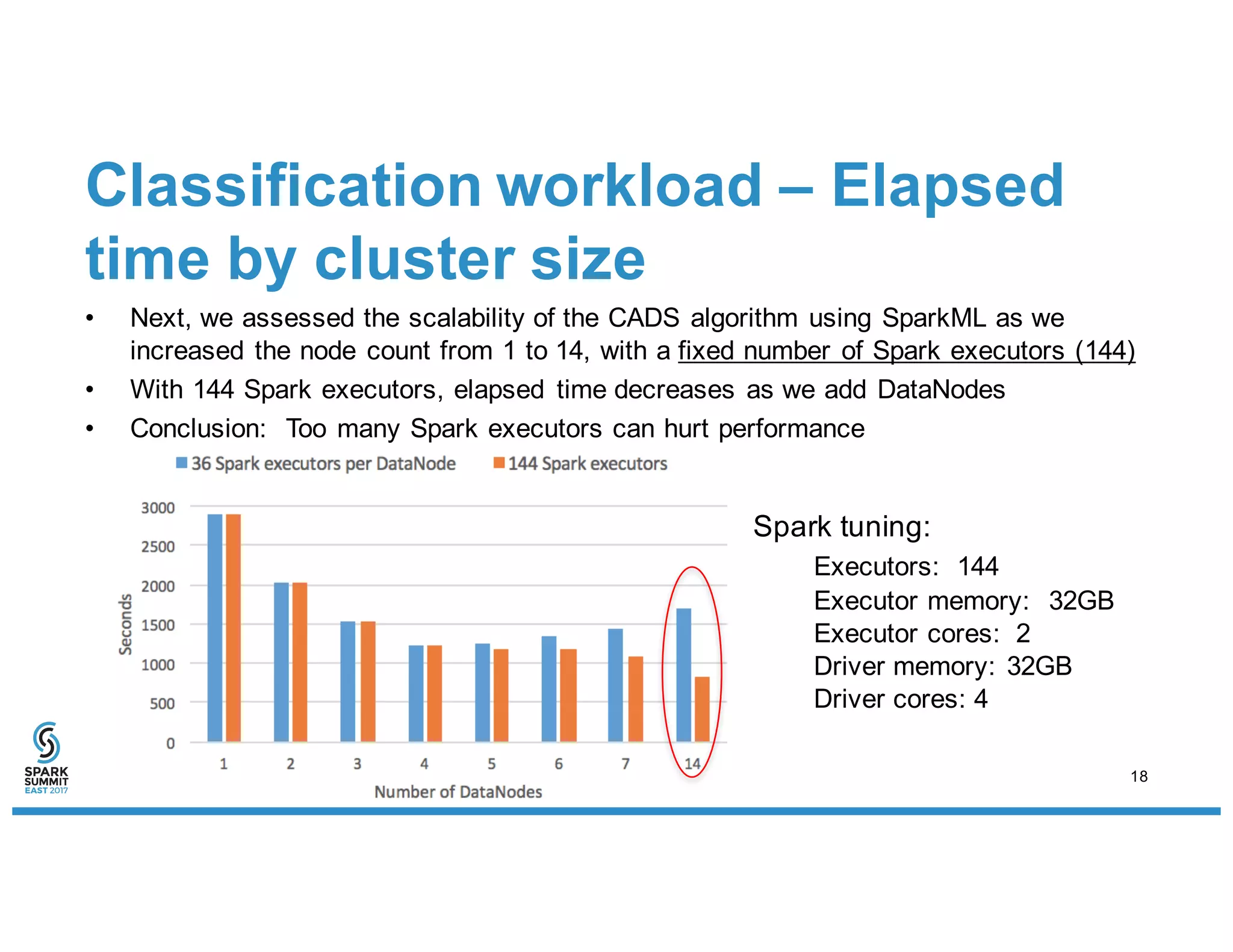

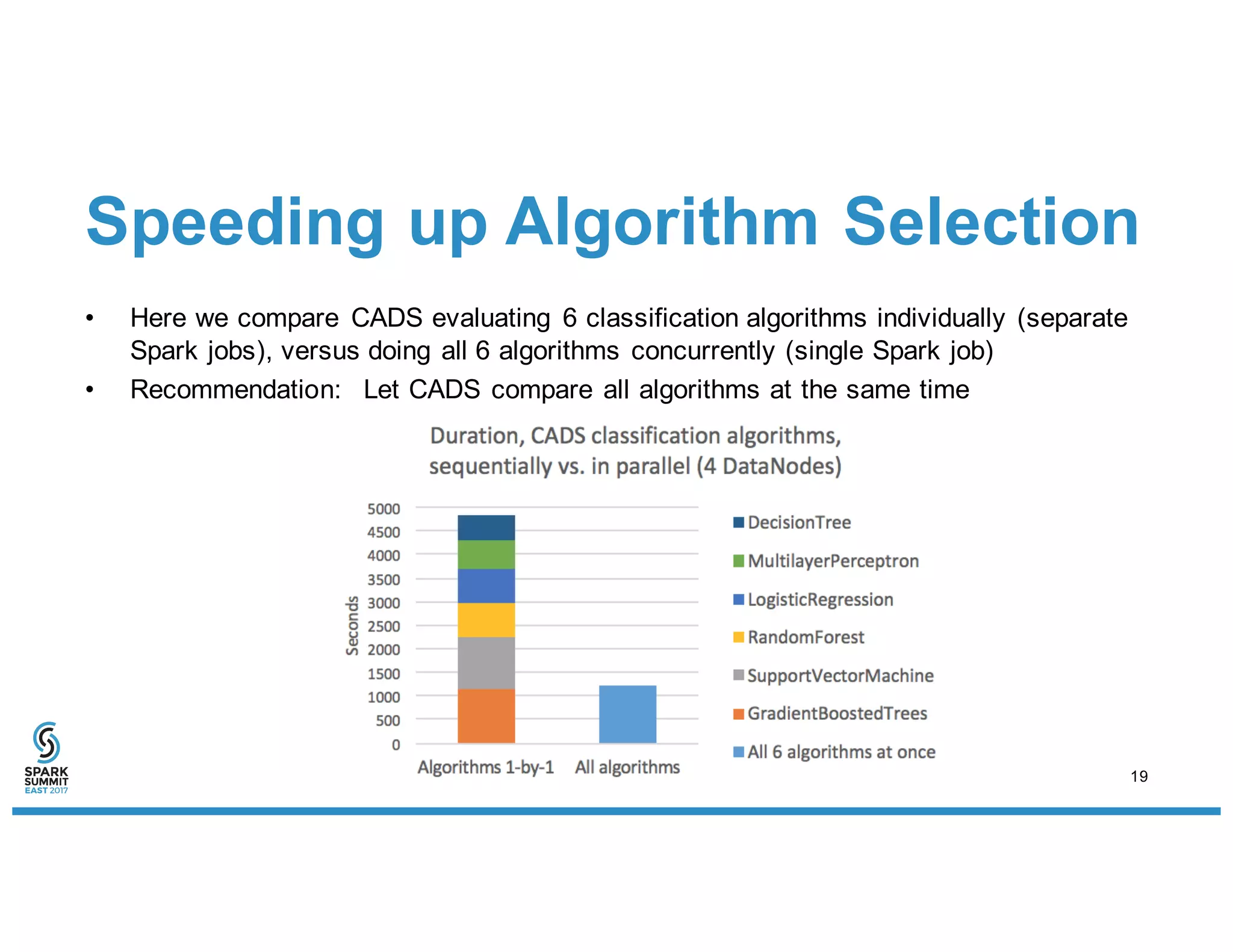

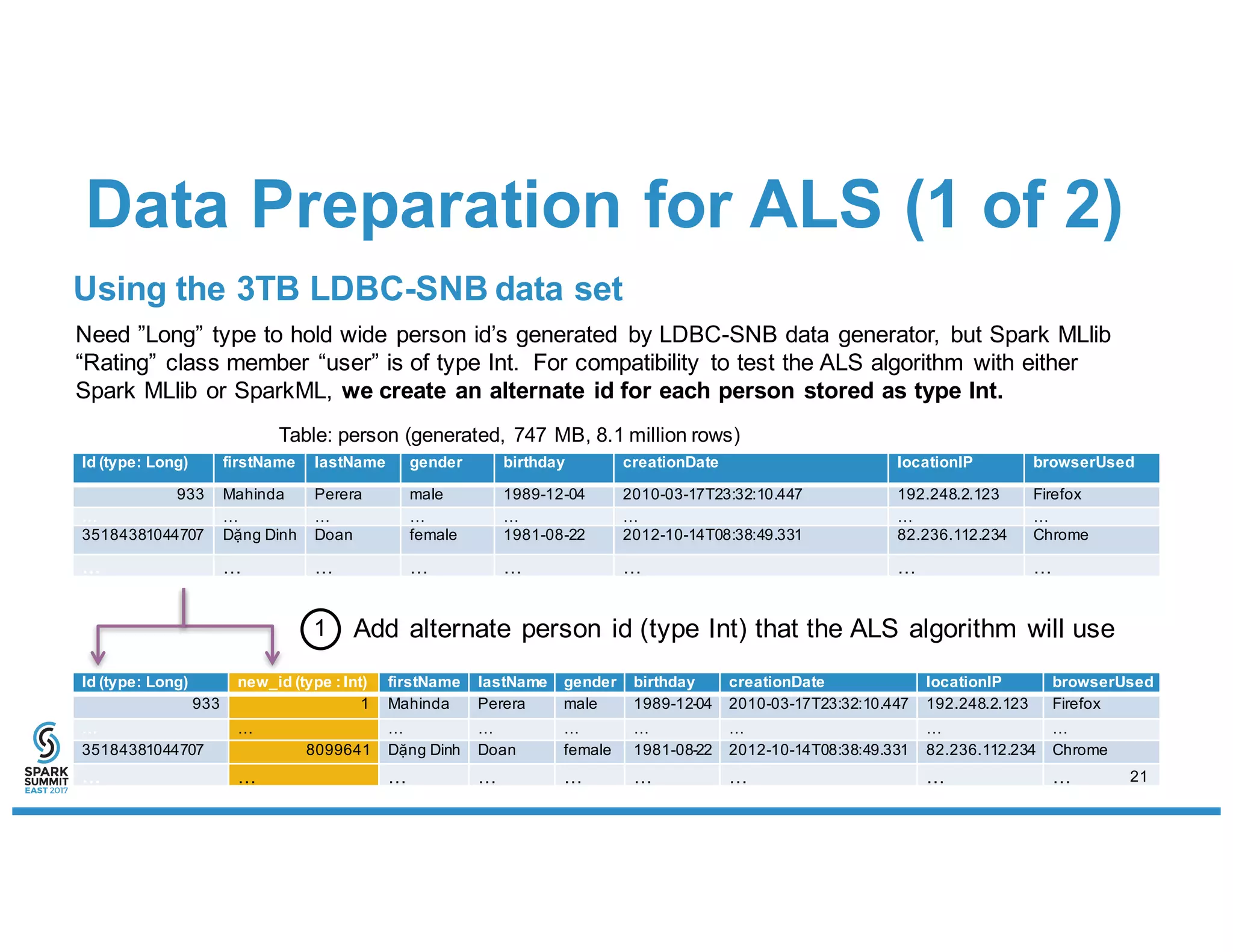

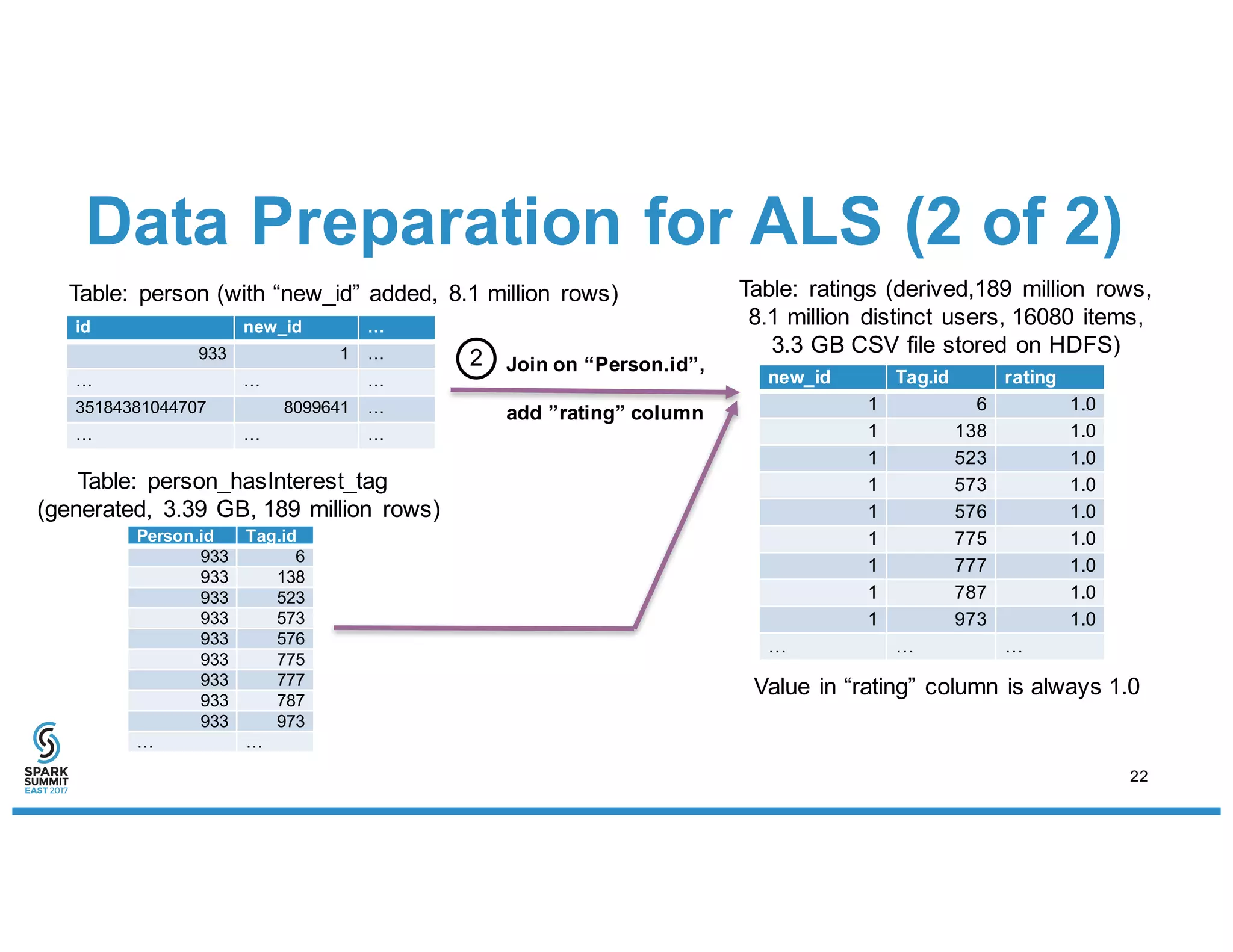

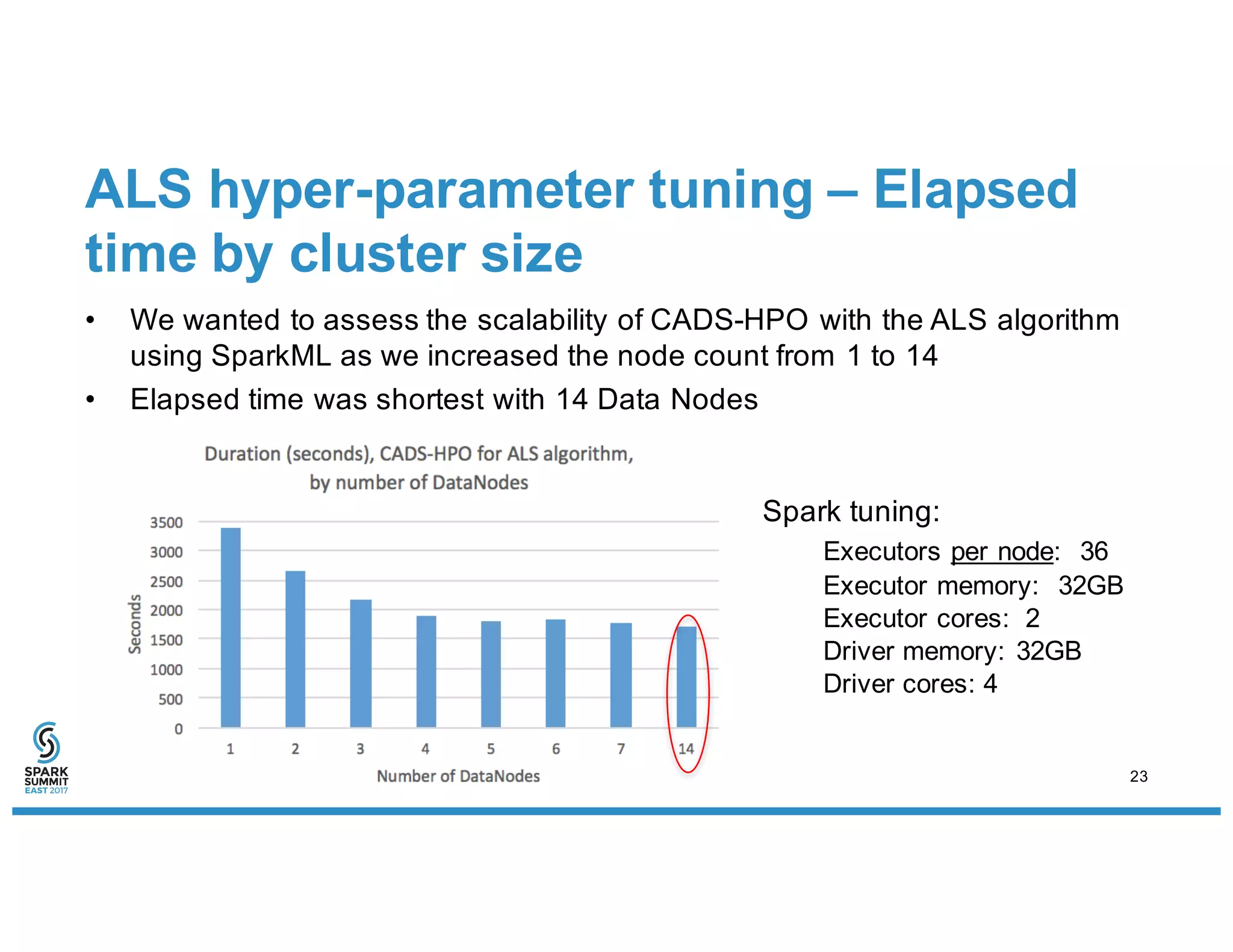

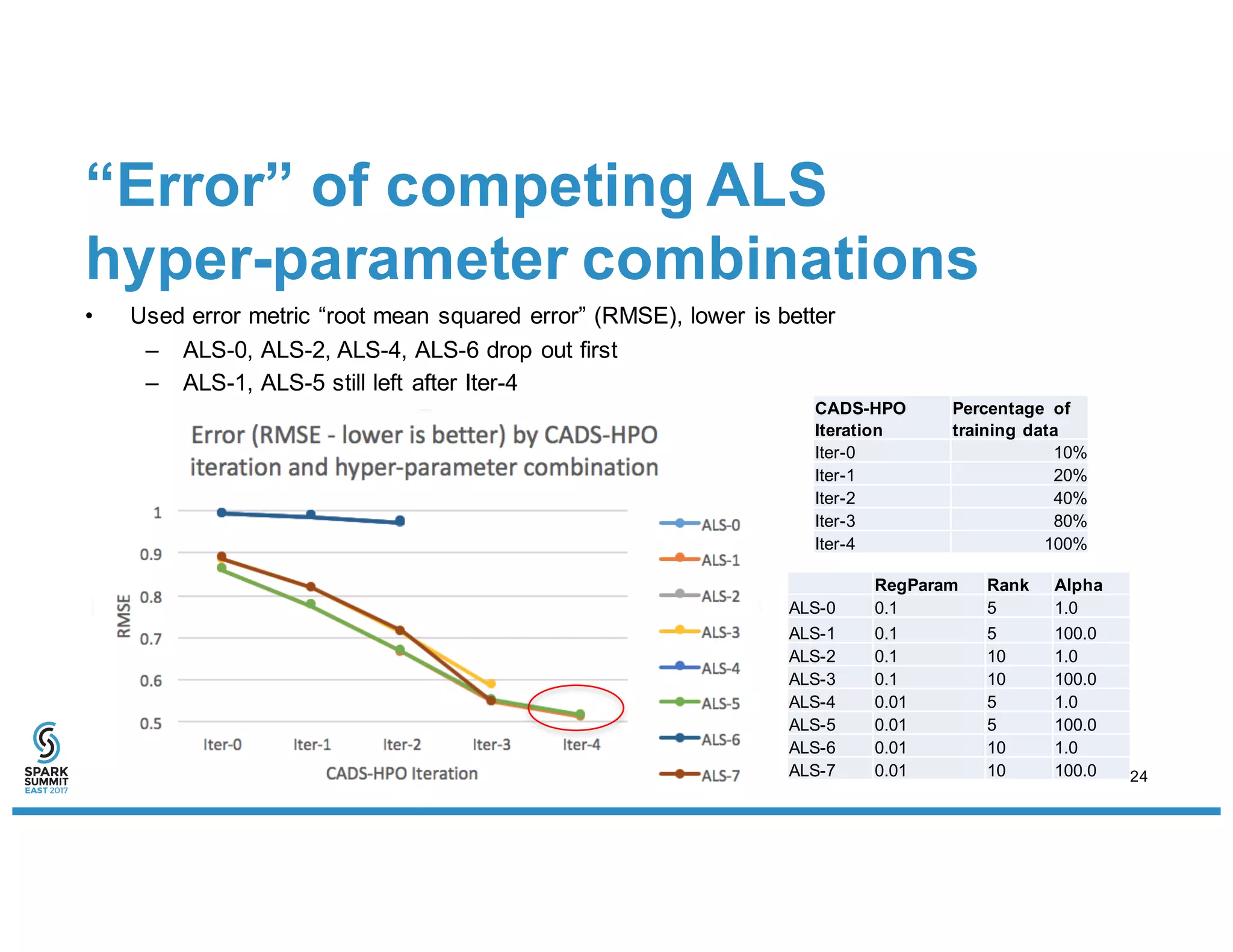

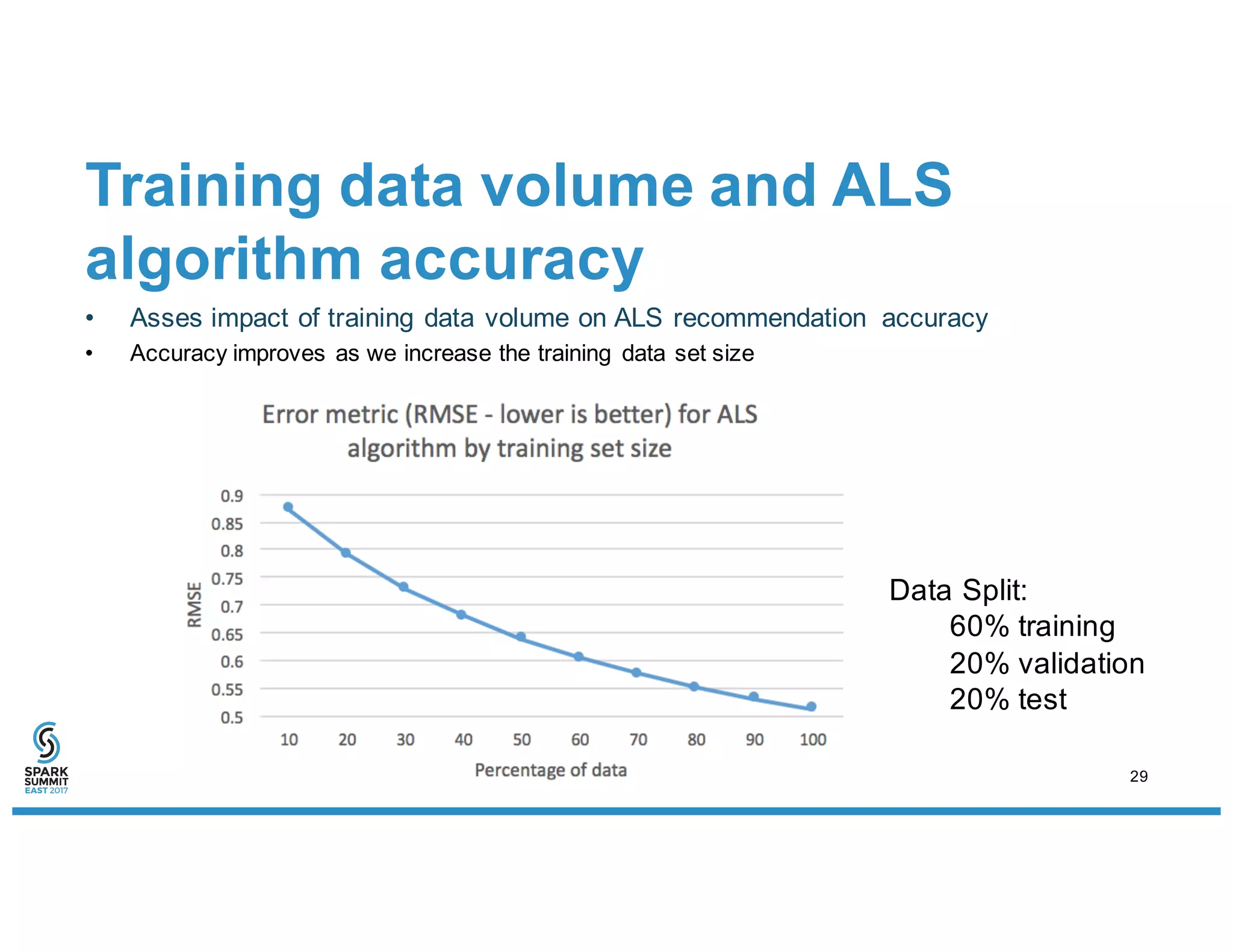

The document discusses IBM's use of SparkML for machine learning and describes the experimental cluster environment used to explore scalability. It covers several machine learning algorithms and their performance, focusing on prediction and recommendation systems that use synthetic data. Future work includes further optimization and tuning of the SparkML algorithms and exploration of additional classification methods.