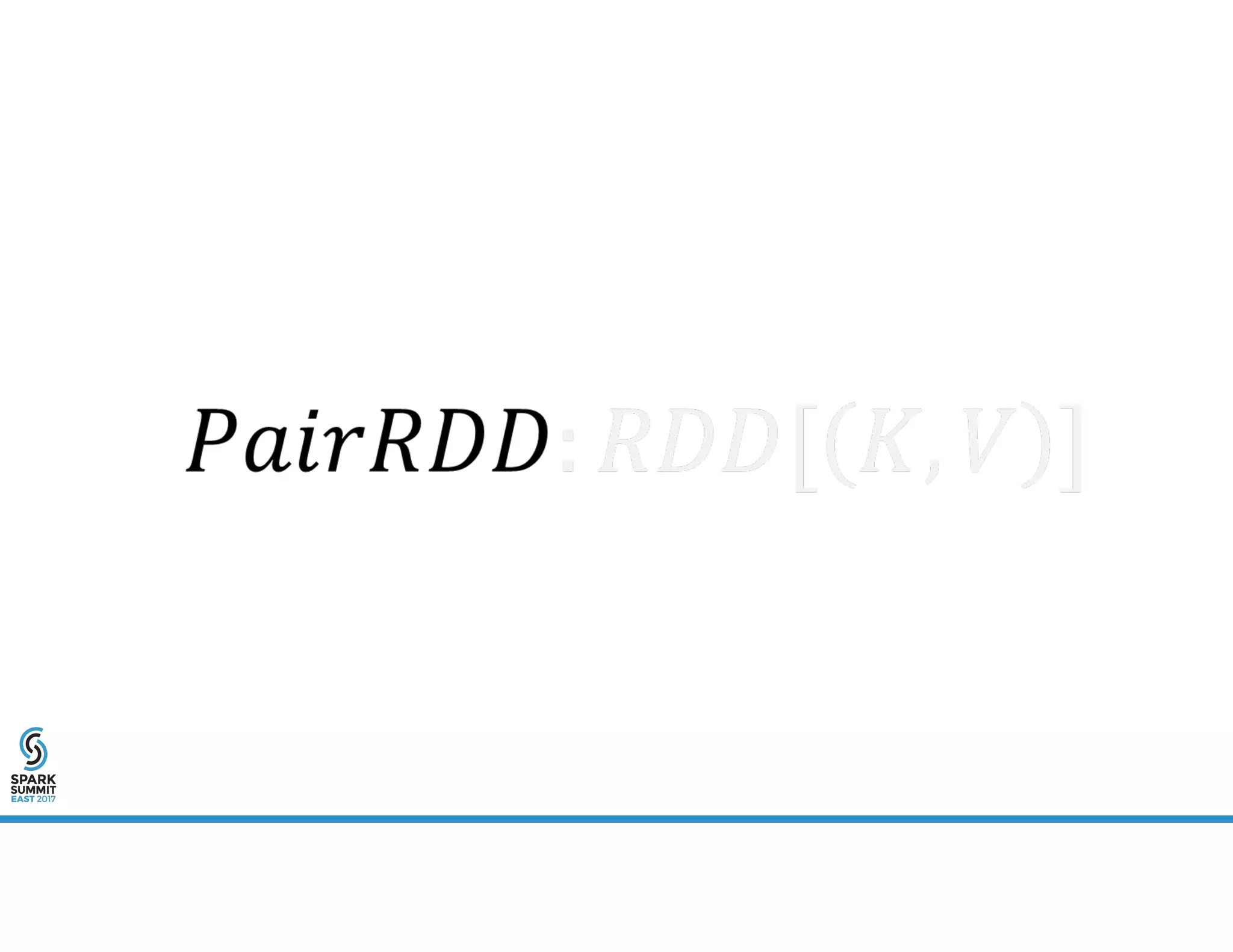

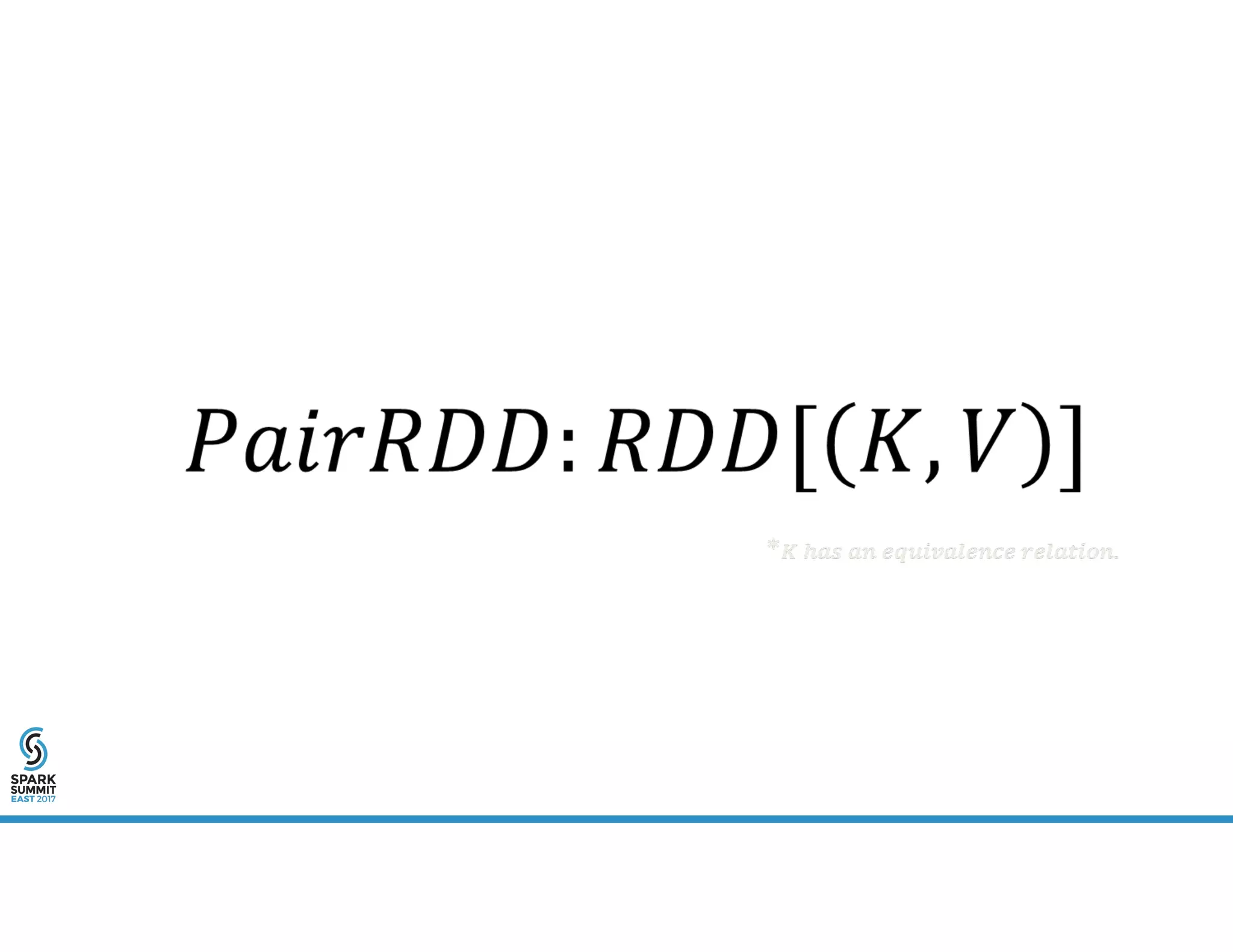

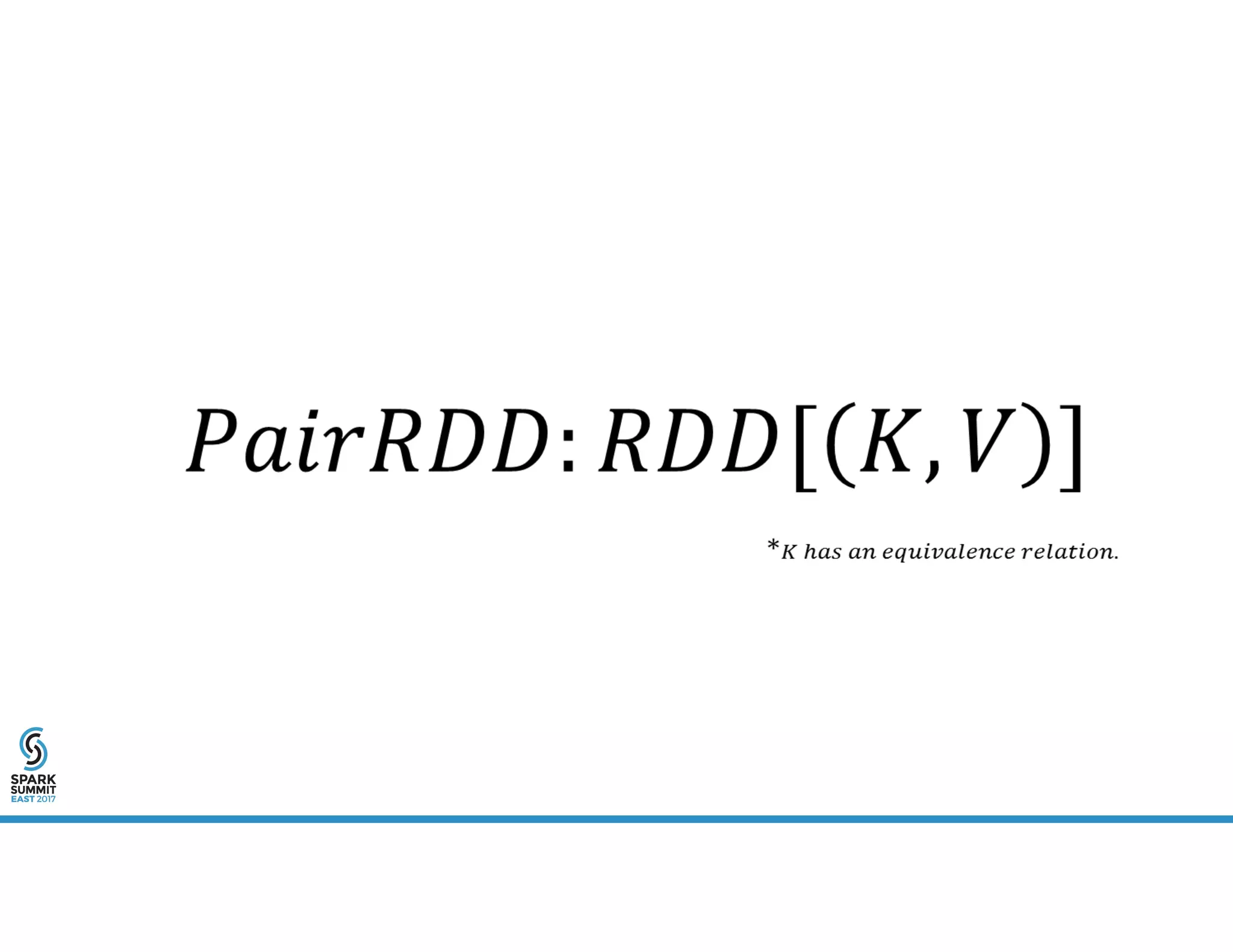

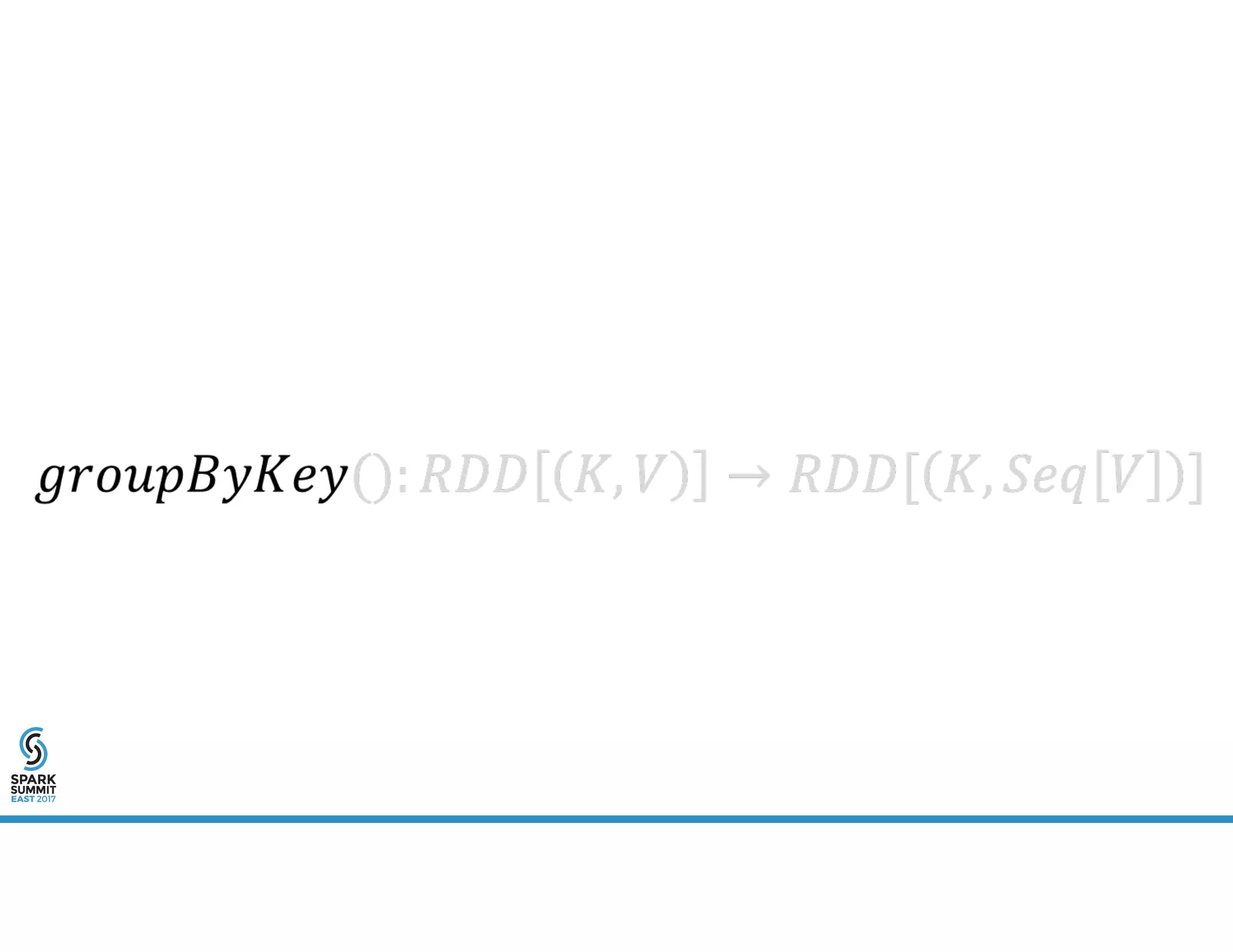

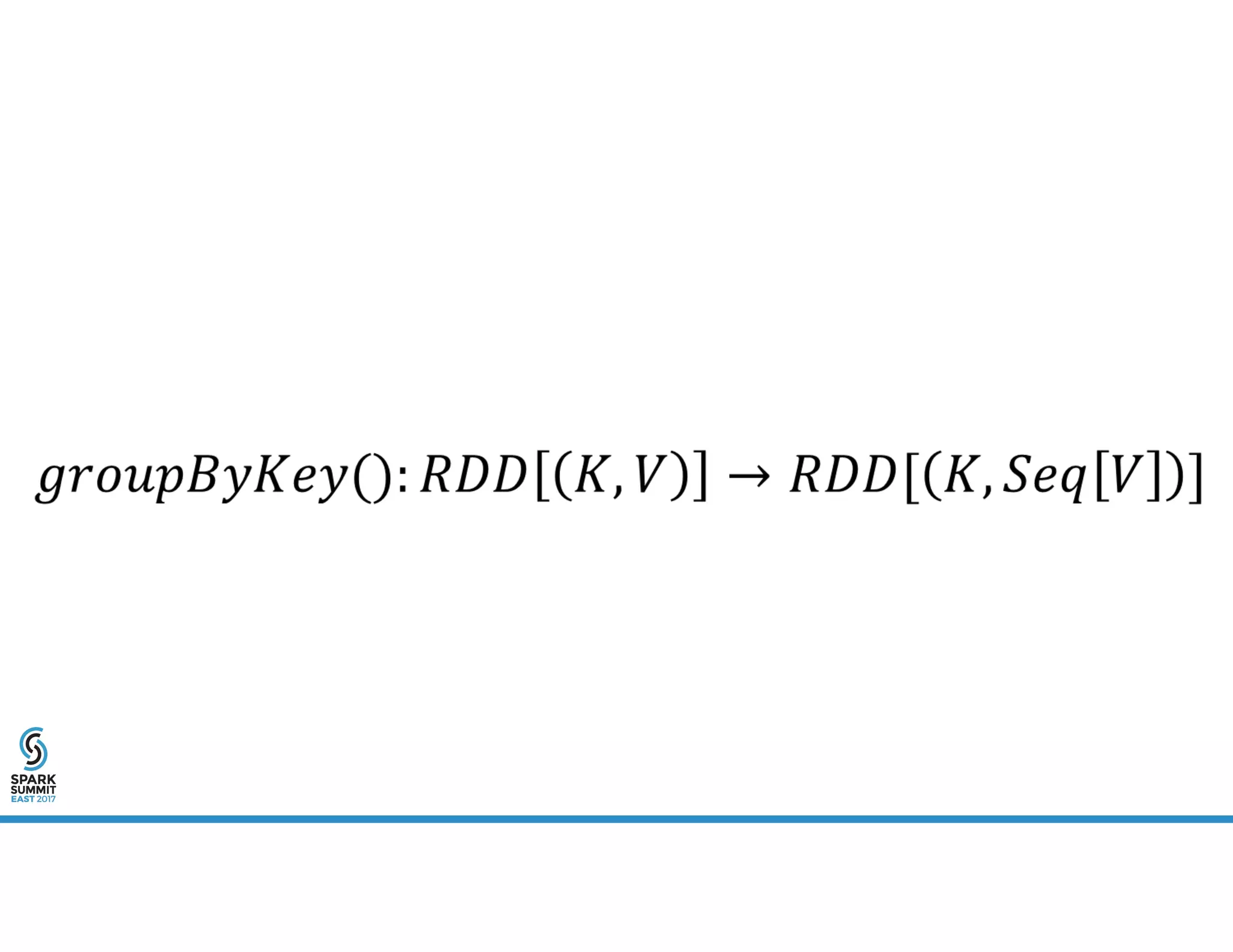

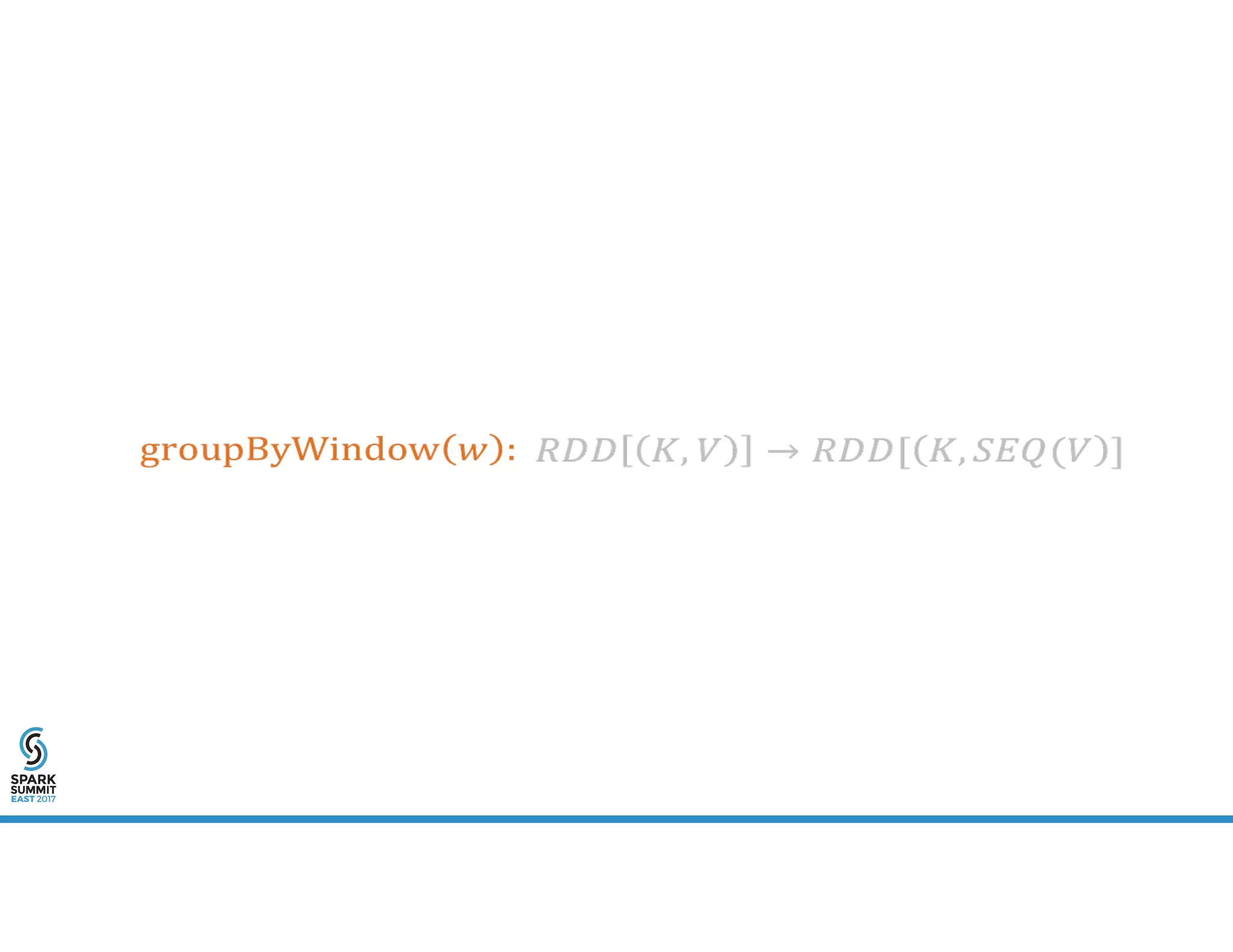

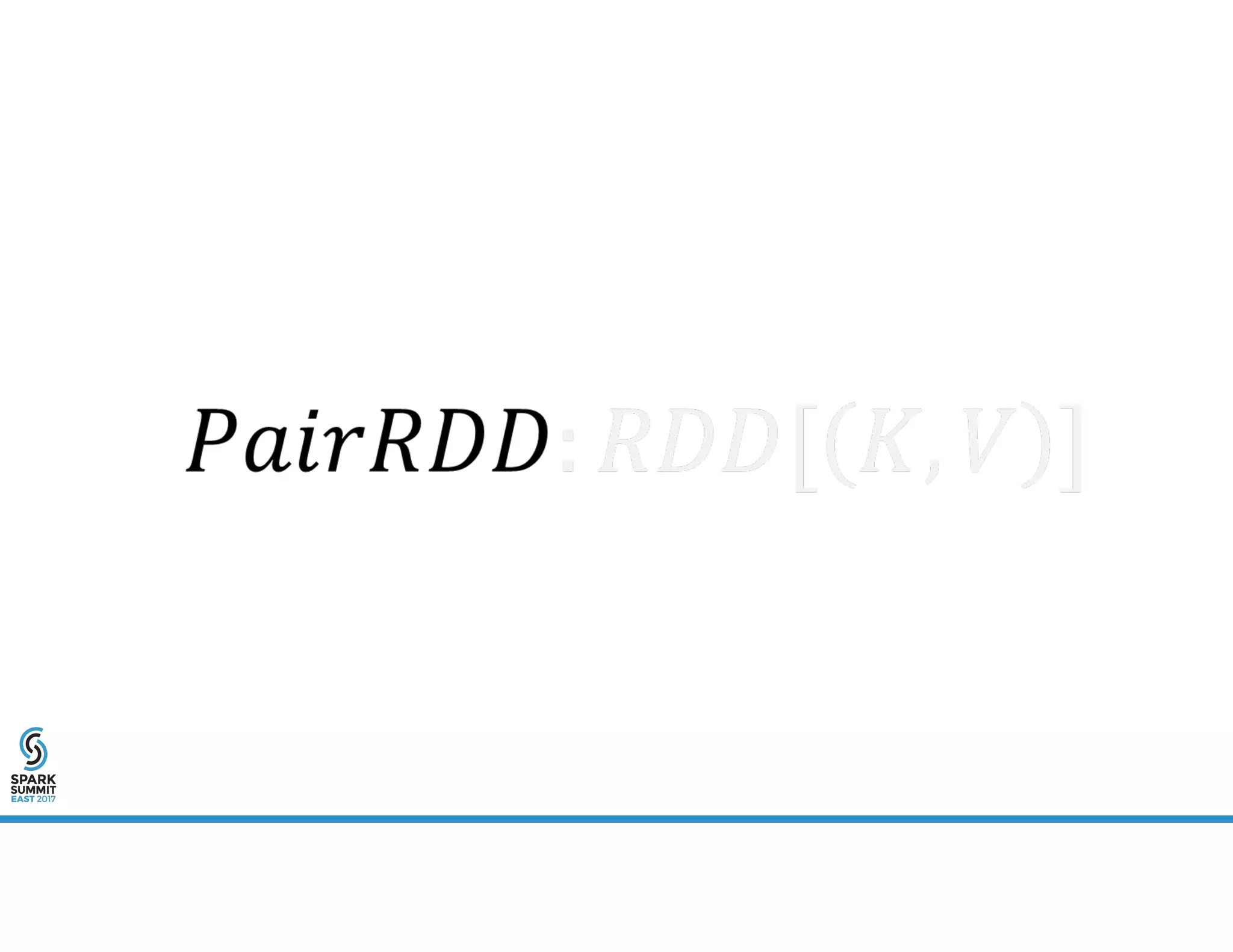

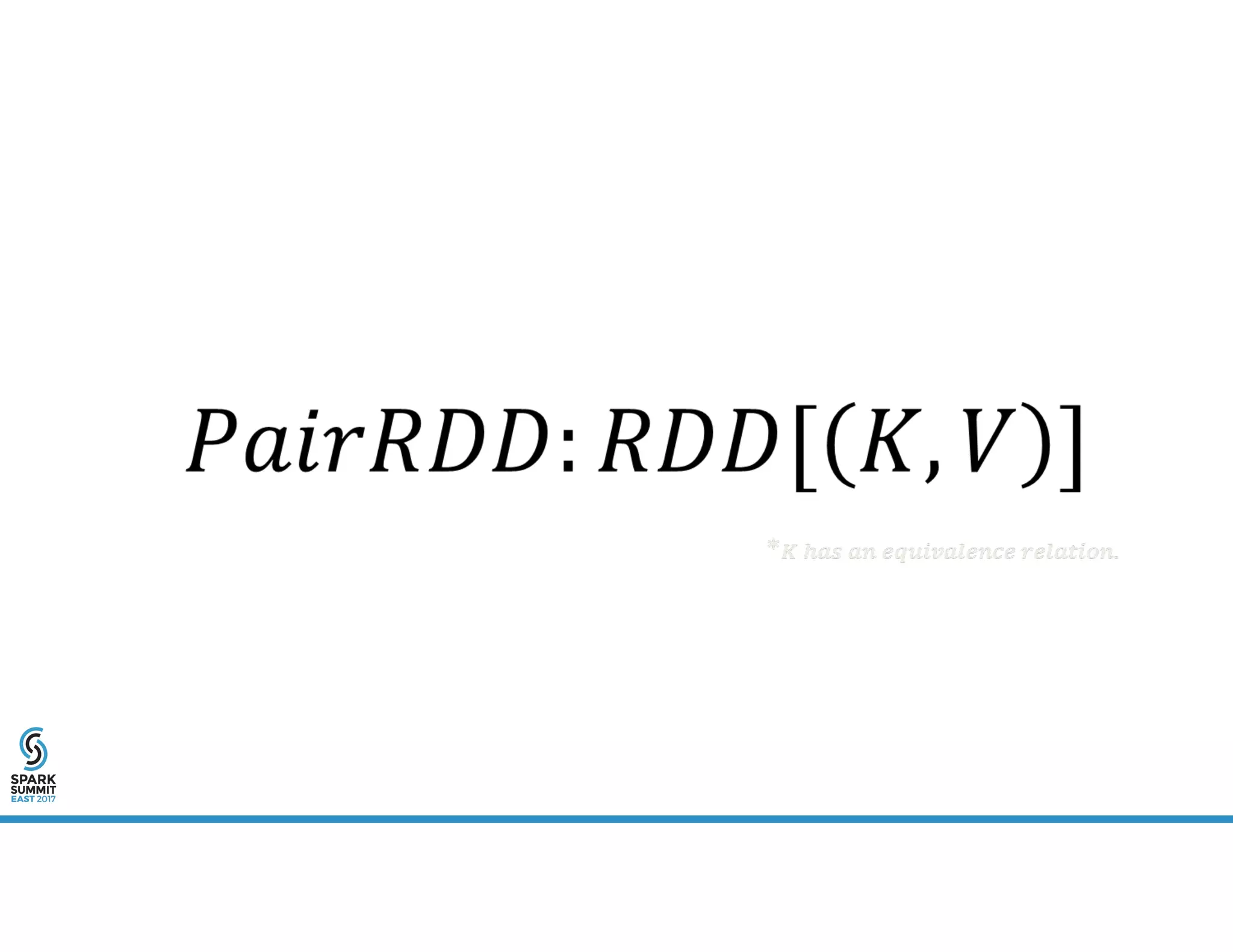

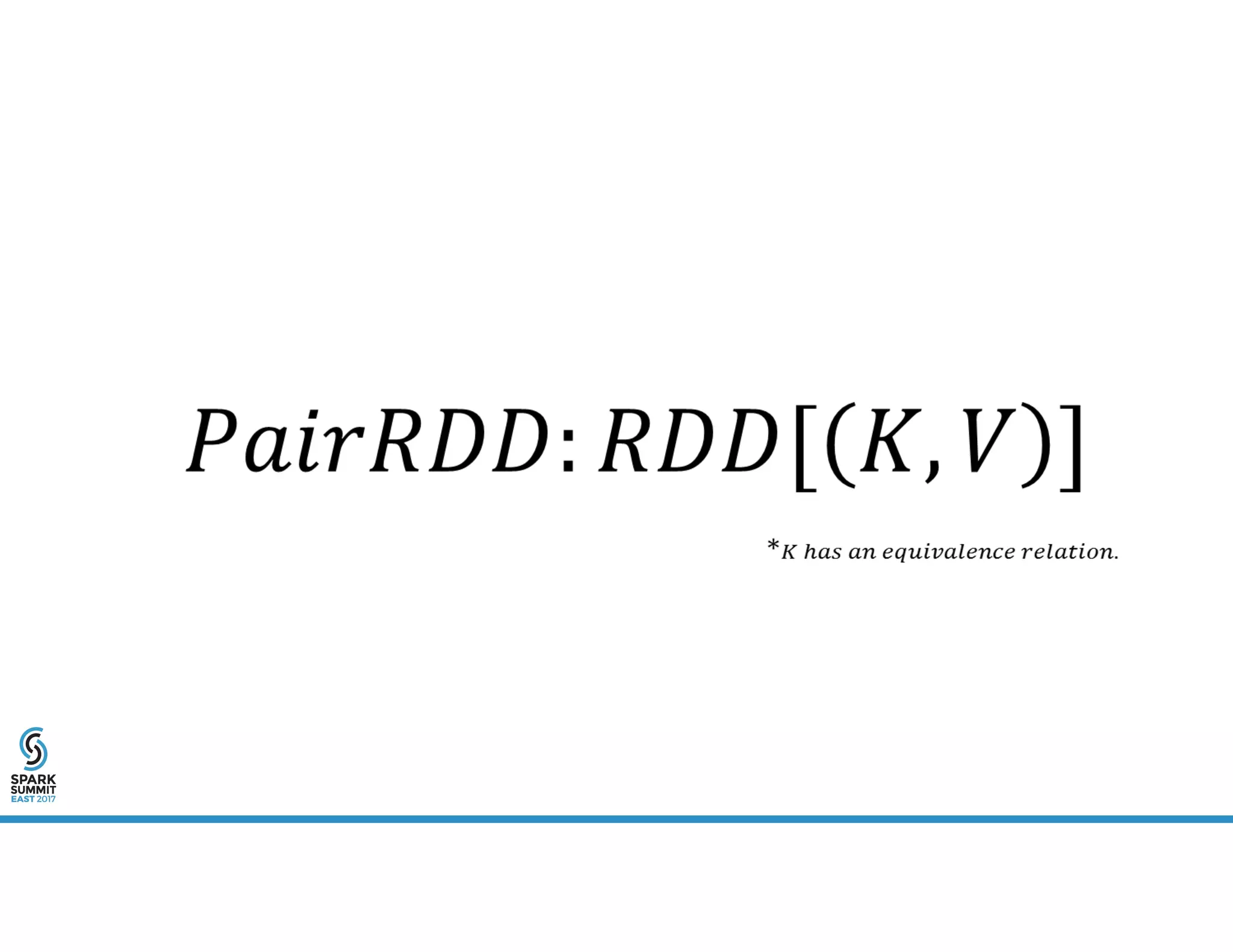

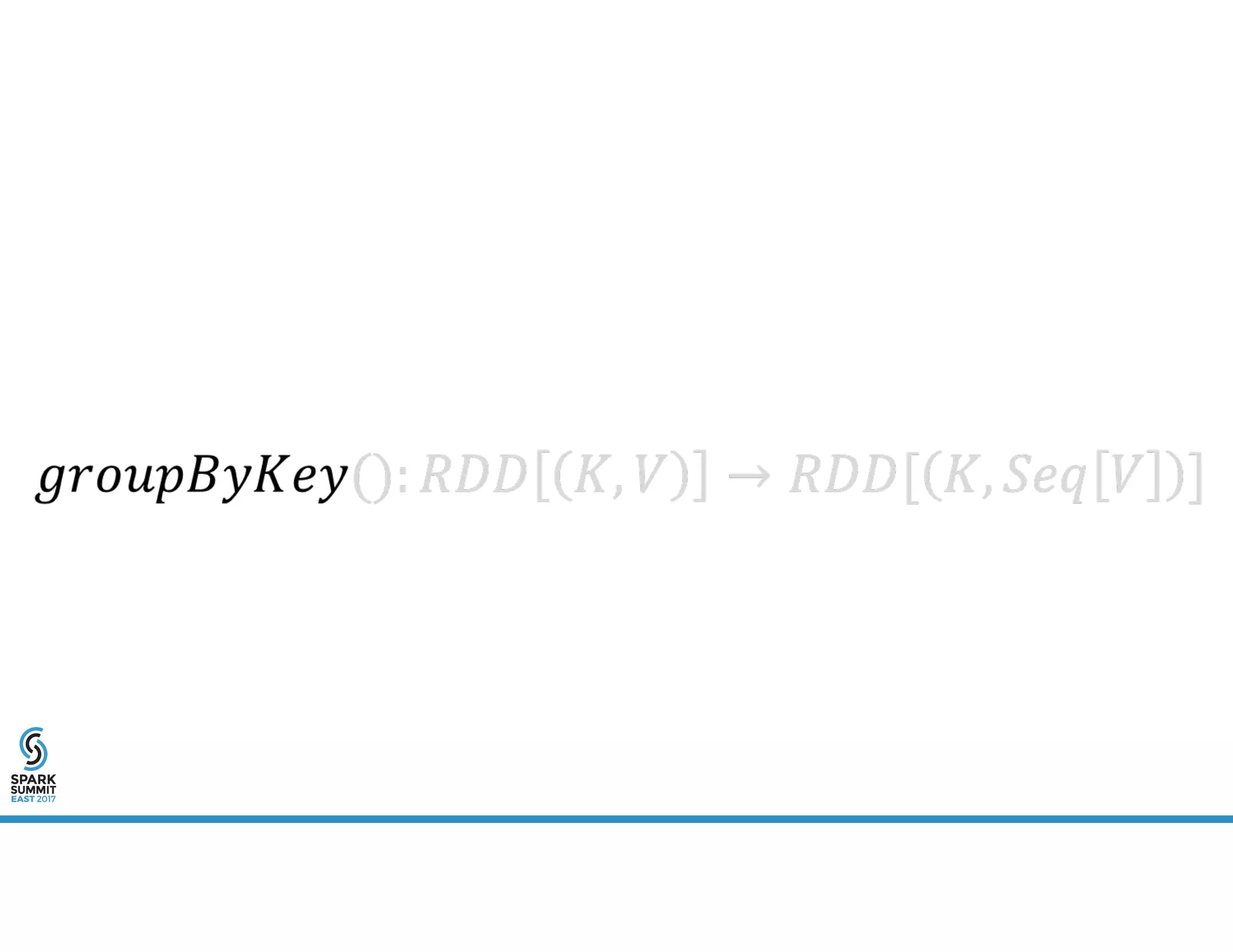

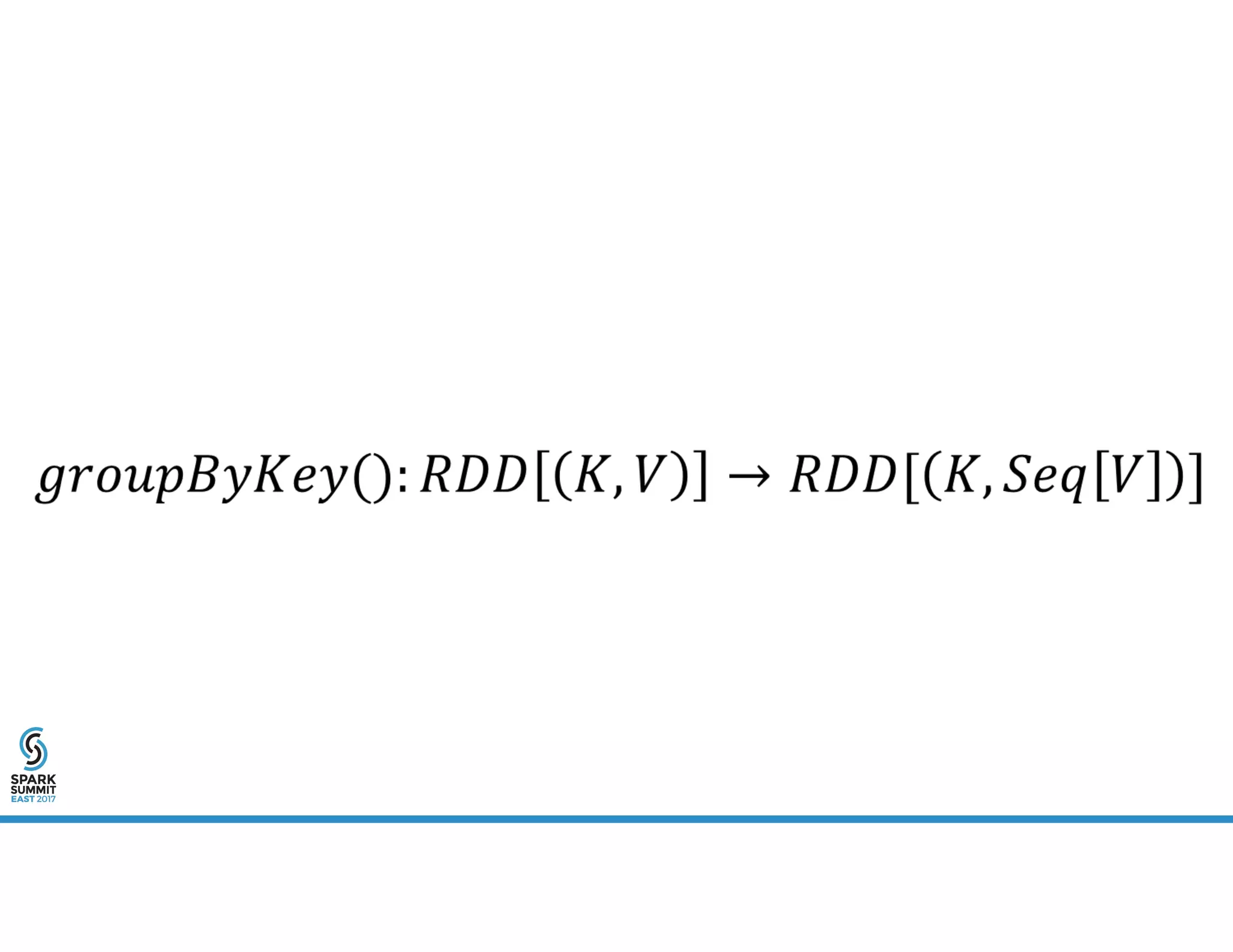

![w is a window specification e.g. 500ms, 5s, 3 business days

RDD[(K,V)] -> RDD[(K,Seq[V])]](https://image.slidesharecdn.com/1adavidpalaitis-170214185024/75/New-Directions-in-pySpark-for-Time-Series-Analysis-Spark-Summit-East-talk-by-David-Palaitis-40-2048.jpg)
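The slide's signature `RDD[(K,V)] -> RDD[(K,Seq[V])]` can be read as "bucket values by window". The following is a minimal sketch of that reading in plain Python, with a list of `(timestamp, value)` pairs standing in for an RDD. The function name `group_by_window`, the choice of tumbling (non-overlapping) windows aligned to the Unix epoch, and the sample data are all assumptions made for illustration; they are not taken from the talk or from any library.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def group_by_window(records, window):
    """Group (timestamp, value) pairs into tumbling windows of length `window`.

    Hypothetical helper: returns a list of (window_start, [values]) pairs
    sorted by window_start. Windows are aligned to the Unix epoch.
    """
    epoch = datetime(1970, 1, 1)
    buckets = defaultdict(list)
    for ts, value in records:
        # Floor the timestamp to the start of the window that contains it.
        start = epoch + ((ts - epoch) // window) * window
        buckets[start].append(value)
    return sorted(buckets.items())


# Made-up sample data: three observations within ten seconds.
ticks = [
    (datetime(2017, 2, 8, 9, 30, 0), 10.0),
    (datetime(2017, 2, 8, 9, 30, 3), 10.5),
    (datetime(2017, 2, 8, 9, 30, 7), 11.0),
]

# w = 5s, one of the example window specifications on the slide.
windows = group_by_window(ticks, timedelta(seconds=5))
```

With a 5-second window the first two observations share the window starting at 09:30:00 and the third falls into the window starting at 09:30:05. A calendar-aware specification such as "3 business days" would need a different flooring rule than the fixed-length arithmetic used here.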

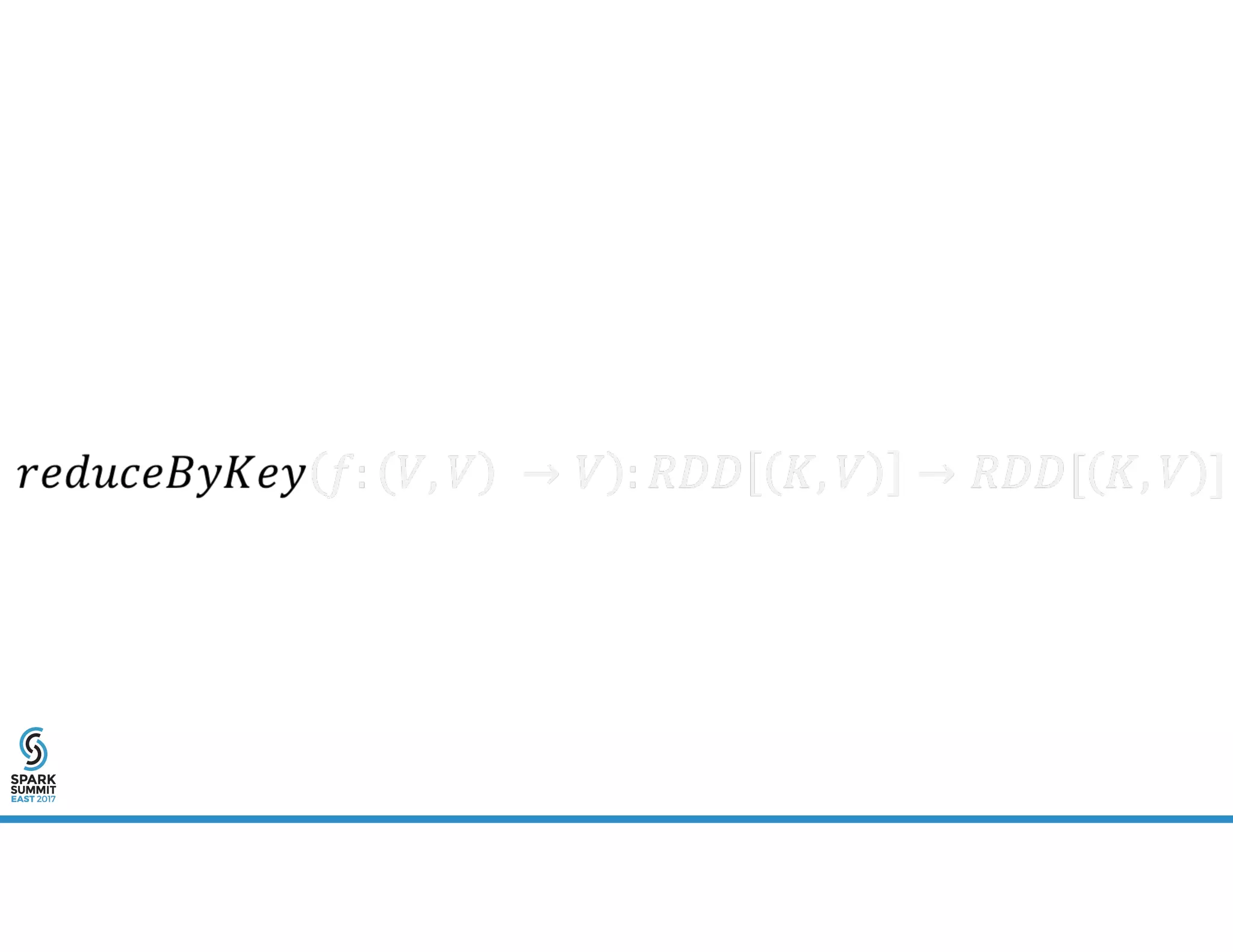

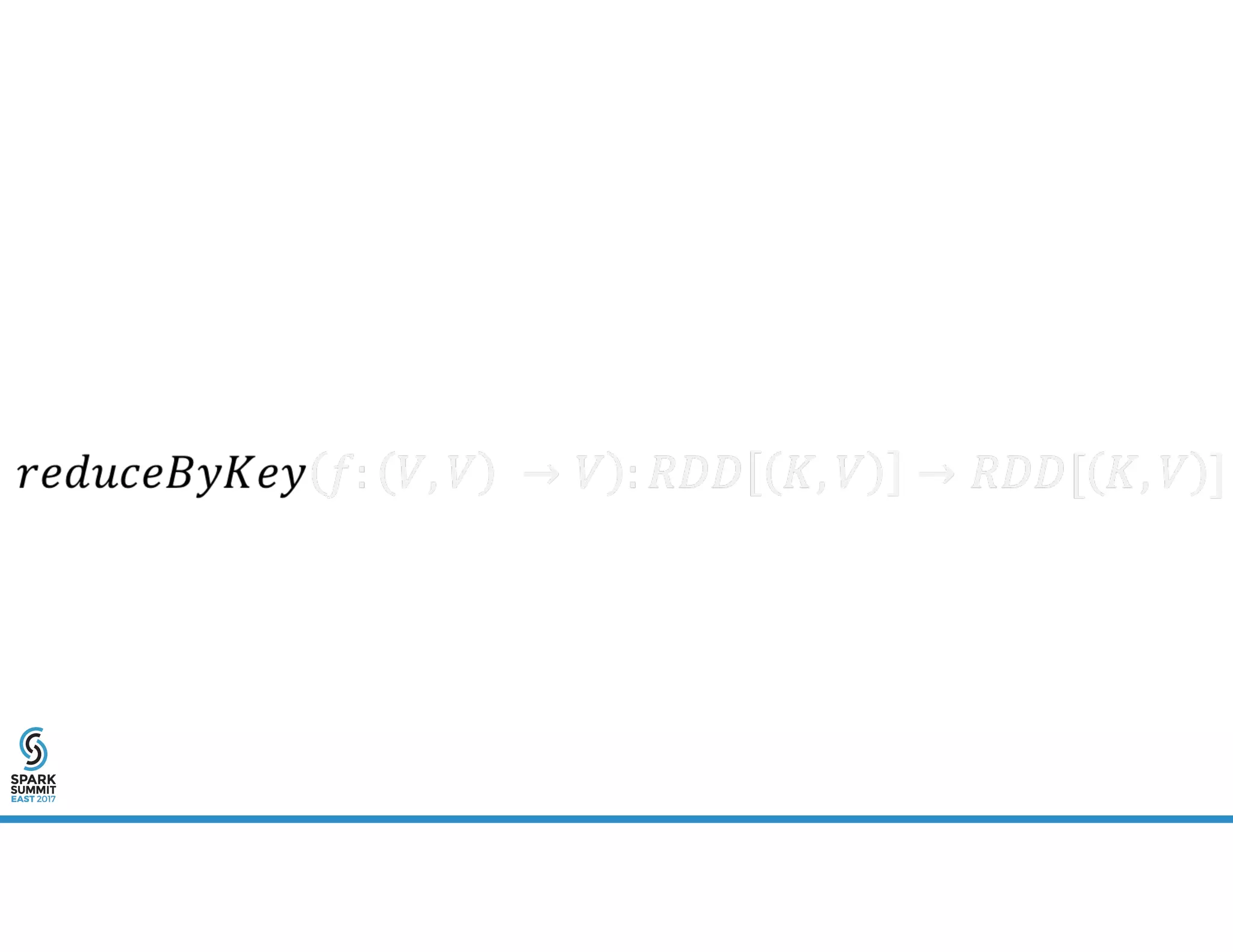

![reduceByWindow(f: (V, V) => V, w): RDD[(K, W)] => RDD[(K, V)]](https://image.slidesharecdn.com/1adavidpalaitis-170214185024/75/New-Directions-in-pySpark-for-Time-Series-Analysis-Spark-Summit-East-talk-by-David-Palaitis-41-2048.jpg)

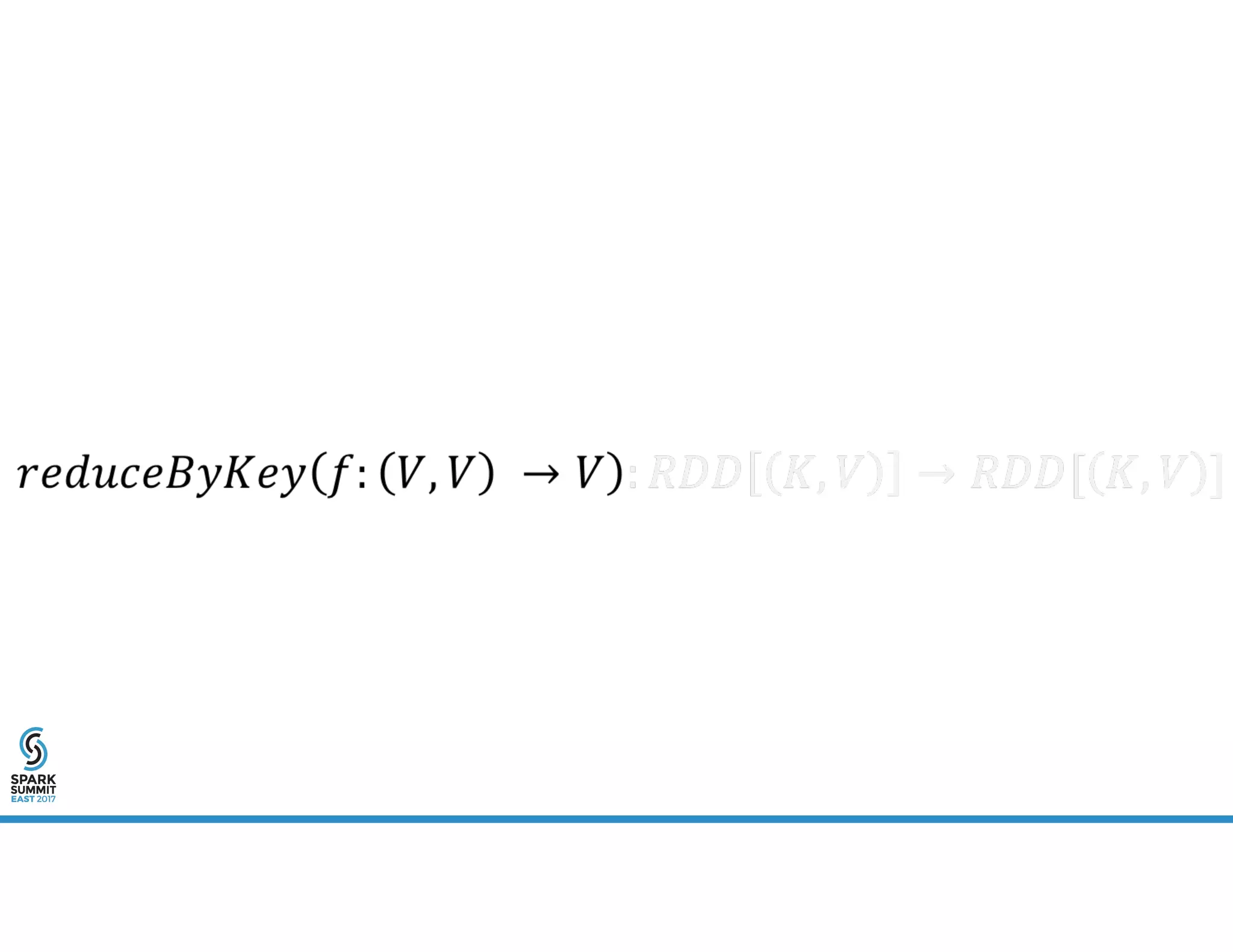

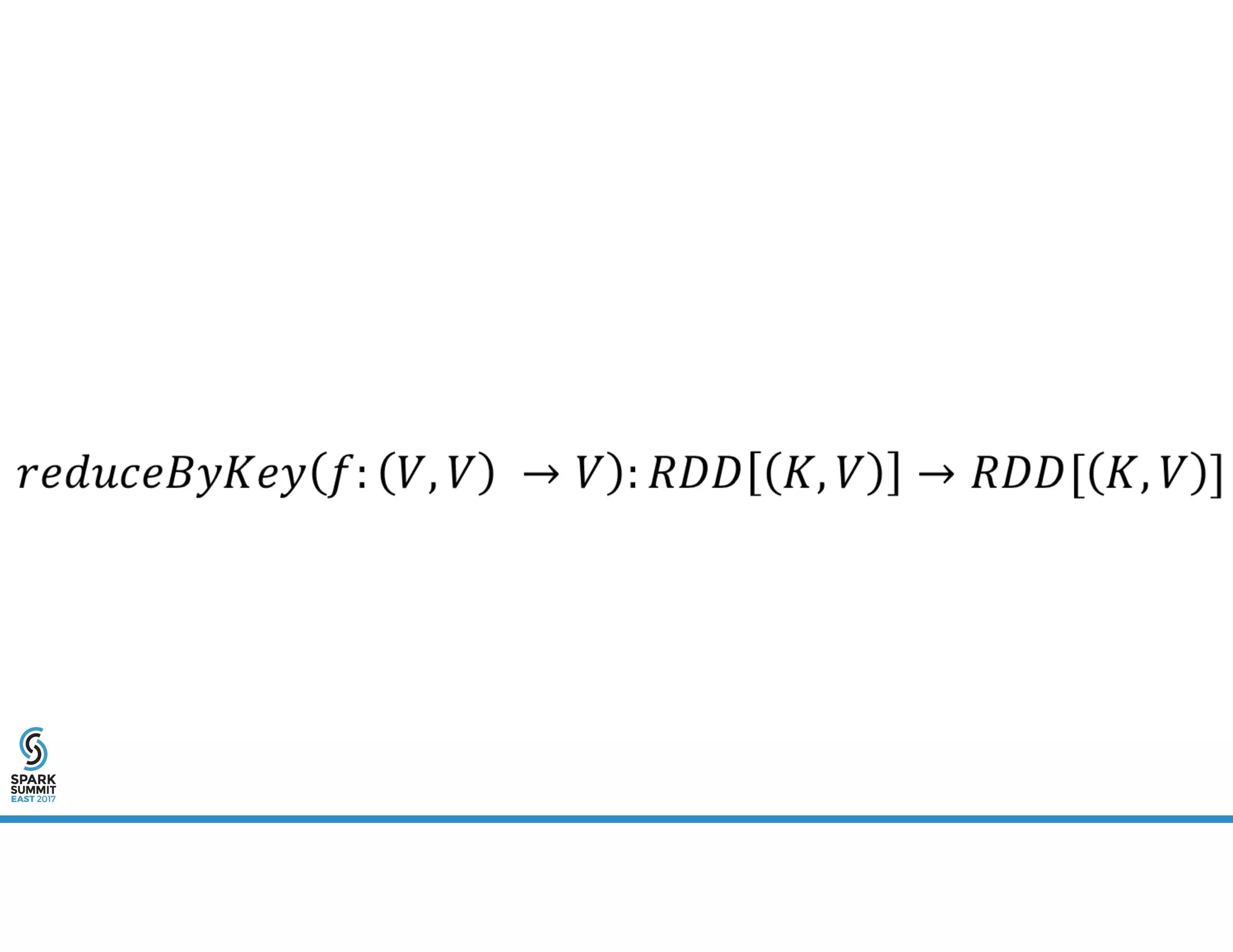

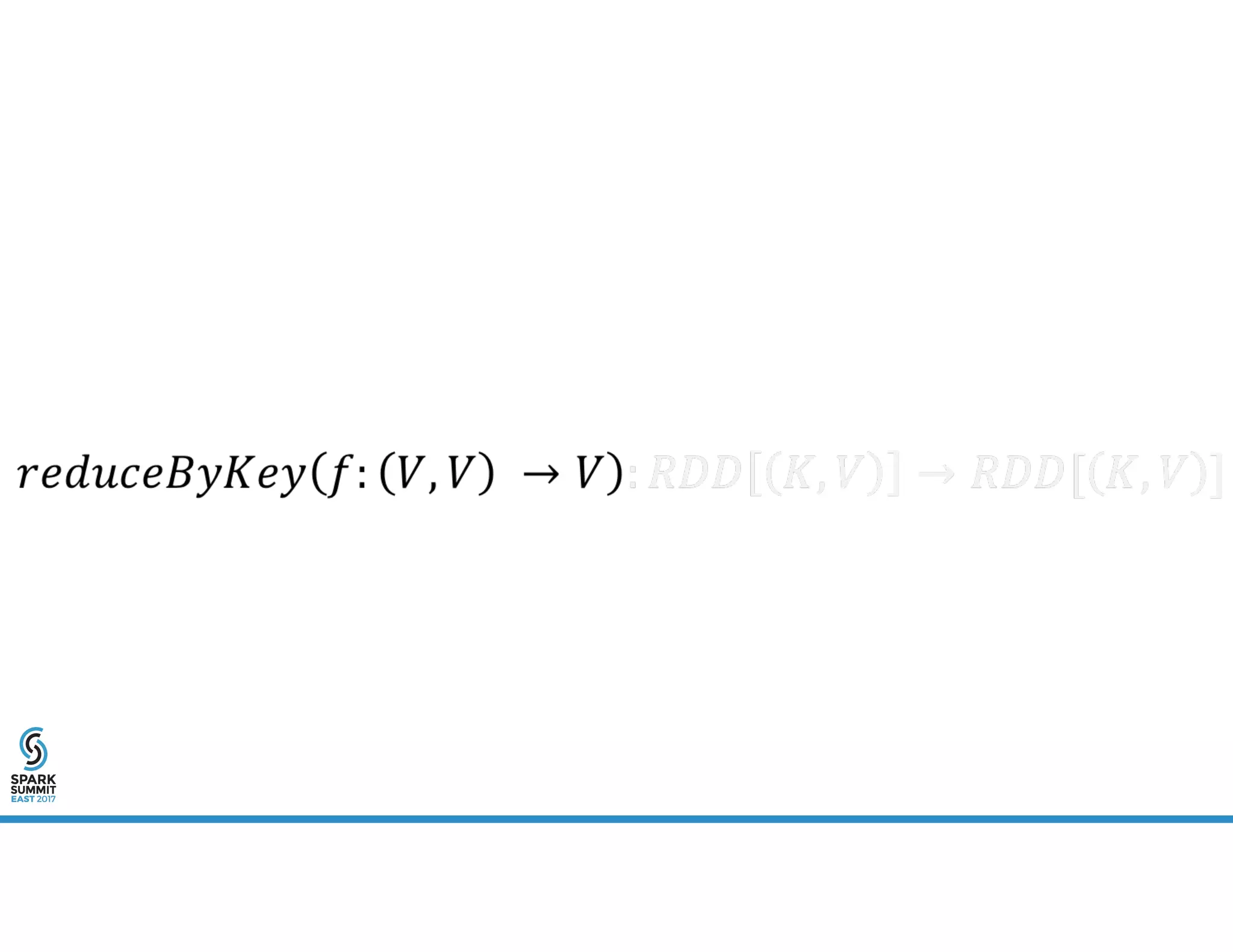

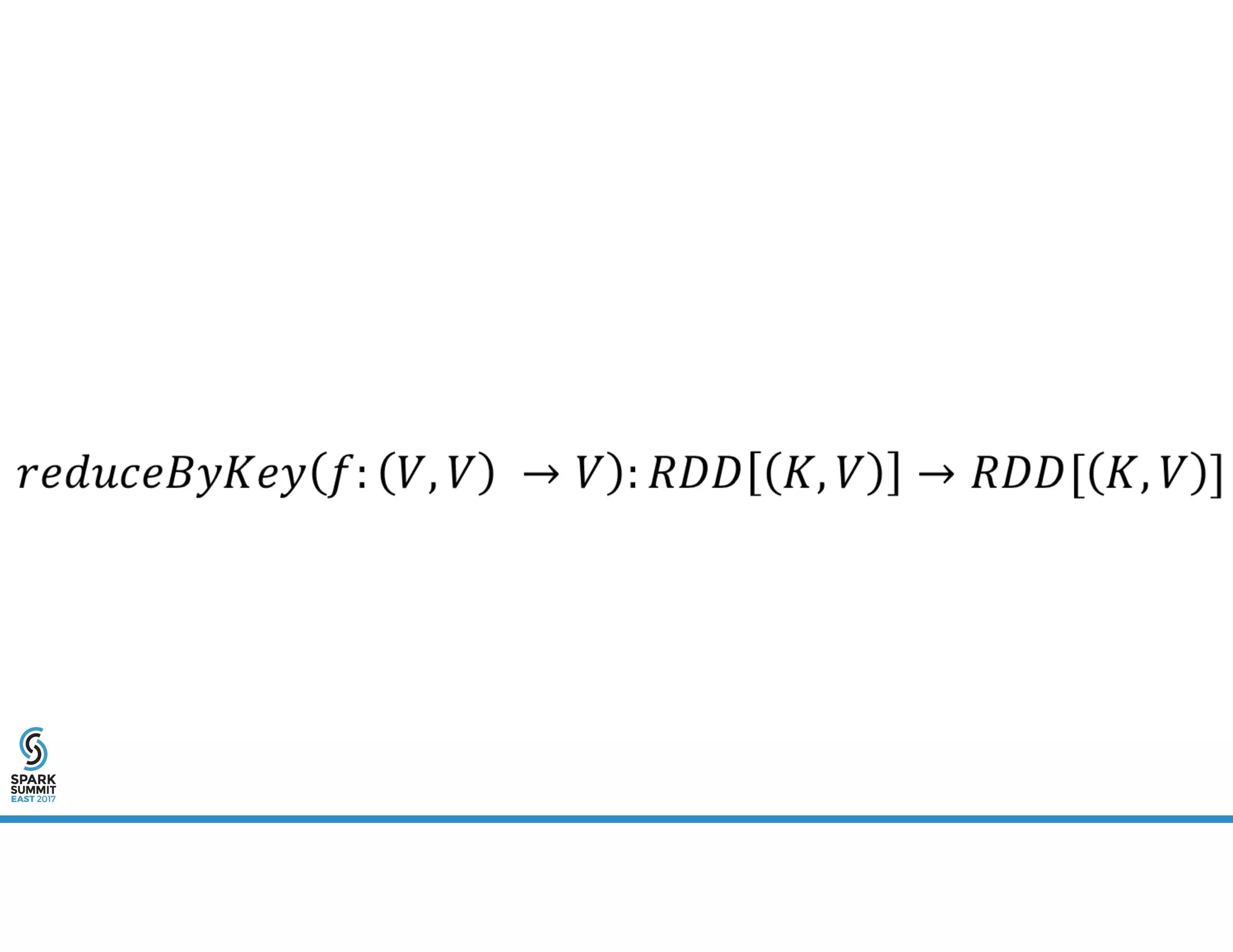

![reduceByWindow(f: (V, V) => V, w): RDD[(K, V)] => RDD[(K, V)]](https://image.slidesharecdn.com/1adavidpalaitis-170214185024/75/New-Directions-in-pySpark-for-Time-Series-Analysis-Spark-Summit-East-talk-by-David-Palaitis-42-2048.jpg)
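One way to picture `reduceByWindow(f, w)` is as a fold of `f` over the values that land in each window. The sketch below uses plain Python lists in place of RDDs and assumes tumbling windows aligned to the Unix epoch. The name `reduce_by_window` and the sample data are hypothetical, and the code illustrates the signature on the slide rather than the implementation behind it.

```python
from datetime import datetime, timedelta


def reduce_by_window(f, records, window):
    """Combine the values in each tumbling window with the binary function `f`.

    Hypothetical helper: returns a list of (window_start, reduced_value)
    pairs sorted by window_start.
    """
    epoch = datetime(1970, 1, 1)
    reduced = {}
    for ts, value in records:
        # Floor the timestamp to the start of the window that contains it.
        start = epoch + ((ts - epoch) // window) * window
        # The first value in a window seeds the fold; later ones are combined.
        reduced[start] = f(reduced[start], value) if start in reduced else value
    return sorted(reduced.items())


# Made-up sample data.
ticks = [
    (datetime(2017, 2, 8, 9, 30, 0), 10.0),
    (datetime(2017, 2, 8, 9, 30, 3), 10.5),
    (datetime(2017, 2, 8, 9, 30, 7), 11.0),
]

window = timedelta(seconds=5)
maxima = reduce_by_window(max, ticks, window)
totals = reduce_by_window(lambda a, b: a + b, ticks, window)
```

This sketch folds values in input order. In a distributed setting the order in which partial results are combined is generally not fixed, so `f` would normally need to be associative and commutative, as with `max` and addition above.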

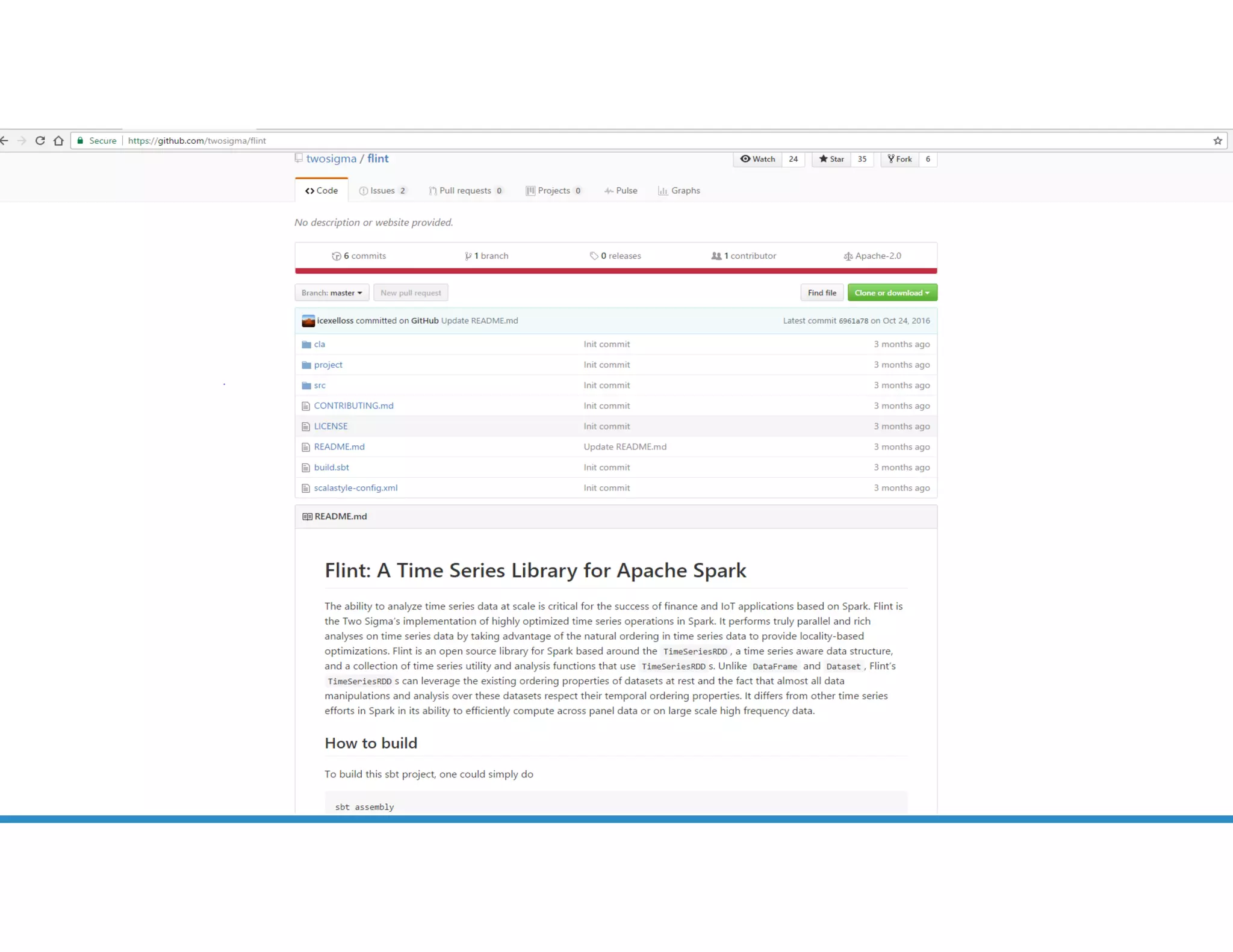

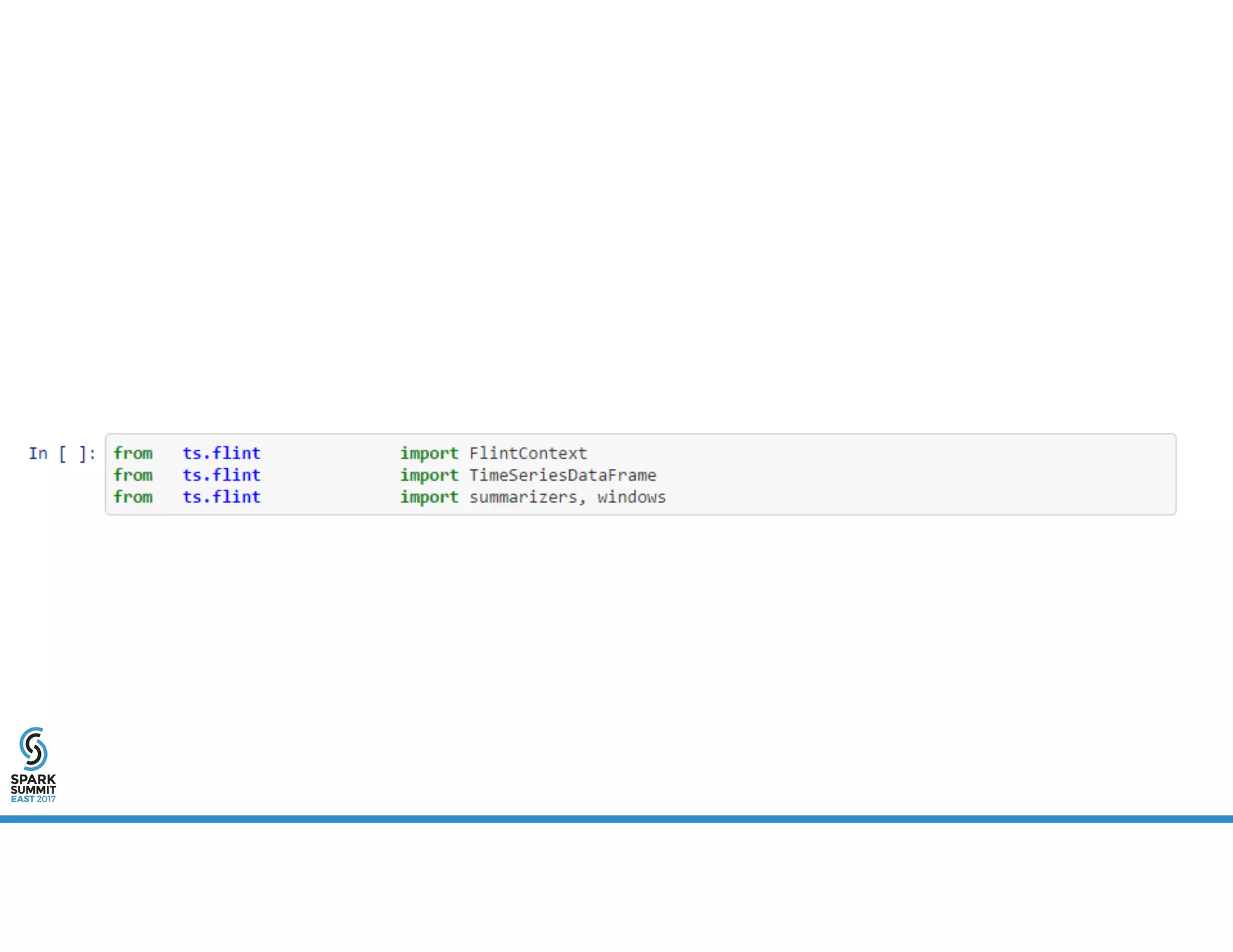

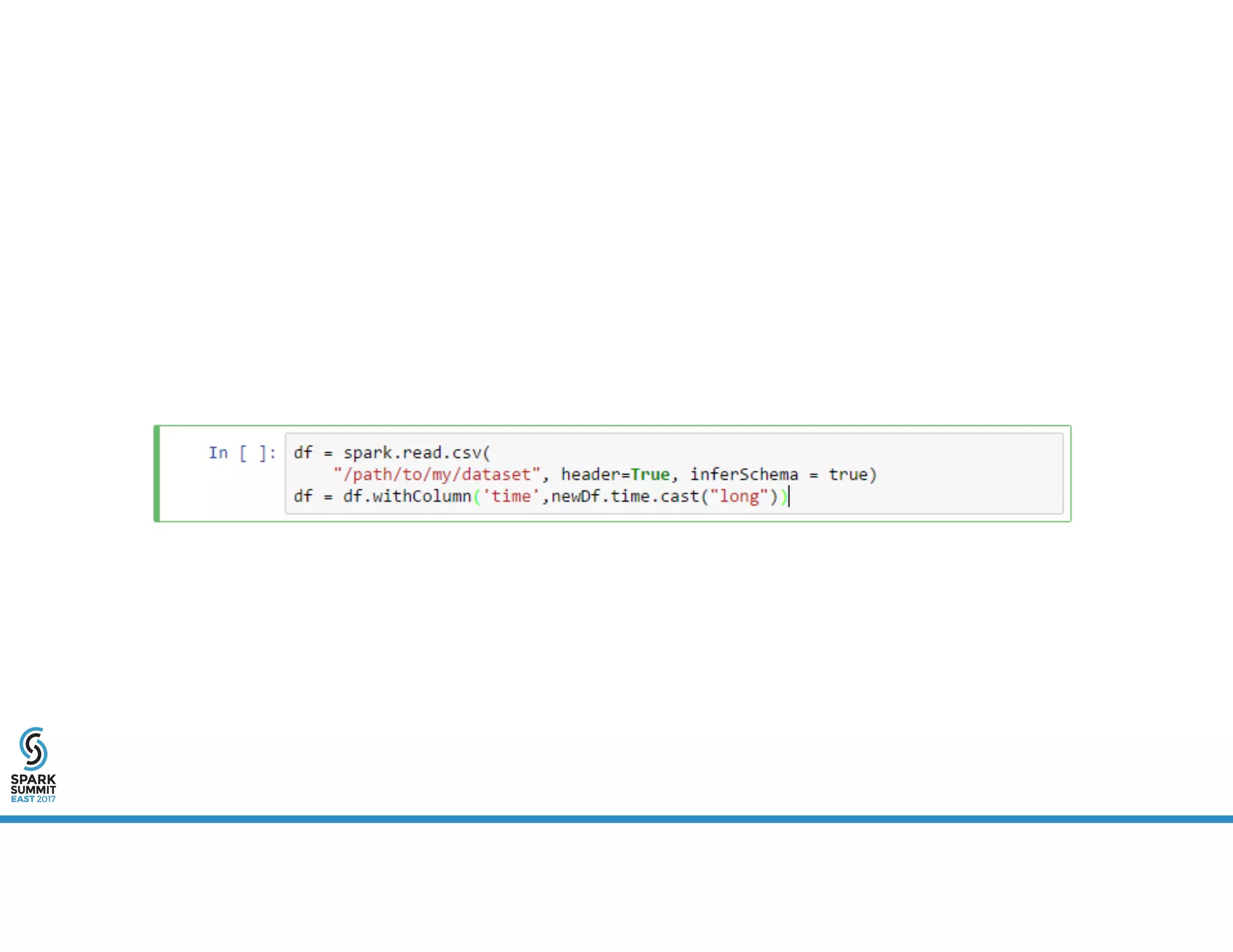

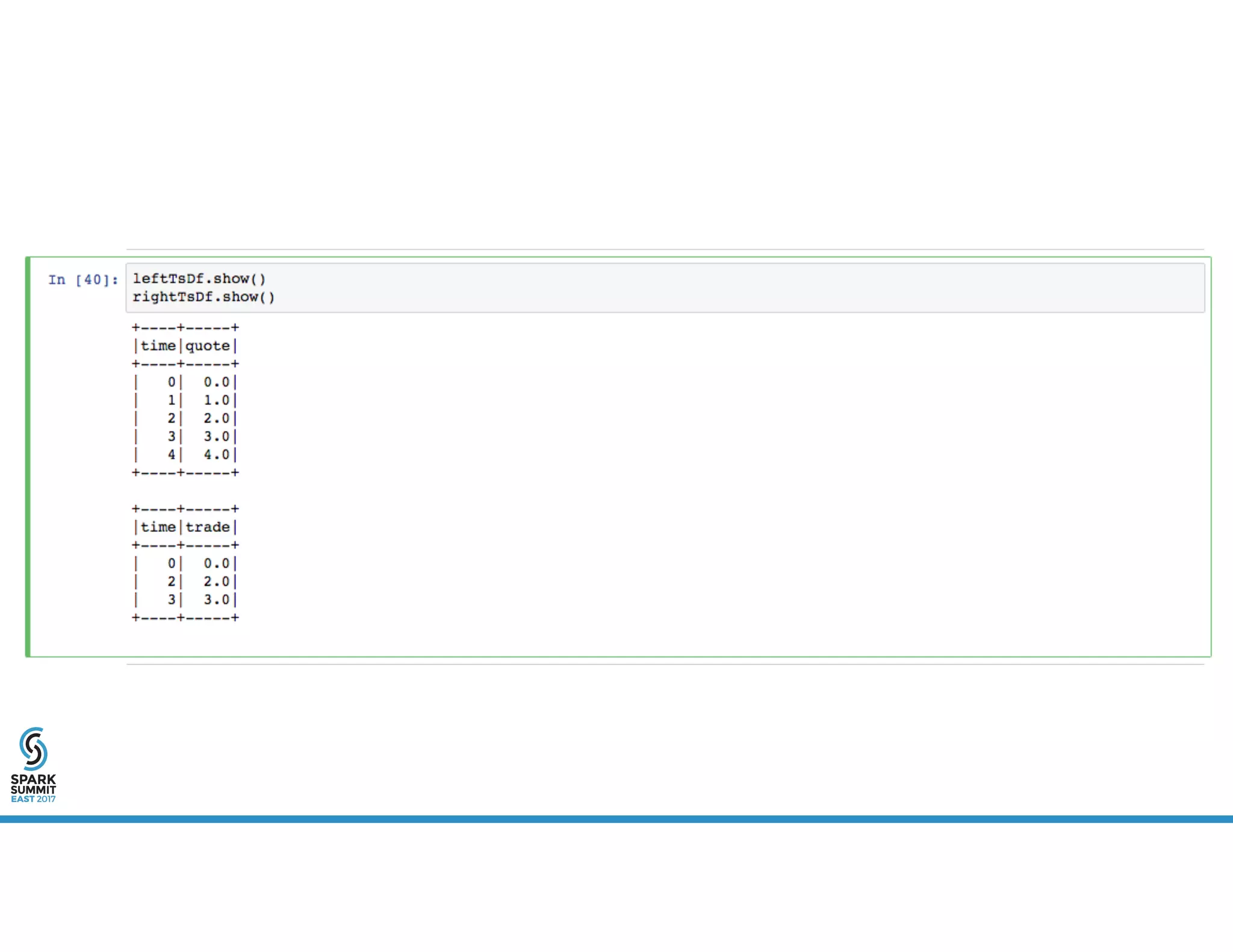

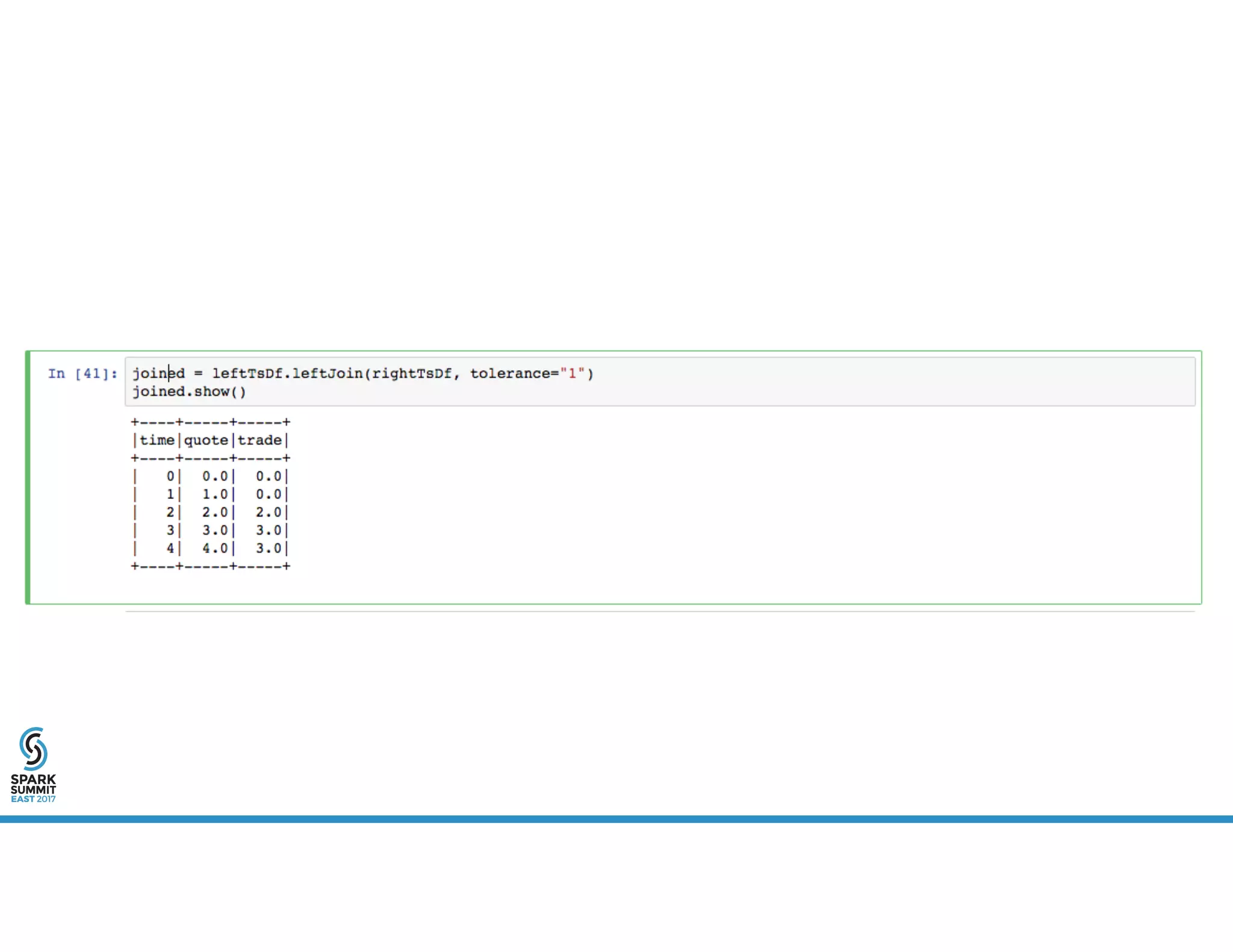

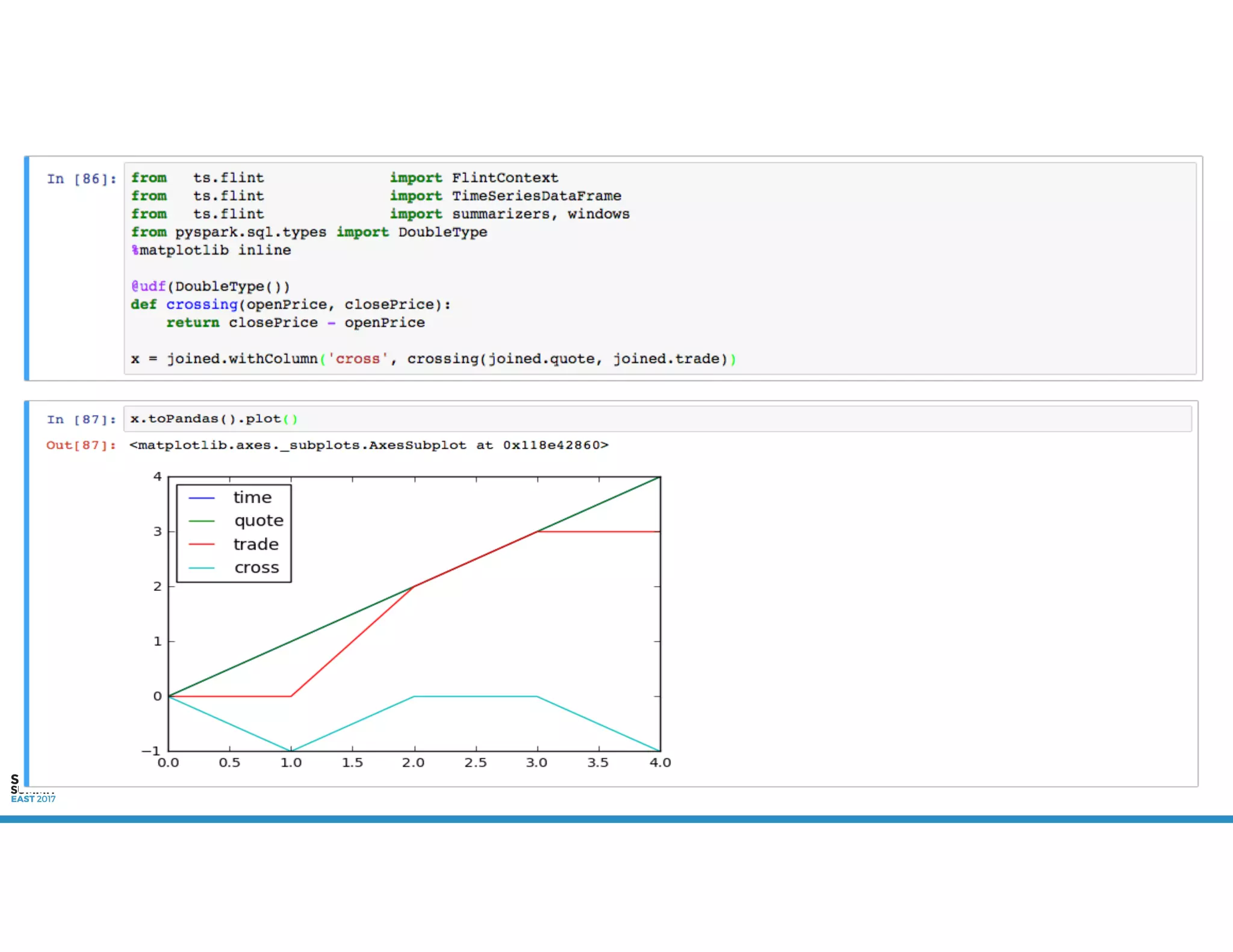

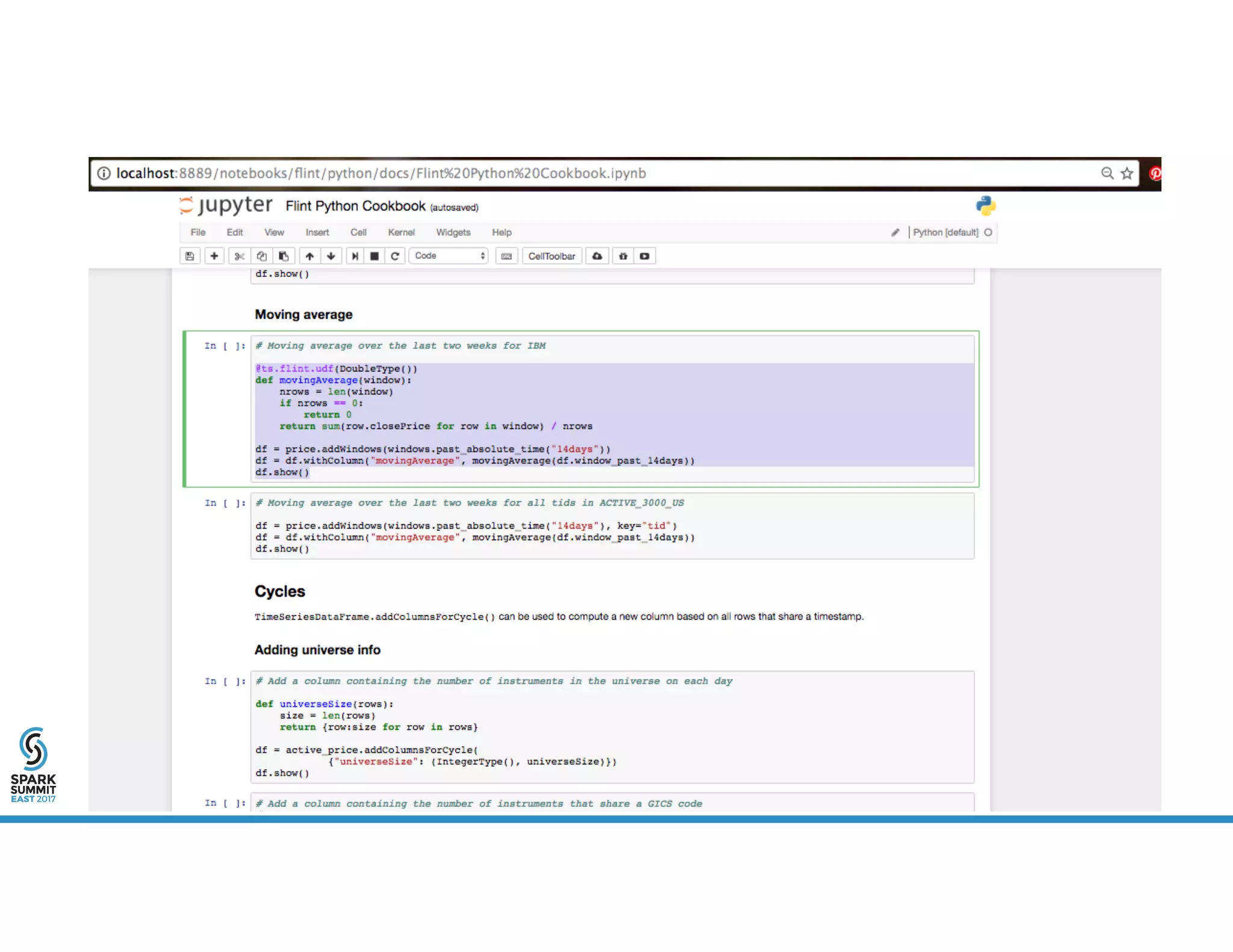

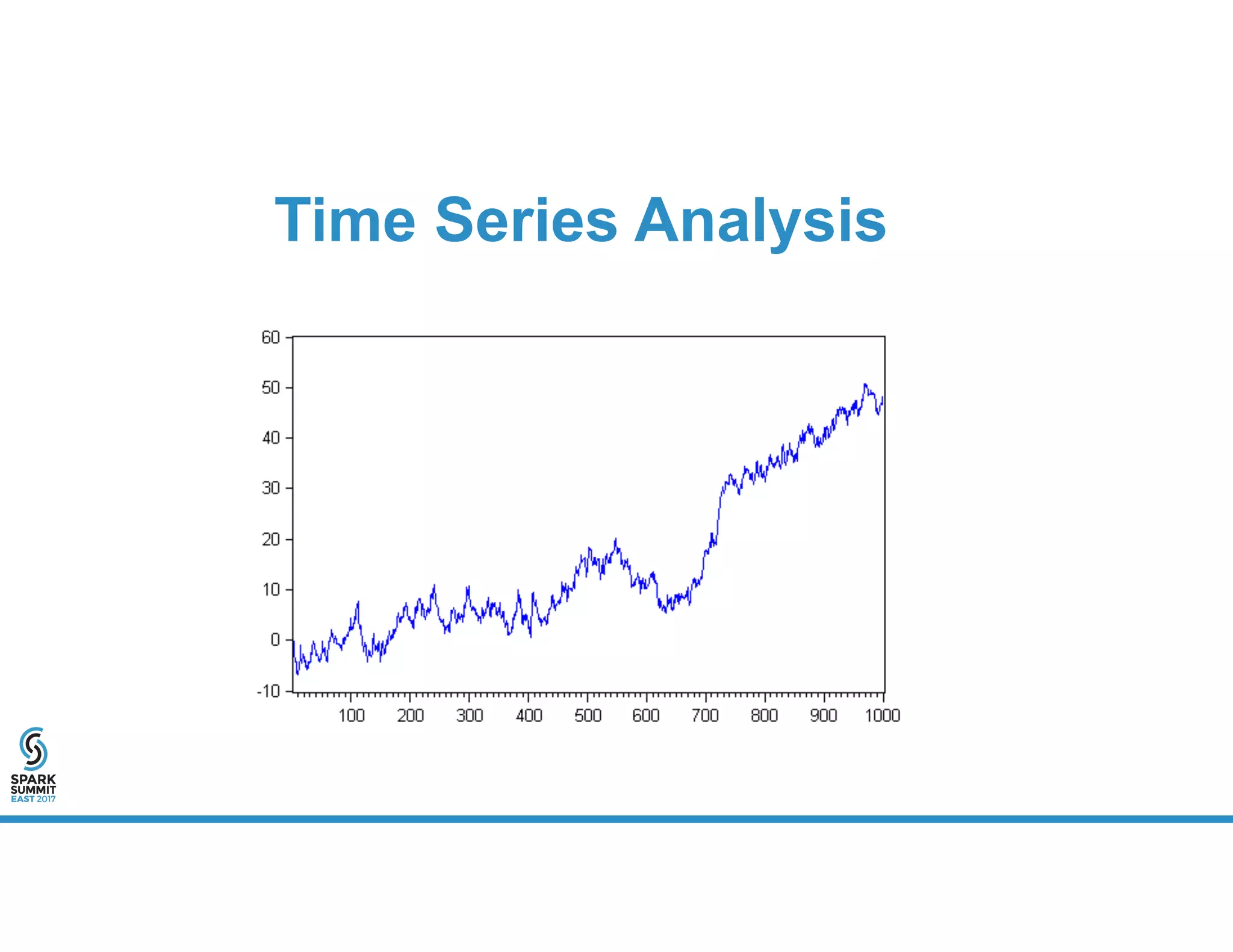

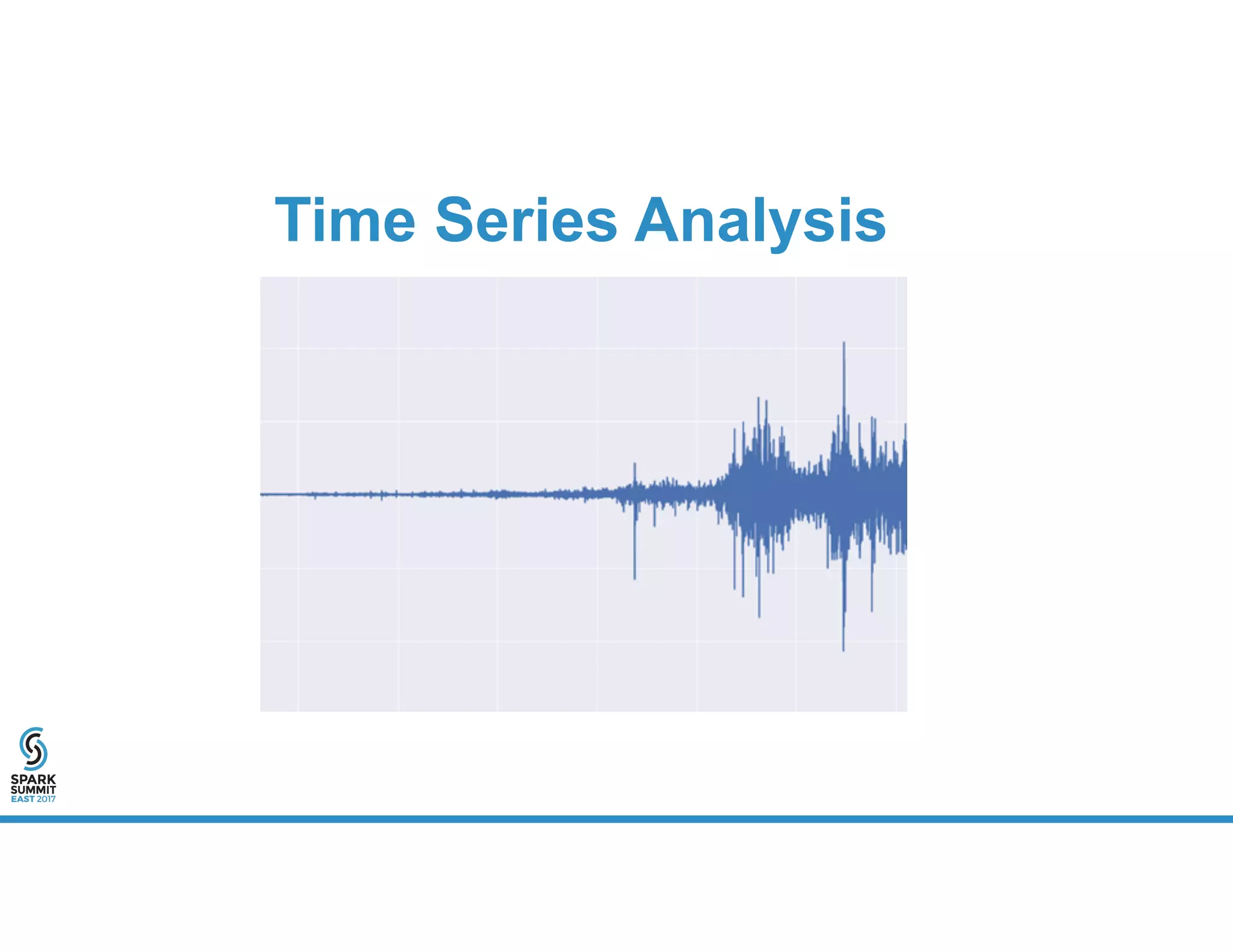

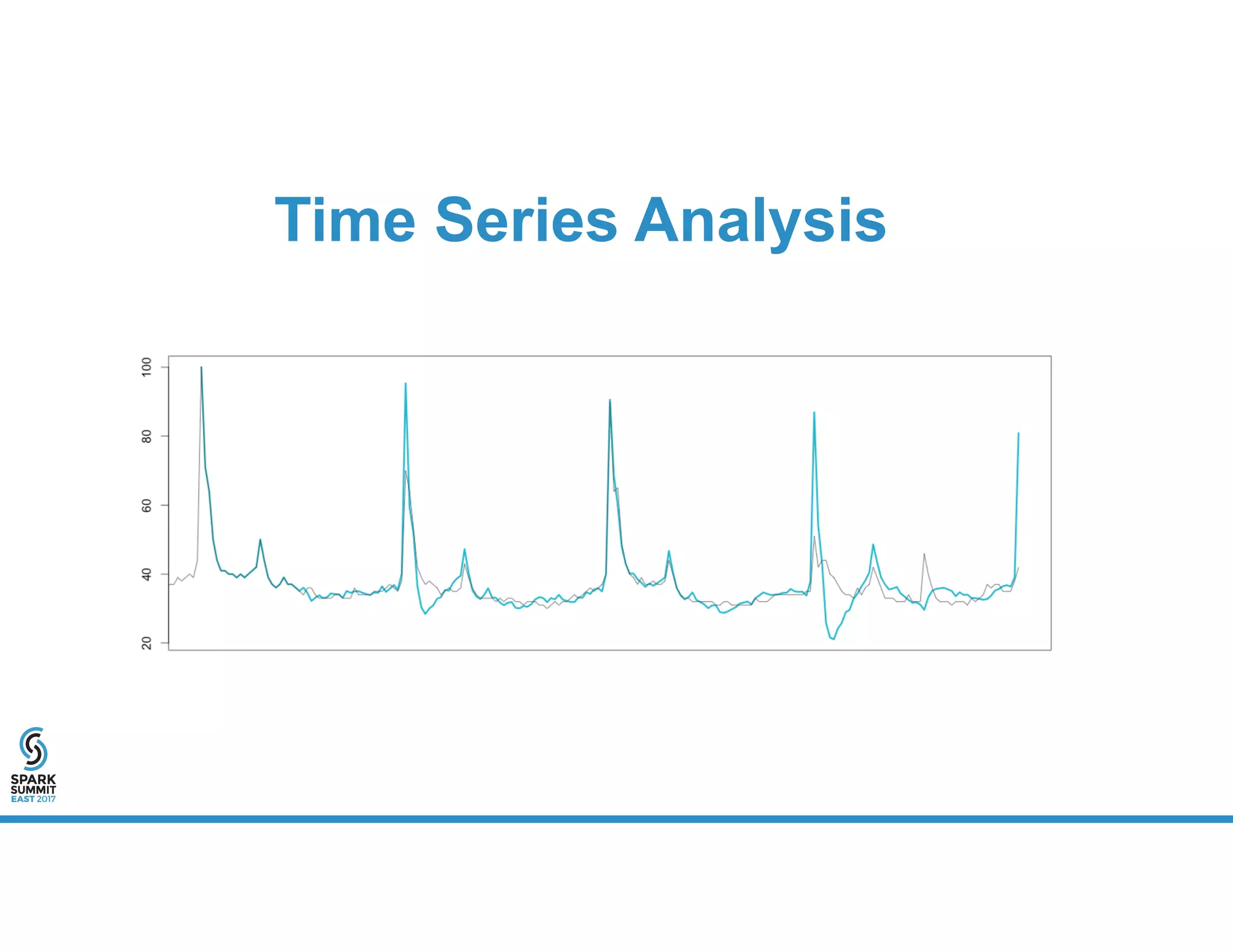

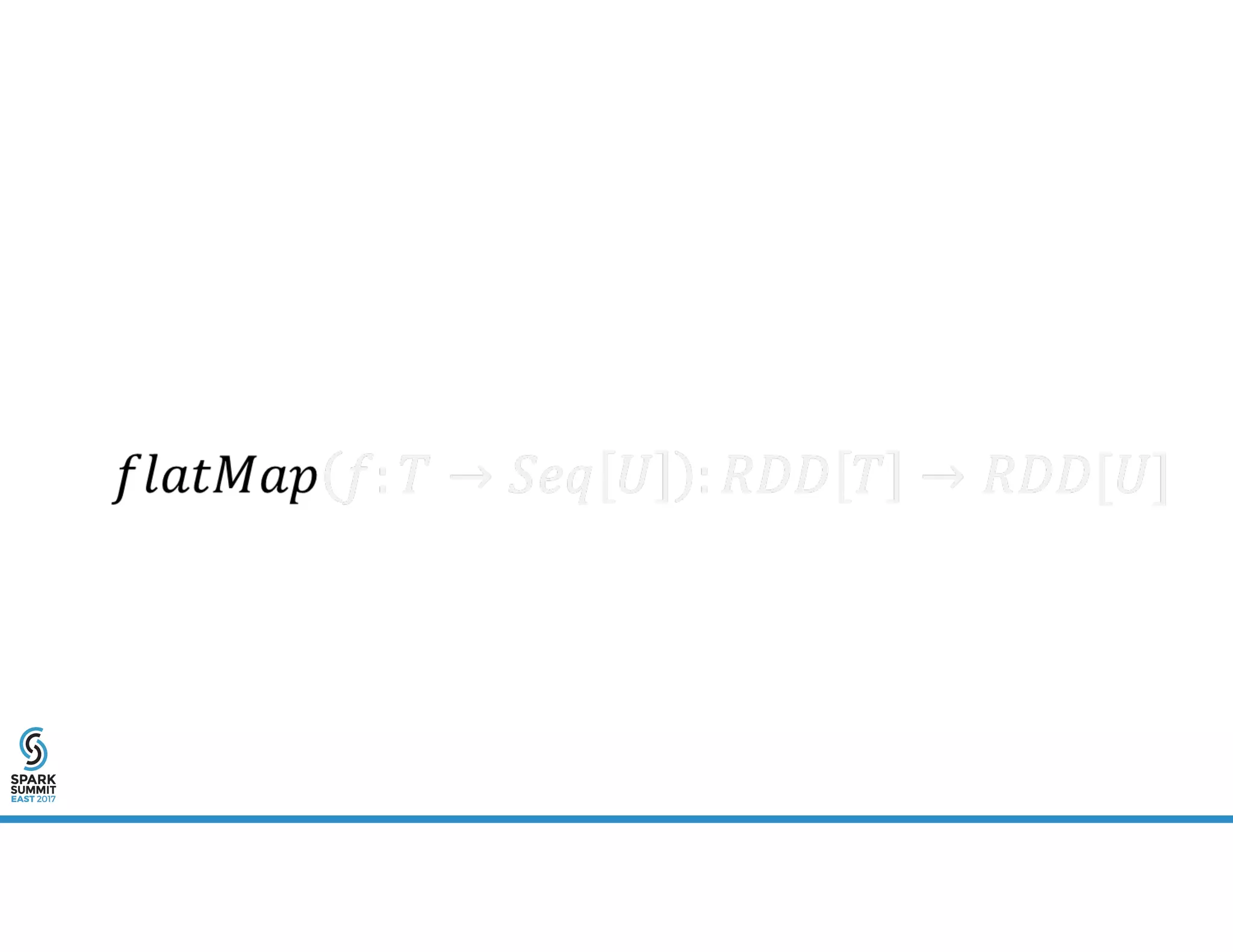

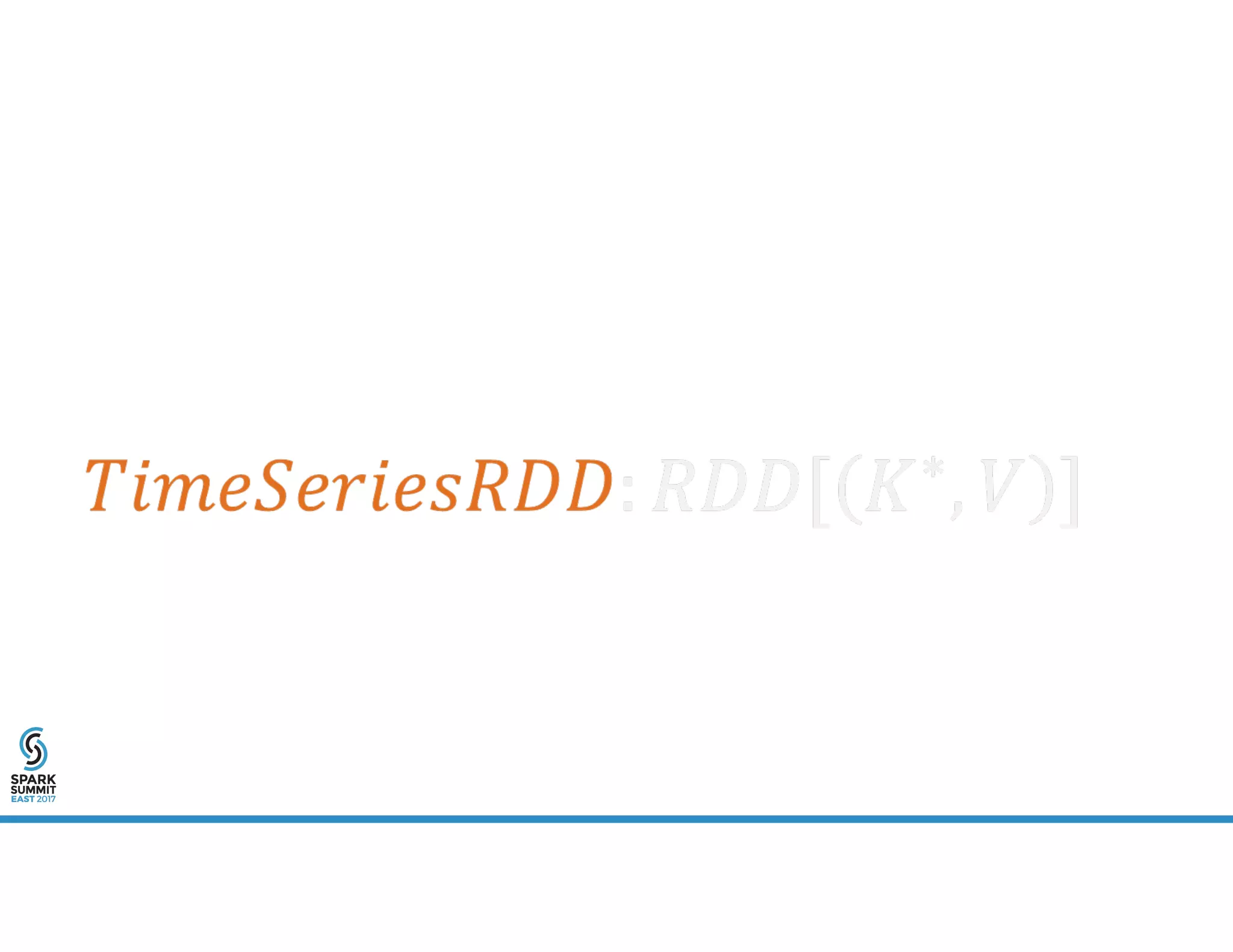

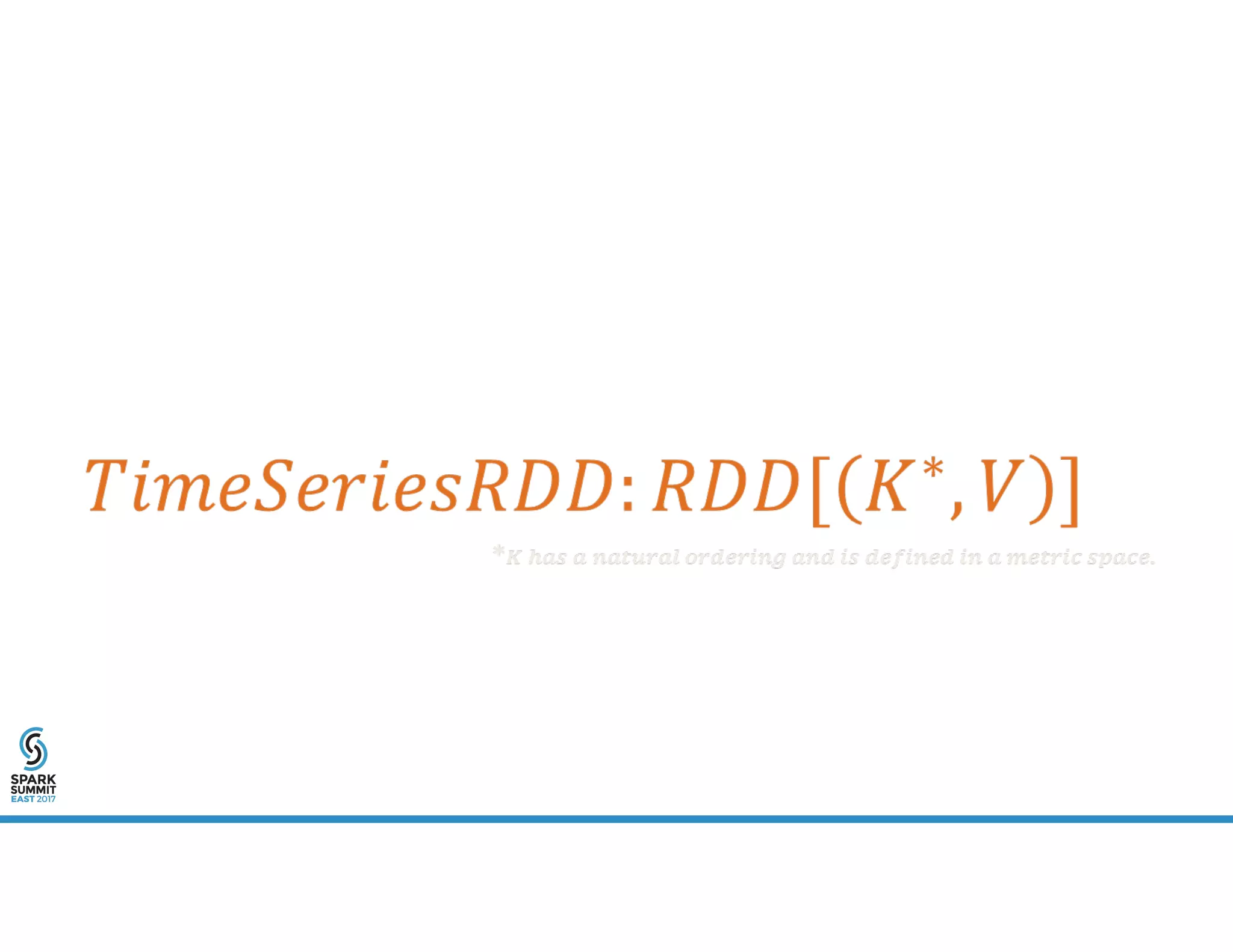

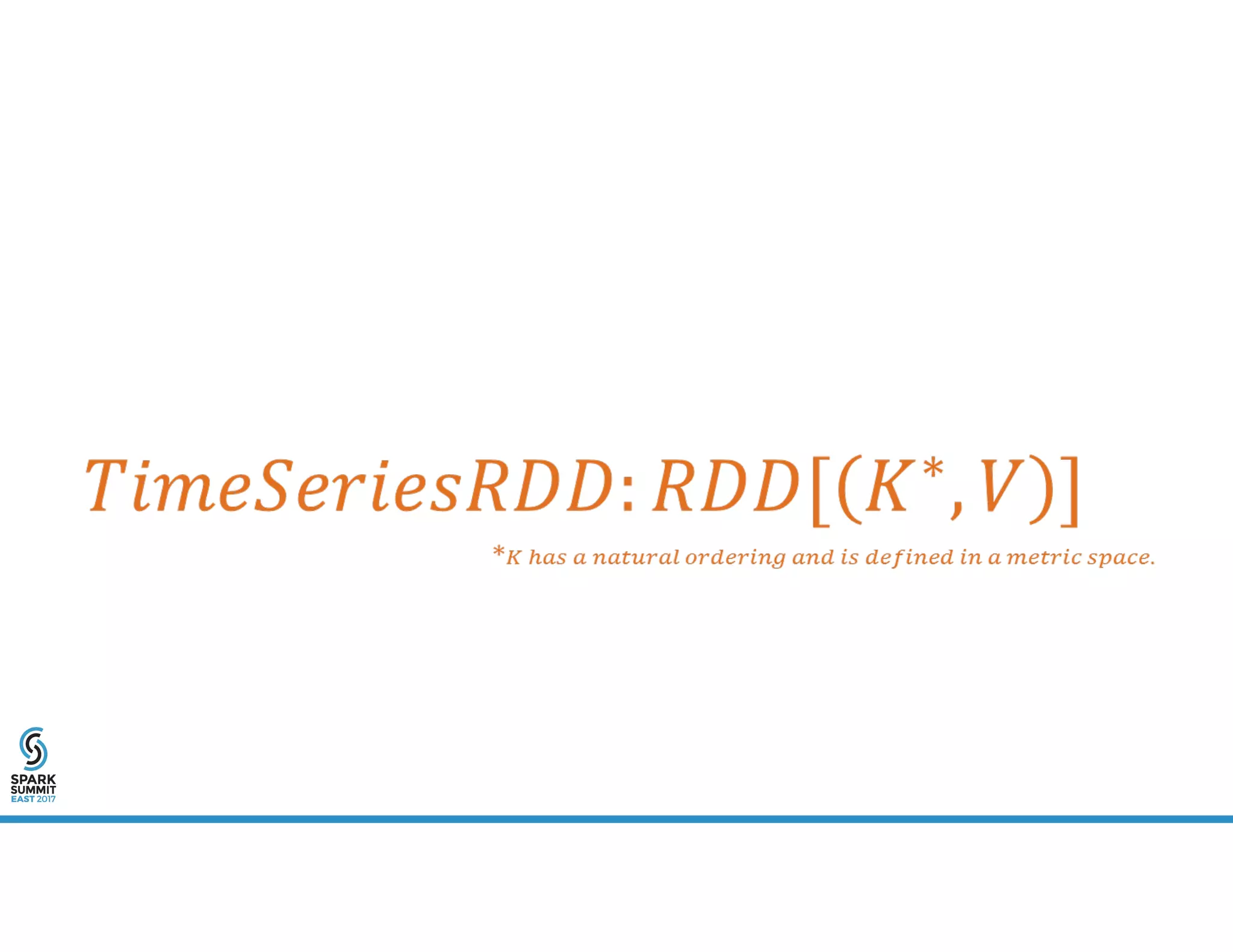

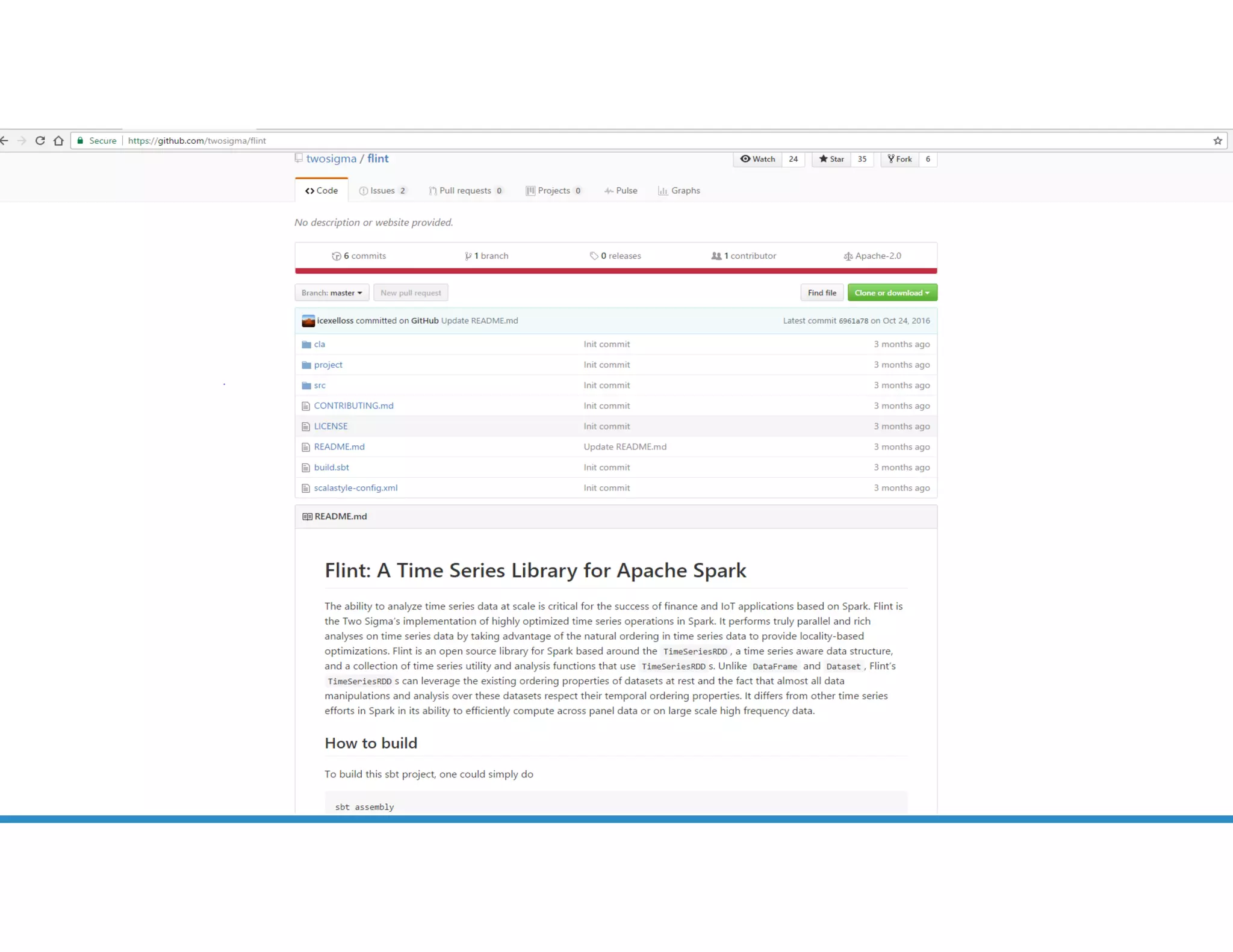

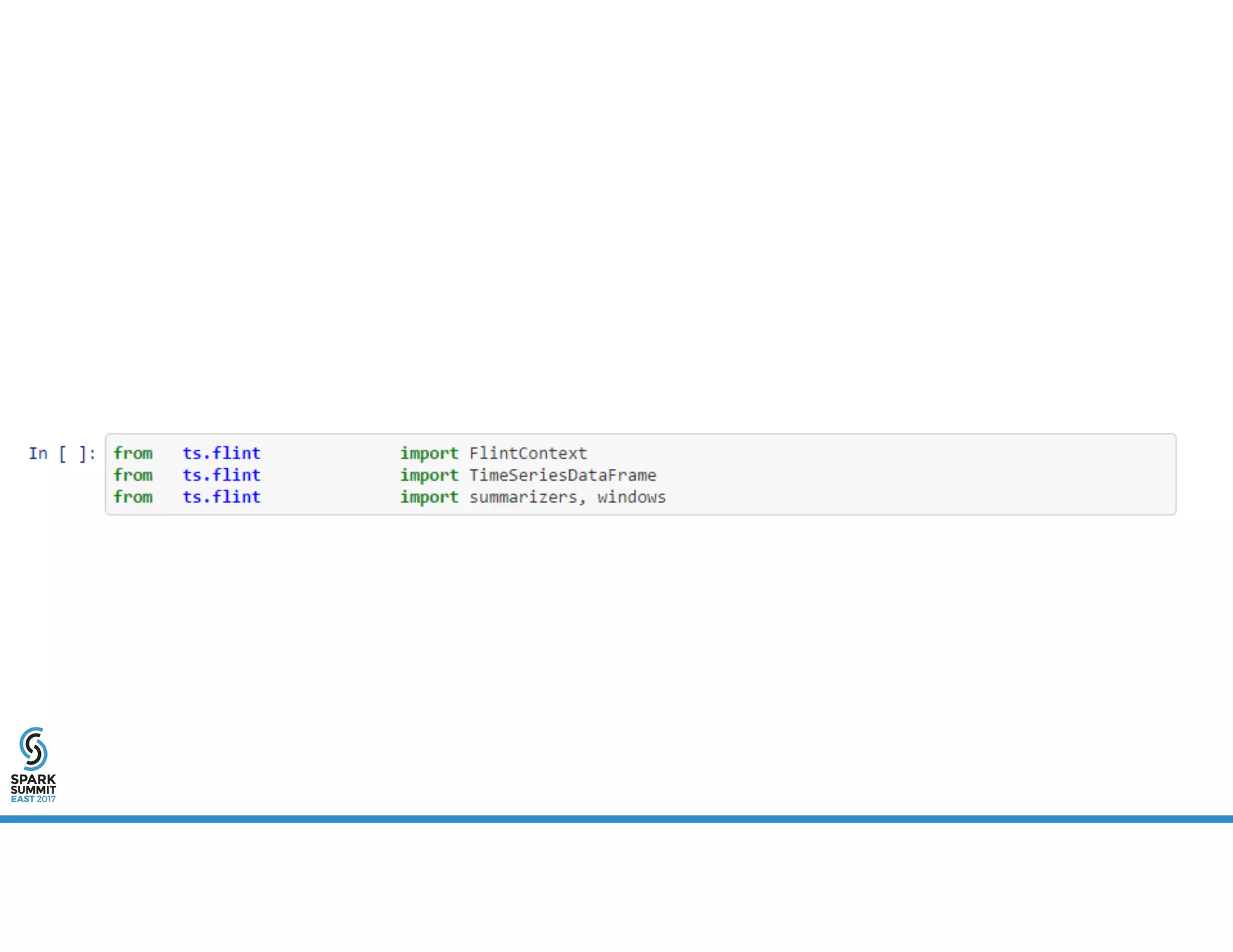

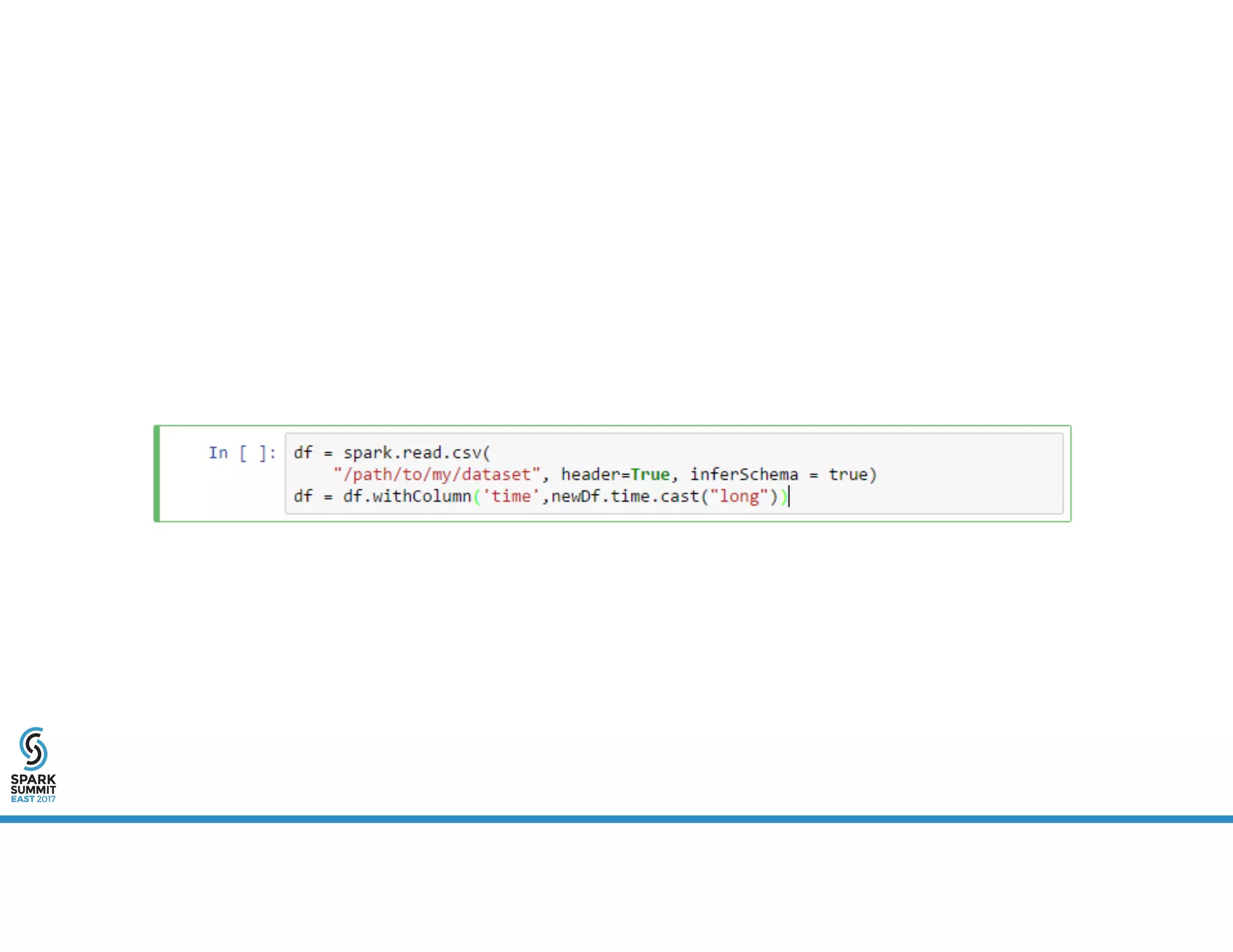

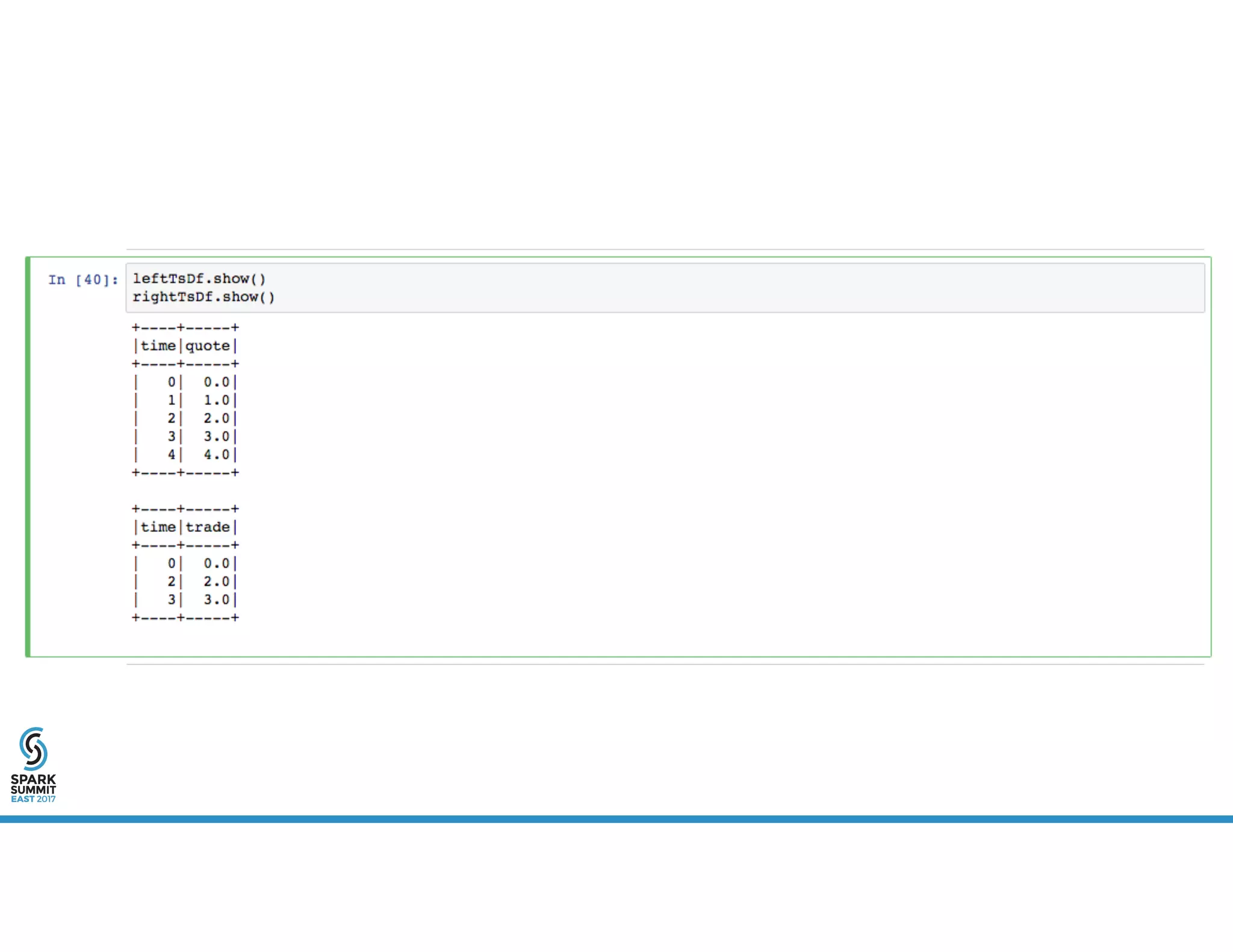

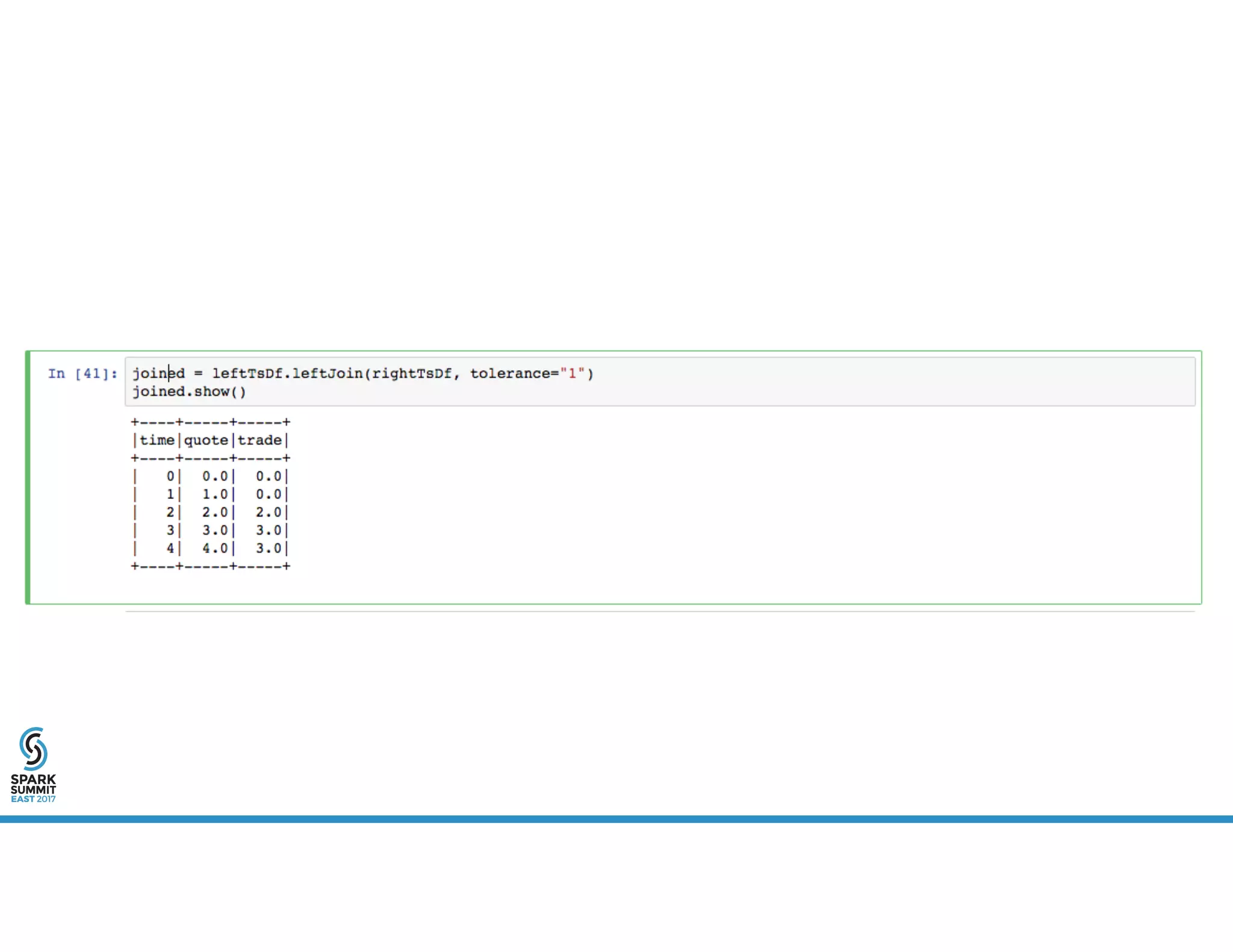

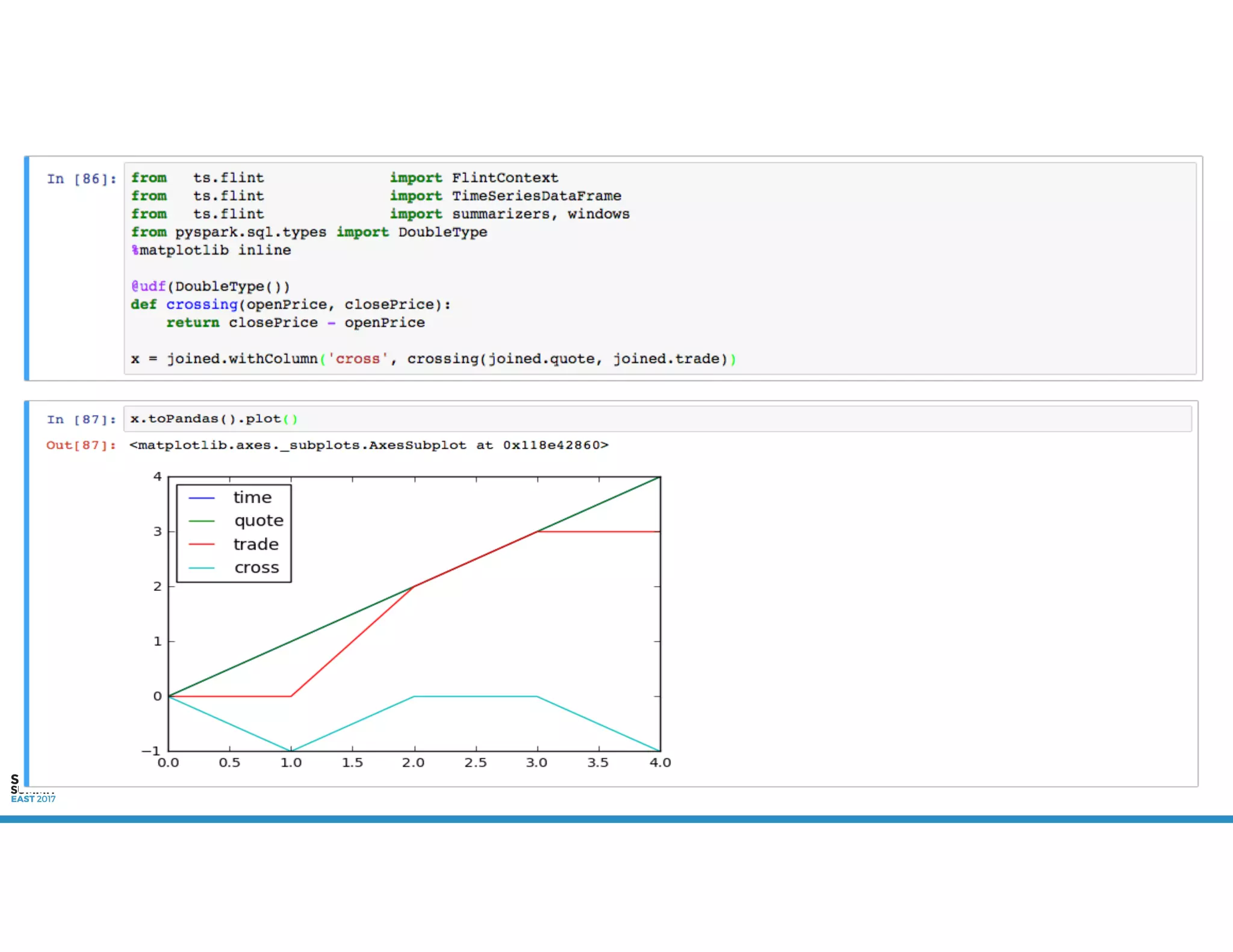

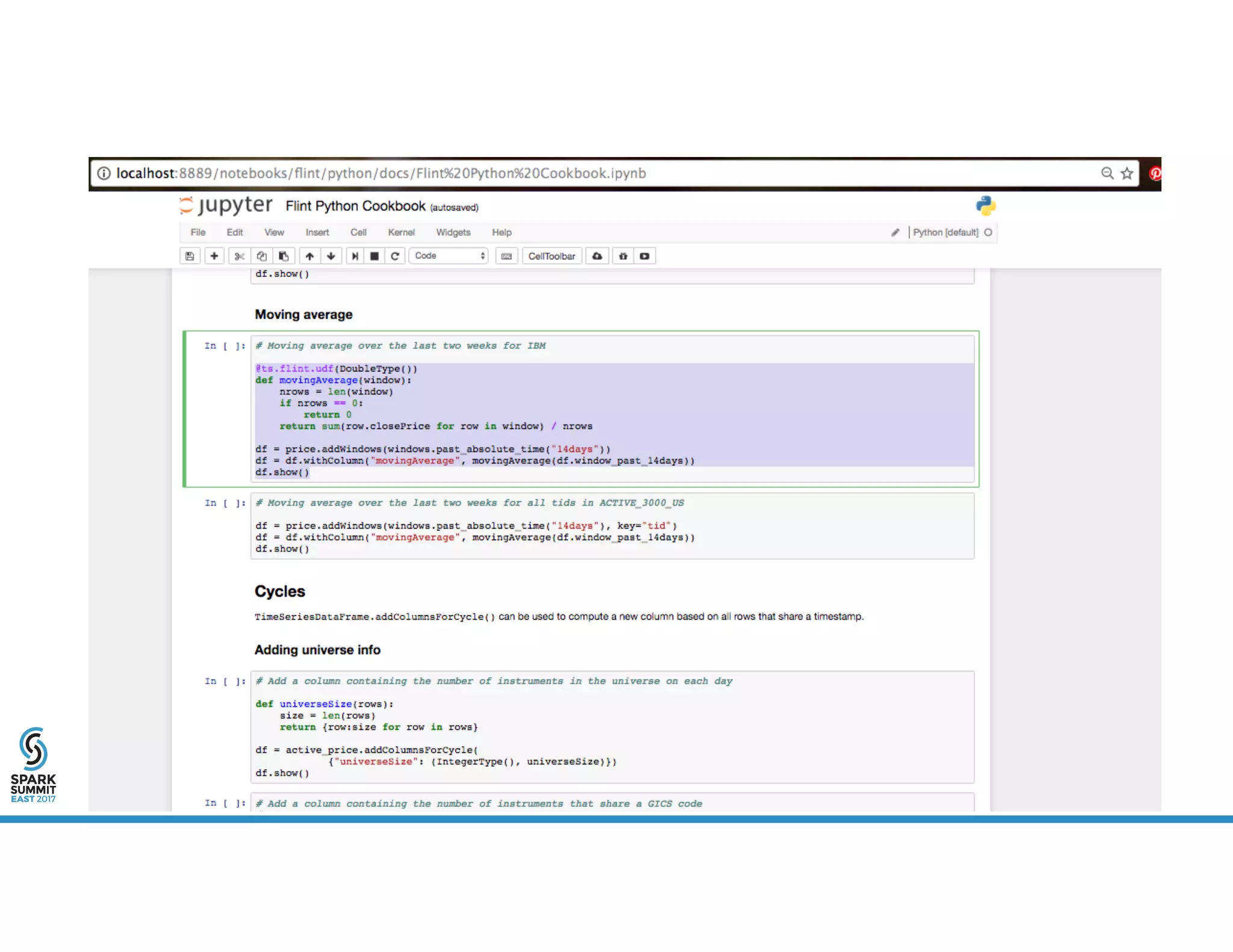

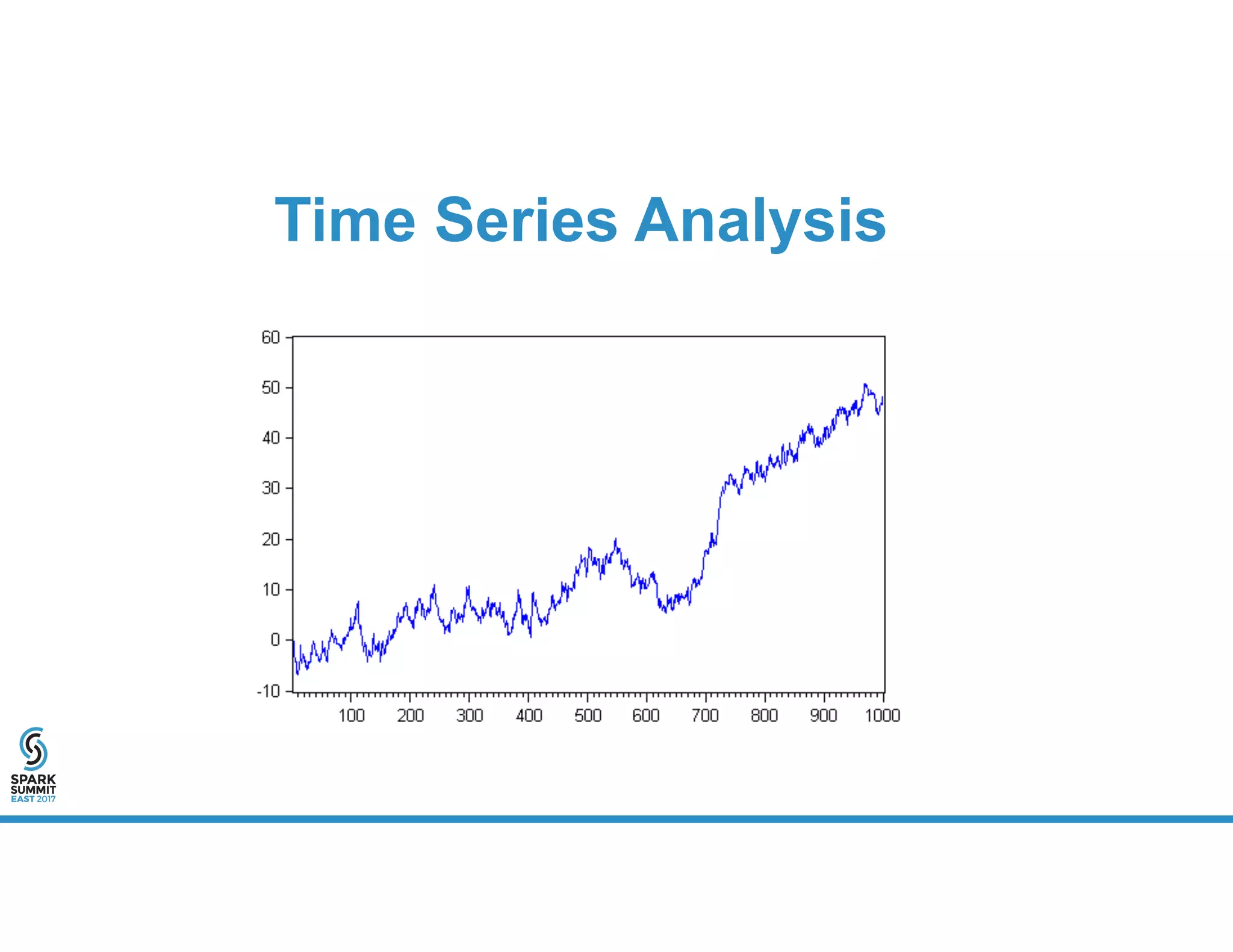

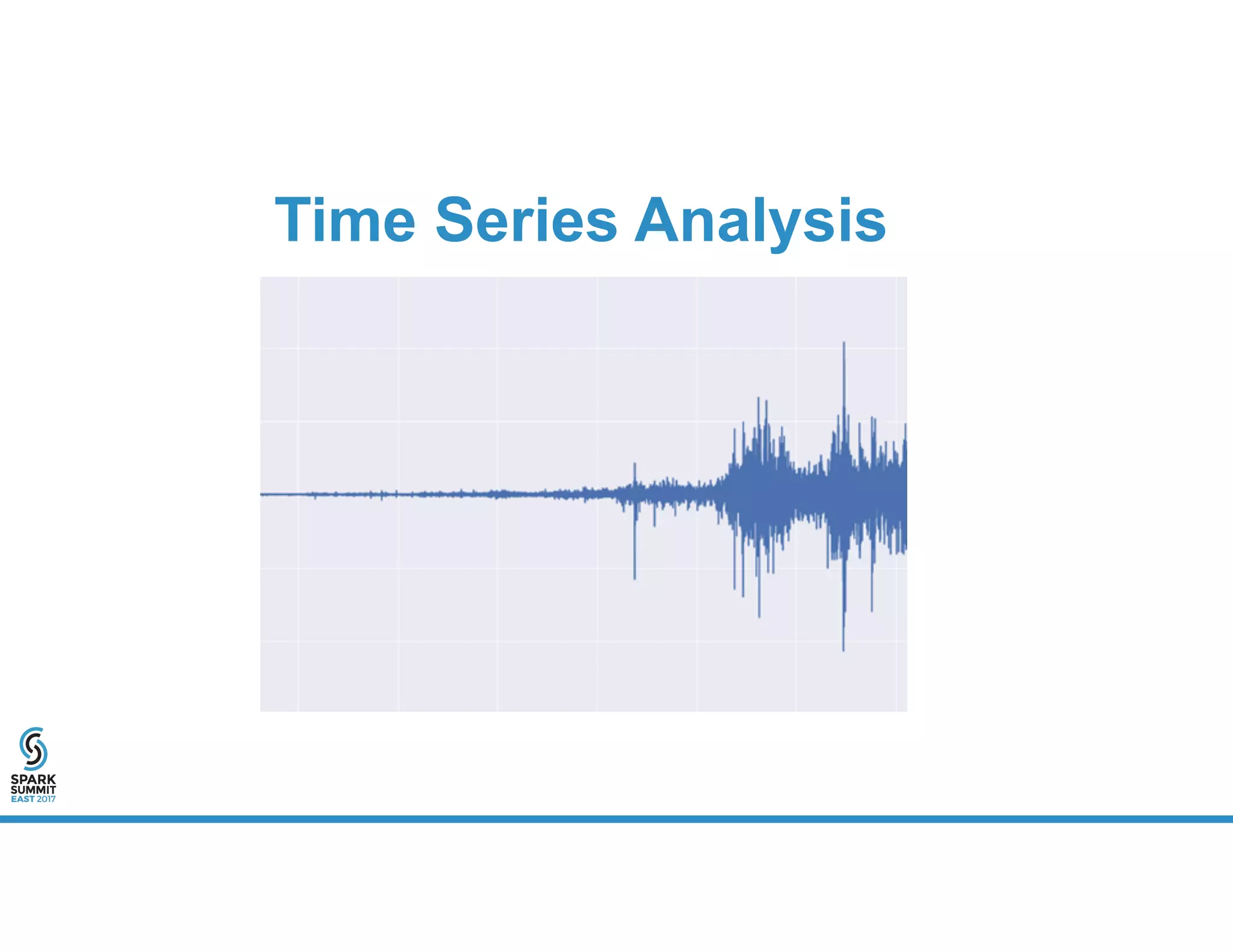

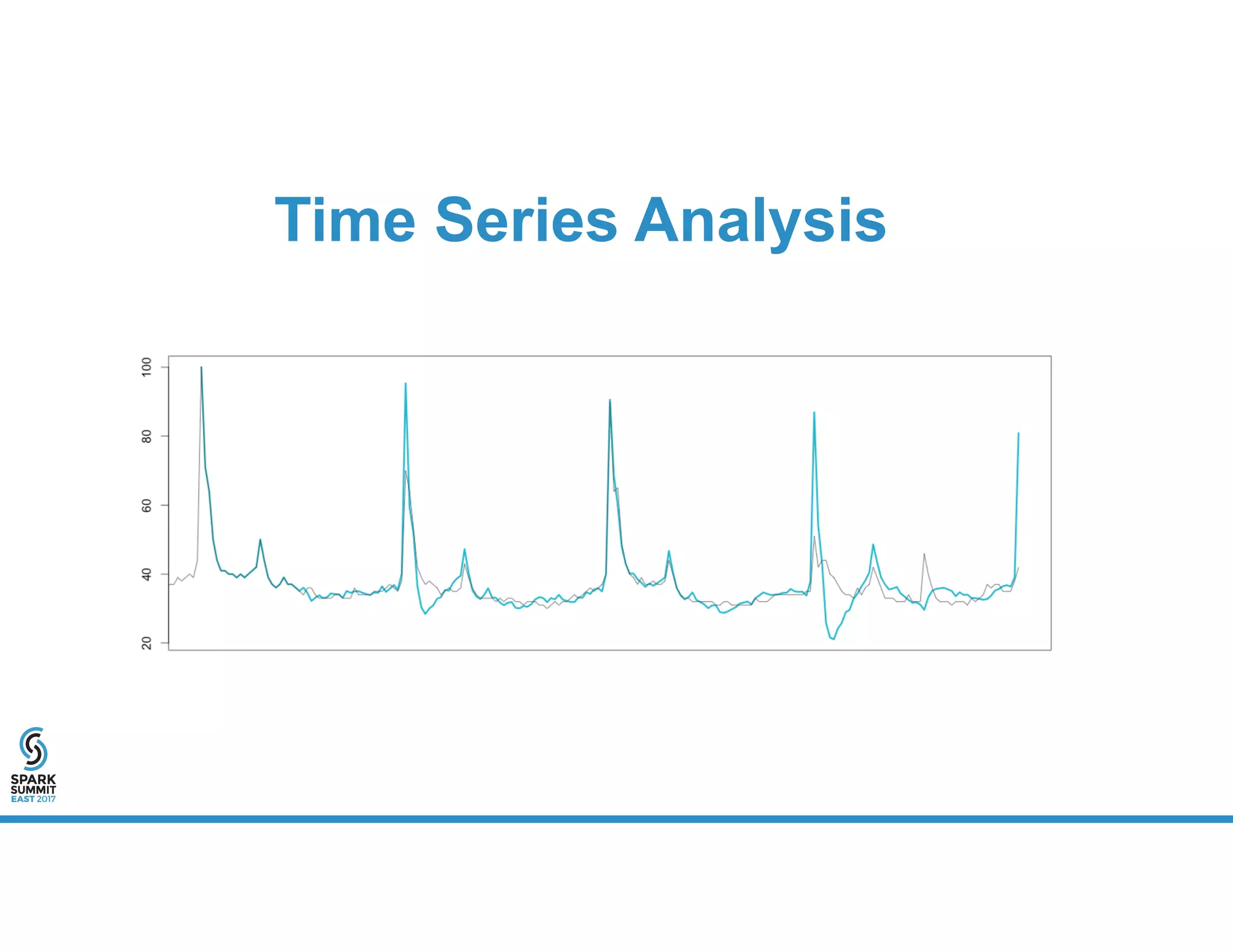

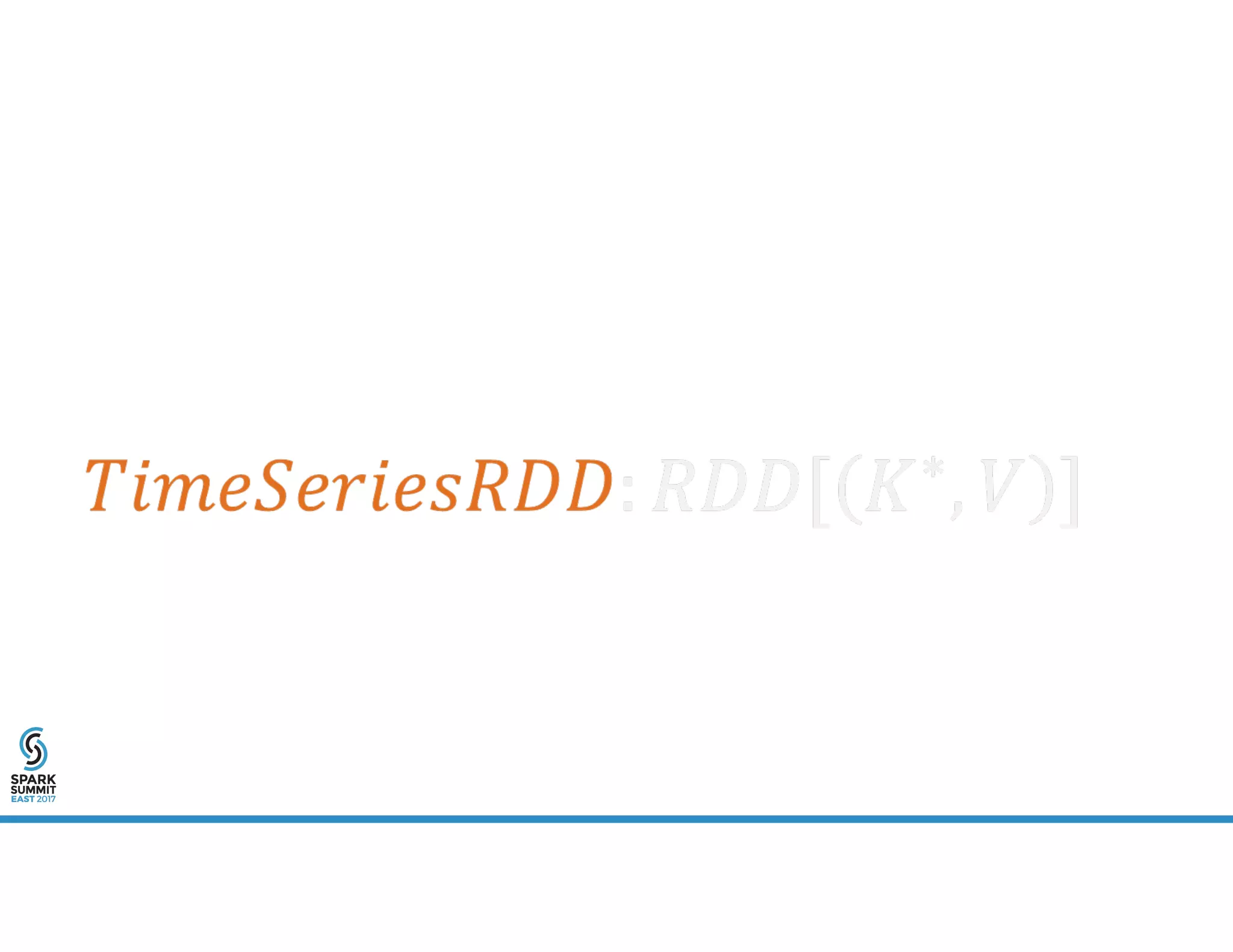

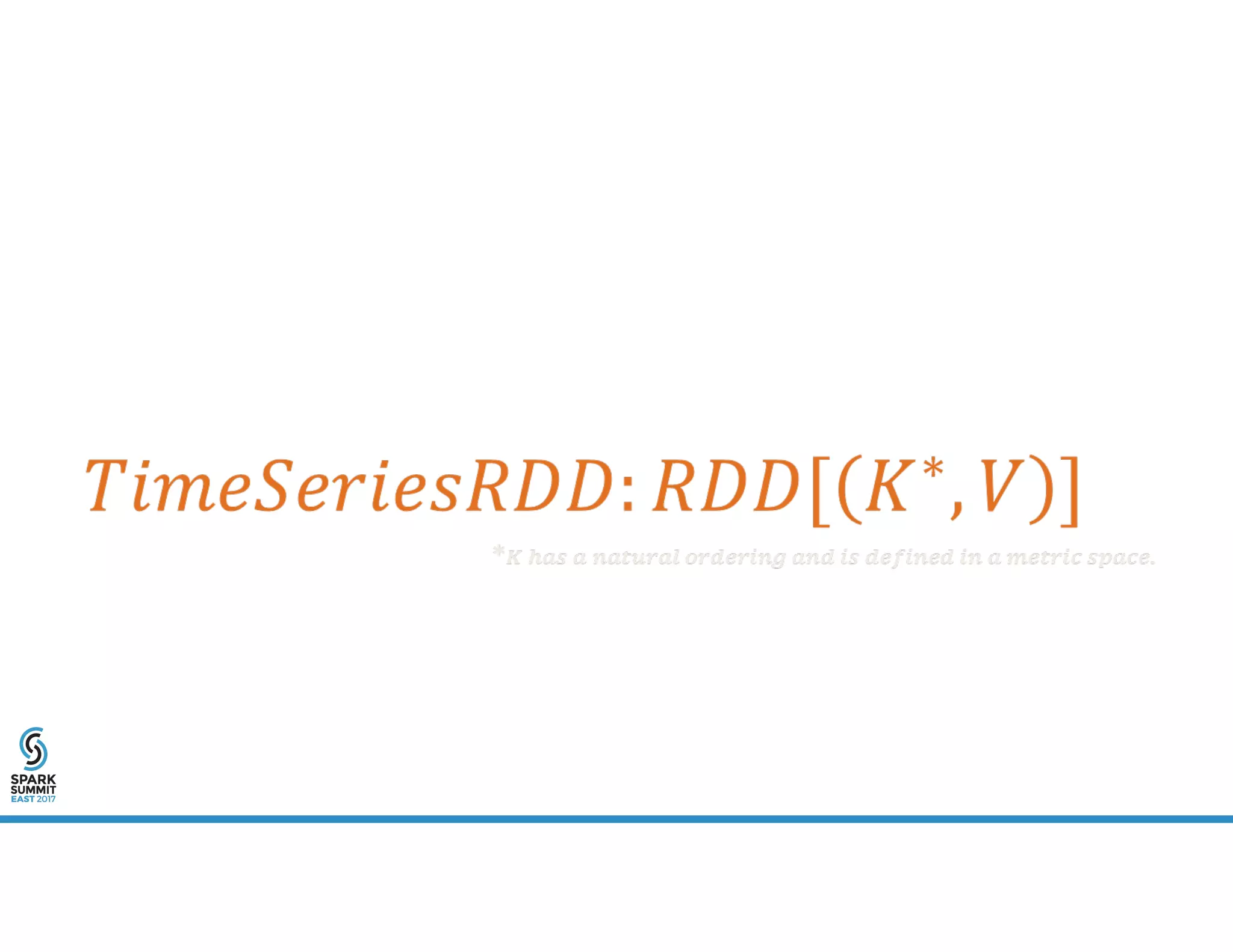

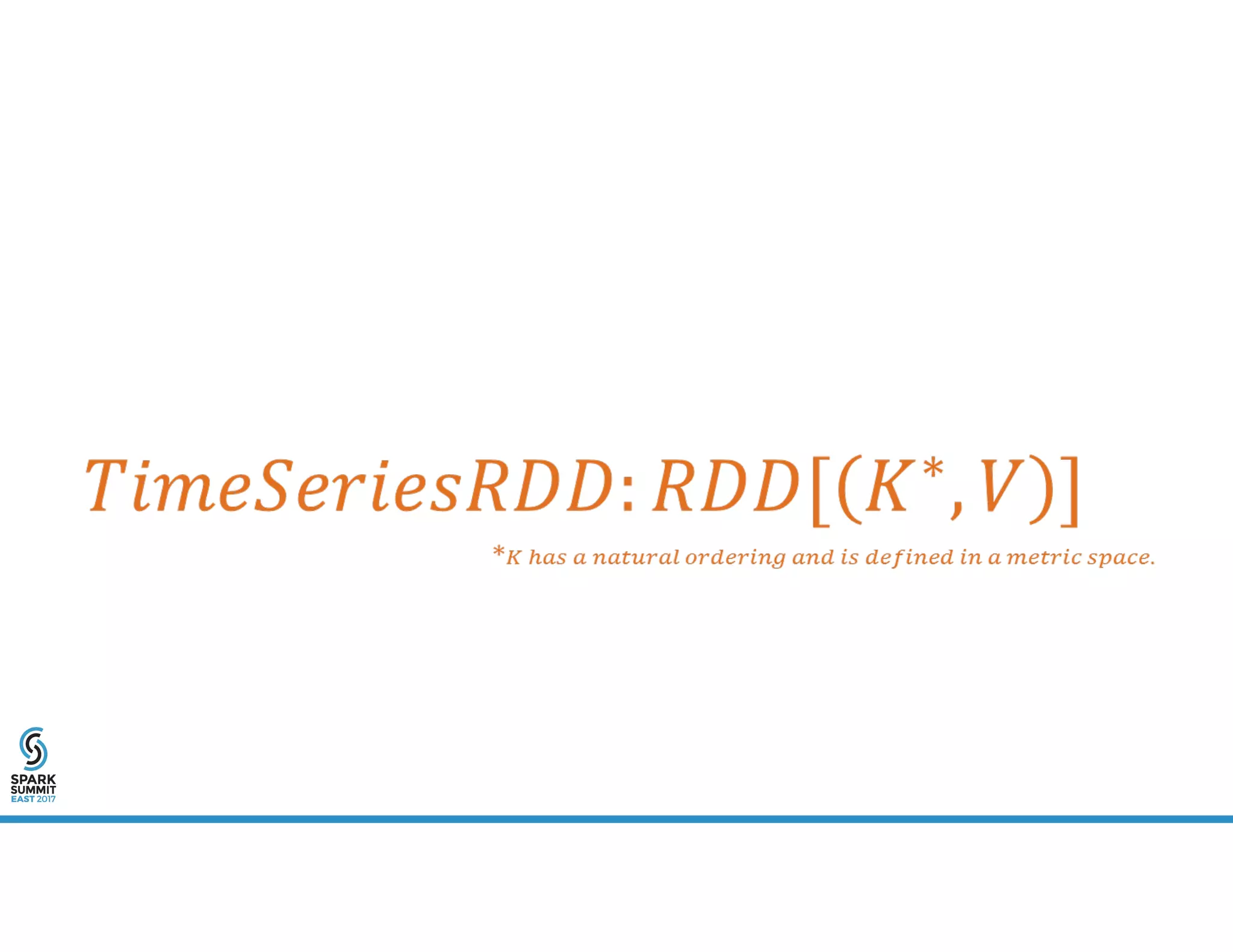

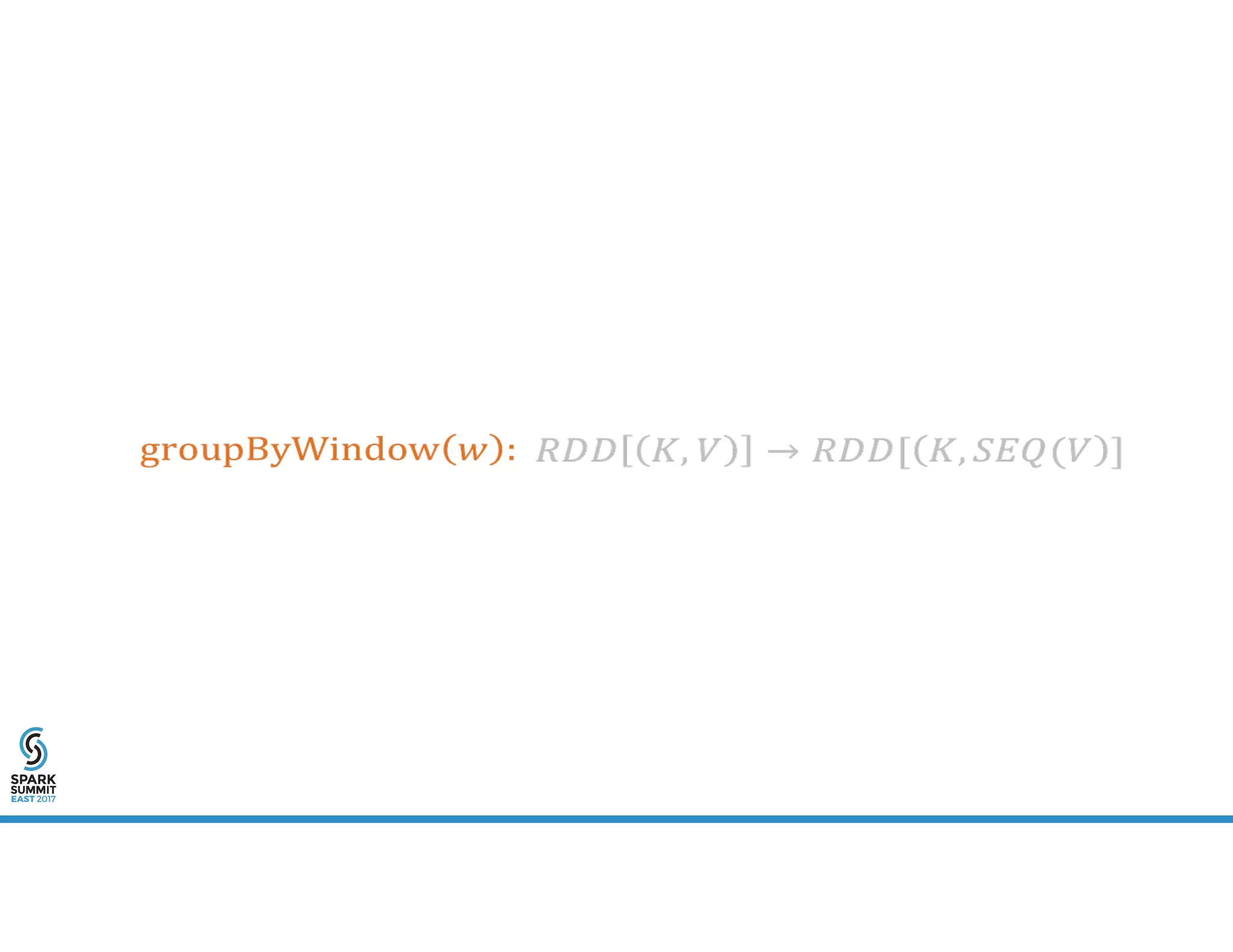

The document provides an overview of using PySpark for time series analysis. Time series data can come from sources such as IoT feeds, sensor data, and economic indicators. PySpark supports windowed aggregations and temporal joins on massive time series datasets, whether wide or narrow. Basic analytics are possible in plain PySpark, while libraries such as Flint provide additional functions specialized for time series analysis on large datasets in a distributed environment. The document encourages attendees to speak with the author after the talk to see a demonstration of a time series analysis library in PySpark.
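The summary mentions temporal joins without defining them. One common reading is an "as-of" join, where each row on the left is matched with the most recent row on the right at or before its timestamp, within some tolerance. The sketch below shows that reading in plain Python on small in-memory lists. The name `asof_join`, the `tolerance` parameter, the integer timestamps, and the sample data are all assumptions for illustration, not the API of PySpark or Flint.

```python
from bisect import bisect_right


def asof_join(left, right, tolerance):
    """Match each left row with the latest right row at or before its time.

    Hypothetical helper. `left` and `right` are lists of (time, value) pairs.
    A right row matches only if it is at most `tolerance` older than the
    left row; otherwise the joined value is None.
    """
    right = sorted(right, key=lambda row: row[0])
    right_times = [t for t, _ in right]
    joined = []
    for t, value in sorted(left, key=lambda row: row[0]):
        # Index of the last right row whose time is <= t, or -1 if none.
        i = bisect_right(right_times, t) - 1
        if i >= 0 and t - right_times[i] <= tolerance:
            joined.append((t, value, right[i][1]))
        else:
            joined.append((t, value, None))
    return joined


# Made-up sample data; times are seconds since an arbitrary start.
trades = [(5, "buy"), (12, "sell"), (30, "buy")]
quotes = [(1, 100.0), (10, 101.0), (11, 102.0)]

matched = asof_join(trades, quotes, tolerance=5)
```

Here the trade at time 30 gets no quote because the latest quote (time 11) is older than the 5-second tolerance. The binary search relies on the right side being sorted by time, which is why a distributed version would need both datasets ordered or partitioned by time first.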
