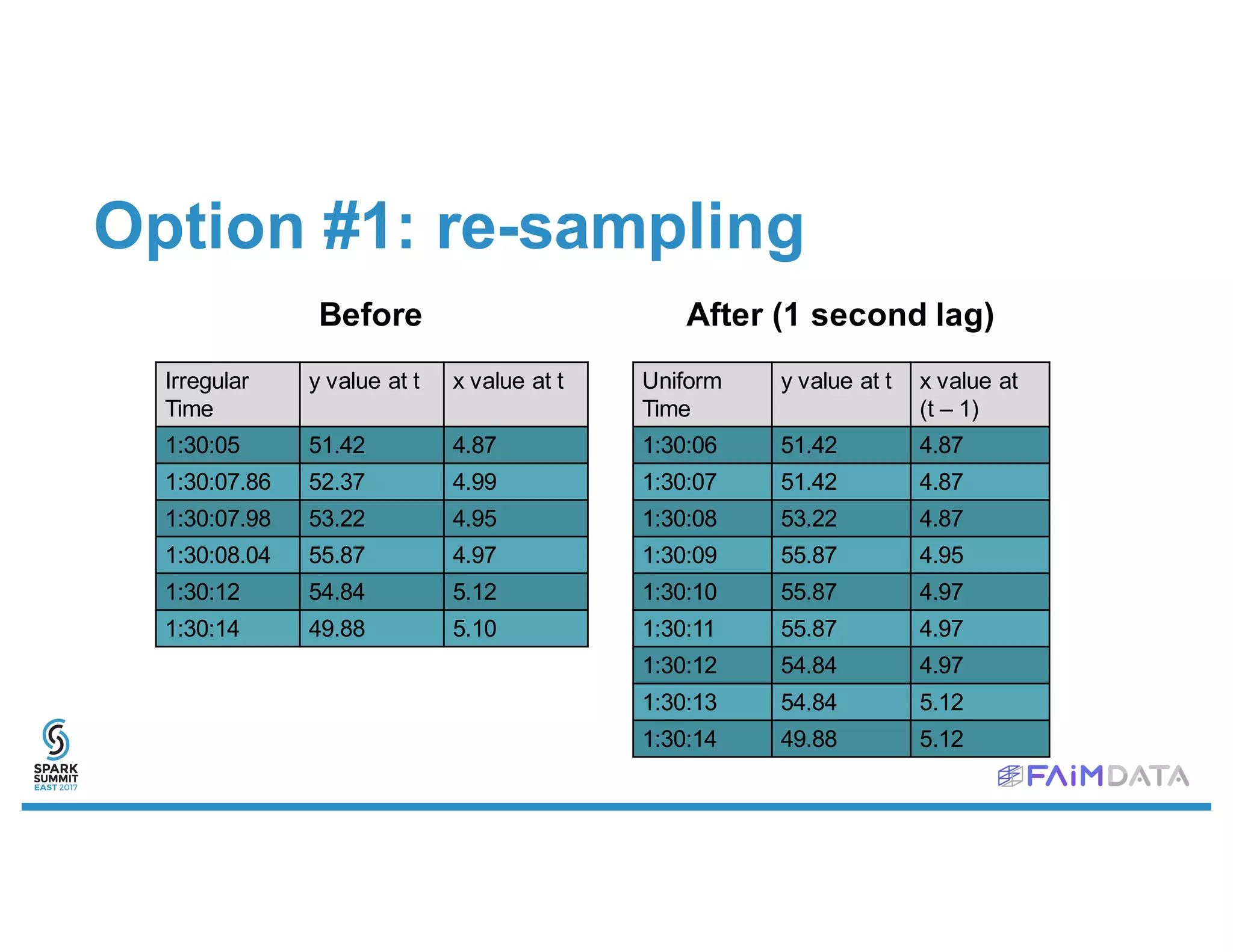

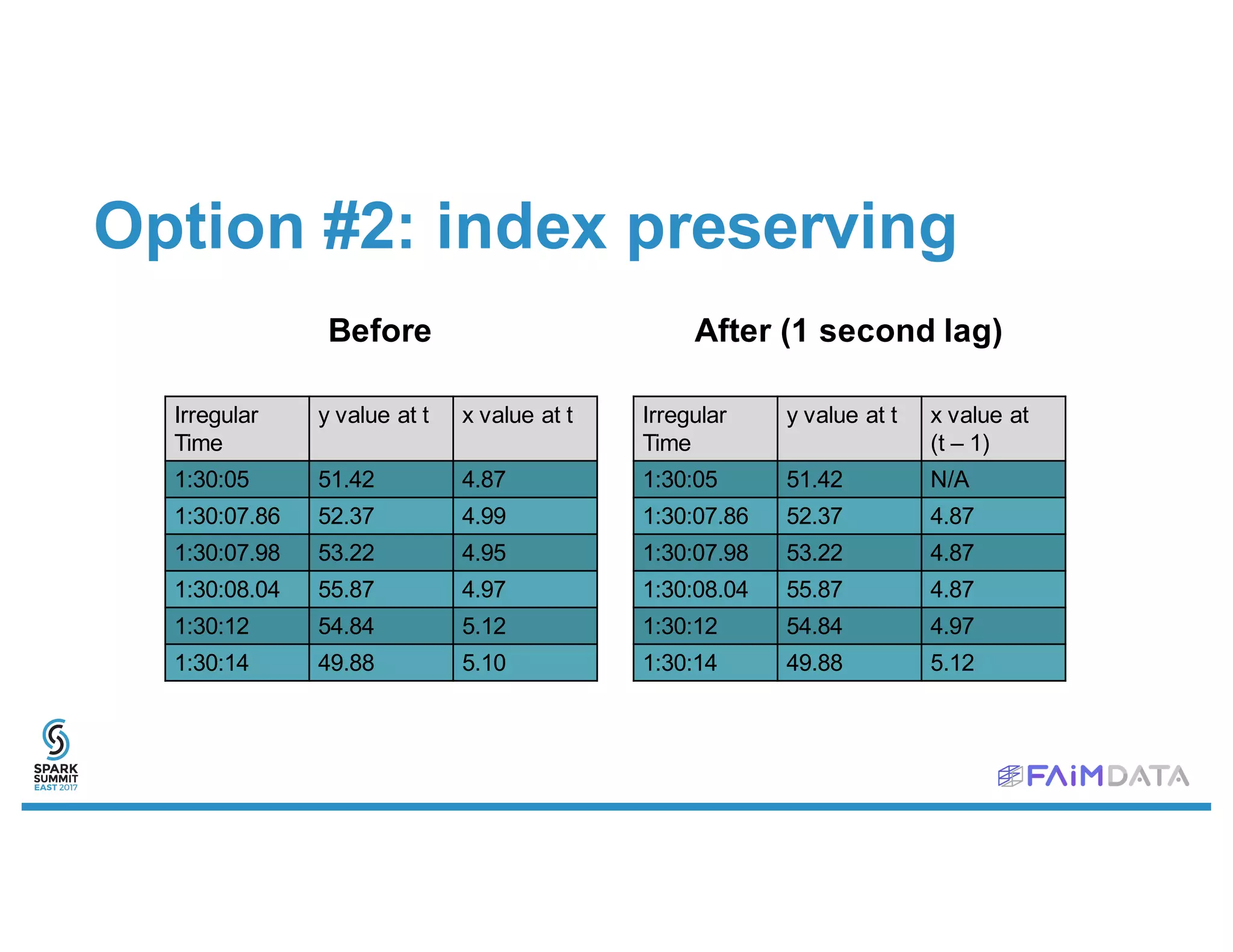

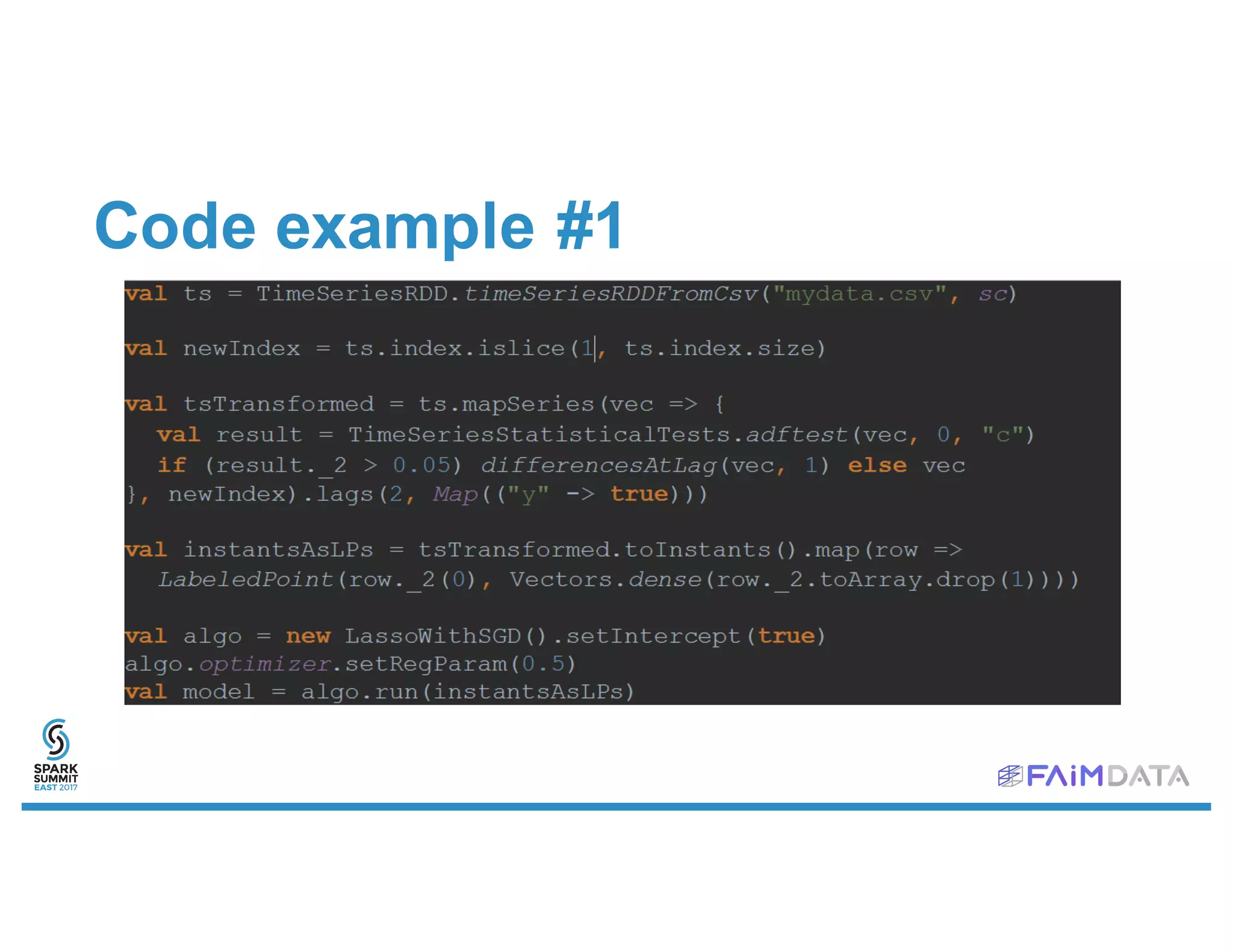

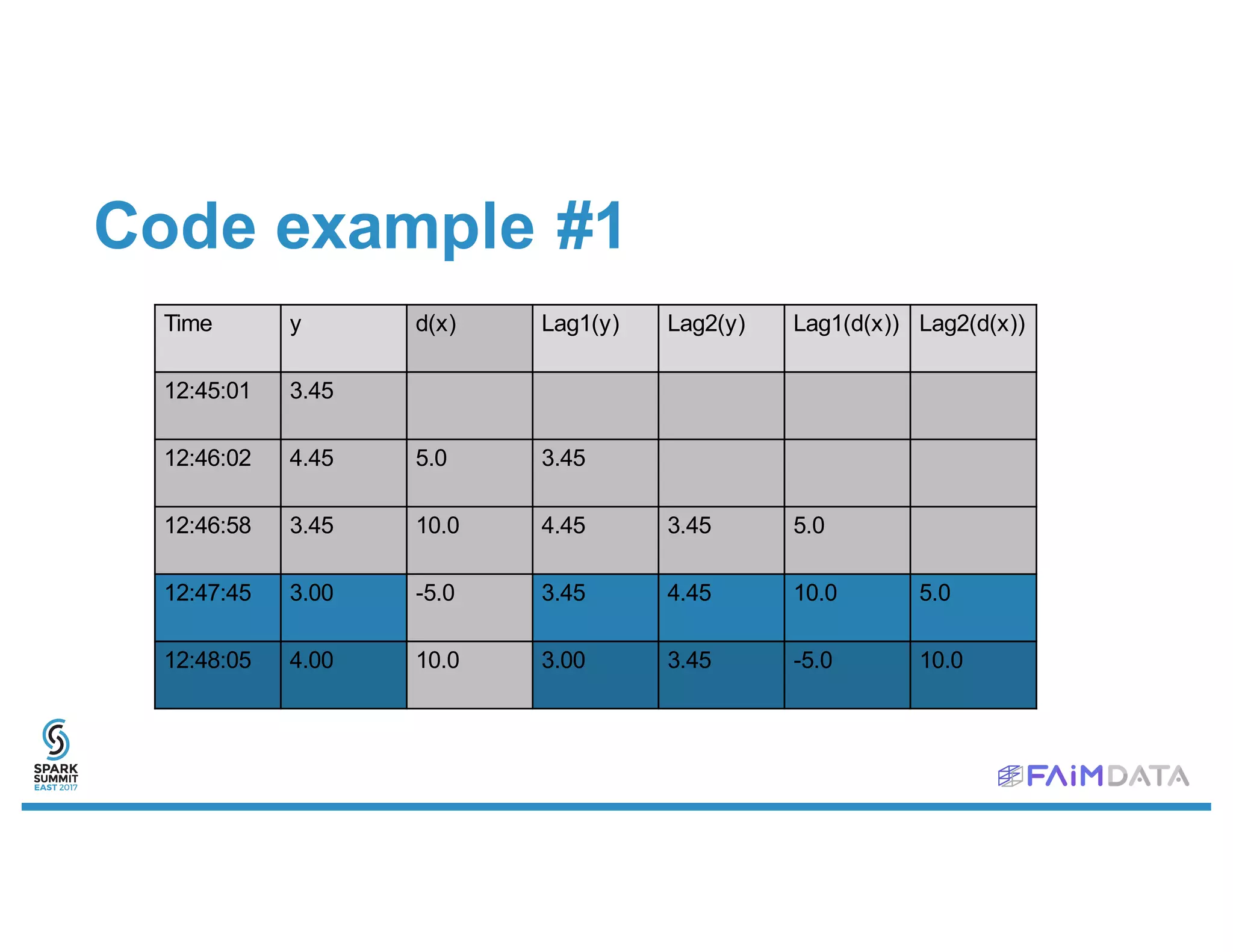

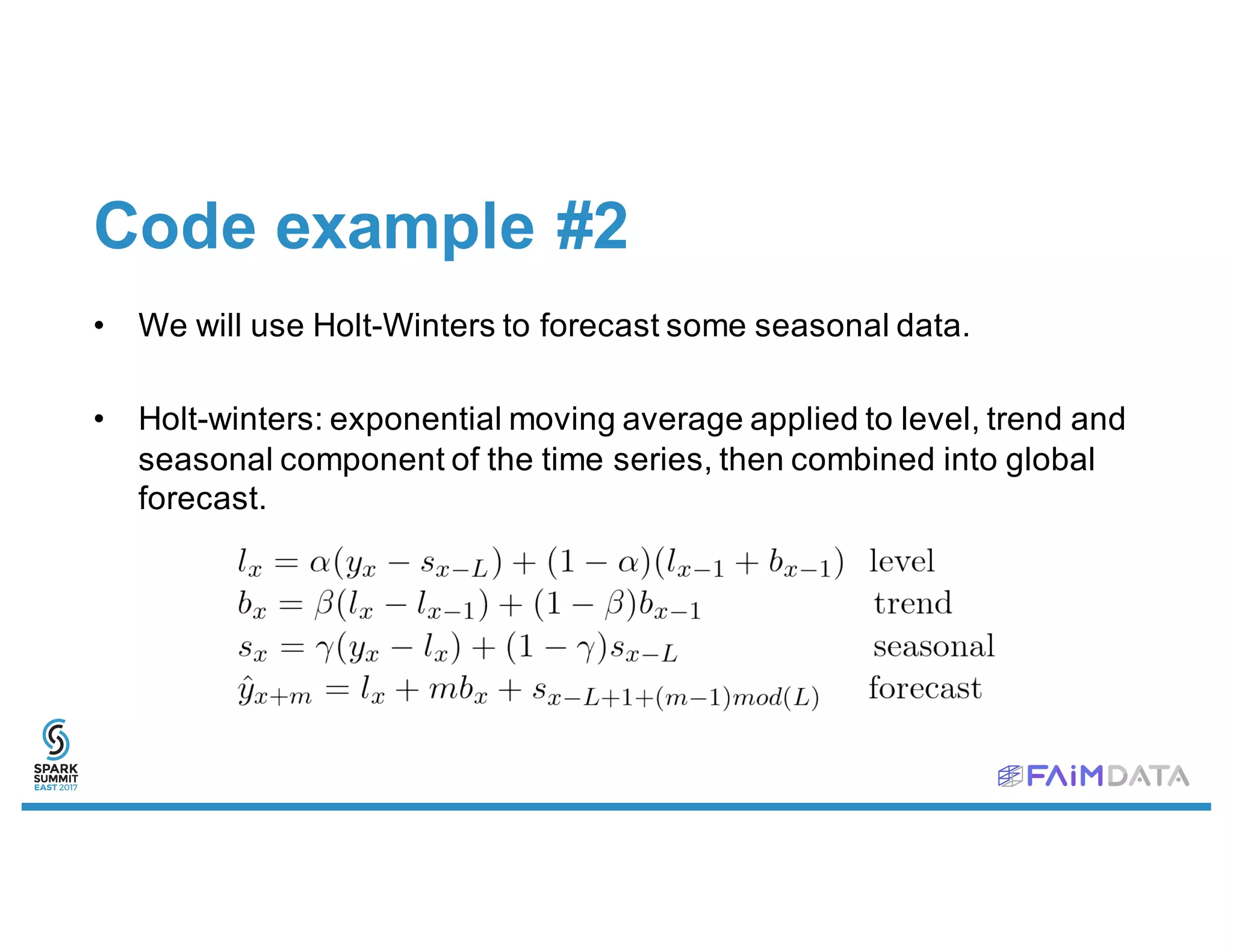

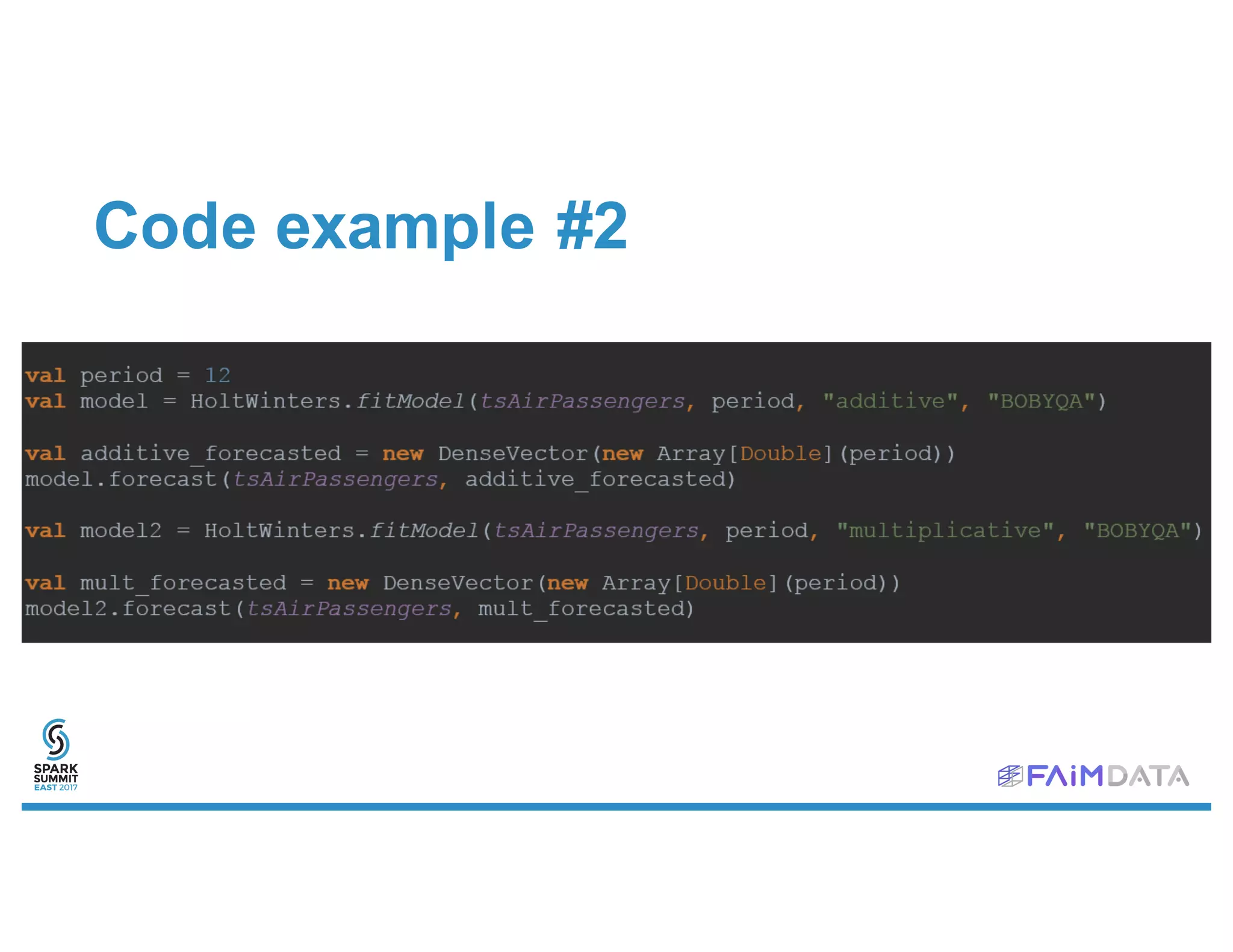

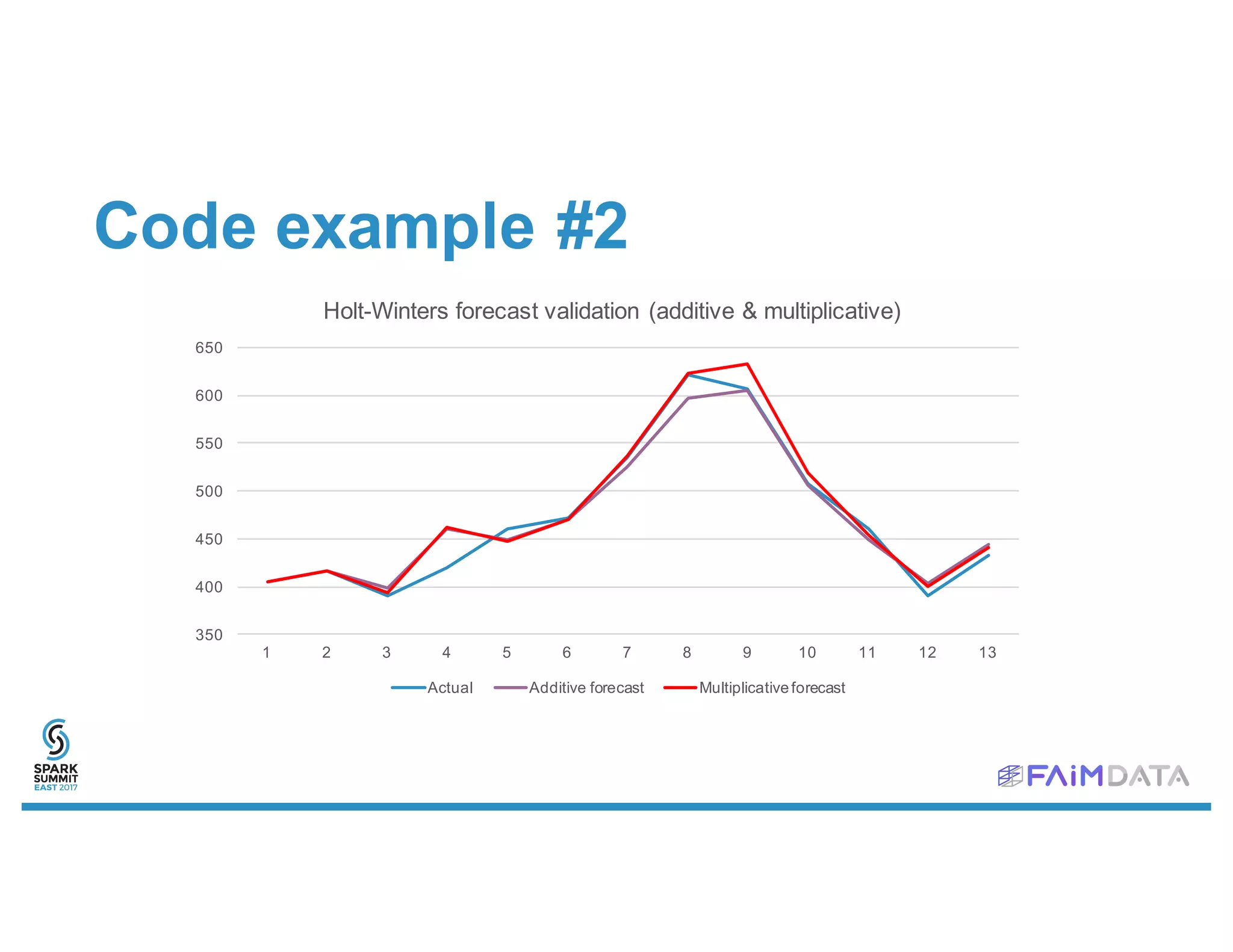

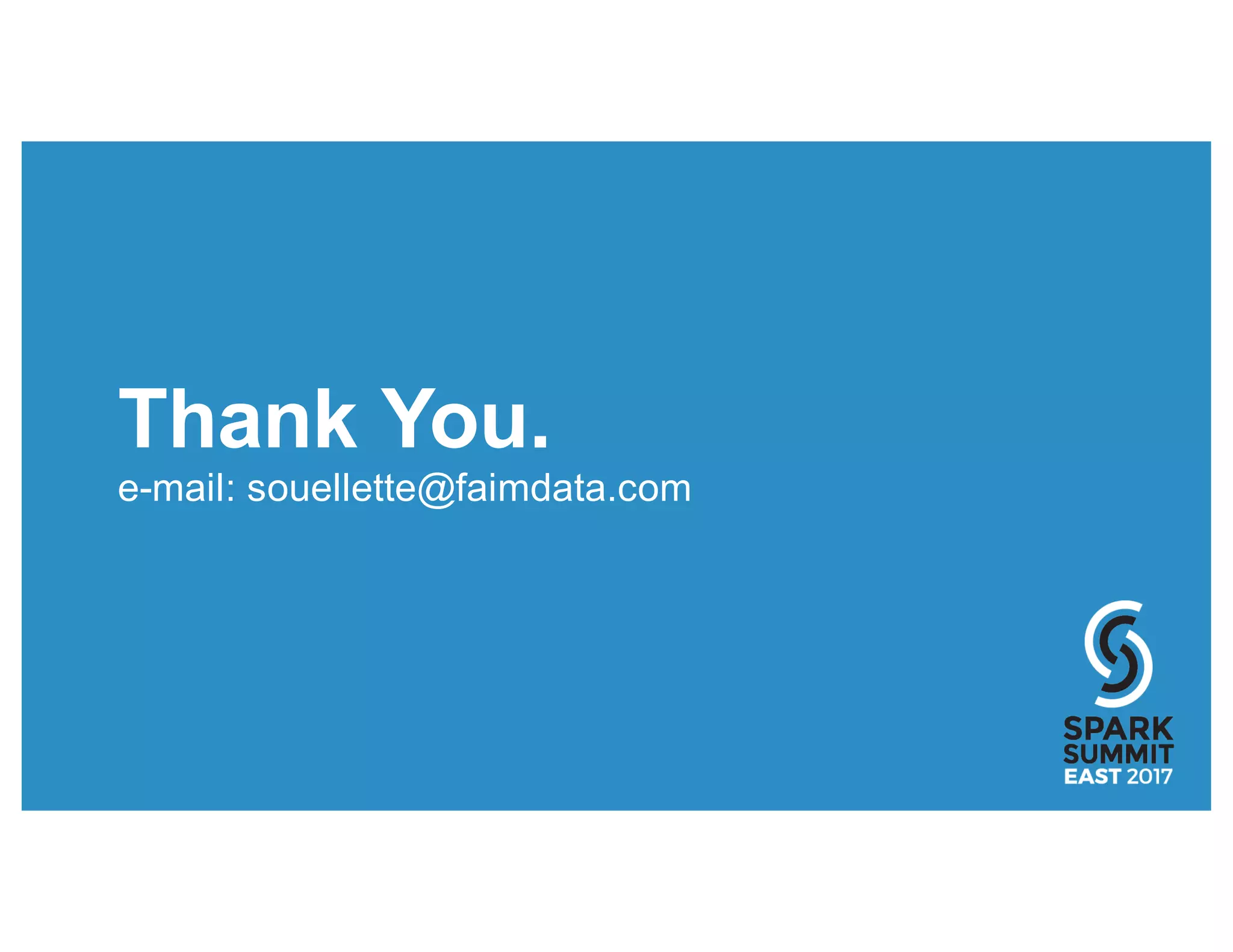

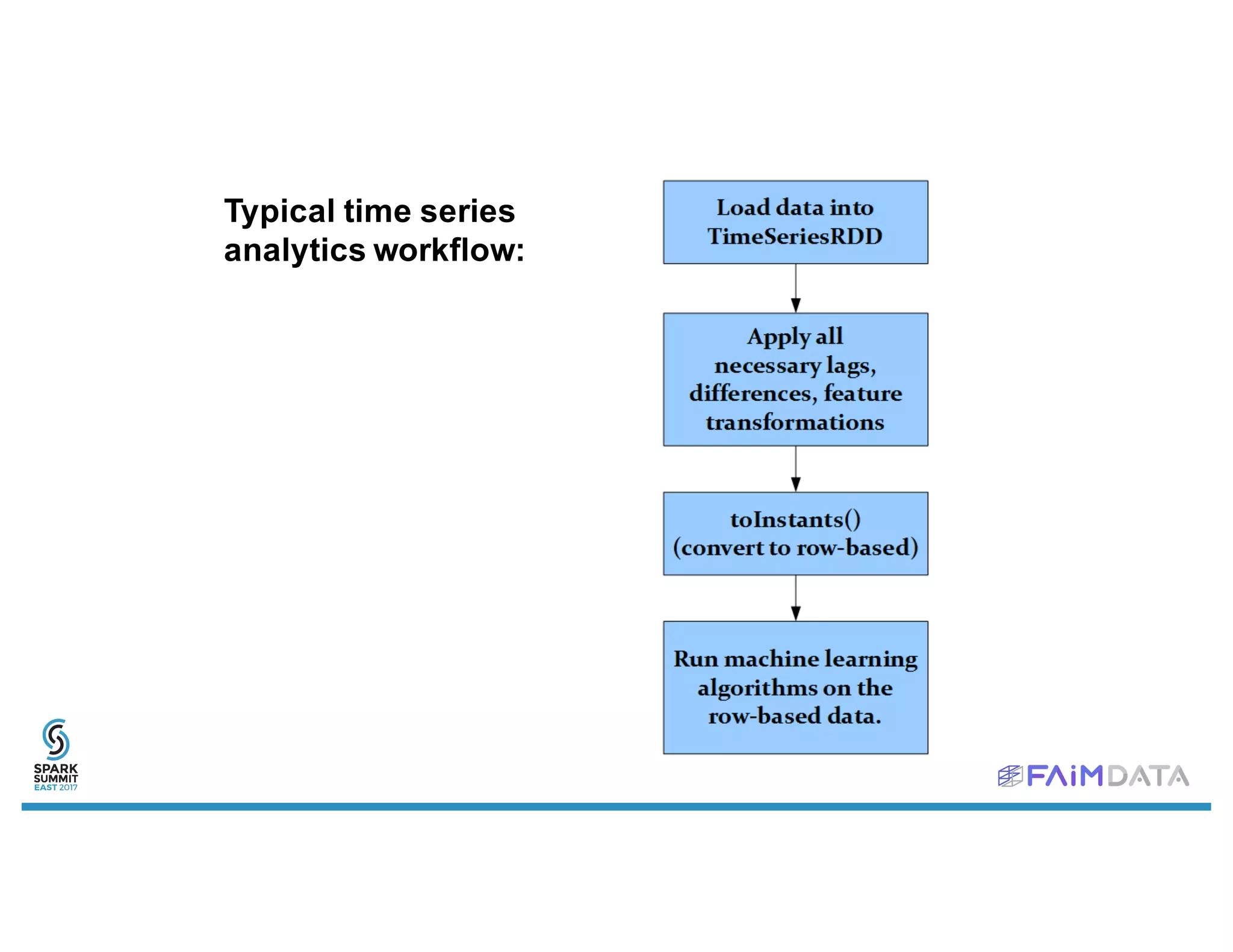

This document provides an overview of spark-timeseries, an open-source time series library for Apache Spark. It discusses the library's design choices around representing multivariate time series data, partitioning time series data for distributed processing, and handling operations such as lagging and differencing on irregular time series data. It also presents examples of using the library to test for stationarity, generate lagged features, and perform Holt-Winters forecasting on seasonal passenger data.
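As a rough illustration of the lagging and differencing operations mentioned above, here is a minimal sketch in plain Scala. It does not use the spark-timeseries API; the object and function names are hypothetical. It also treats the series as an ordered sequence of observations and ignores the size of the gaps between timestamps, which is a simplifying assumption for irregular data.

```scala
object LagDiffSketch {
  // First-order differencing: d(i) = x(i + 1) - x(i).
  // The result is one element shorter than the input.
  def difference(xs: Vector[Double]): Vector[Double] =
    xs.zip(xs.drop(1)).map { case (prev, cur) => cur - prev }

  // Lagged feature matrix: the row for position t holds
  // (x(t), x(t - 1), ..., x(t - maxLag)).
  // Positions without a full history (t < maxLag) are dropped.
  def lagMatrix(xs: Vector[Double], maxLag: Int): Vector[Vector[Double]] =
    (maxLag until xs.length).toVector.map { t =>
      (0 to maxLag).toVector.map(k => xs(t - k))
    }
}
```

For example, `LagDiffSketch.lagMatrix(Vector(1.0, 2.0, 3.0, 4.0), 2)` yields two rows, one for each position that has at least two earlier observations.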

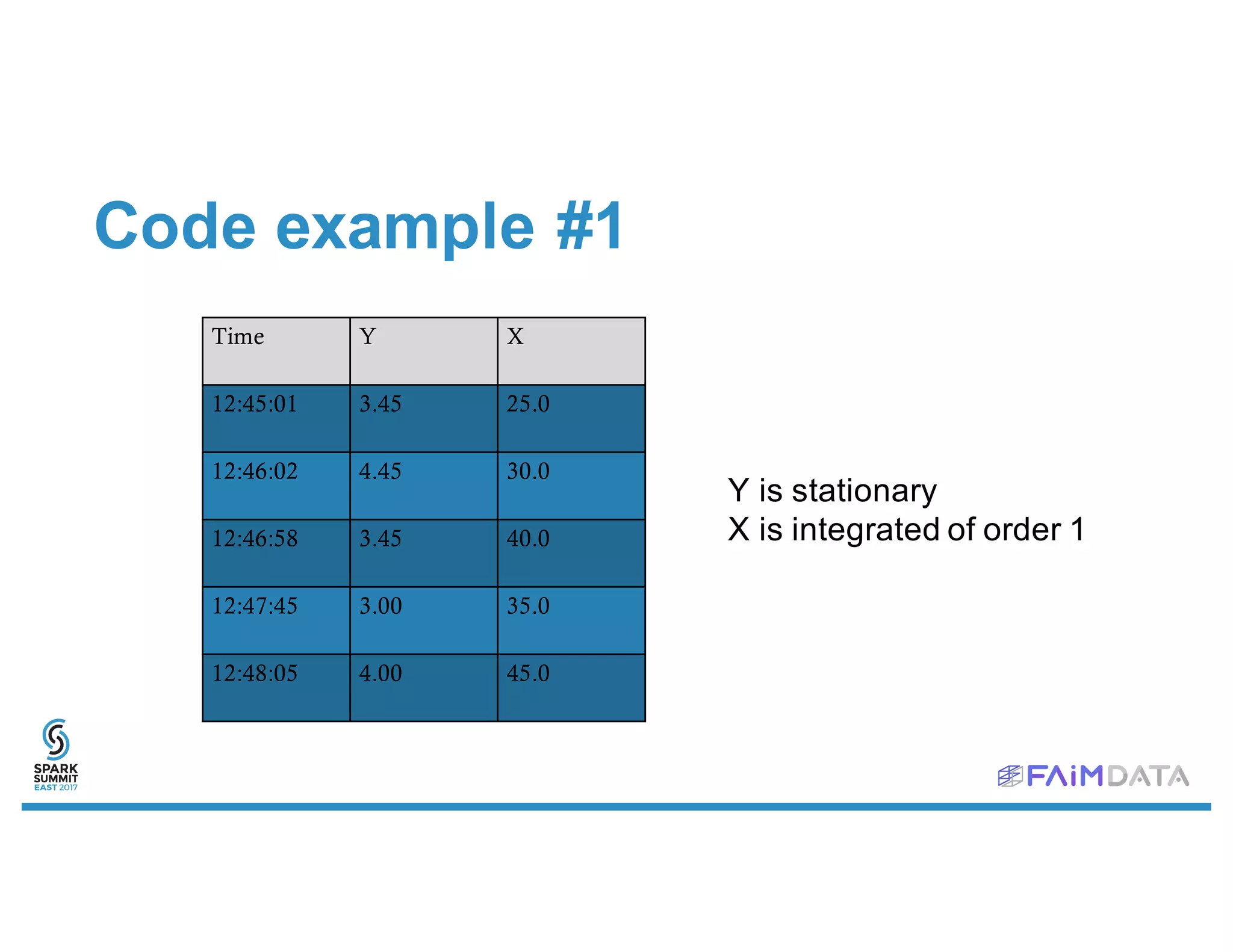

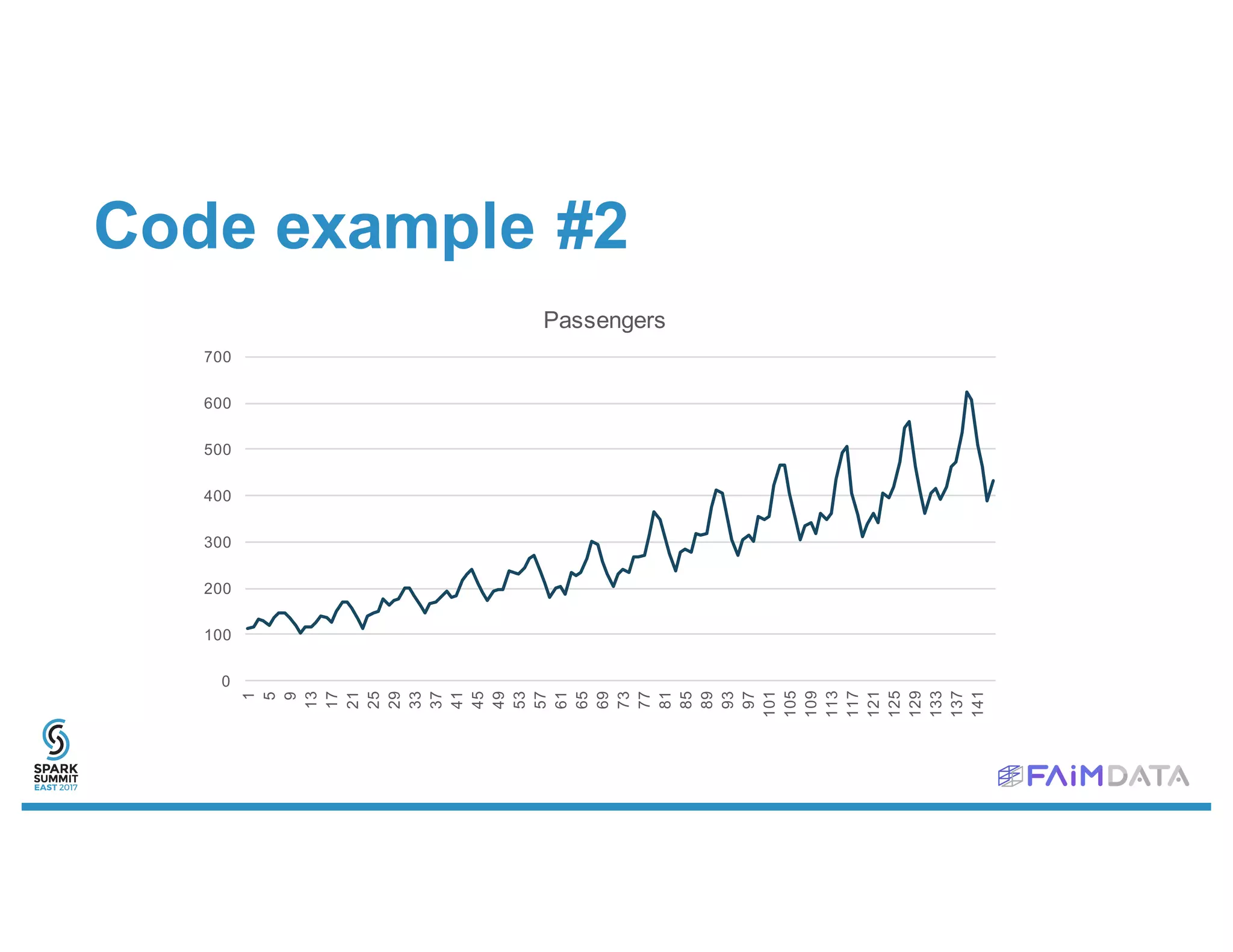

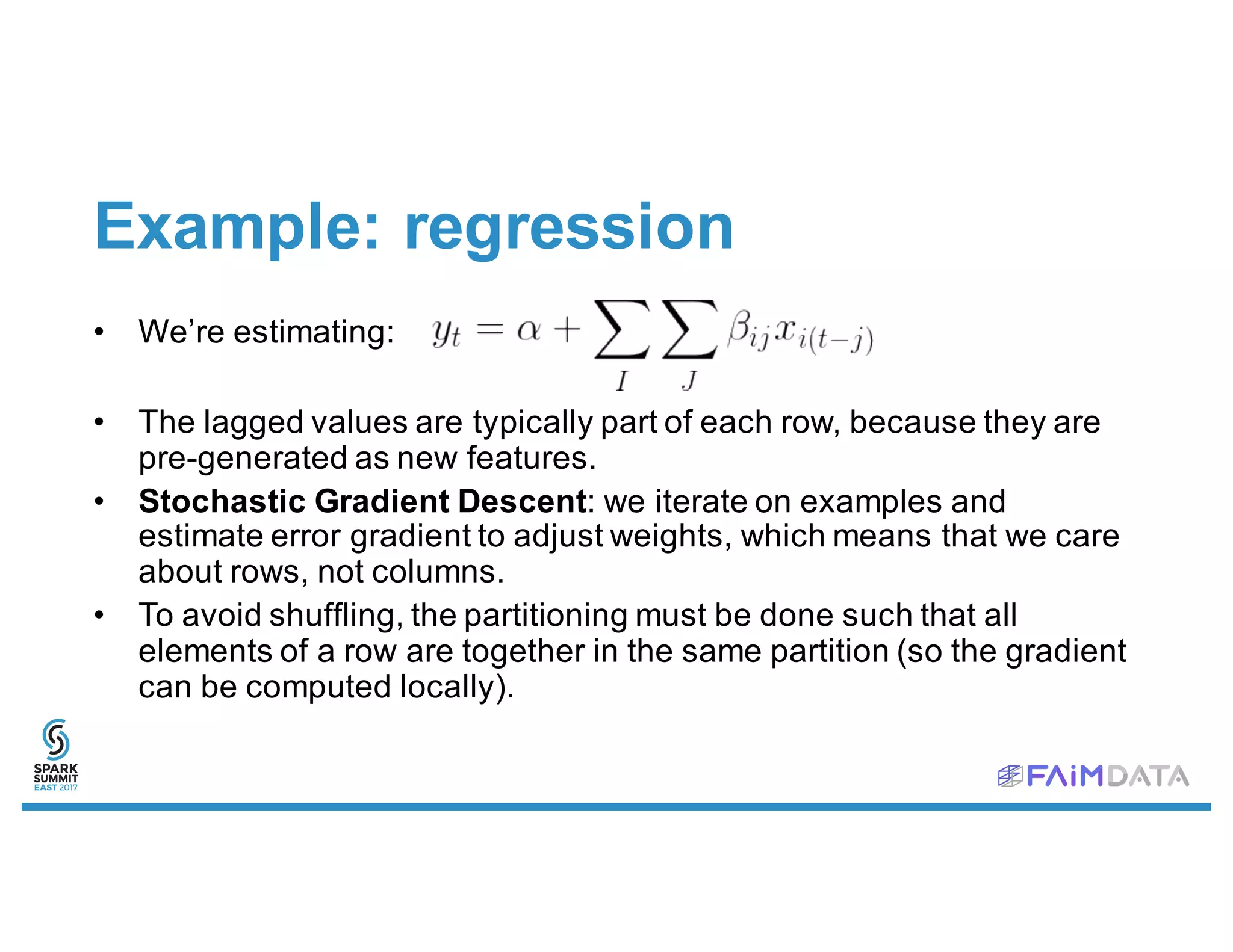

![Row-based vs. columnar representation](https://image.slidesharecdn.com/2asimonouellette-170214185944/75/Time-Series-Analytics-with-Spark-Spark-Summit-East-talk-by-Simon-Ouellette-6-2048.jpg)

Row-based representation: `RDD[(ZonedDateTime, Vector)]`

| Vectors | Date/Time | Series 1 | Series 2 |
| --- | --- | --- | --- |
| Vector 1 | 2:30:01 | 4.56 | 78.93 |
| Vector 2 | 2:30:02 | 4.57 | 79.92 |
| Vector 3 | 2:30:03 | 4.87 | 79.91 |
| Vector 4 | 2:30:04 | 4.48 | 78.99 |

Columnar representation: `TimeSeriesRDD(DateTimeIndex, RDD[Vector])`

| DateTime Index | Vector for Series 1 | Vector for Series 2 |
| --- | --- | --- |
| 2:30:01 | 4.56 | 78.93 |
| 2:30:02 | 4.57 | 79.92 |
| 2:30:03 | 4.87 | 79.91 |
| 2:30:04 | 4.48 | 78.99 |
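The difference between the two layouts on this slide can be sketched without Spark, using plain Scala collections. The names `Row`, `Columnar`, and `toColumnar` are hypothetical and stand in for the RDD-based types; the calendar date is also an assumption, since the slide only shows times of day.

```scala
import java.time.{ZoneId, ZonedDateTime}

object LayoutSketch {
  // Row-based: one record per instant, holding the value of every series at that instant.
  type Row = (ZonedDateTime, Array[Double])

  // Columnar: one shared time index plus one contiguous array per series.
  final case class Columnar(index: Array[ZonedDateTime], series: Array[Array[Double]])

  // Transpose row-based records into the columnar layout.
  def toColumnar(rows: Seq[Row]): Columnar = {
    val sorted = rows.sortBy(_._1.toInstant.toEpochMilli)
    val index = sorted.map(_._1).toArray
    val nSeries = sorted.headOption.map(_._2.length).getOrElse(0)
    val series = Array.tabulate(nSeries)(j => sorted.map(_._2(j)).toArray)
    Columnar(index, series)
  }

  // Sample data from the slide; the date 2017-01-01 and the UTC zone are placeholders.
  private val zone = ZoneId.of("UTC")
  private def at(sec: Int): ZonedDateTime =
    ZonedDateTime.of(2017, 1, 1, 2, 30, sec, 0, zone)

  val rows: Seq[Row] = Seq(
    (at(1), Array(4.56, 78.93)),
    (at(2), Array(4.57, 79.92)),
    (at(3), Array(4.87, 79.91)),
    (at(4), Array(4.48, 78.99))
  )
}
```

In the columnar form, each series is a single array, so per-series operations can scan contiguous values instead of picking one element out of every row.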

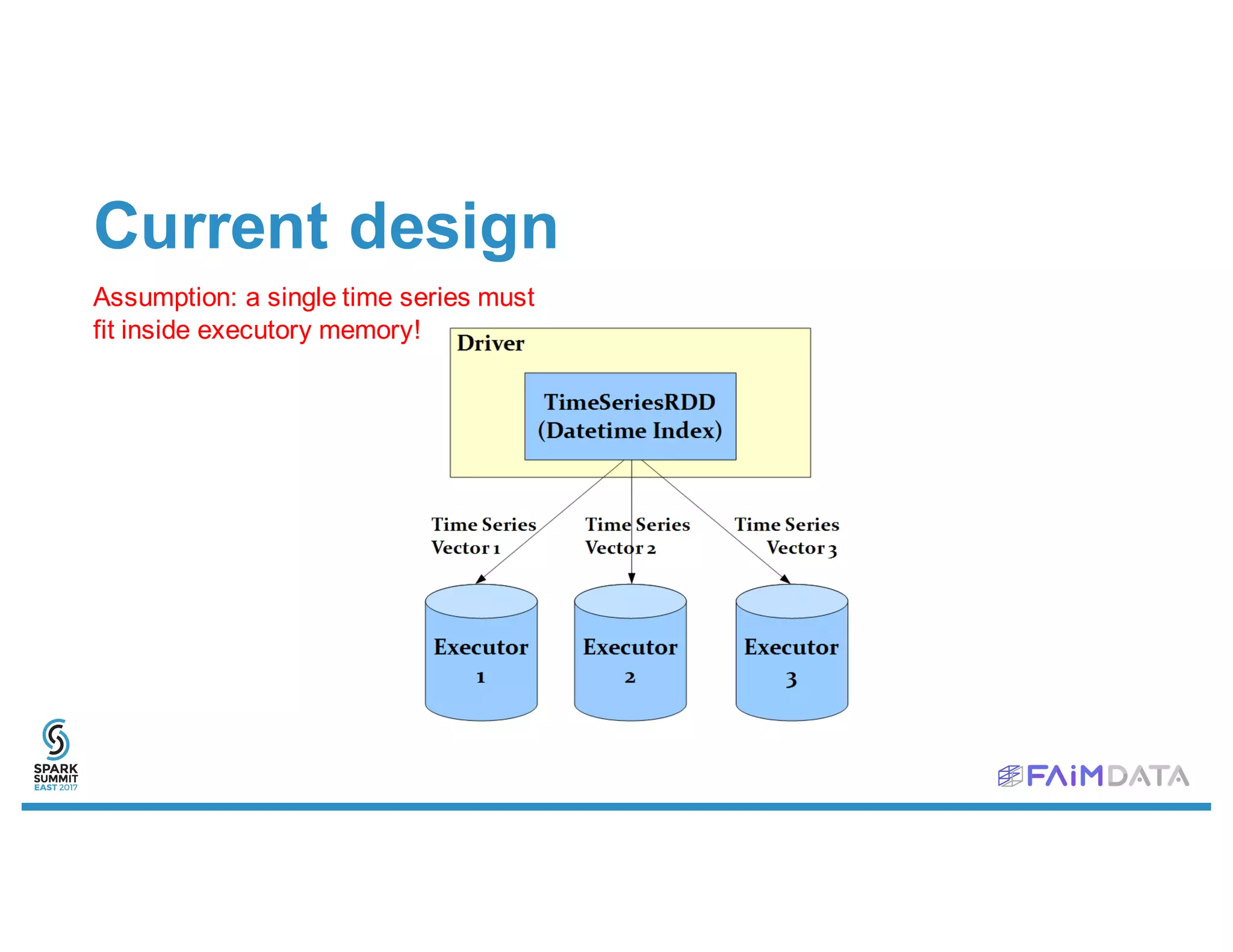

![Current design](https://image.slidesharecdn.com/2asimonouellette-170214185944/75/Time-Series-Analytics-with-Spark-Spark-Summit-East-talk-by-Simon-Ouellette-15-2048.jpg)

Assumption: a single time series must fit inside executor memory!

- `TimeSeriesRDD(DatetimeIndex, RDD[(K, Vector)])`
- `IrregularDatetimeIndex(Array[Long], java.time.ZoneId)`

Other limitation: the `Array[Long]` index is a Scala array, and Scala arrays are indexed by `Int`, so they hold at most 2^31 − 1 elements.
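To make the shape of an irregular index concrete, the following is a minimal sketch of a sorted `Array[Long]` of instants paired with a `java.time.ZoneId`. It is a hypothetical stand-in, not the library's class: the name `IrregularIndex`, the millisecond resolution, and the method names are assumptions made for illustration.

```scala
import java.time.{Instant, ZoneId, ZonedDateTime}
import java.util.Arrays

object IrregularIndexSketch {
  // Hypothetical stand-in for an irregular date-time index:
  // strictly increasing epoch-millisecond instants plus a time zone.
  final class IrregularIndex(val instants: Array[Long], val zone: ZoneId) {
    require(
      instants.sliding(2).forall(w => w.length < 2 || w(0) < w(1)),
      "instants must be strictly increasing"
    )

    // Arrays are indexed by Int, so the size is an Int as well.
    def size: Int = instants.length

    // Date-time stored at a given position.
    def dateTimeAtLoc(loc: Int): ZonedDateTime =
      ZonedDateTime.ofInstant(Instant.ofEpochMilli(instants(loc)), zone)

    // Position of an exact timestamp, found by binary search; None when absent.
    def locAtDateTime(dt: ZonedDateTime): Option[Int] = {
      val i = Arrays.binarySearch(instants, dt.toInstant.toEpochMilli)
      if (i >= 0) Some(i) else None
    }
  }
}
```

Because the whole index lives in one array on one JVM, its length cannot exceed `Int.MaxValue`, and every lookup assumes the array fits in that process's memory.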