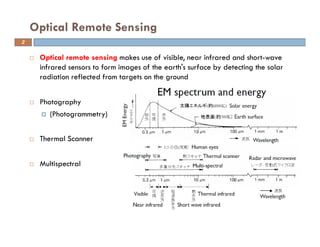

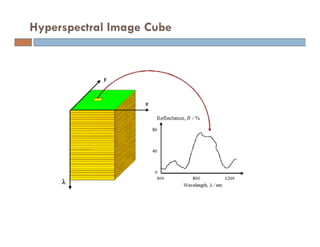

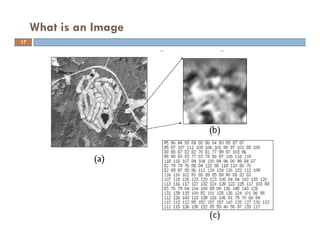

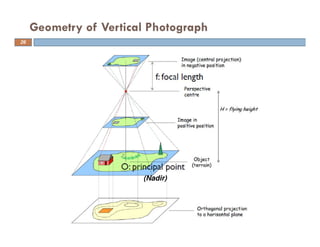

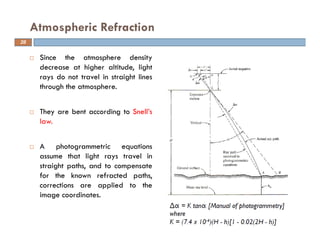

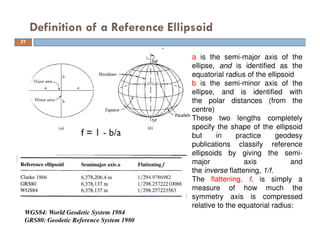

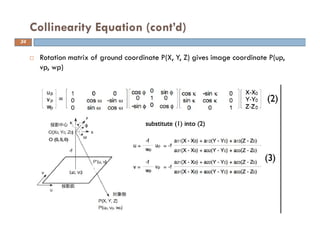

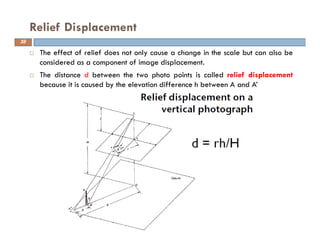

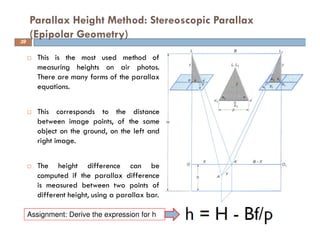

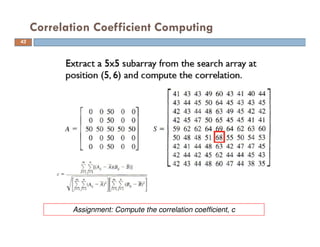

This document discusses different types of remote sensing systems used in civil engineering, including optical, photogrammetric, thermal, multispectral, hyperspectral, and panchromatic systems. It provides examples and specifications of various sensors, such as MODIS, AVIRIS, IKONOS, and WorldView. The document also covers digital image formats, photogrammetry, image distortions and displacements, reference ellipsoids, relief displacement, and methods of measuring heights from aerial photographs.
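As a brief illustration of measuring heights from relief displacement, the sketch below applies the standard relation for a vertical aerial photograph, h = d · H / r. It is a minimal example under stated assumptions rather than the document's own worked method. The function name, parameter names, and sample values are hypothetical. It assumes a truly vertical photograph, with d (the displacement of the object's top relative to its base) and r (the radial distance from the photo nadir to the image of the top) measured in the same photo units, and H the flying height above the base of the object.

```python
def height_from_relief_displacement(d, r, flying_height):
    """Estimate object height from relief displacement on a vertical photo.

    d             -- relief displacement on the photo (top relative to base)
    r             -- radial distance from the nadir point to the image of
                     the object's top, in the same units as d
    flying_height -- flying height H above the base of the object
                     (the result is returned in the units of H)

    Uses the standard relation h = d * H / r. All names here are
    illustrative assumptions, not taken from the source document.
    """
    if r <= 0:
        raise ValueError("radial distance r must be positive")
    if d < 0 or flying_height <= 0:
        raise ValueError("d must be non-negative and flying_height positive")
    return d * flying_height / r


if __name__ == "__main__":
    # Hypothetical measurements: d = 2.0 mm, r = 80.0 mm, H = 1200 m.
    h = height_from_relief_displacement(2.0, 80.0, 1200.0)
    print(f"Estimated object height: {h:.1f} m")
```

Because d and r enter only as a ratio, any consistent photo unit (millimetres, pixels) works, provided the photograph is close to vertical and r is measured from the nadir point.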