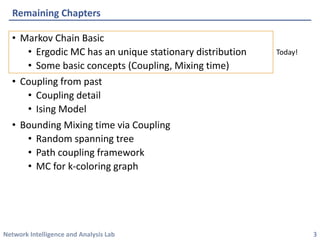

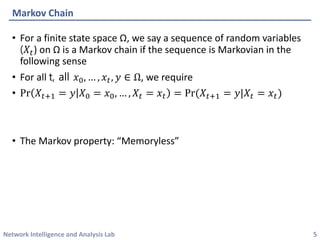

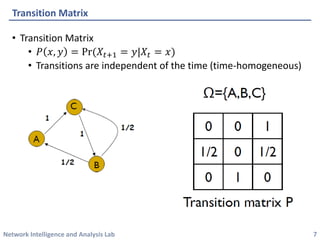

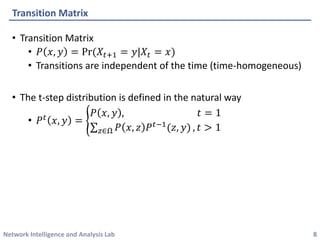

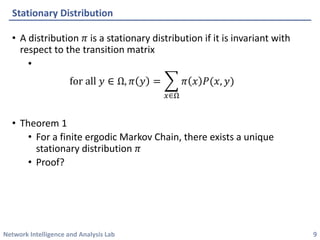

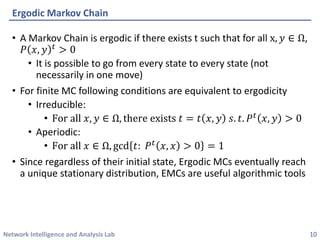

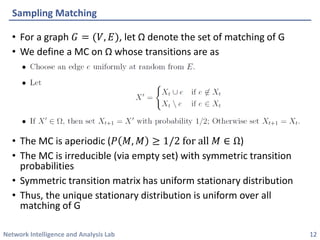

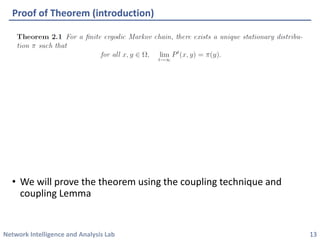

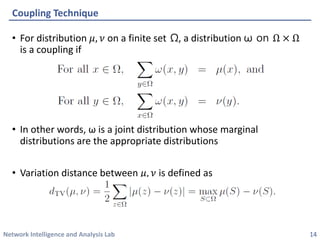

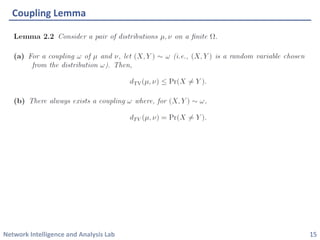

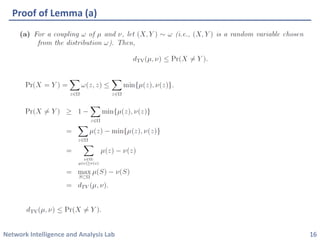

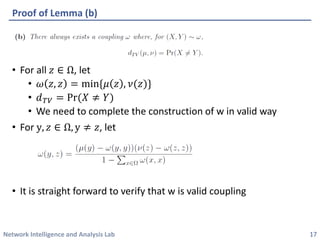

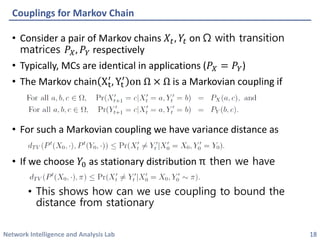

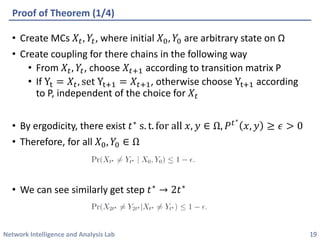

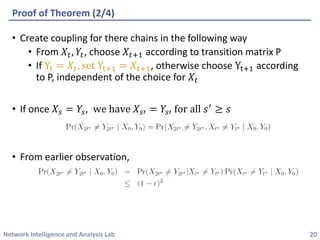

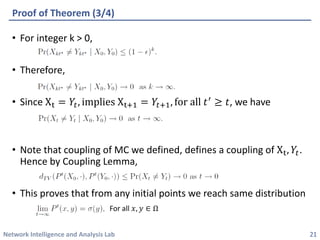

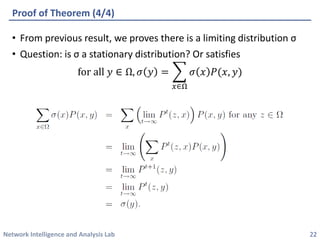

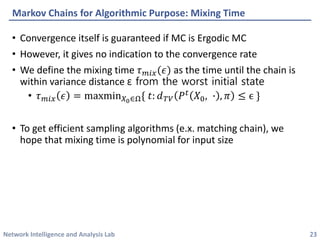

This document covers Markov chains and their use in algorithmic sampling. It introduces Markov chains and their transition matrices, and gives card shuffling as an example of a Markov chain. It shows that an ergodic Markov chain has a unique stationary distribution and converges to it. Coupling techniques are introduced to prove this convergence, and the mixing time is defined as the time the chain needs to come close to its stationary distribution. A further example uses a Markov chain to sample the matchings of a graph uniformly at random.
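To make the summary concrete, here is a minimal Python sketch of a matching-sampling chain on a tiny graph. The summary does not say which chain the document uses, so everything here is an assumption for illustration: the lazy add/remove rule, the 4-vertex path graph, and the helper names `all_matchings`, `step_targets`, `transition_matrix`, `evolve`, and `tv_distance`. The chain stays put with probability 1/2. Otherwise it picks an edge uniformly at random, removes it if it is in the matching, adds it if both endpoints are free, and does nothing if neither is possible. Because each add move has a matching remove move of equal probability, the transition matrix is symmetric, which makes the uniform distribution stationary.

```python
from itertools import combinations


def all_matchings(edges):
    """Enumerate every matching: a set of edges that share no vertex."""
    result = []
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            vertices = [v for e in subset for v in e]
            if len(vertices) == len(set(vertices)):
                result.append(frozenset(subset))
    return result


def step_targets(matching, edges):
    """Yield (next_state, probability) pairs for one lazy add/remove step."""
    yield matching, 0.5  # lazy self-loop (assumed) keeps the chain aperiodic
    p = 0.5 / len(edges)
    matched = {v for e in matching for v in e}
    for e in edges:
        if e in matching:
            yield matching - {e}, p  # remove an edge already in the matching
        elif e[0] not in matched and e[1] not in matched:
            yield matching | {e}, p  # add an edge whose endpoints are free
        else:
            yield matching, p        # edge is blocked, stay put


def transition_matrix(states, edges):
    """Build the row-stochastic transition matrix over the given states."""
    index = {s: i for i, s in enumerate(states)}
    P = [[0.0] * len(states) for _ in states]
    for s in states:
        for t, p in step_targets(s, edges):
            P[index[s]][index[t]] += p
    return P


def evolve(dist, P):
    """One step of the chain on a distribution (row vector times P)."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def tv_distance(mu, nu):
    """Total variation distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(mu, nu))


# Hypothetical example graph: a path on 4 vertices.
edges = [(0, 1), (1, 2), (2, 3)]
states = all_matchings(edges)
P = transition_matrix(states, edges)
uniform = [1.0 / len(states)] * len(states)

# Start from the empty matching and track the distance to uniform.
dist = [1.0 if s == frozenset() else 0.0 for s in states]
distances = []
for _ in range(60):
    distances.append(tv_distance(dist, uniform))
    dist = evolve(dist, P)
final_distance = tv_distance(dist, uniform)
```

The list `distances` records how far the chain is from uniform after each step. The first index at which it drops below a chosen threshold is one common way to read off a mixing time. Building the full matrix only works for very small graphs. On larger ones the chain would be simulated step by step instead.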