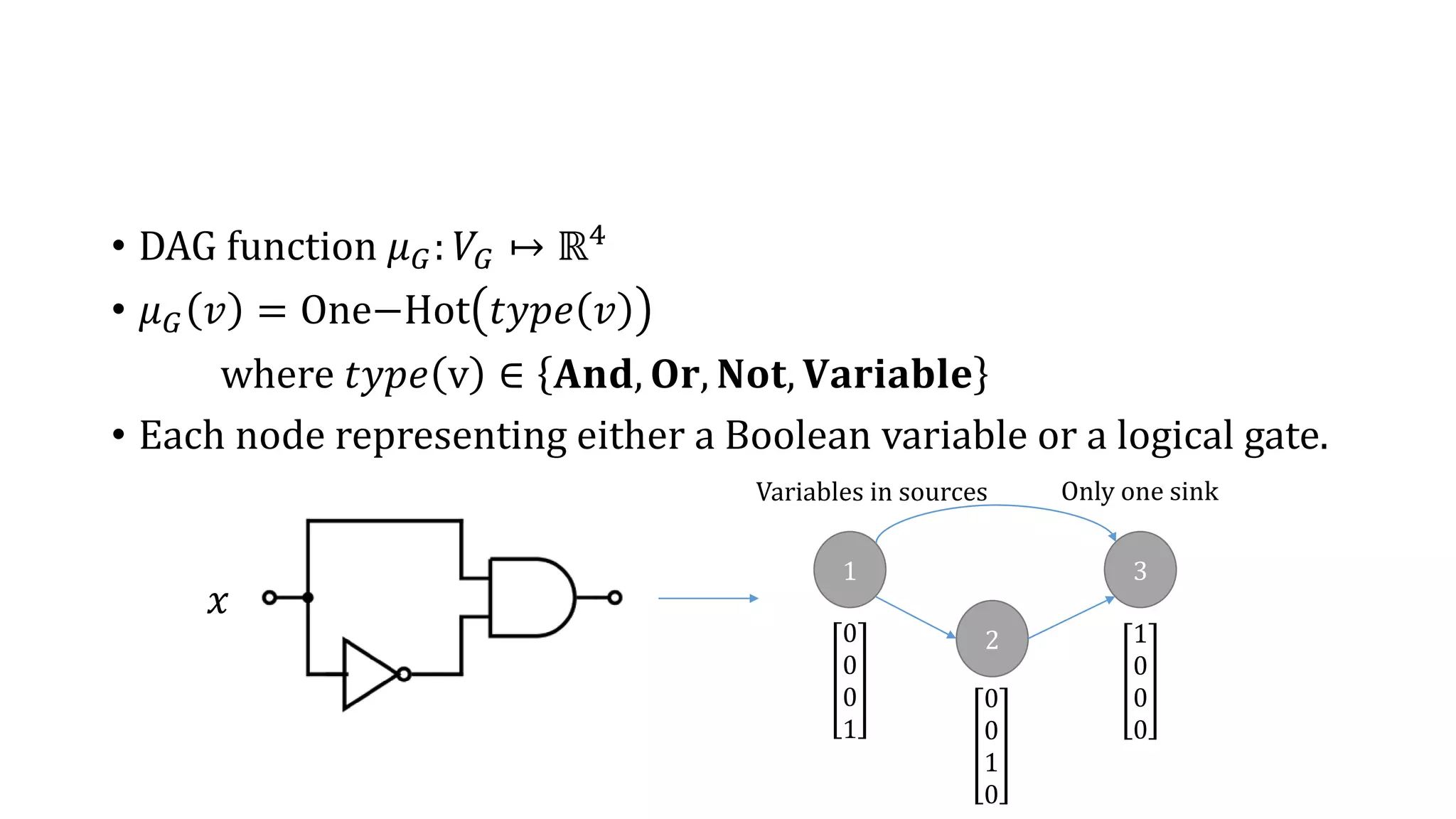

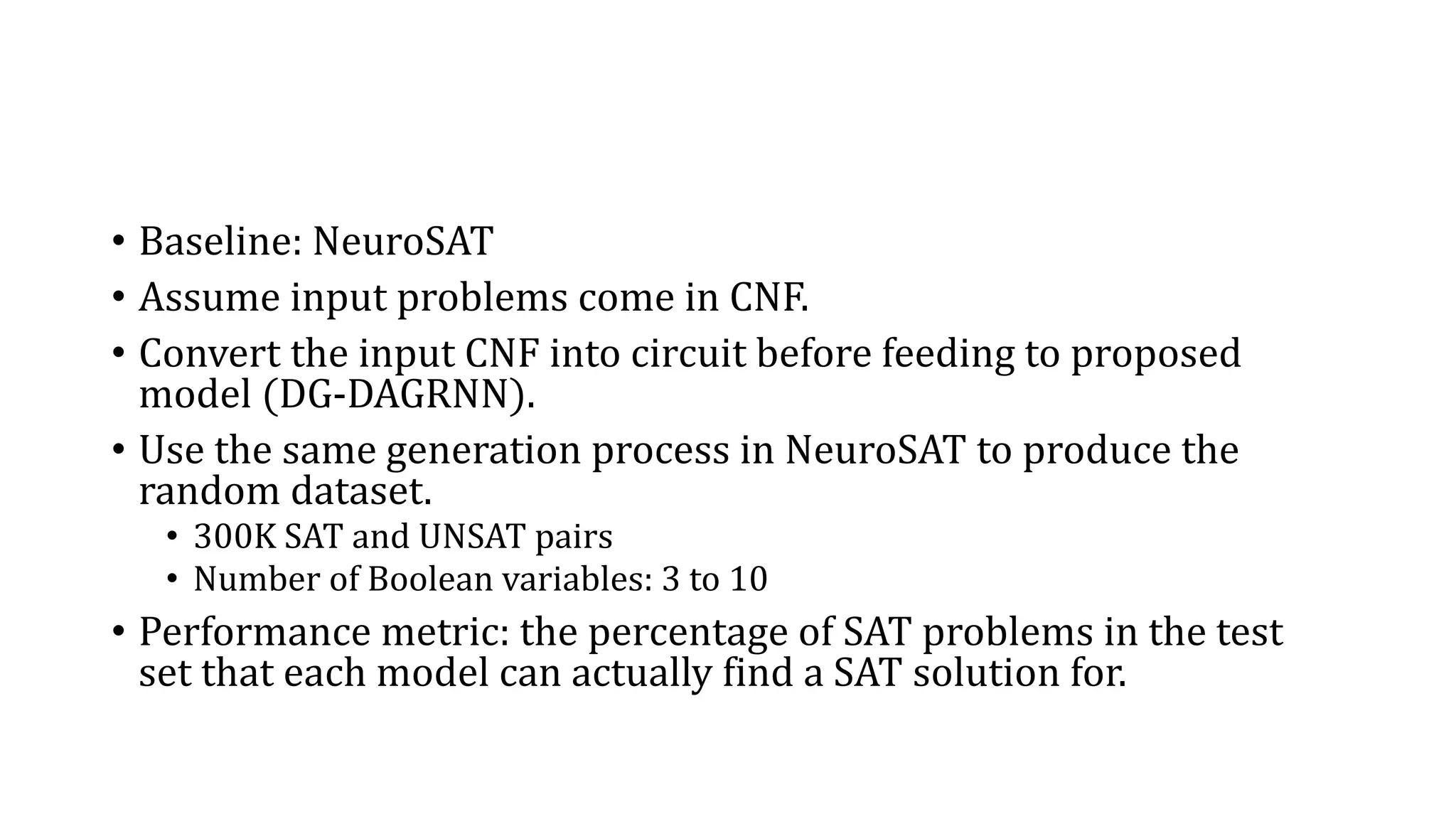

The document presents a neural framework that solves the circuit-satisfiability (Circuit-SAT) problem with a differentiable approach built on a directed acyclic graph (DAG) embedding of the circuit. It compares the model with NeuroSAT, showing better out-of-sample generalization, and exploits the structure inherent in combinatorial optimization problems. Experimental results show that the model finds solutions for a variety of Boolean circuits more efficiently than traditional methods.

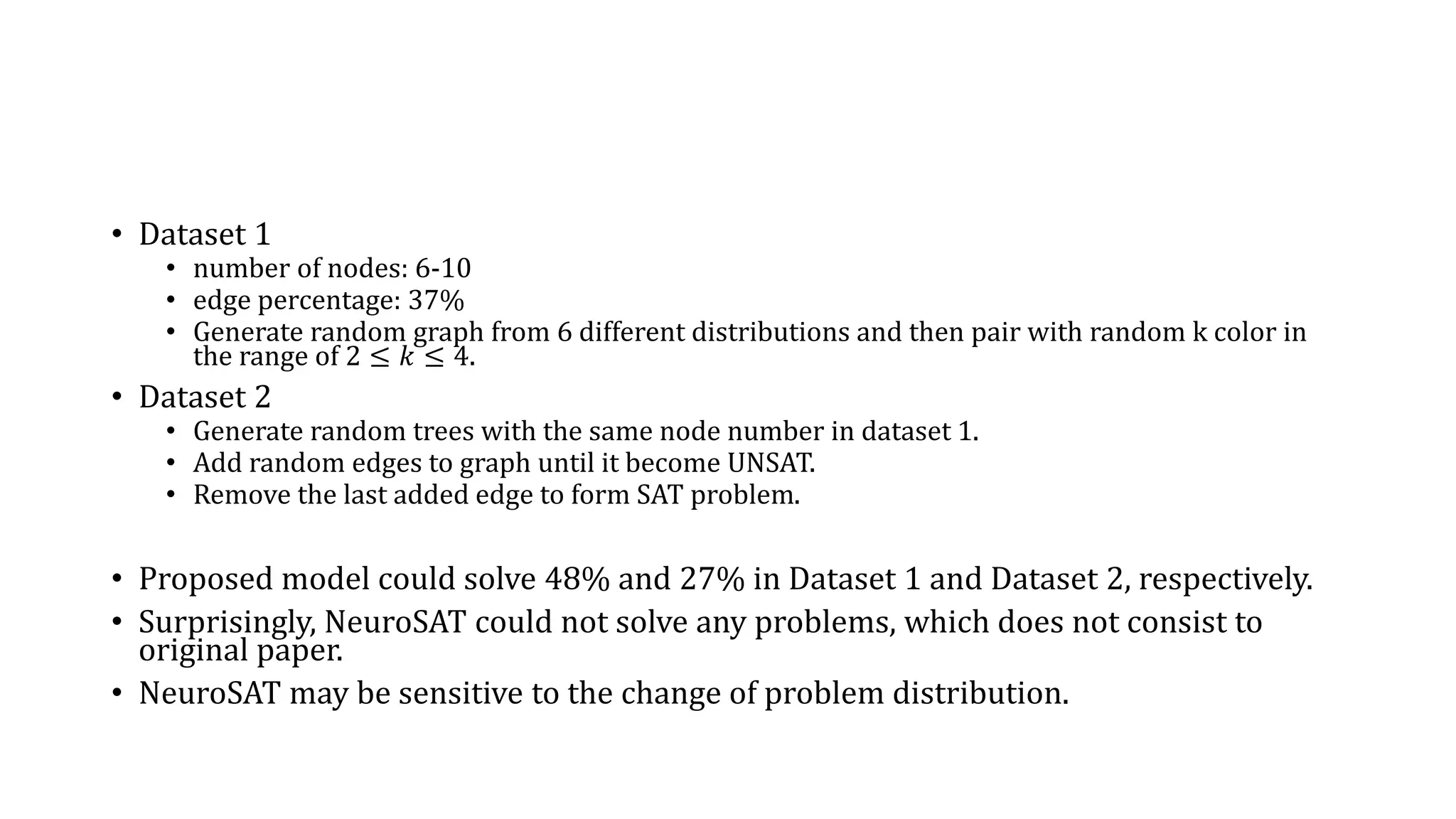

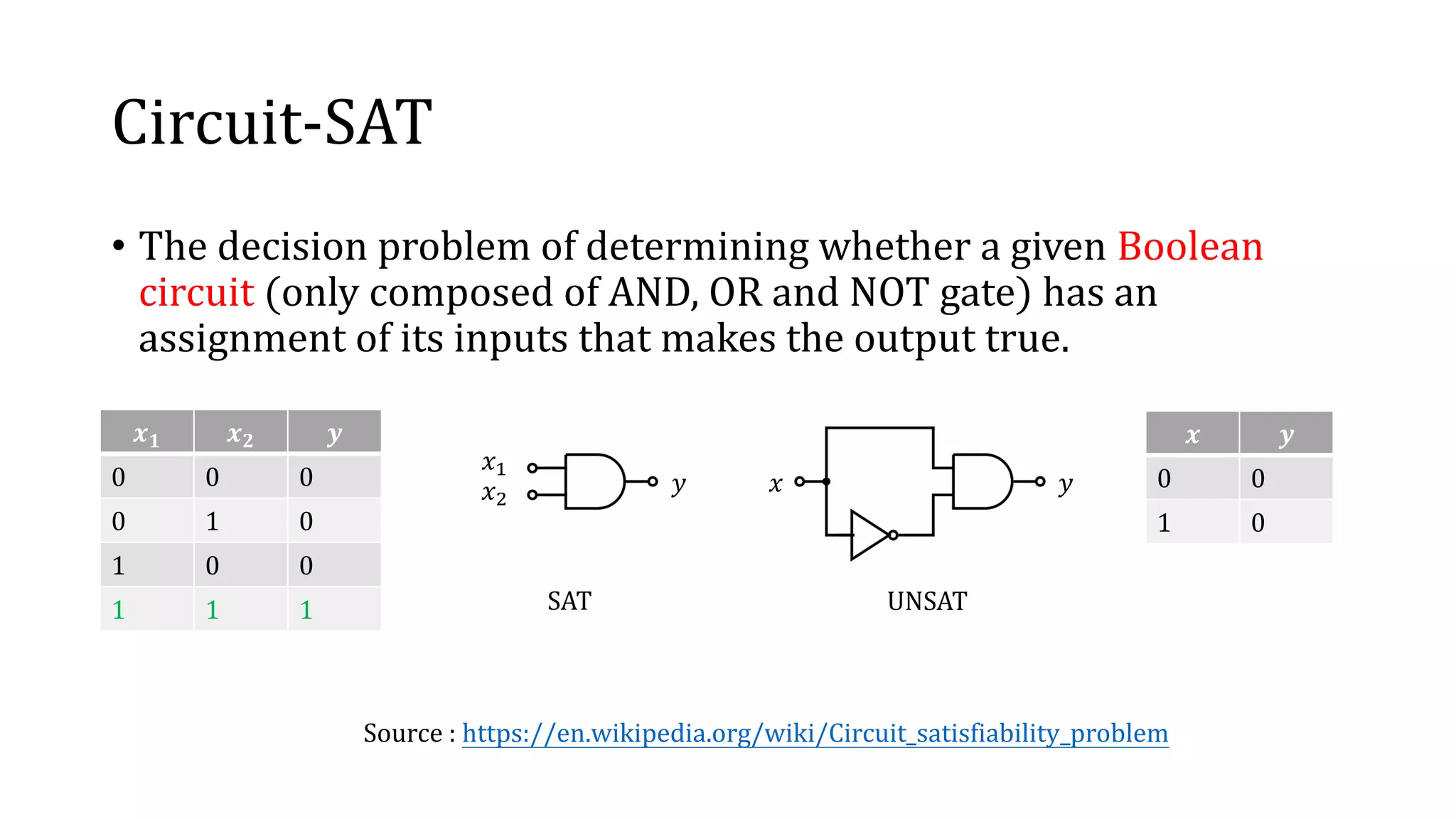

![Solver Network

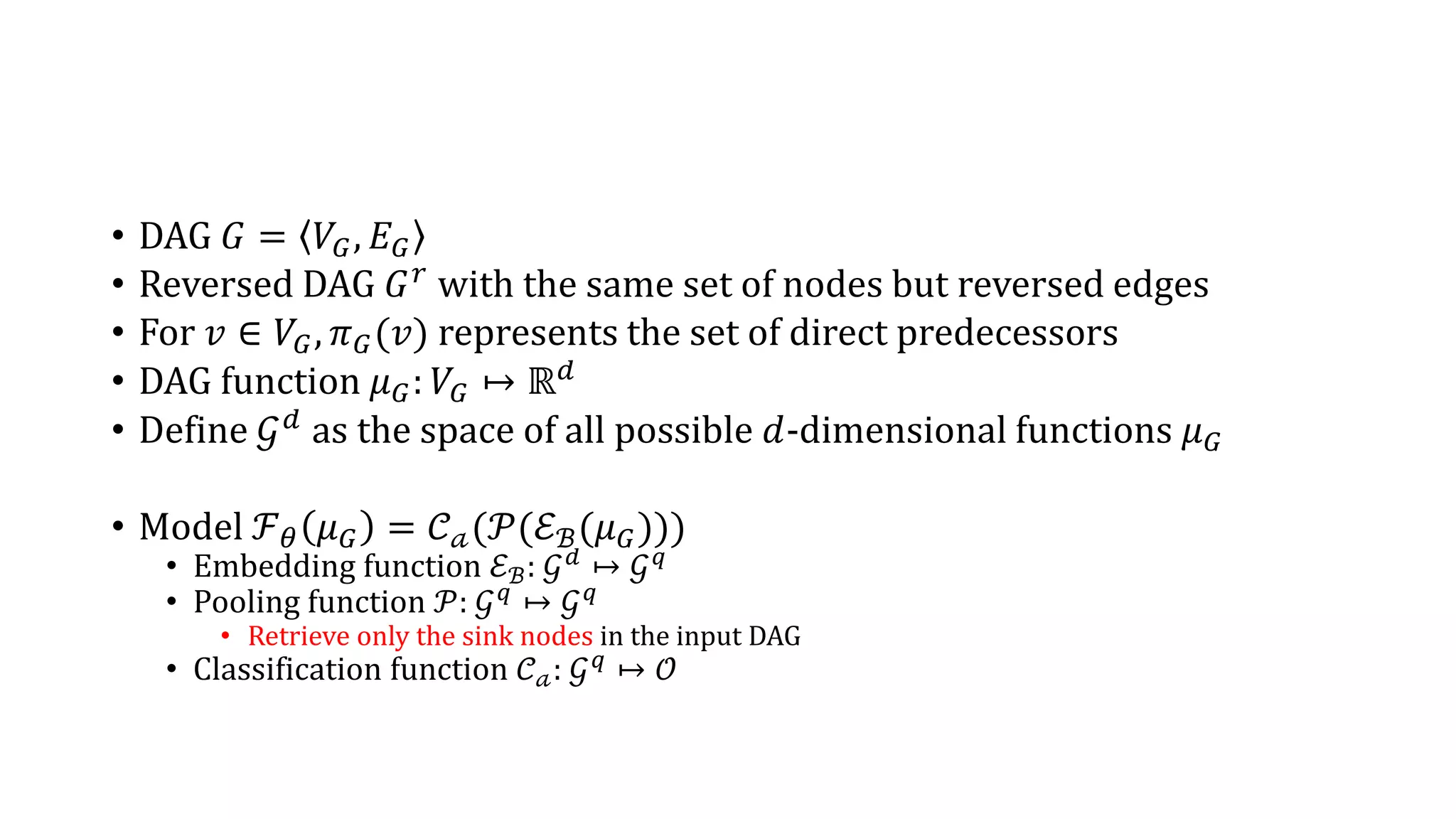

• Embedding function ℰℬ:

• multi-layer recursive embedding with interleaving forward and

reversed layers

• Last layer is a reversed layer so we can read the output from

Variable nodes.

• Output space encode the soft assignment (in range [0−1]) to the

corresponding variable node in the input circuit.

• ℱ 𝜃 acts as policy network.

Pooling: Retrieve only the sink nodes](https://image.slidesharecdn.com/learningtosolvecircuit-sat-190923020628/75/Paper-study-Learning-to-solve-circuit-sat-21-2048.jpg)
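The interleaved forward/reversed propagation and the pooling step can be illustrated on a toy circuit. This is a minimal, stdlib-only sketch, not the paper's model: the circuit, the scalar node states, the mean aggregation, the `tanh` update, the fixed weights `w_self`/`w_msg`, and the sigmoid readout are all hypothetical stand-ins for the learned embedding function and classifier.

```python
import math

# Hypothetical toy circuit: (x1 AND x2) OR (NOT x1).
# NODES is a topological order: every edge points from an earlier node to a later one.
NODES = ["x1", "x2", "and", "not", "or"]
EDGES = [("x1", "and"), ("x2", "and"), ("x1", "not"), ("and", "or"), ("not", "or")]
VARIABLES = ["x1", "x2"]
# Hypothetical initial node features: one scalar per node, based on its gate type.
INIT = {"x1": 1.0, "x2": 1.0, "and": 0.5, "not": -0.5, "or": -1.0}


def propagate(order, neighbors, state, w_self=0.8, w_msg=0.6):
    """One recursive DAG layer. Nodes are visited in `order`, so each node sees
    the already-updated states of its `neighbors` (predecessors in a forward
    layer, successors in a reversed layer)."""
    new = {}
    for v in order:
        nbrs = neighbors[v]
        msg = sum(new[u] for u in nbrs) / len(nbrs) if nbrs else 0.0
        new[v] = math.tanh(w_self * state[v] + w_msg * msg)
    return new


def soft_assignment(n_layers=4):
    assert n_layers % 2 == 0, "use an even count so the last layer is reversed"
    preds = {v: [u for u, w in EDGES if w == v] for v in NODES}
    succs = {v: [w for u, w in EDGES if u == v] for v in NODES}
    state = dict(INIT)
    for layer in range(n_layers):
        if layer % 2 == 0:  # forward layer: from the inputs toward the output
            state = propagate(NODES, preds, state)
        else:  # reversed layer: from the output back toward the inputs
            state = propagate(NODES[::-1], succs, state)
    # Pooling: keep only the variable nodes, which are the sinks of the reversed DAG.
    pooled = {v: state[v] for v in VARIABLES}
    # Hypothetical readout: a sigmoid maps each pooled state to a soft value in (0, 1).
    return {v: 1.0 / (1.0 + math.exp(-2.0 * h)) for v, h in pooled.items()}
```

Calling `soft_assignment()` returns a dict mapping each variable node to a soft value in $(0, 1)$. Ending on a reversed layer matters because only then has information from the whole circuit flowed back into the variable nodes before they are read out.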

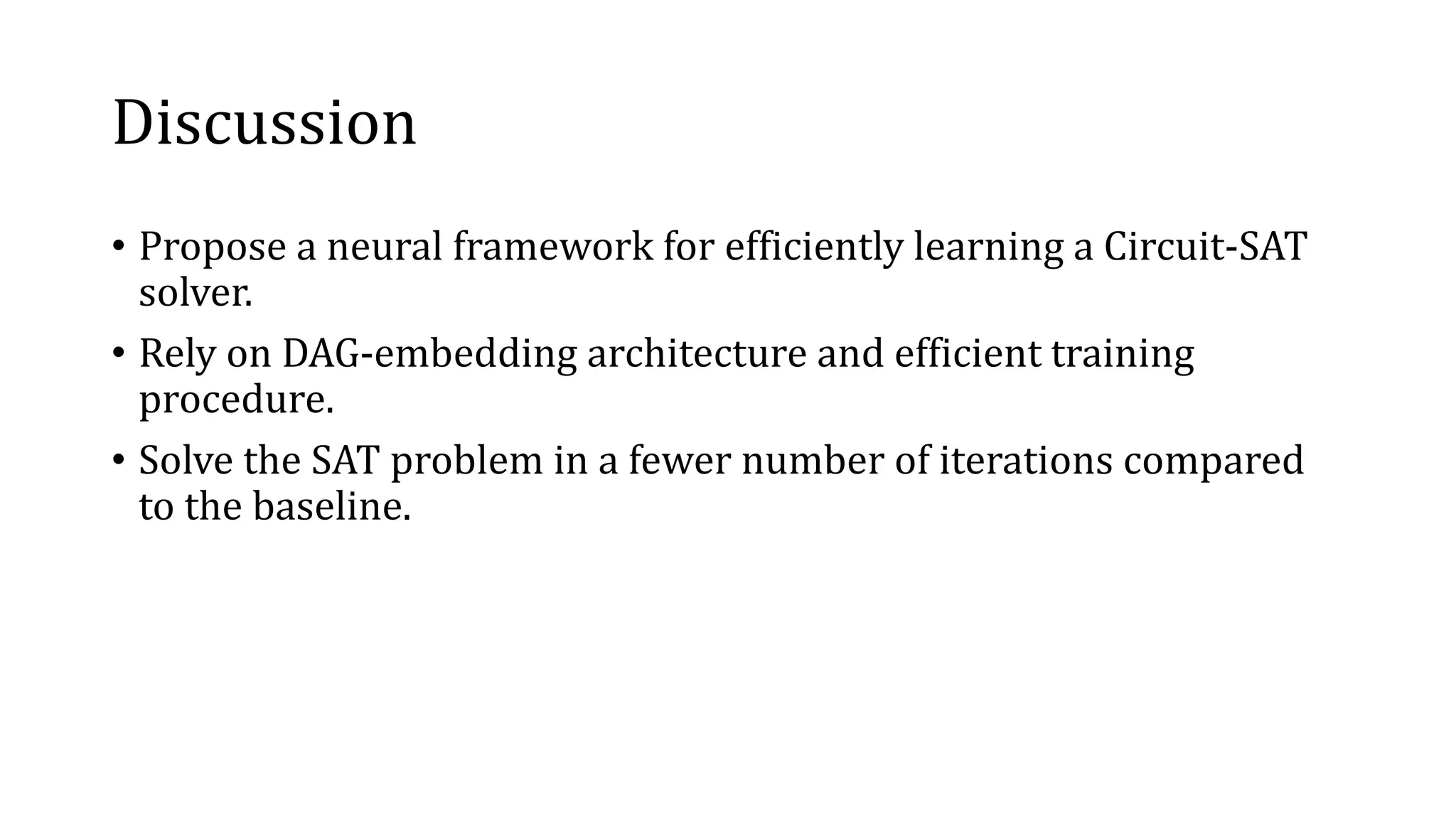

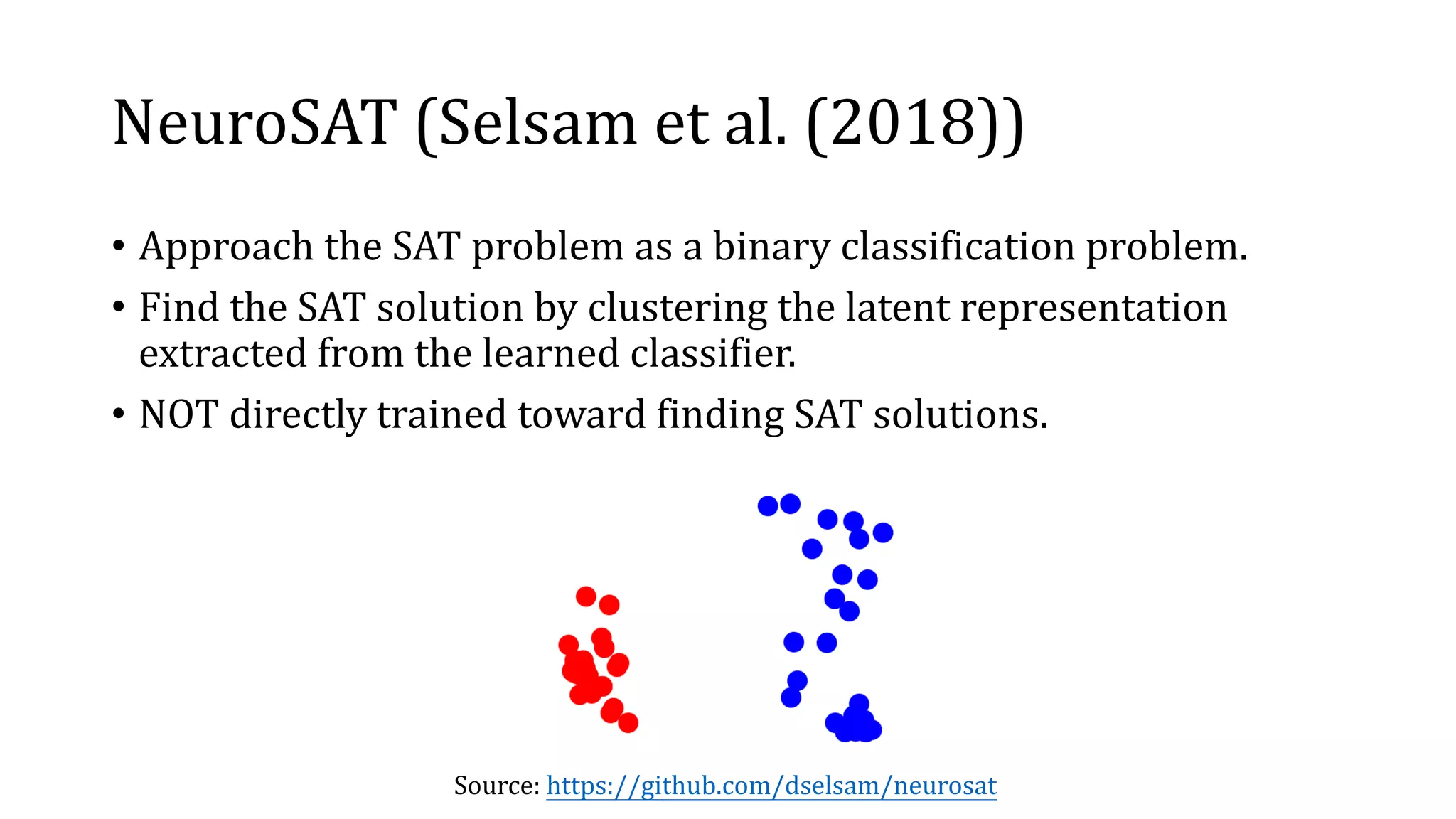

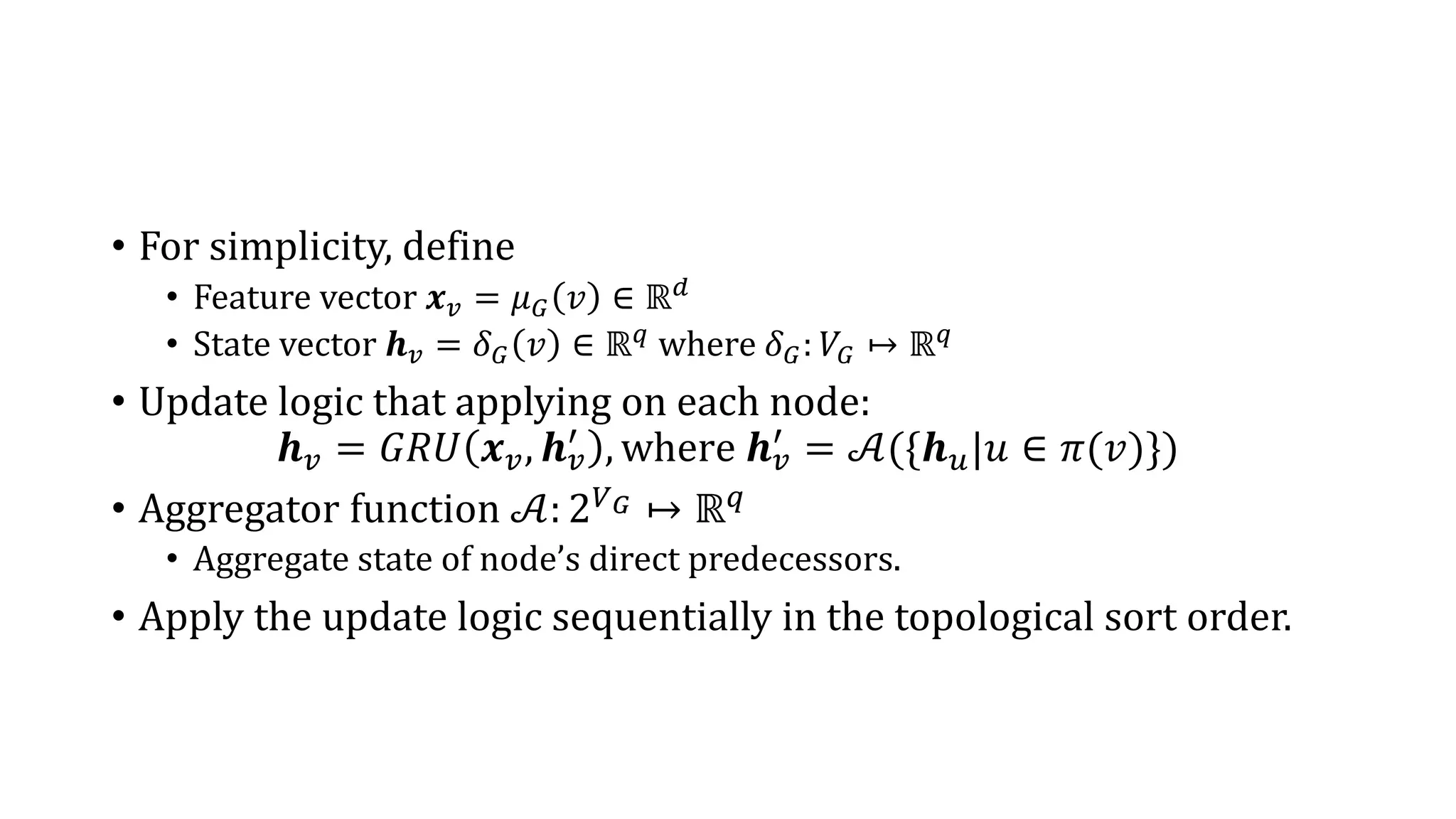

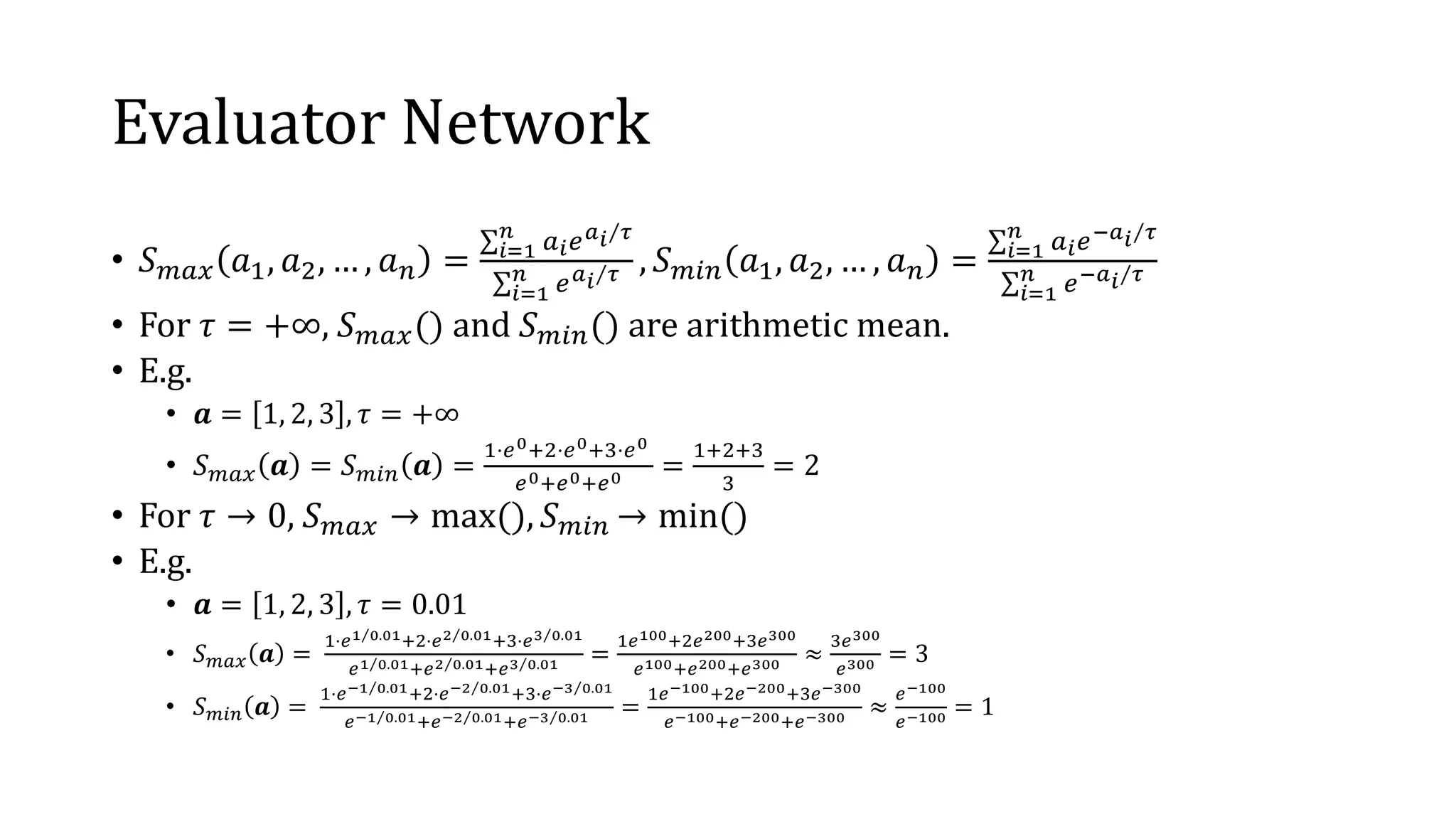

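![Optimization](https://image.slidesharecdn.com/learningtosolvecircuit-sat-190923020628/75/Paper-study-Learning-to-solve-circuit-sat-24-2048.jpg)

• $\mathcal{S}_\theta : \mathcal{G} \mapsto [0, 1]$, defined as $\mathcal{S}_\theta(\mu_G) = \mathcal{R}_G(\mathcal{F}_\theta(\mu_G))$.

• The policy network produces an assignment, which is fed to the reward network to evaluate the satisfiability of the circuit.

• Minimize the loss function

$$\mathcal{L}(s) = \frac{(1 - s)^\kappa}{(1 - s)^\kappa + s^\kappa}, \quad \text{where } s = \mathcal{S}_\theta(\mu_G) \text{ and } \kappa \ge 1.$$

• The loss can be read as the degree of unsatisfiability: it equals 1 at $s = 0$ and 0 at $s = 1$.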
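The composition $\mathcal{S}_\theta = \mathcal{R}_G \circ \mathcal{F}_\theta$ and the loss $\mathcal{L}(s)$ can be sketched as follows. The slide does not say how the reward network relaxes the logic gates; this sketch assumes AND and OR become a temperature-controlled smooth min and smooth max and NOT becomes $1 - x$. The toy circuit, the temperature `tau`, and the function names are hypothetical.

```python
import math


def smooth_max(values, tau):
    """Softmax-weighted average of `values`; approaches max(values) as tau -> 0."""
    m = max(values)  # shift for numerical stability (all exponents <= 0)
    weights = [math.exp((a - m) / tau) for a in values]
    return sum(w * a for w, a in zip(weights, values)) / sum(weights)


def smooth_min(values, tau):
    """Smooth min via smooth max of the negated values."""
    return -smooth_max([-a for a in values], tau)


def soft_eval(assignment, tau=0.1):
    """Hypothetical reward network R_G for the toy circuit (x1 AND x2) OR (NOT x1):
    AND -> smooth min, OR -> smooth max, NOT -> 1 - x. Output stays in [0, 1]."""
    x1, x2 = assignment["x1"], assignment["x2"]
    g_and = smooth_min([x1, x2], tau)
    g_not = 1.0 - x1
    return smooth_max([g_and, g_not], tau)


def loss(s, kappa=2.0):
    """L(s) = (1 - s)^kappa / ((1 - s)^kappa + s^kappa), with kappa >= 1."""
    a = (1.0 - s) ** kappa
    b = s ** kappa
    return a / (a + b)
```

For example, `soft_eval({"x1": 1.0, "x2": 1.0})` is close to 1 (the assignment satisfies the circuit), so its loss is close to 0, while `soft_eval({"x1": 1.0, "x2": 0.0})` is close to 0. Because every step is smooth in the soft assignment, gradients of $\mathcal{L}$ can flow back into the policy network.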

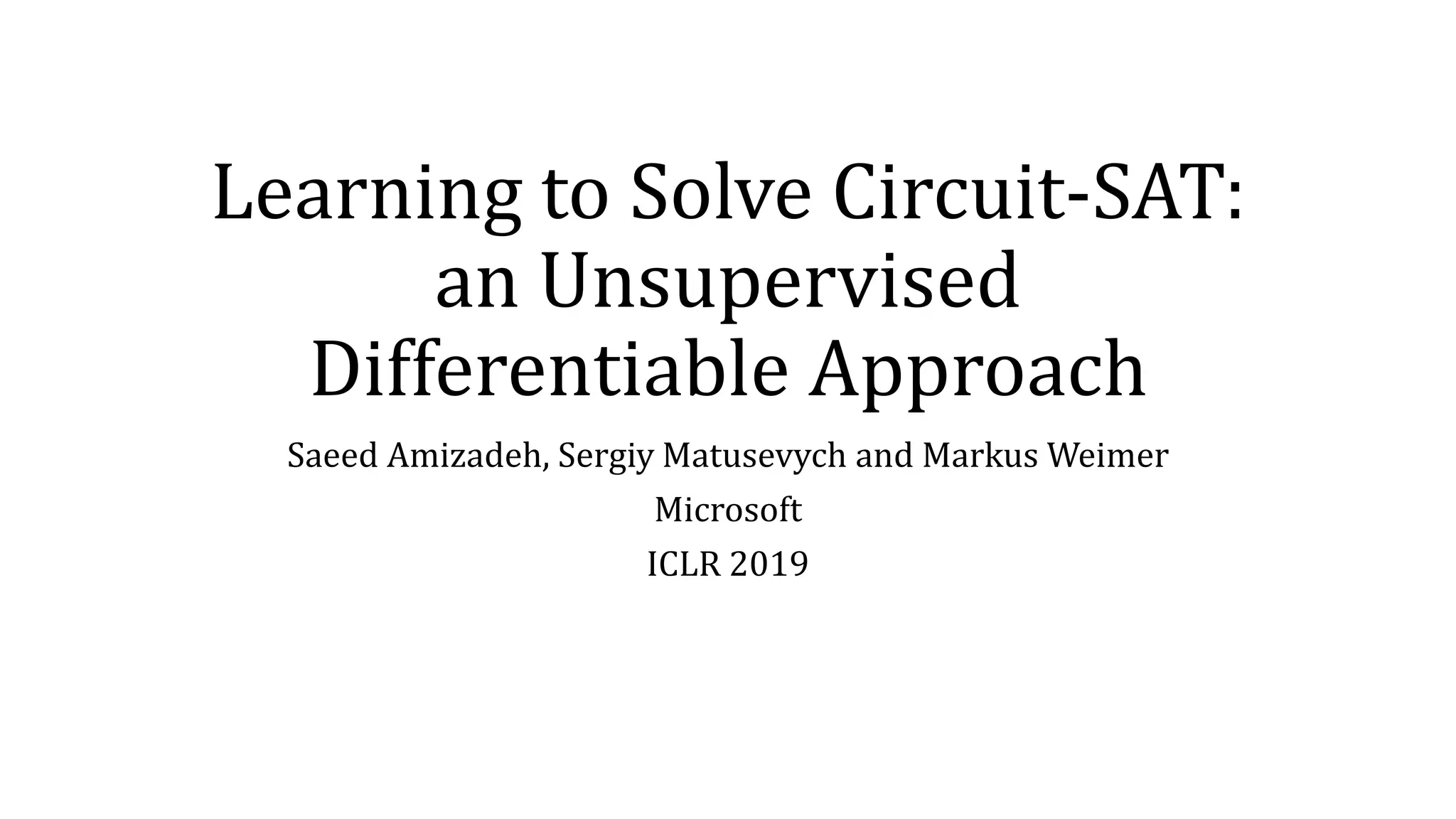

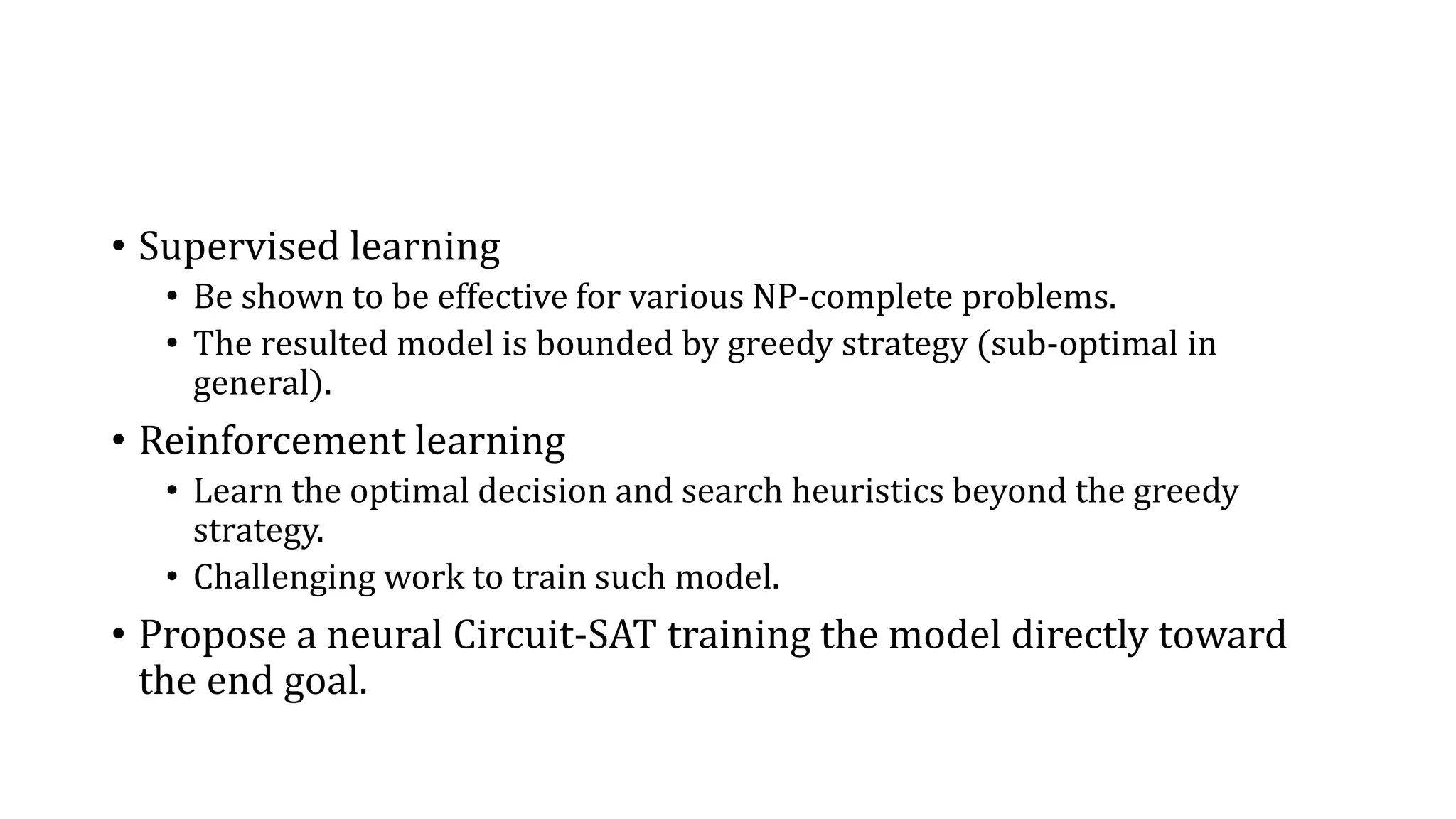

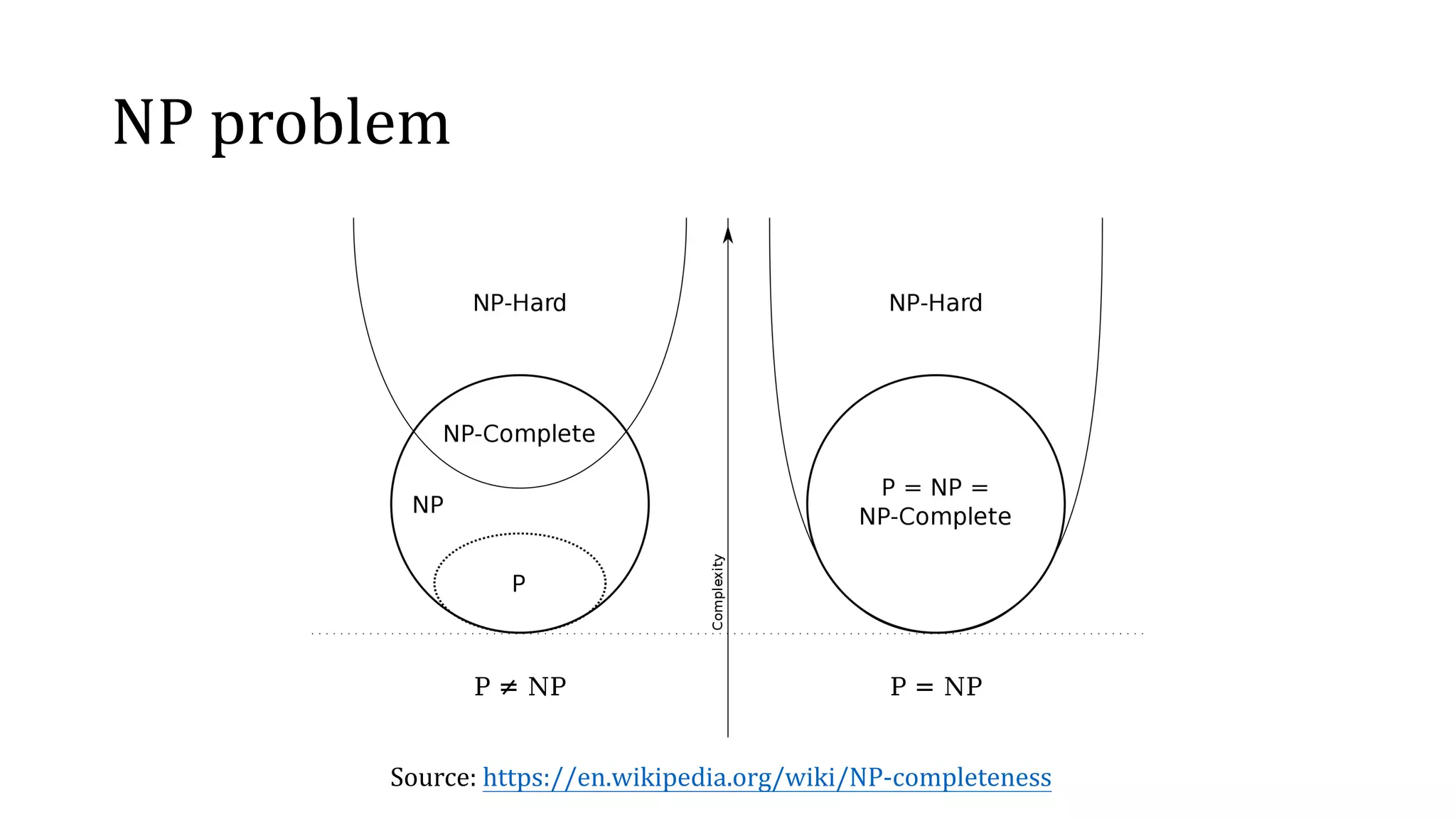

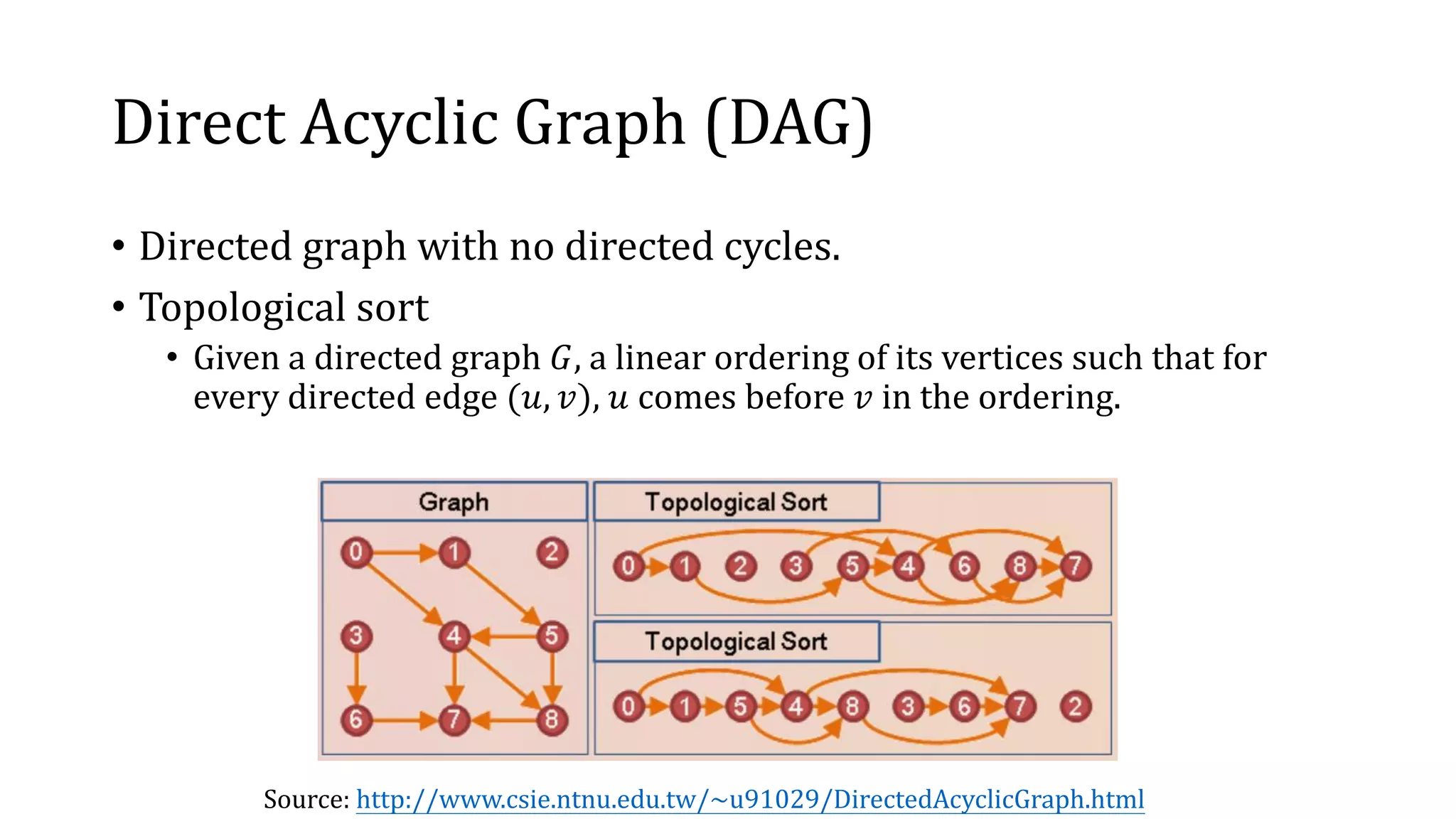

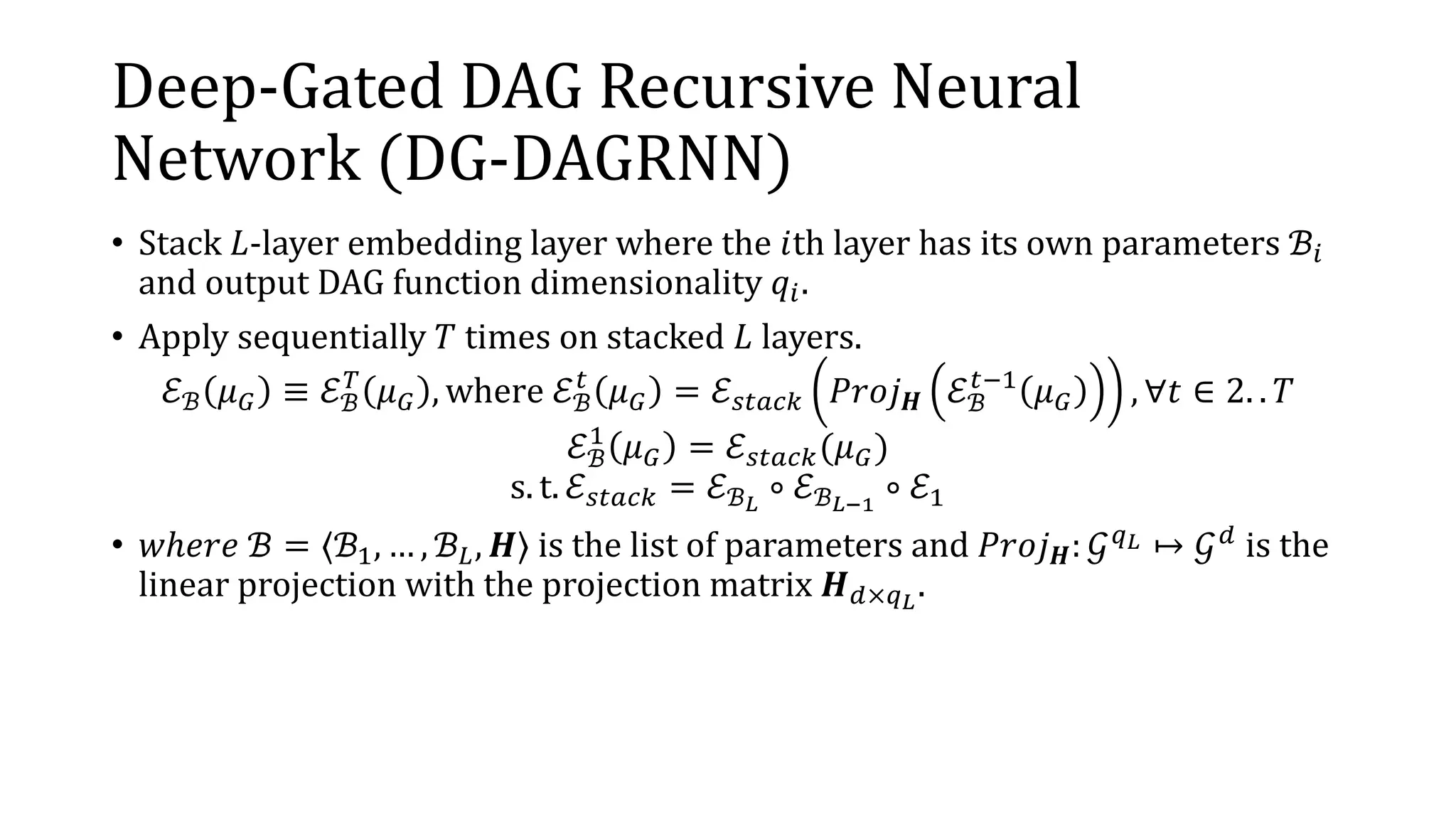

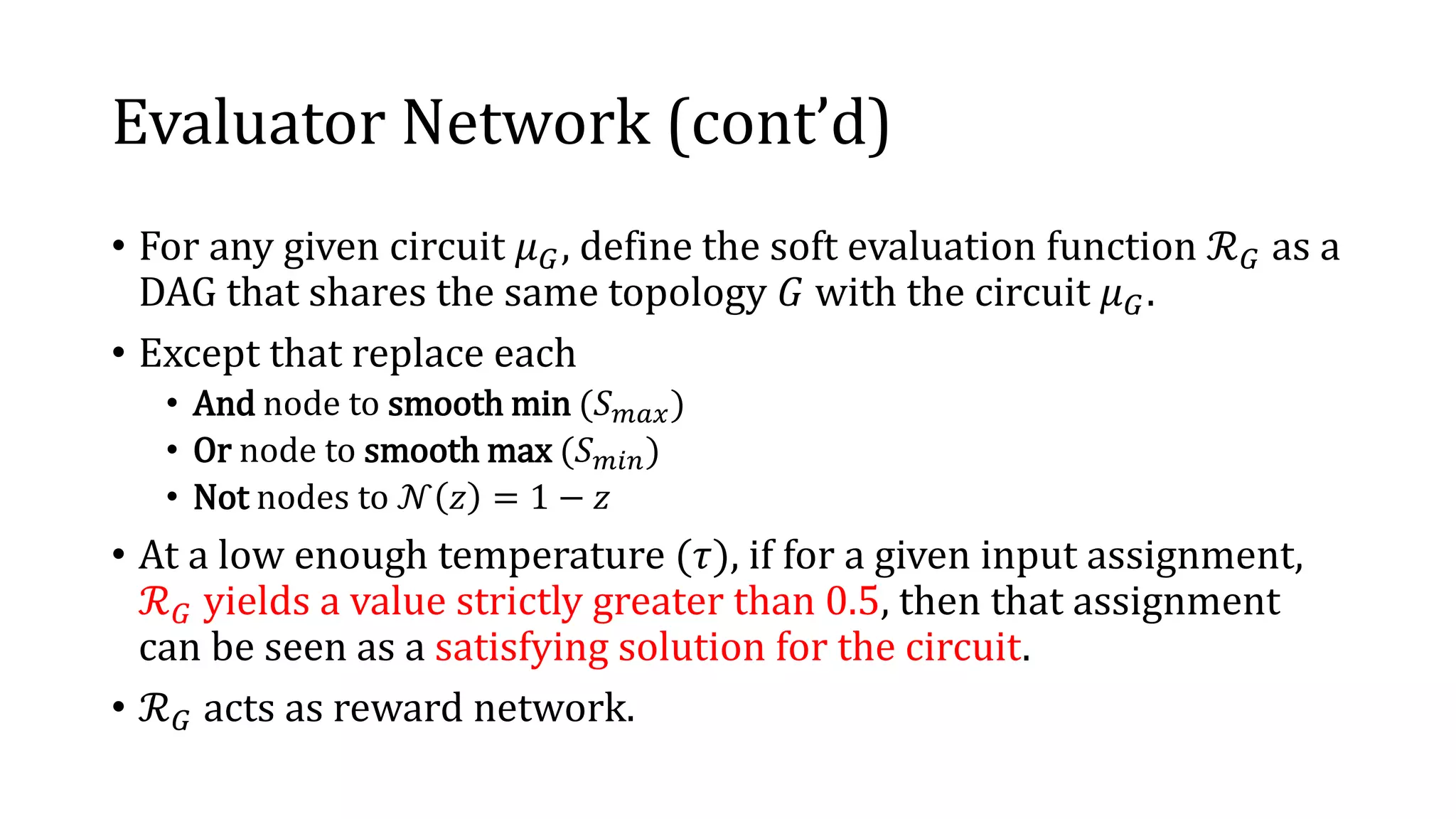

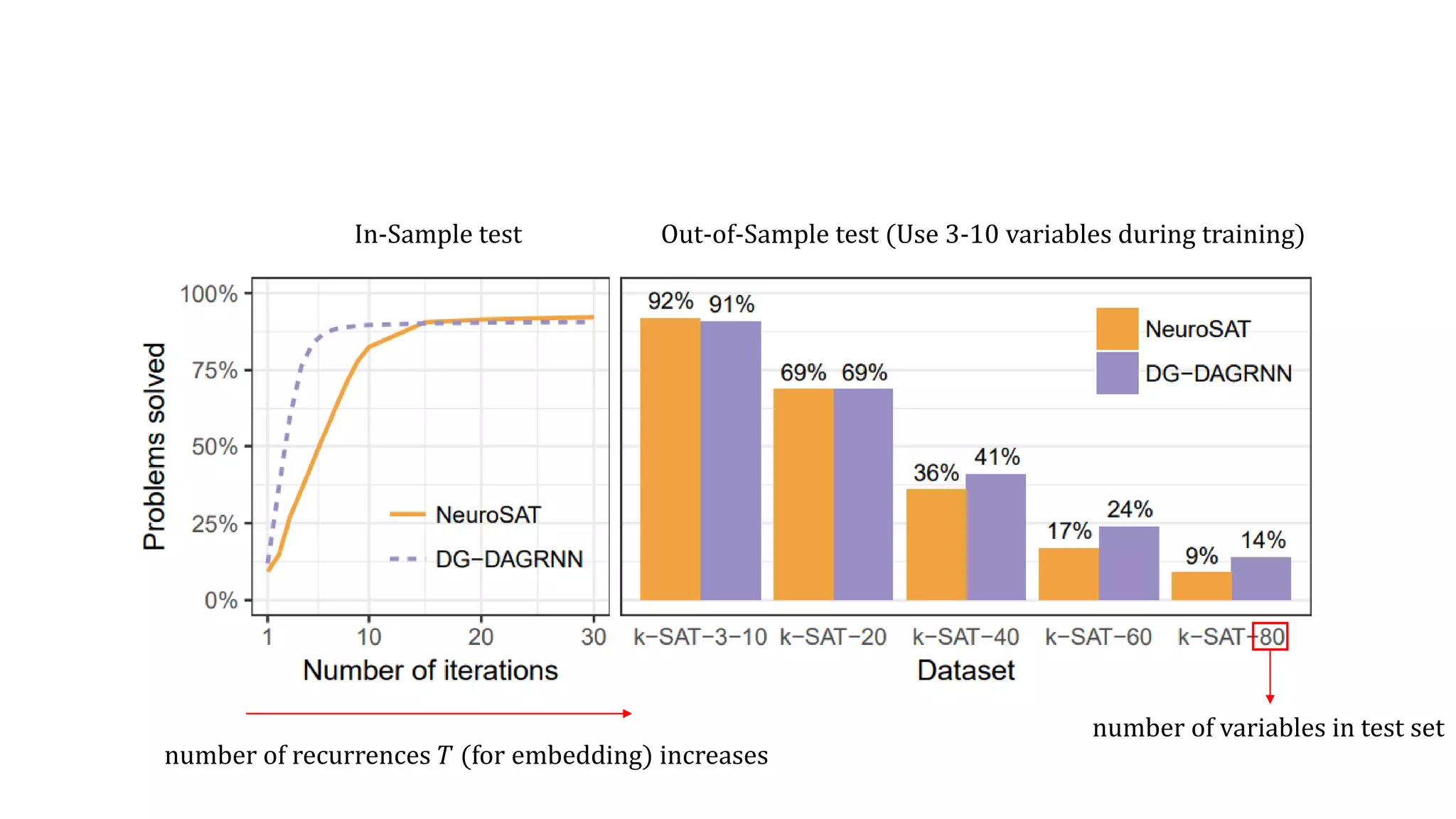

![Graph k-coloring (cont’d)](https://image.slidesharecdn.com/learningtosolvecircuit-sat-190923020628/75/Paper-study-Learning-to-solve-circuit-sat-29-2048.jpg)

• Given a graph with $N$ nodes and at most $k$ allowed colors,

• define $x_{ij}$ for $1 \le i \le N$ and $1 \le j \le k$, where $x_{ij} = 1$ indicates that the $i$-th node is colored with the $j$-th color.

• Transform the problem into a SAT problem with the Boolean CNF

$$\bigwedge_{i=1}^{N}\Big(\bigvee_{j=1}^{k} x_{ij}\Big) \wedge \Big[\bigwedge_{(p,q) \in E}\Big(\bigwedge_{j=1}^{k} (\neg x_{pj} \vee \neg x_{qj})\Big)\Big]$$

• Left set of clauses (red): ensure that each node of the graph takes at least one color.

• Right set of clauses (blue): ensure that neighboring nodes cannot take the same color, since $(\neg x_{pj} \vee \neg x_{qj})$ is false only when both $x_{pj}$ and $x_{qj}$ are 1:

| $x_{pj}$ | $x_{qj}$ | $\neg x_{pj} \vee \neg x_{qj}$ |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |
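The CNF construction for graph $k$-coloring can be sketched with DIMACS-style integer literals (a positive integer is a variable, a negative one its negation). The numbering `var(i, j, k) = (i - 1) * k + j`, the function names, and the brute-force search (suitable only for tiny graphs) are illustrative choices. Note that the encoding does not forbid a node from taking several colors; any satisfying assignment still gives a valid coloring by keeping one true color per node.

```python
from itertools import product


def var(i, j, k):
    """Positive integer id for x_ij (1-based node i, 1-based color j)."""
    return (i - 1) * k + j


def coloring_cnf(n, k, edges):
    """Clauses as lists of signed ints (negative = negated literal)."""
    clauses = []
    # Left clauses: each node takes at least one color, (x_i1 OR ... OR x_ik).
    for i in range(1, n + 1):
        clauses.append([var(i, j, k) for j in range(1, k + 1)])
    # Right clauses: neighbors never share color j, (NOT x_pj OR NOT x_qj).
    for p, q in edges:
        for j in range(1, k + 1):
            clauses.append([-var(p, j, k), -var(q, j, k)])
    return clauses


def satisfies(clauses, true_vars):
    """True if every clause has a literal made true by the set of true variables."""
    return all(any((lit > 0) == (abs(lit) in true_vars) for lit in c) for c in clauses)


def brute_force(n, k, edges):
    """Try all 2^(n*k) assignments; return the set of true variables or None."""
    clauses = coloring_cnf(n, k, edges)
    for bits in product([False, True], repeat=n * k):
        true_vars = {v + 1 for v, b in enumerate(bits) if b}
        if satisfies(clauses, true_vars):
            return true_vars
    return None
```

For a triangle, `brute_force(3, 2, [(1, 2), (2, 3), (1, 3)])` returns `None` (two colors are not enough), while the same call with `k = 3` finds an assignment. The CNF always has $N + |E| \cdot k$ clauses.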

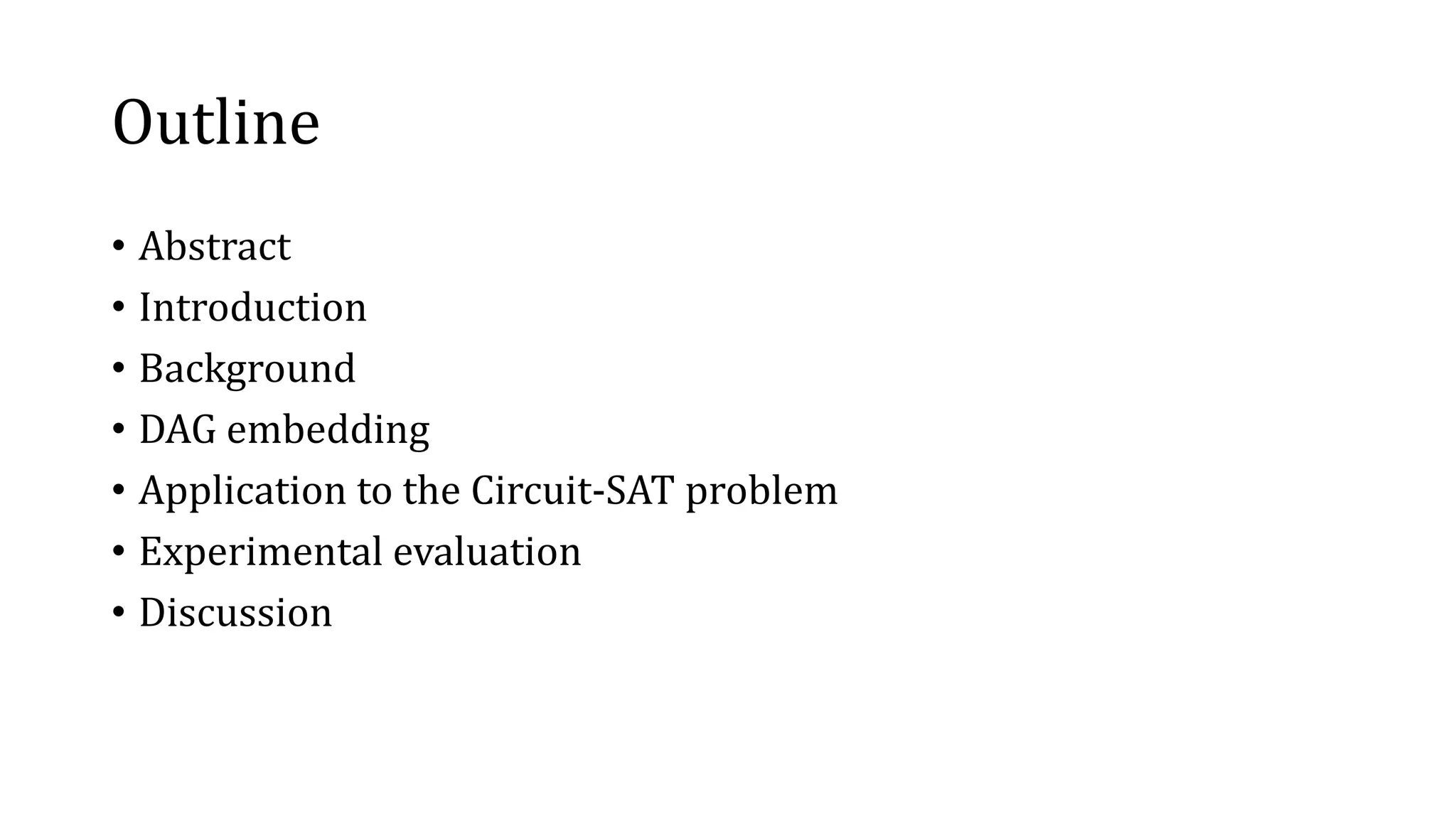

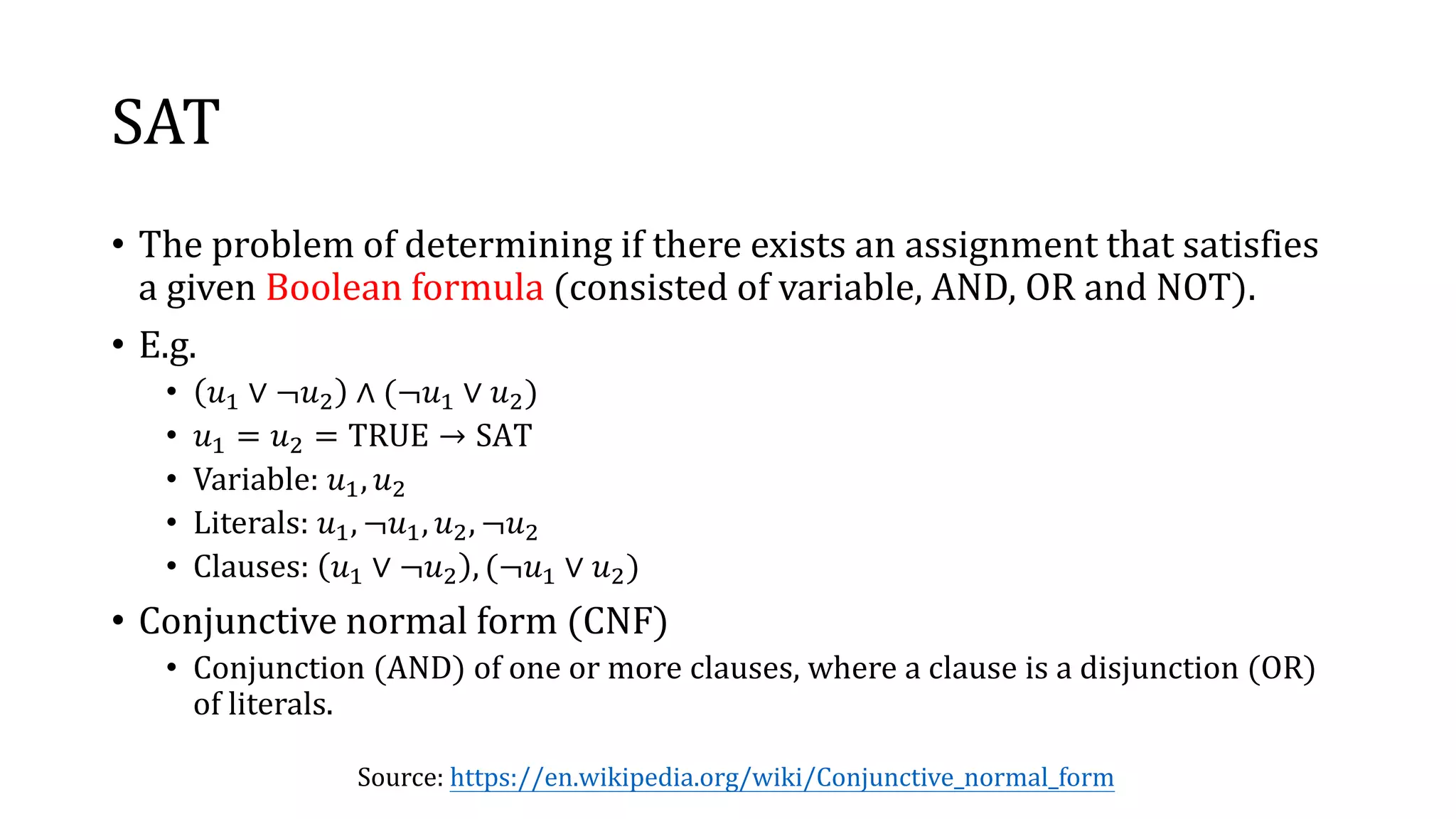

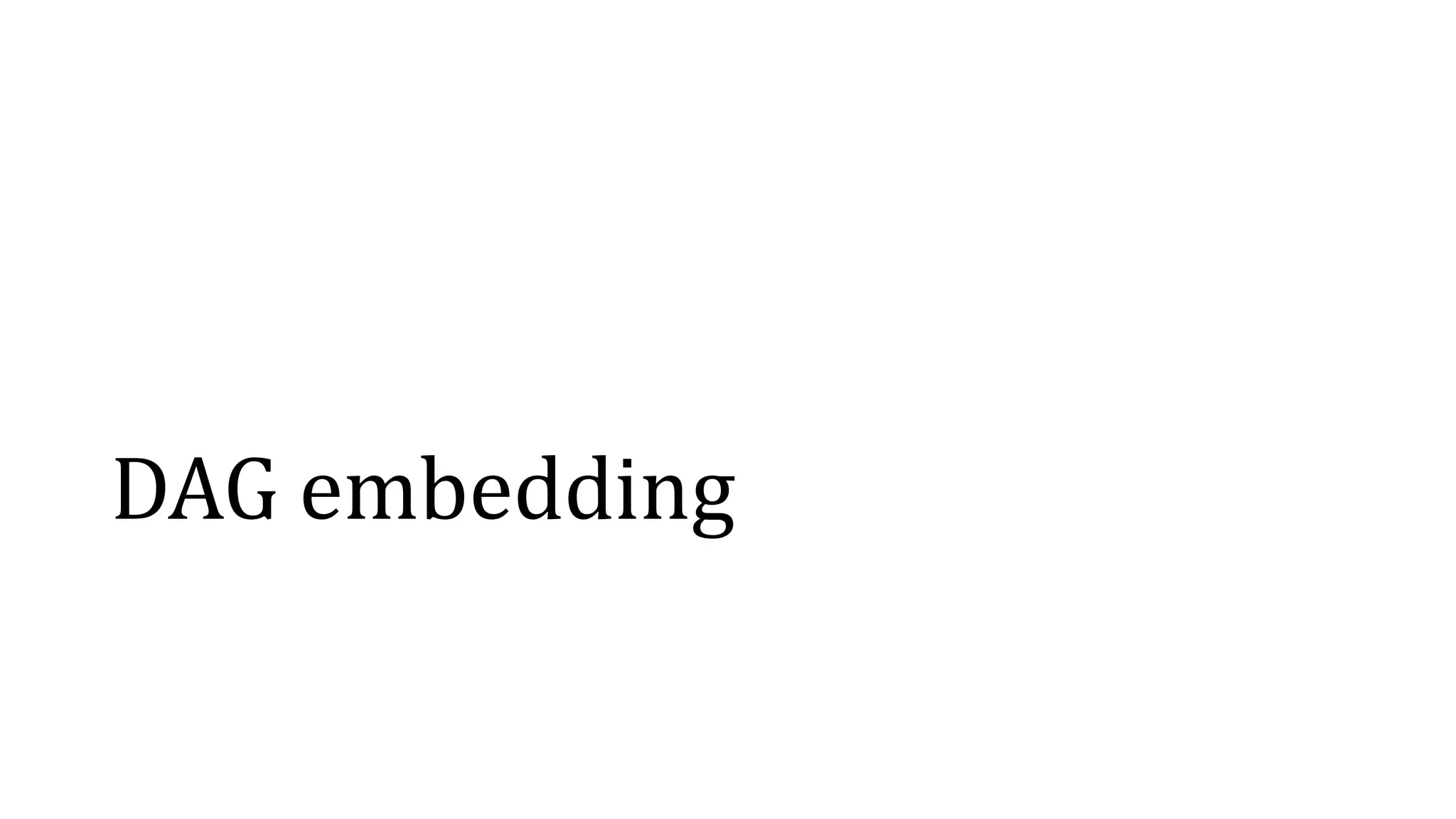

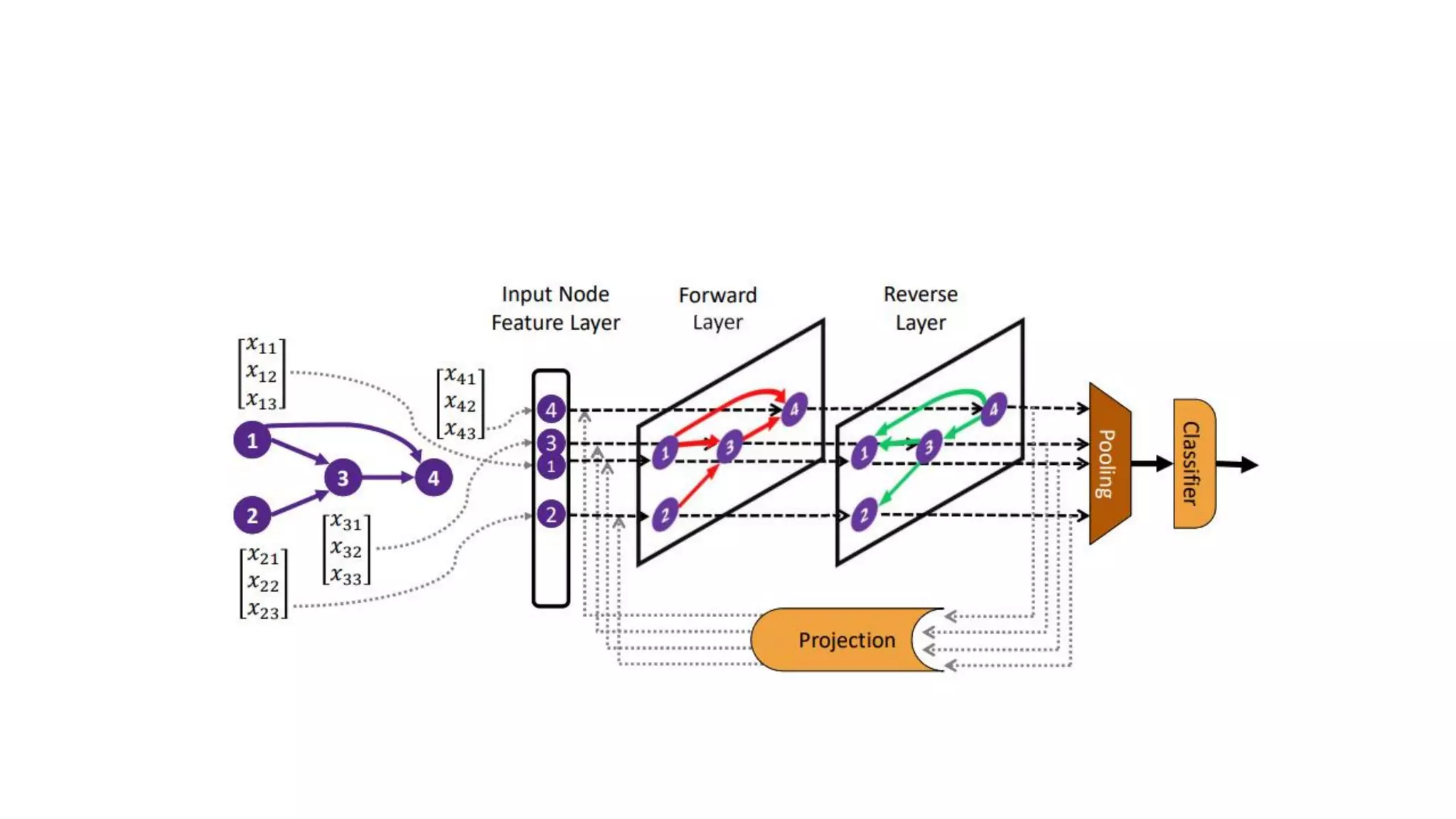

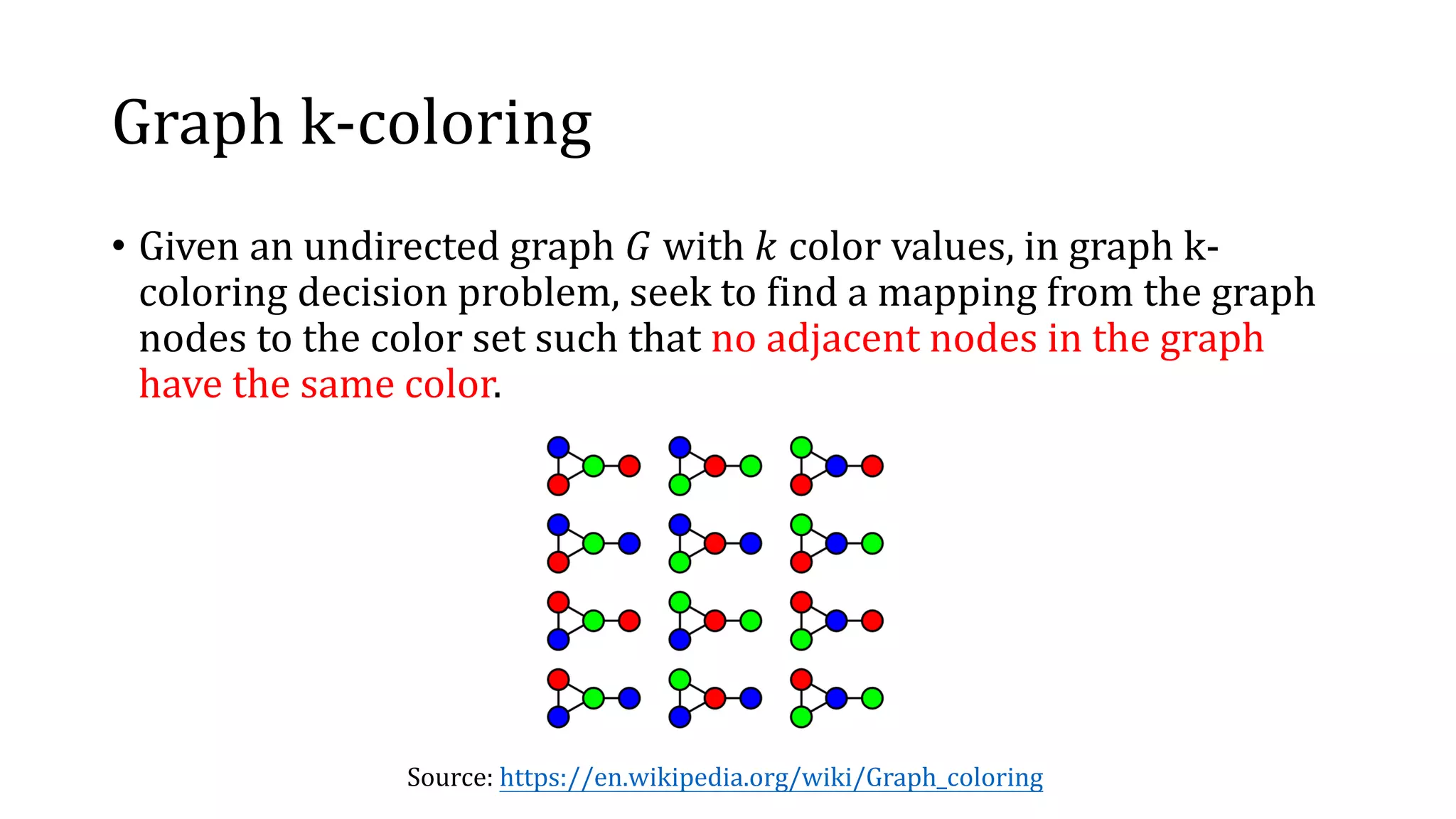

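![Example](https://image.slidesharecdn.com/learningtosolvecircuit-sat-190923020628/75/Paper-study-Learning-to-solve-circuit-sat-30-2048.jpg)

Example assignment of $k = 3$ colors to a graph with nodes 1 to 4, written with the variables $x_{ij}$ of the CNF for graph $k$-coloring (T = true, F = false):

| Node | blue | green | red |
|---|---|---|---|
| 1 | T | F | F |
| 2 | F | F | T |
| 3 | F | T | F |
| 4 | F | F | T |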
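The example assignment can be checked against both sets of clauses and decoded into one color per node with a short sketch. The slide's edge set is not recoverable from the text, so `EDGES` below is a hypothetical graph chosen for illustration; the color order (blue, green, red) follows the table columns, and the helper names are illustrative.

```python
COLORS = ["blue", "green", "red"]  # color index j = 1, 2, 3, as in the table columns

# Assignment from the example table: TABLE[i][j - 1] is the truth value of x_ij.
TABLE = {
    1: [True, False, False],
    2: [False, False, True],
    3: [False, True, False],
    4: [False, False, True],
}

# HYPOTHETICAL edge set: the slide's actual edges are not recoverable from the text.
EDGES = [(1, 2), (1, 3), (2, 3), (3, 4)]


def check(table, edges):
    """Evaluate the coloring CNF on a truth table of x_ij values."""
    k = len(COLORS)
    # Left clauses: every node has at least one true color.
    at_least_one = all(any(row) for row in table.values())
    # Right clauses: no edge has both endpoints true for the same color.
    no_conflict = all(
        not (table[p][j] and table[q][j]) for p, q in edges for j in range(k)
    )
    return at_least_one and no_conflict


def decode(table):
    """Keep the first true color of each node."""
    return {i: COLORS[row.index(True)] for i, row in table.items()}
```

With these edges, `check(TABLE, EDGES)` is true and `decode(TABLE)` gives node 1 blue, node 2 red, node 3 green, node 4 red. Adding the edge `(2, 4)` would make the check fail, since both endpoints are red.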