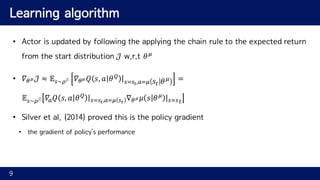

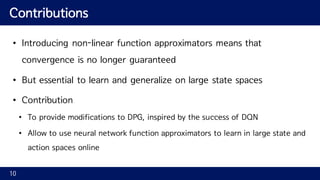

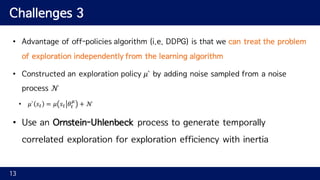

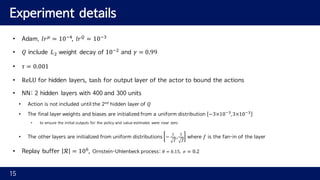

This document presents a model-free, off-policy actor-critic algorithm to learn policies in continuous action spaces using deep reinforcement learning. The algorithm is based on deterministic policy gradients and extends DQN to continuous action domains by using deep neural networks to approximate the actor and critic. Challenges addressed include ensuring samples are i.i.d. by using a replay buffer, stabilizing learning with a target network, normalizing observations with batch normalization, and exploring efficiently with an Ornstein-Uhlenbeck process. The algorithm is able to learn policies on high-dimensional continuous control tasks.

![Background

• Discounted future reward 𝑅" = ∑ 𝛾F9"

𝑟(𝑠F, 𝑎F)H

FI"

• Goal of RL is to learn a policy 𝜋 which maximizes the expected return

• from the start distribution 𝐽 = 𝔼LM ,NM~P,QM~R[𝑅7]

• Discounted state visitation distribution for a policy 𝜋: ρR

5](https://image.slidesharecdn.com/ddpgcontinuouscontrolwithdeepreinforcementlearning-160708110337/85/Continuous-control-with-deep-reinforcement-learning-DDPG-5-320.jpg)

![Background

• action-value function 𝑄R

𝑠", 𝑎" = 𝔼LMW",NMXY~P,QMXY~R[𝑅"|𝑠", 𝑎"]

• expected return after taking an action 𝑎" in state 𝑠" and following policy 𝜋

• Bellman equation

• 𝑄R

𝑠", 𝑎" = 𝔼LY,NYZ[~P[𝑟 𝑠", 𝑎" + 𝛾𝔼QYZ[~R 𝑄R

(𝑠"A7, 𝑎"A7) ]

• With deterministic policy 𝜇: 𝒮 → 𝒜

• 𝑄^

𝑠", 𝑎" = 𝔼LY,NYZ[~P[𝑟 𝑠", 𝑎" + 𝛾𝑄^

𝑠"A7, 𝜇(𝑠"A7 )]

6](https://image.slidesharecdn.com/ddpgcontinuouscontrolwithdeepreinforcementlearning-160708110337/85/Continuous-control-with-deep-reinforcement-learning-DDPG-6-320.jpg)

![Background

• Expectation only depends on the environment

• possible to learn 𝑄 𝝁

off-policy, where transitions are generated from

different stochastic policy 𝜷

• Q-learning with greedy policy 𝜇 𝑠 = arg max

f

𝑄 𝑠, 𝑎

• 𝐿 𝜃i

= 𝔼NY~jk,QY~l,NY~P[ 𝑄 𝑠", 𝑎" 𝜃i

− 𝑦"

n

]

• where 𝑦" = 𝑟 𝑠", 𝑎" + 𝛾𝑄(𝑠"A7, 𝜇(𝑠"A7)|𝜃i

)

• To scale Q-learning into large non-linear approximators:

• a replay buffer, a separate target network

7

(a commonly used off-policy algorithm)](https://image.slidesharecdn.com/ddpgcontinuouscontrolwithdeepreinforcementlearning-160708110337/85/Continuous-control-with-deep-reinforcement-learning-DDPG-7-320.jpg)

![Challenges 2

• When learning from low dimensional feature vector, observations may have

different physical units (i.e. positions and velocities)

• make it difficult to learn effectively and also to find hyper-parameters which generalize across

environments

• Use batch normalization [Ioffe & Szegedy, 2015] to normalize each dimension

across the samples in a minibatch to have unit mean and variance

• Also maintains a running average of the mean and variance for normalization during testing

• Use all layers of 𝜇 and 𝑄 prior to the action input

• Can train different units without needing to manually ensure the units were within a set range

12

(exploration or evaluation)](https://image.slidesharecdn.com/ddpgcontinuouscontrolwithdeepreinforcementlearning-160708110337/85/Continuous-control-with-deep-reinforcement-learning-DDPG-12-320.jpg)

![References

1. [Wang, 2015] Wang, Z., de Freitas, N., & Lanctot, M. (2015). Dueling network architectures for

deep reinforcement learning. arXiv preprint arXiv:1511.06581.

2. [Van, 2015] Van Hasselt, H., Guez, A., & Silver, D. (2015). Deep reinforcement learning with

double Q-learning. CoRR, abs/1509.06461.

3. [Schaul, 2015] Schaul, T., Quan, J., Antonoglou, I., & Silver, D. (2015). Prioritized experience

replay. arXiv preprint arXiv:1511.05952.

4. [Sutton, 1998] Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: An introduction(Vol.

1, No. 1). Cambridge: MIT press.

16](https://image.slidesharecdn.com/ddpgcontinuouscontrolwithdeepreinforcementlearning-160708110337/85/Continuous-control-with-deep-reinforcement-learning-DDPG-16-320.jpg)