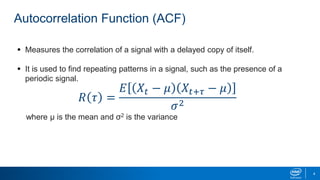

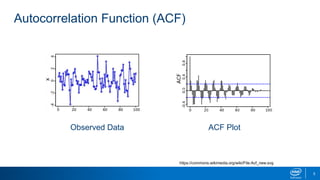

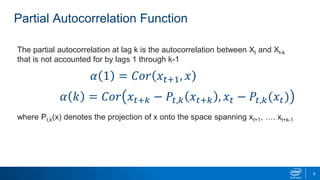

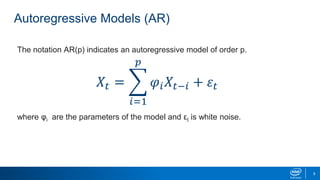

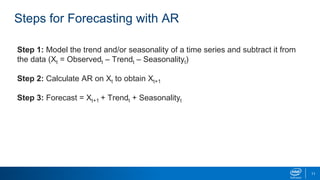

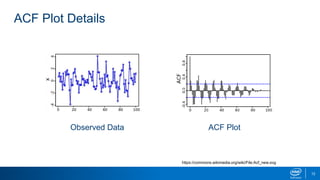

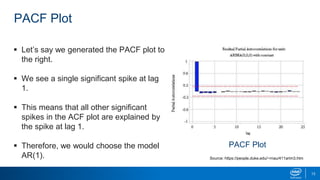

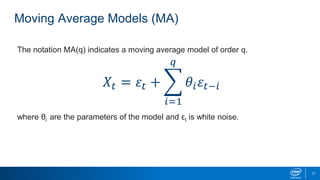

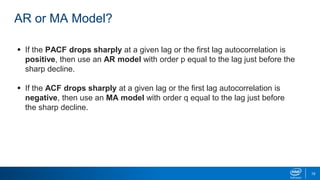

This document discusses autocorrelation models and their application in Python. It describes the autocorrelation function (ACF) and the partial autocorrelation function (PACF), and how they are used to identify autoregressive (AR) and moving average (MA) time series models. An AR model regresses the current value on its own prior values, while an MA model expresses the current value as a weighted sum of the current and prior noise terms. The document demonstrates how to read ACF and PACF plots to choose between the two (a PACF that cuts off after lag p points to an AR(p) model, and an ACF that cuts off after lag q points to an MA(q) model), and how to fit these models in Python.
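As a rough illustration of the identification step described above, the sketch below simulates one AR(1) and one MA(1) series, computes their sample ACF and PACF, and fits the AR coefficient by least squares. It is not the document's own code: the function names (`simulate_ar1`, `simulate_ma1`, `sample_acf`, `sample_pacf`, `fit_ar1`), the coefficient 0.7, the series length, and the seeds are all assumptions chosen for the example, and only the standard library is used so that it runs anywhere. In practice one would more likely reach for `statsmodels` (`acf` and `pacf` in `statsmodels.tsa.stattools`, and `ARIMA` in `statsmodels.tsa.arima.model`).

```python
import random


def simulate_ar1(phi, n, seed=0, burn_in=200):
    """AR(1): x_t = phi * x_{t-1} + e_t, with e_t ~ N(0, 1)."""
    rng = random.Random(seed)
    series, prev = [], 0.0
    for _ in range(n + burn_in):
        prev = phi * prev + rng.gauss(0.0, 1.0)
        series.append(prev)
    return series[burn_in:]  # drop the start-up transient


def simulate_ma1(theta, n, seed=0):
    """MA(1): x_t = e_t + theta * e_{t-1}, with e_t ~ N(0, 1)."""
    rng = random.Random(seed)
    e = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]
    return [e[t] + theta * e[t - 1] for t in range(1, n + 1)]


def sample_acf(x, max_lag):
    """Sample autocorrelations r_0 .. r_max_lag."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d)
    return [sum(d[t] * d[t - k] for t in range(k, n)) / c0
            for k in range(max_lag + 1)]


def sample_pacf(x, max_lag):
    """Sample partial autocorrelations via the Durbin-Levinson recursion."""
    r = sample_acf(x, max_lag)
    pacf, phi_prev = [1.0], []
    for k in range(1, max_lag + 1):
        num = r[k] - sum(phi_prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        # Update the order-k coefficients from the order-(k-1) ones.
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                    for j in range(k - 1)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


def fit_ar1(x):
    """Least-squares estimate of phi in a demeaned AR(1) model."""
    mean = sum(x) / len(x)
    d = [v - mean for v in x]
    return (sum(d[t] * d[t - 1] for t in range(1, len(d)))
            / sum(v * v for v in d[:-1]))


ar = simulate_ar1(0.7, 2000, seed=1)   # assumed example parameters
ma = simulate_ma1(0.7, 2000, seed=2)
band = 1.96 / len(ar) ** 0.5           # approximate 95% band around zero

ar_acf, ar_pacf = sample_acf(ar, 5), sample_pacf(ar, 5)
ma_acf, ma_pacf = sample_acf(ma, 5), sample_pacf(ma, 5)
phi_hat = fit_ar1(ar)

print("band   :", round(band, 3))
print("AR ACF :", [round(v, 2) for v in ar_acf])
print("AR PACF:", [round(v, 2) for v in ar_pacf])
print("MA ACF :", [round(v, 2) for v in ma_acf])
print("MA PACF:", [round(v, 2) for v in ma_pacf])
print("phi_hat:", round(phi_hat, 2))
```

For the AR(1) series, the ACF should decay gradually while the PACF should drop inside the band after lag 1. For the MA(1) series the pattern reverses: the ACF should cut off after lag 1 and the PACF should tail off. Fitting an MA model needs an iterative method such as maximum likelihood, which is one reason a library routine is the usual choice there.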