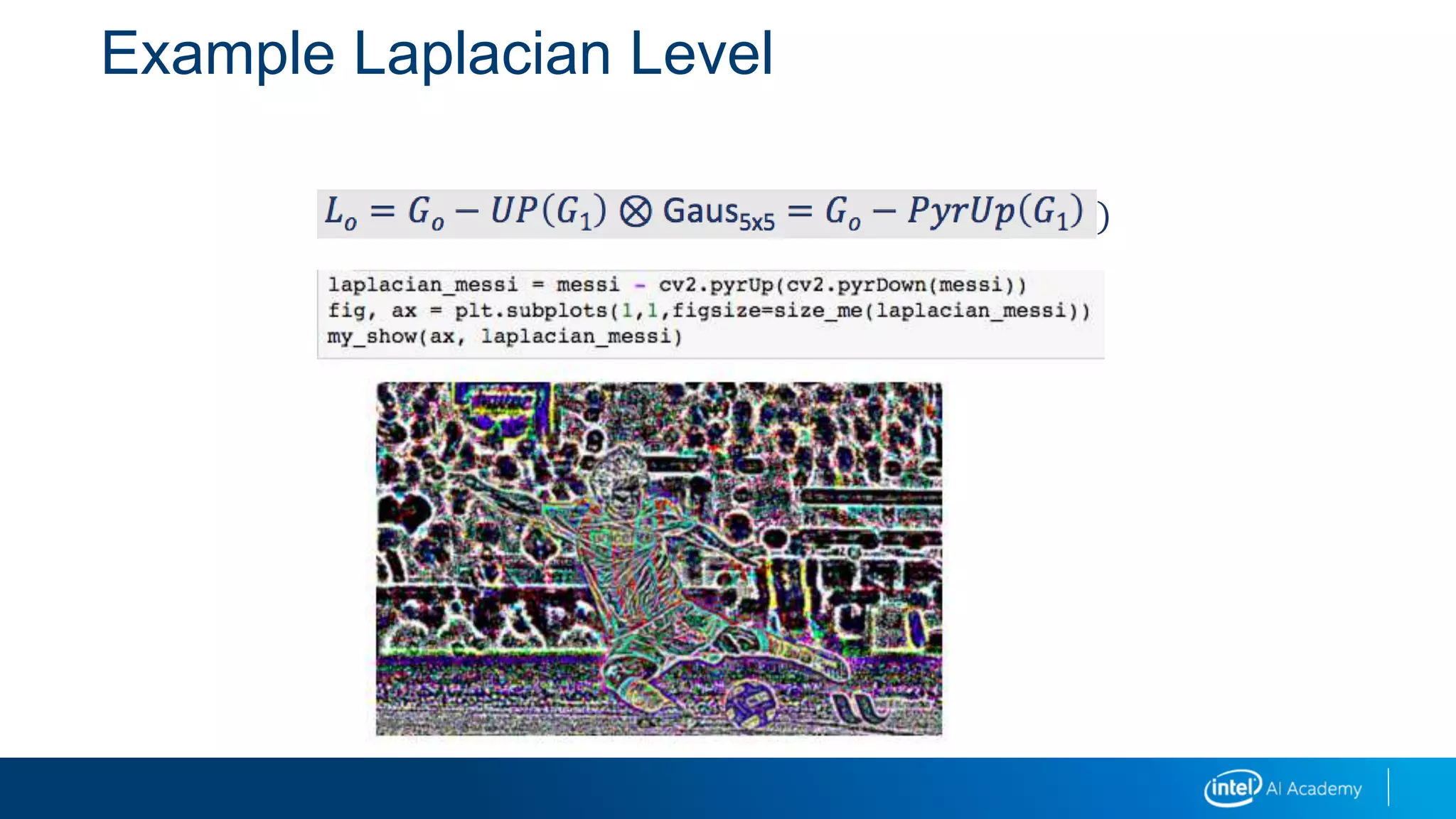

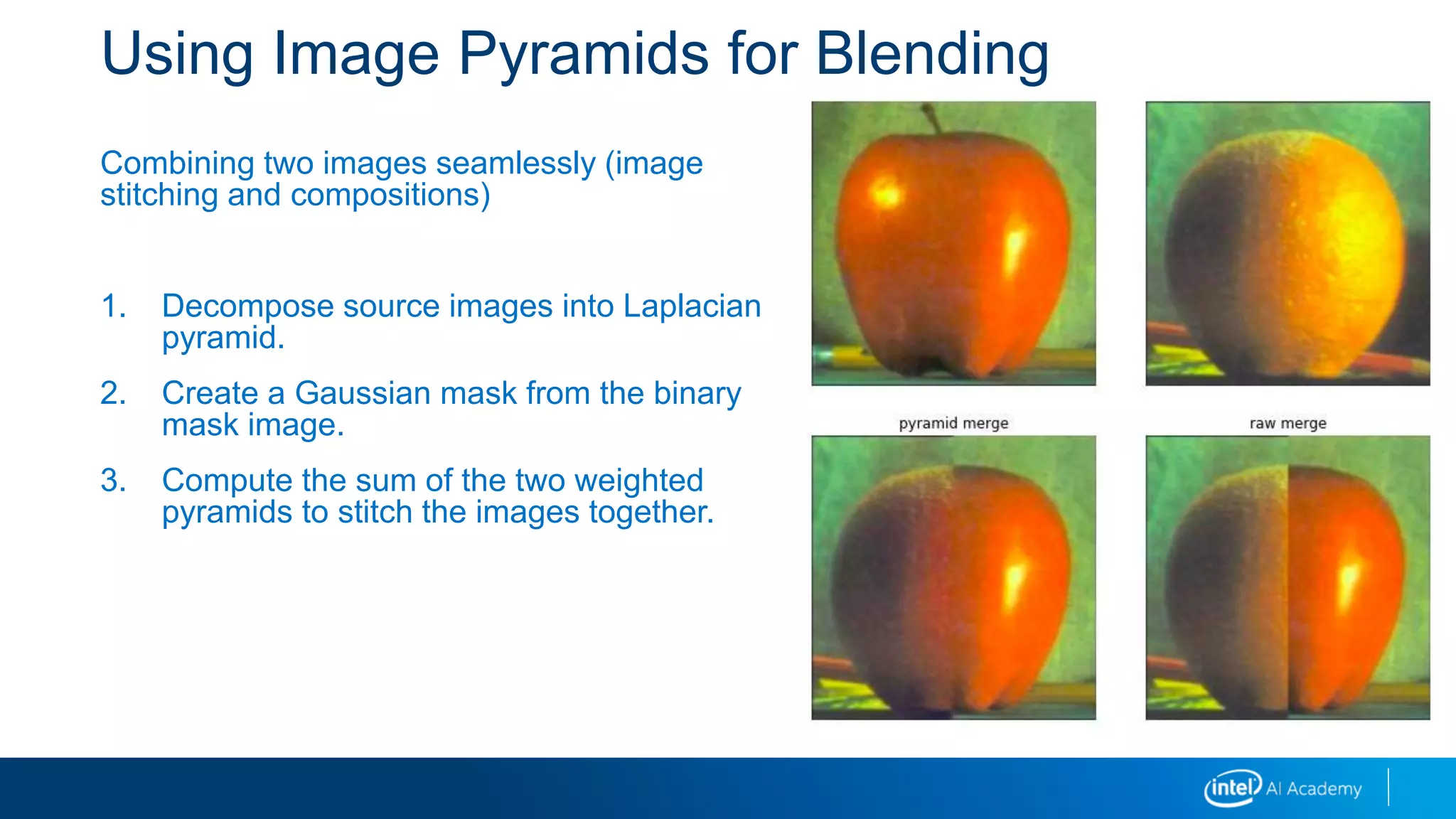

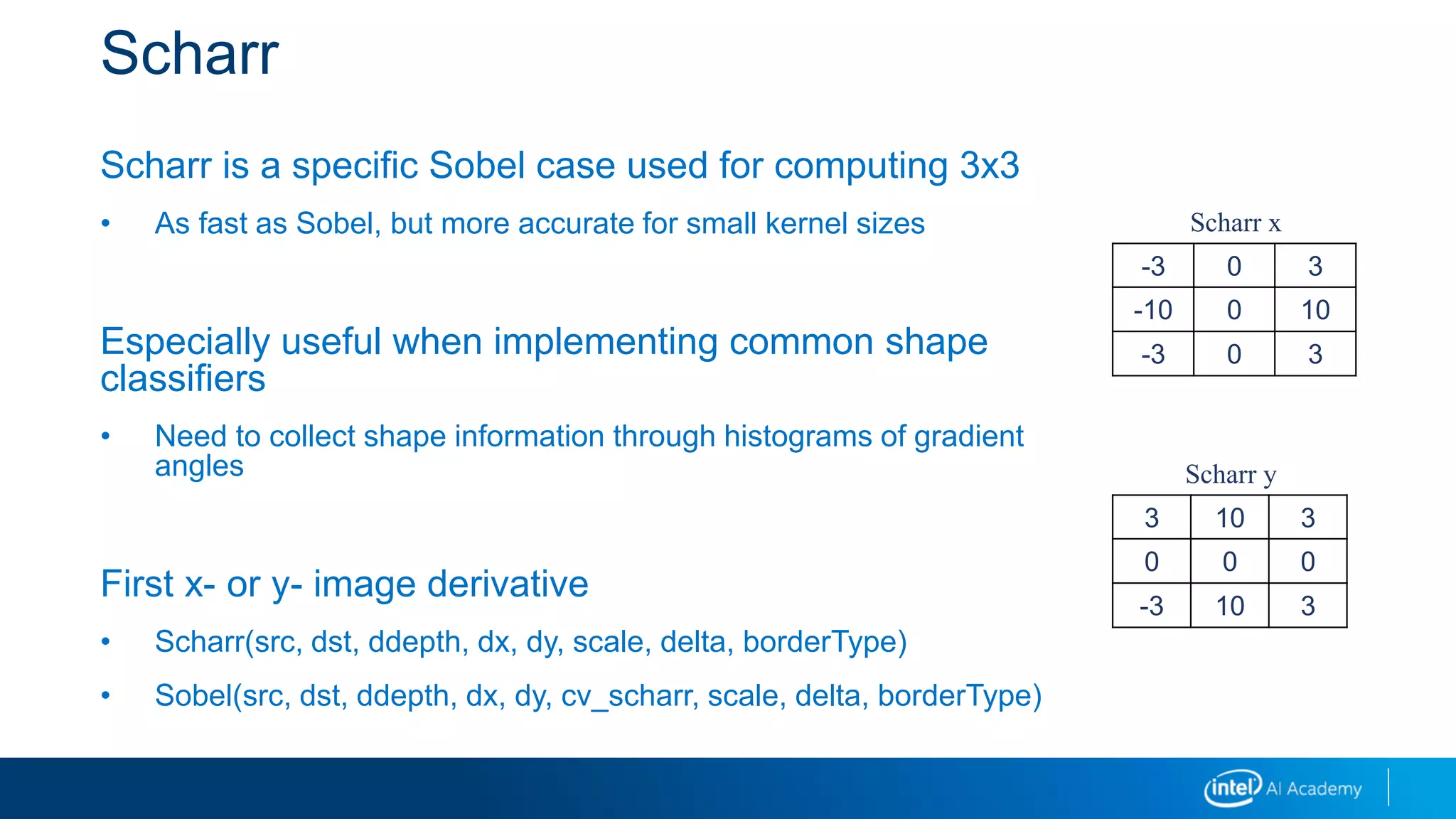

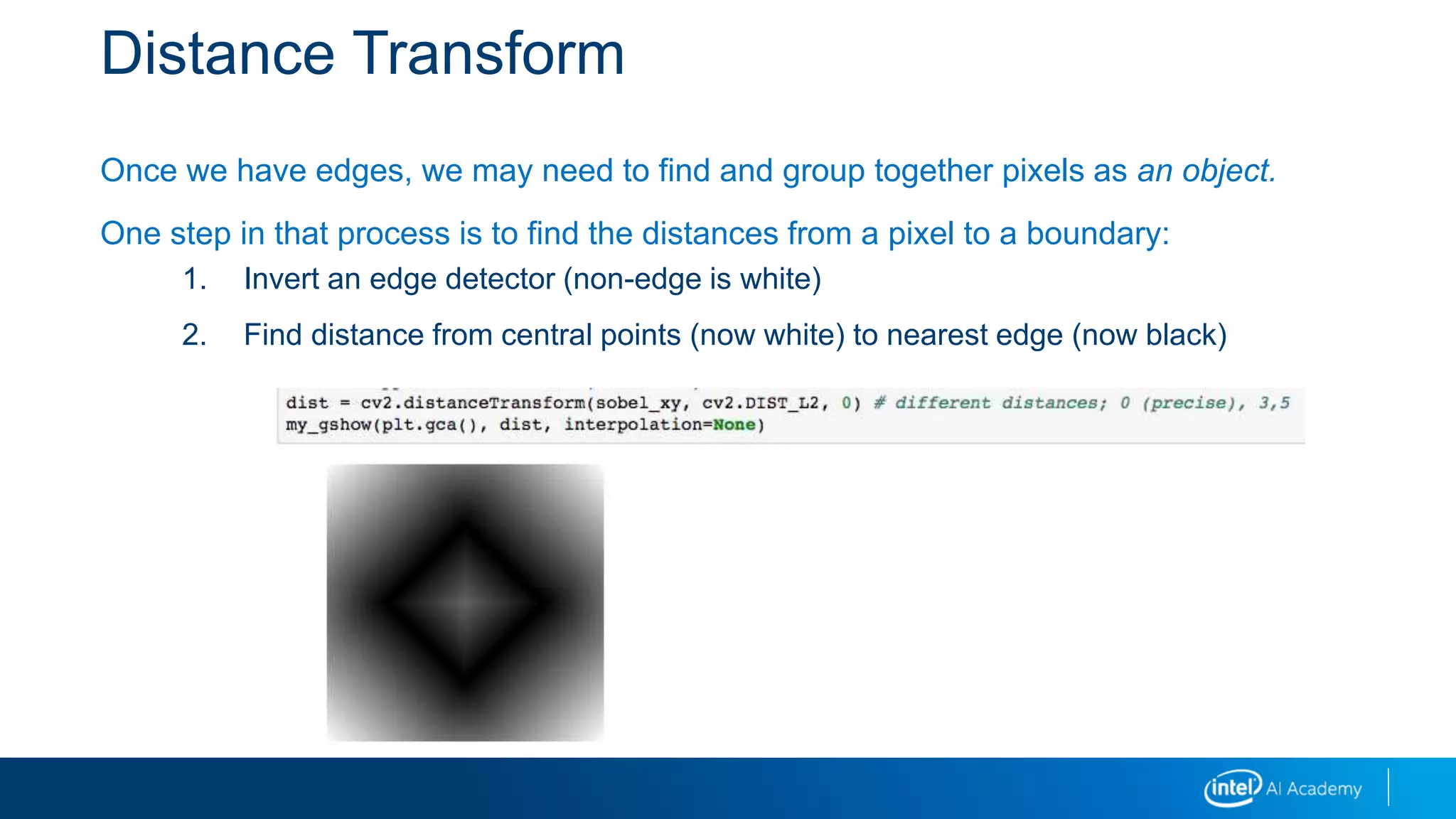

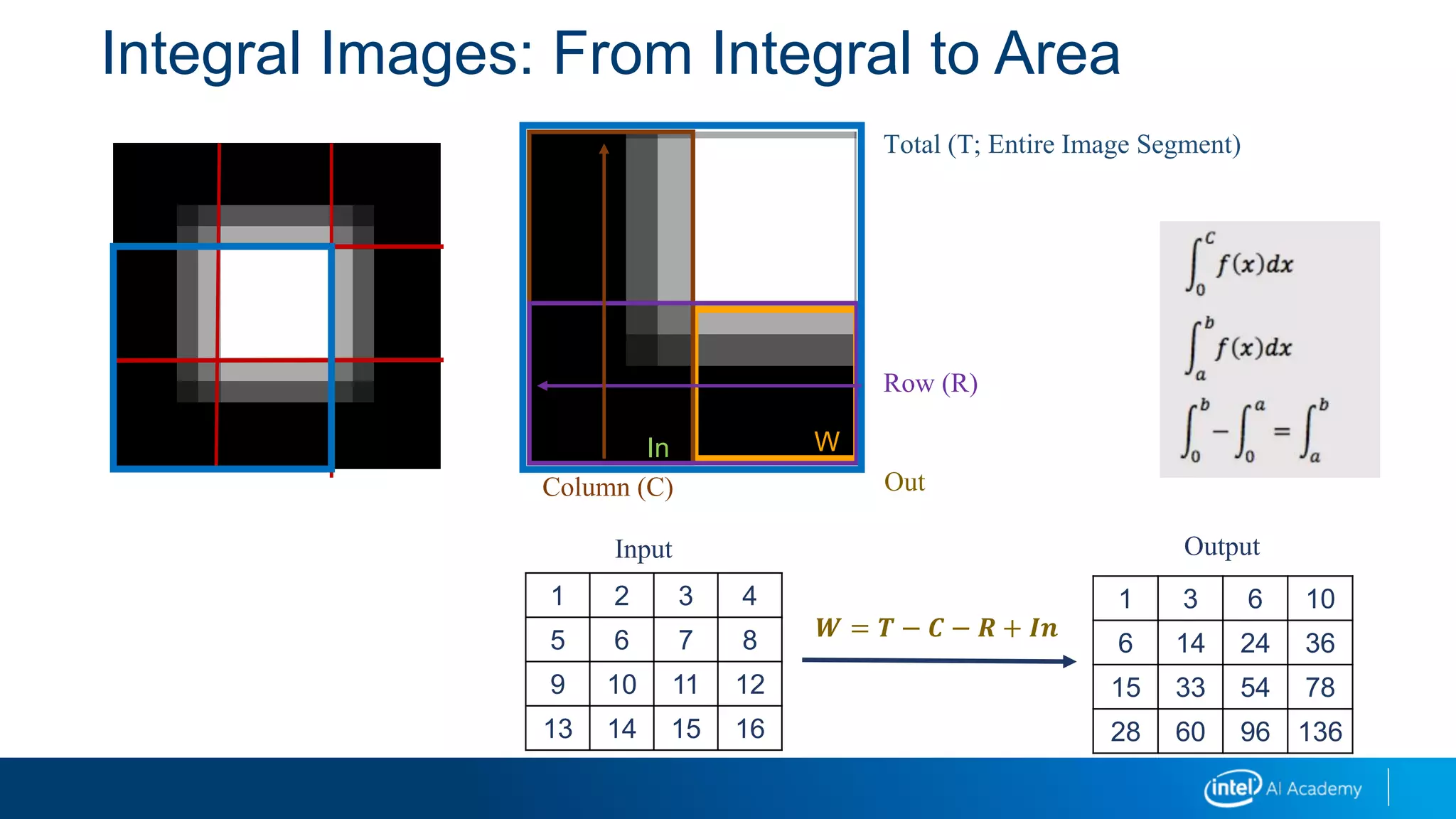

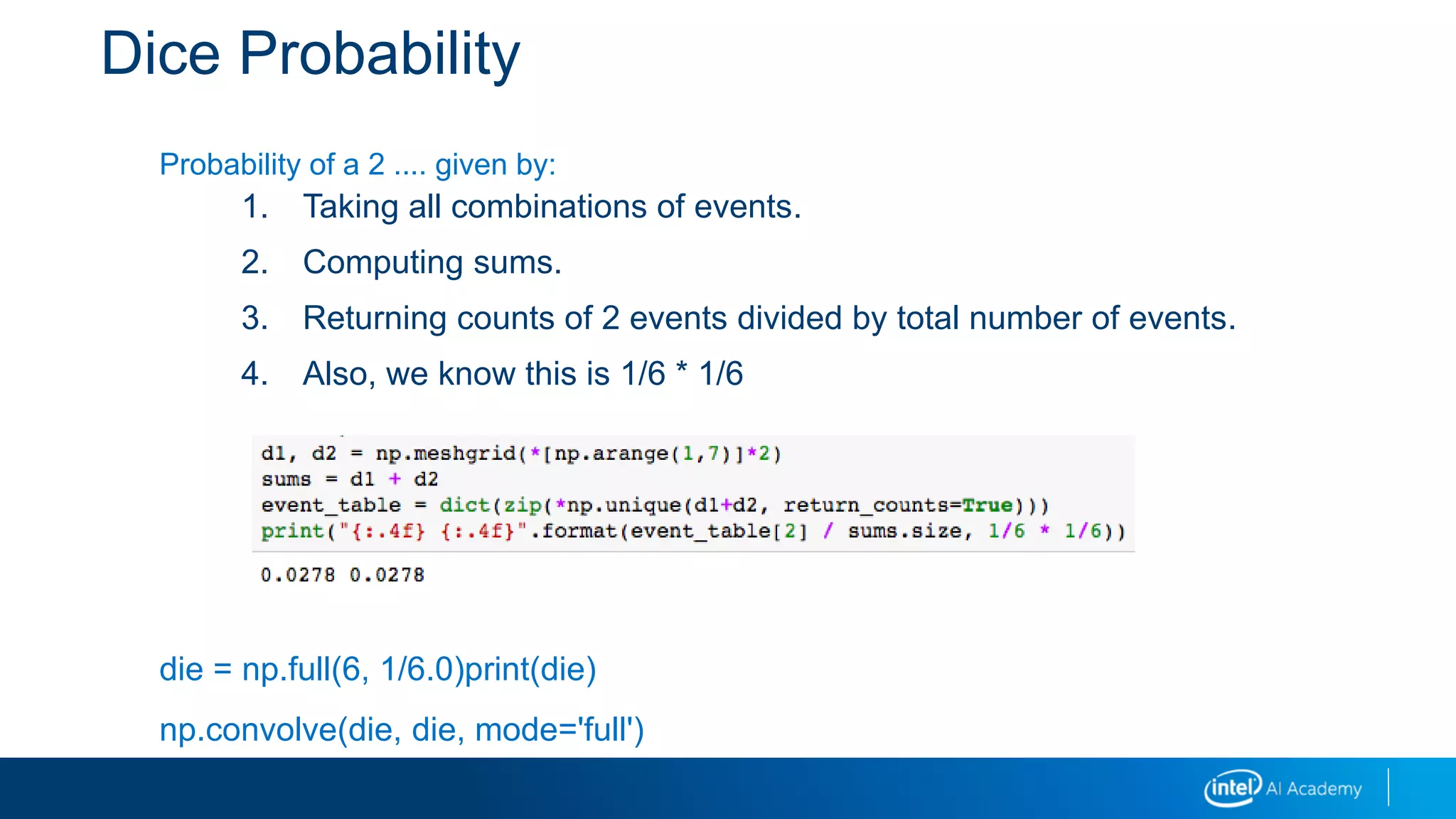

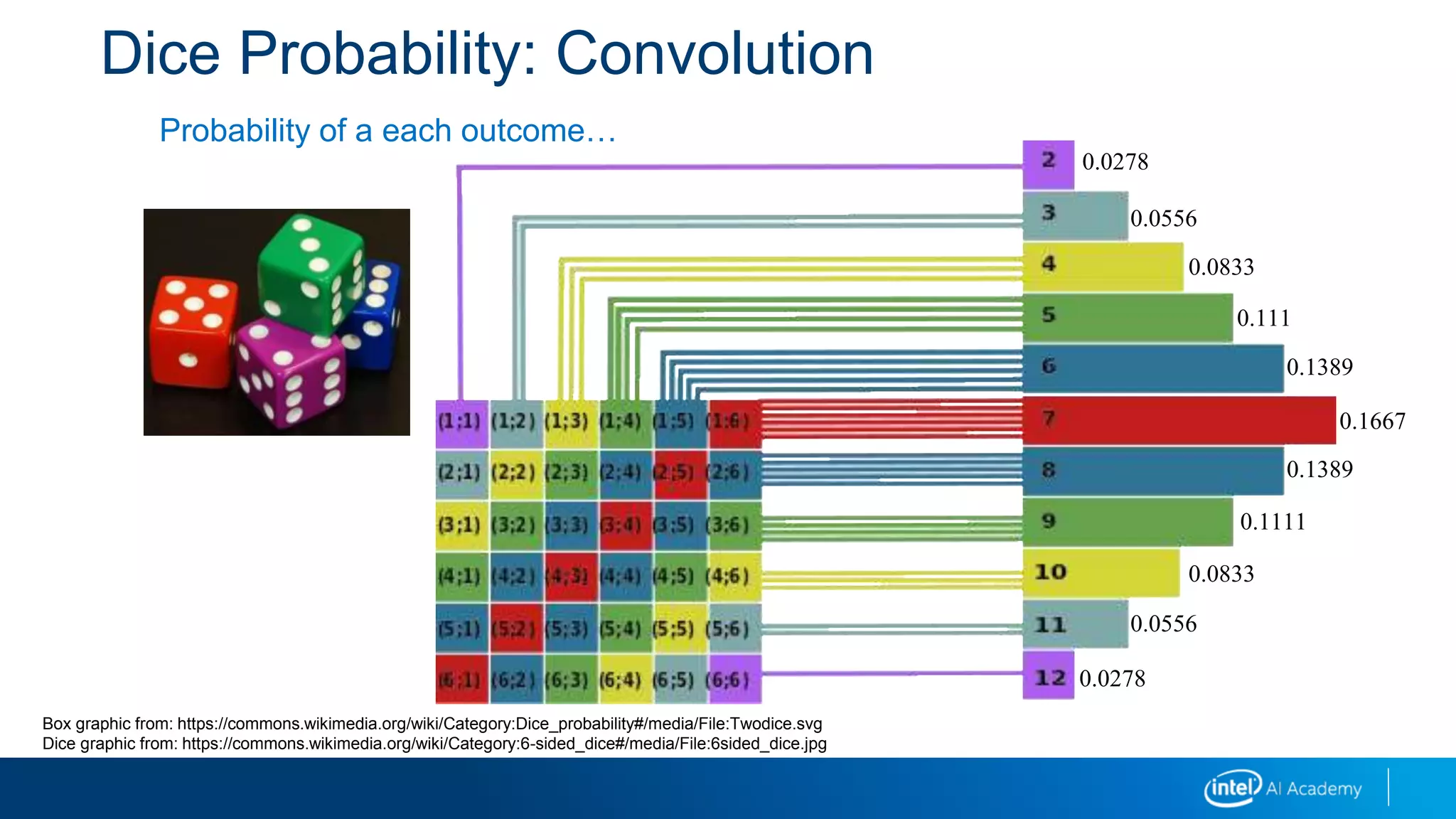

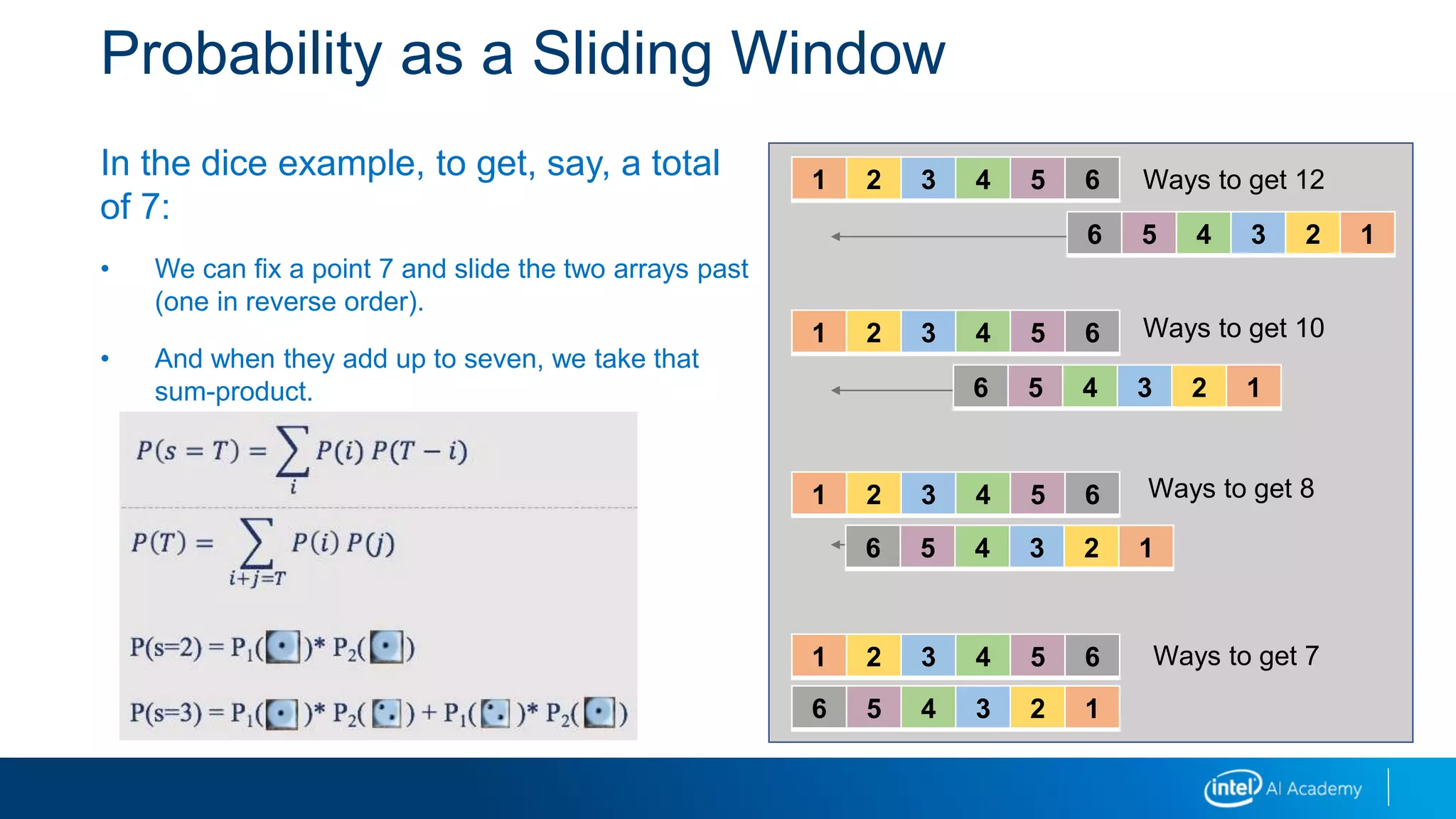

The document discusses image processing techniques including image derivatives, integral images, convolution, morphology operations, and image pyramids.

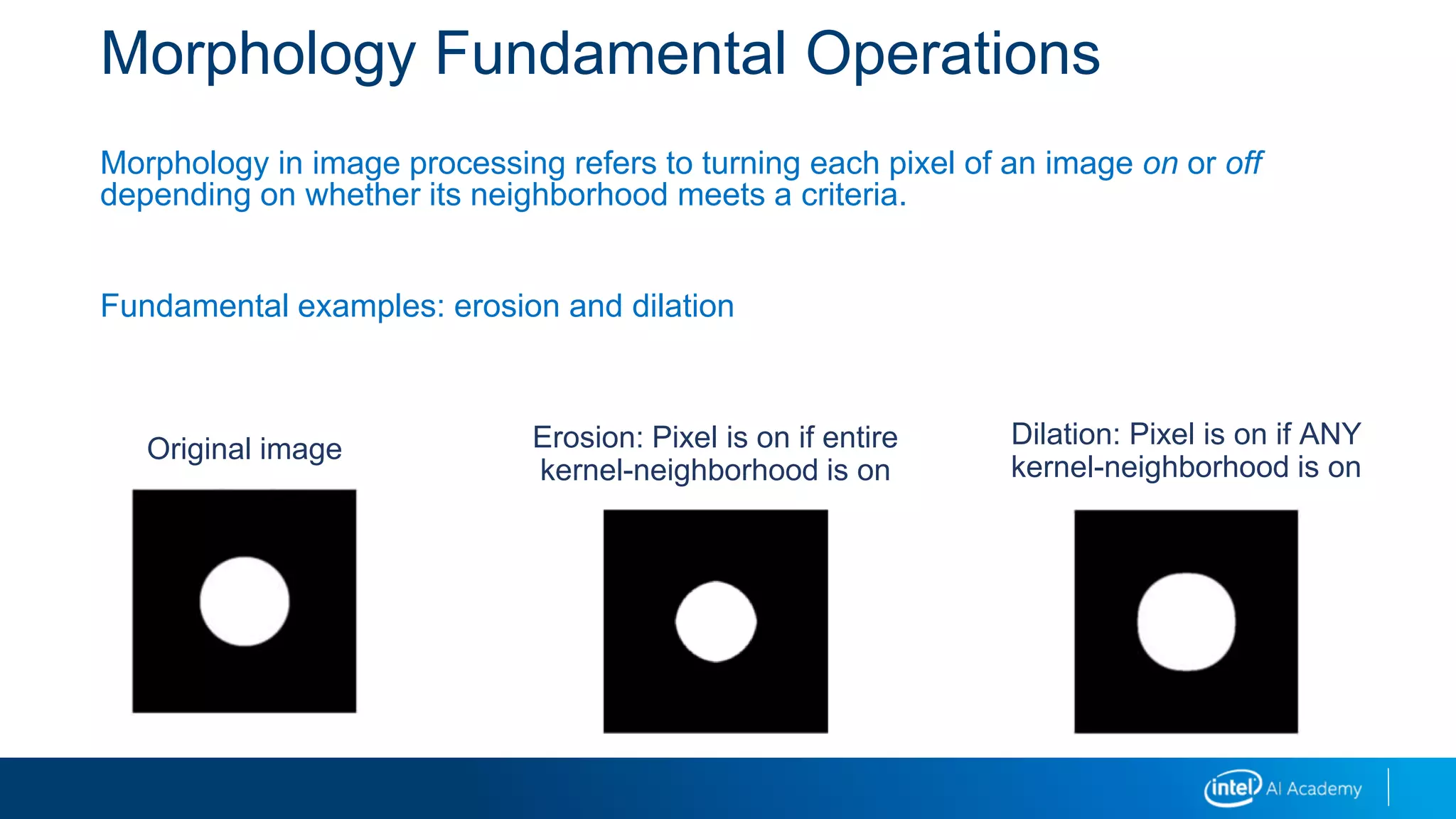

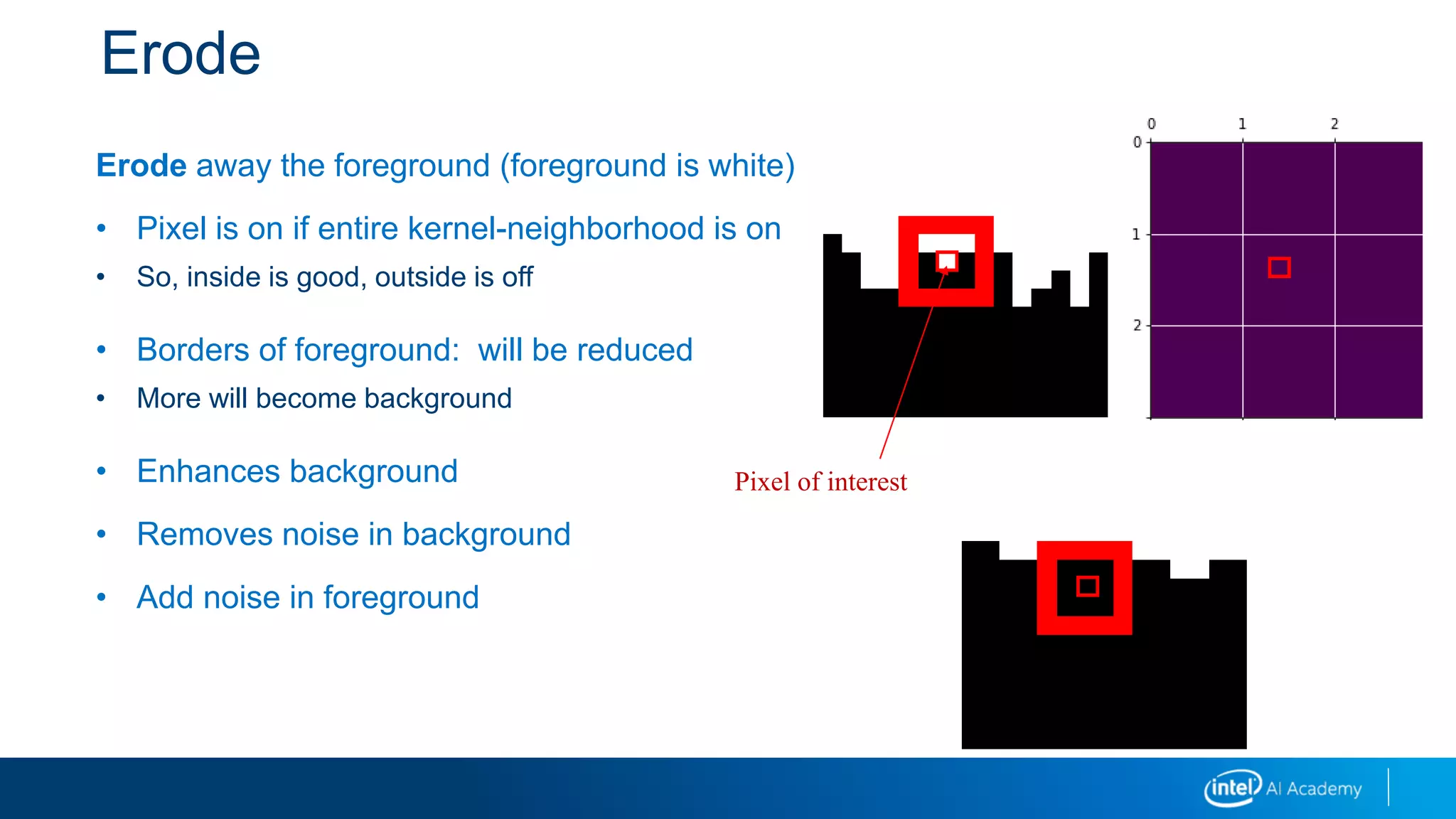

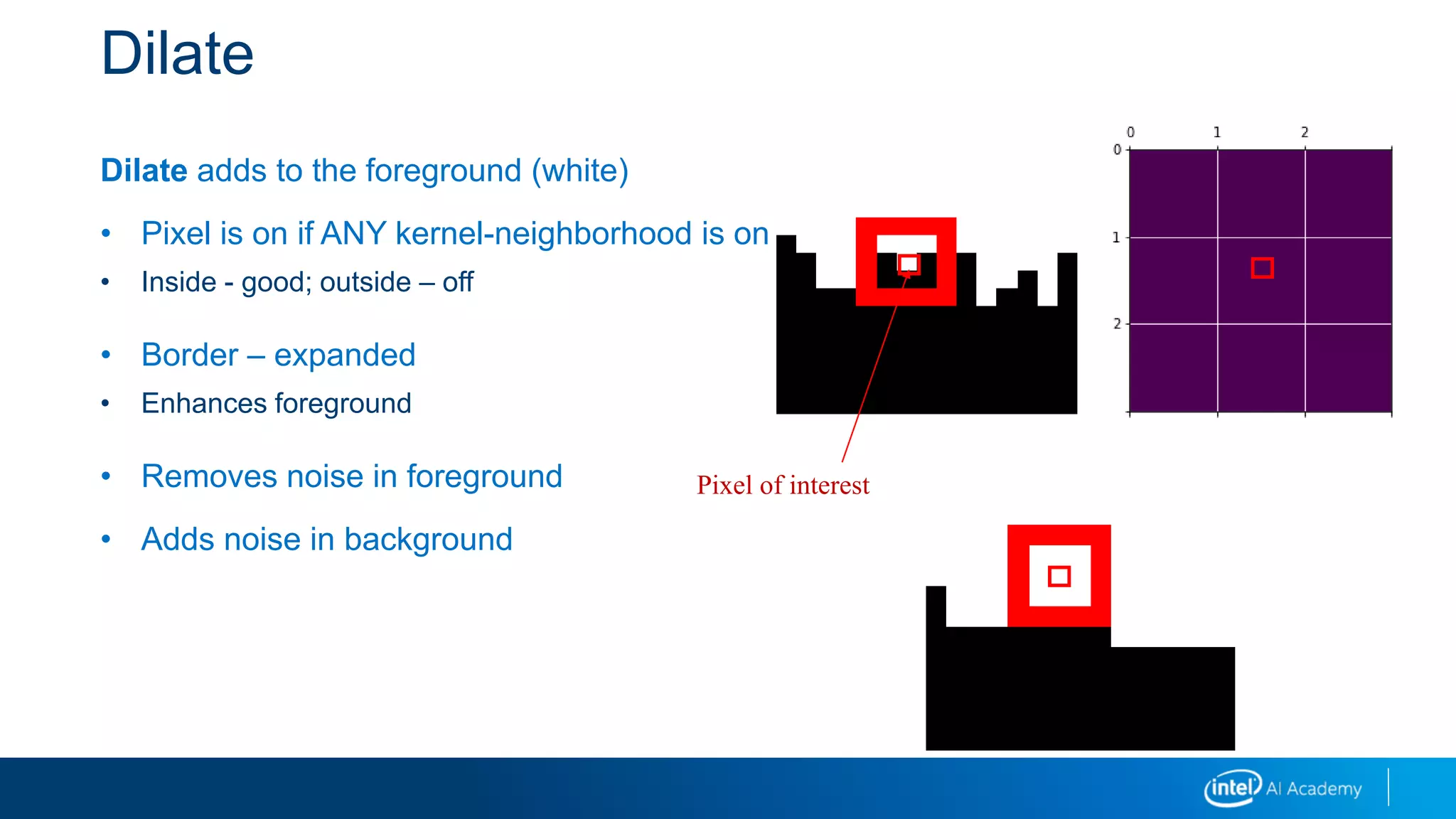

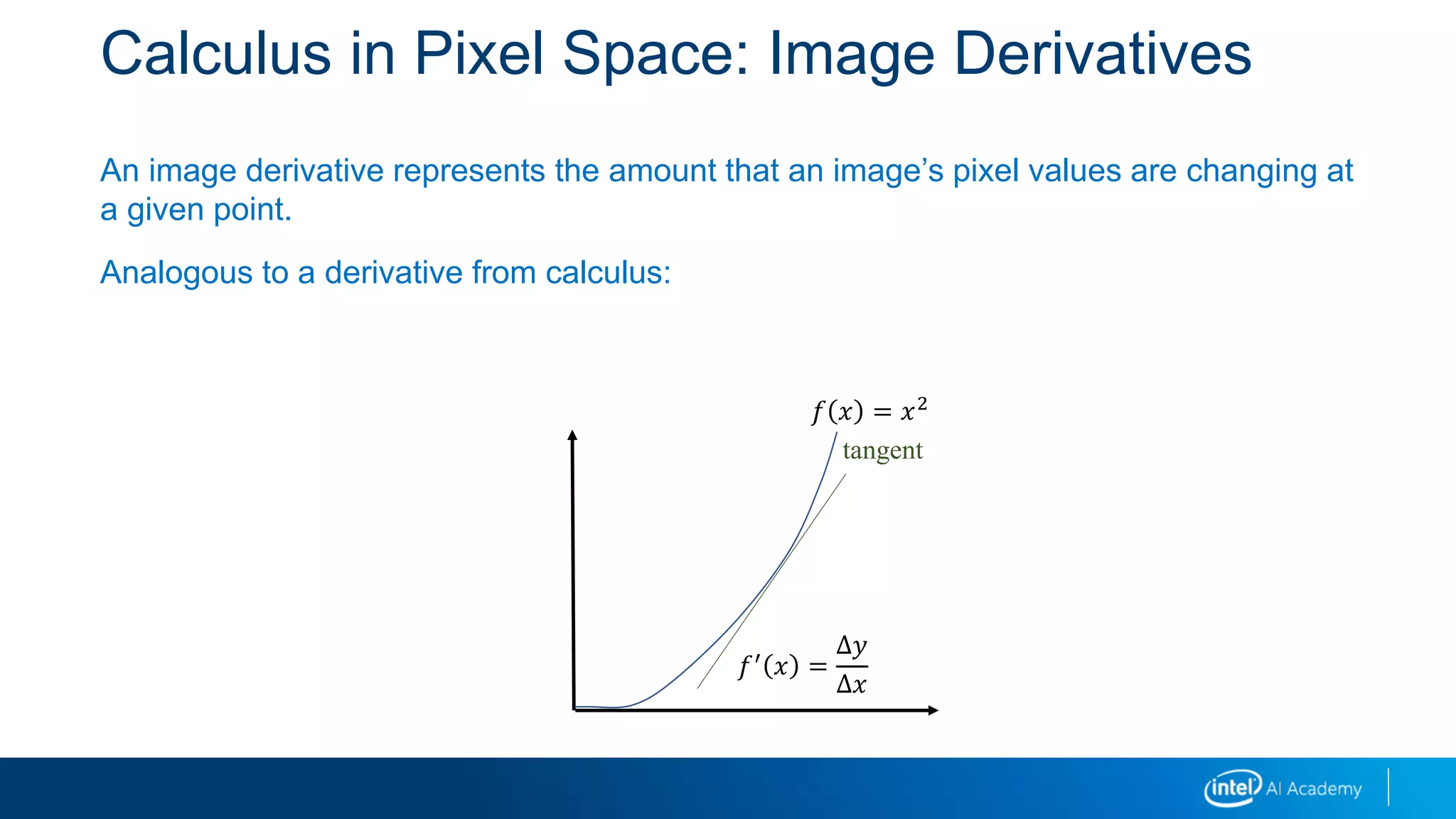

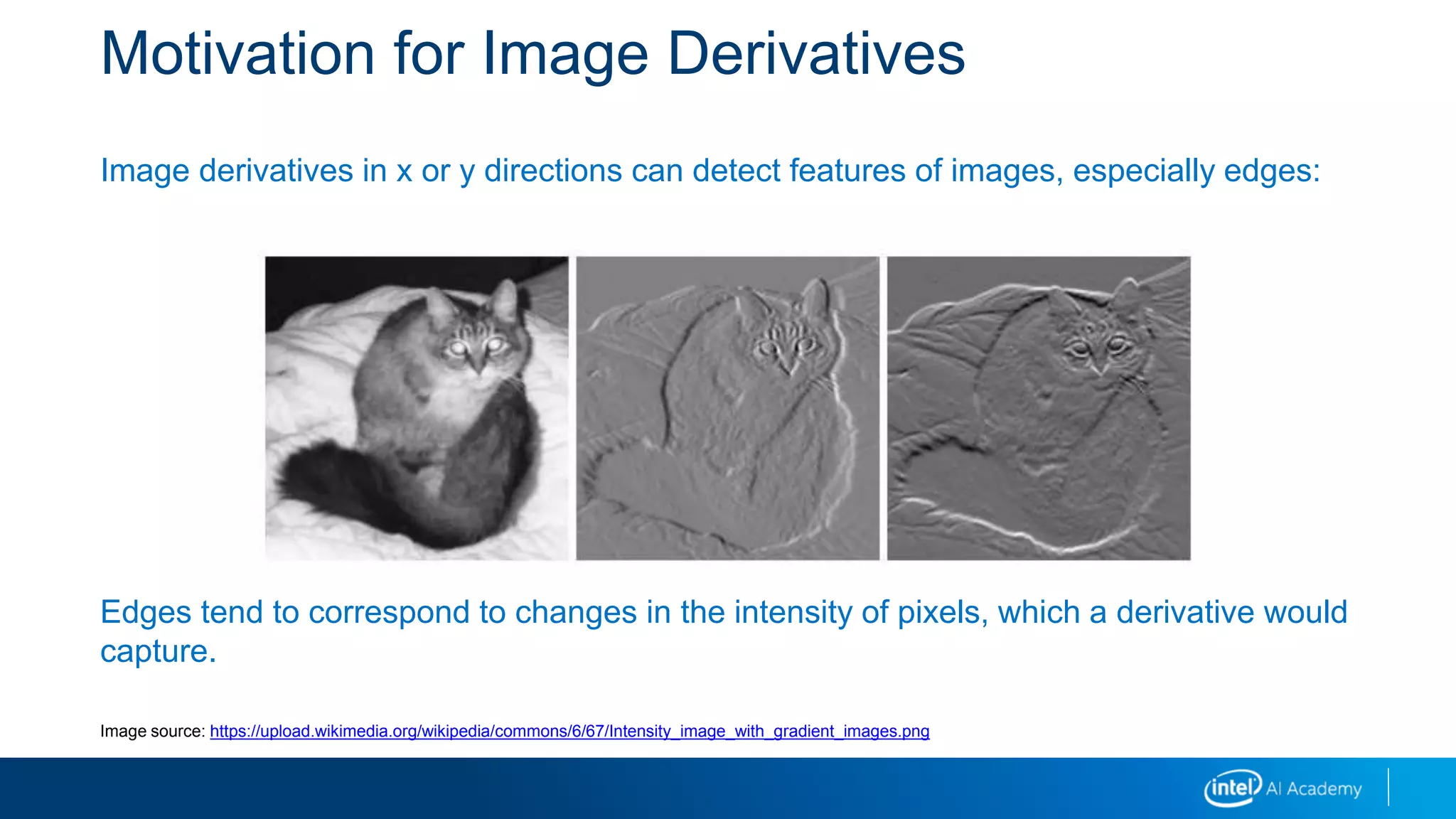

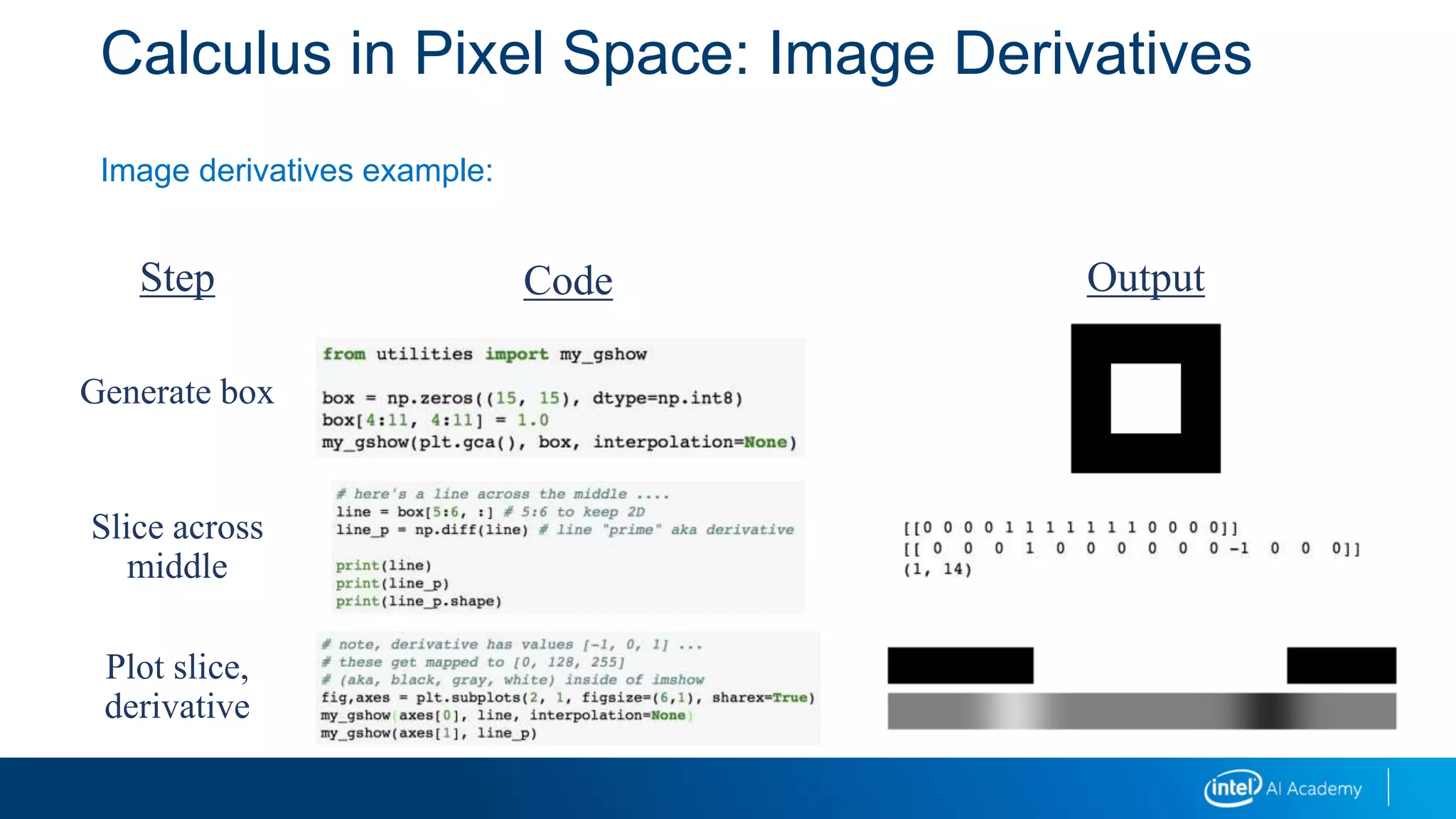

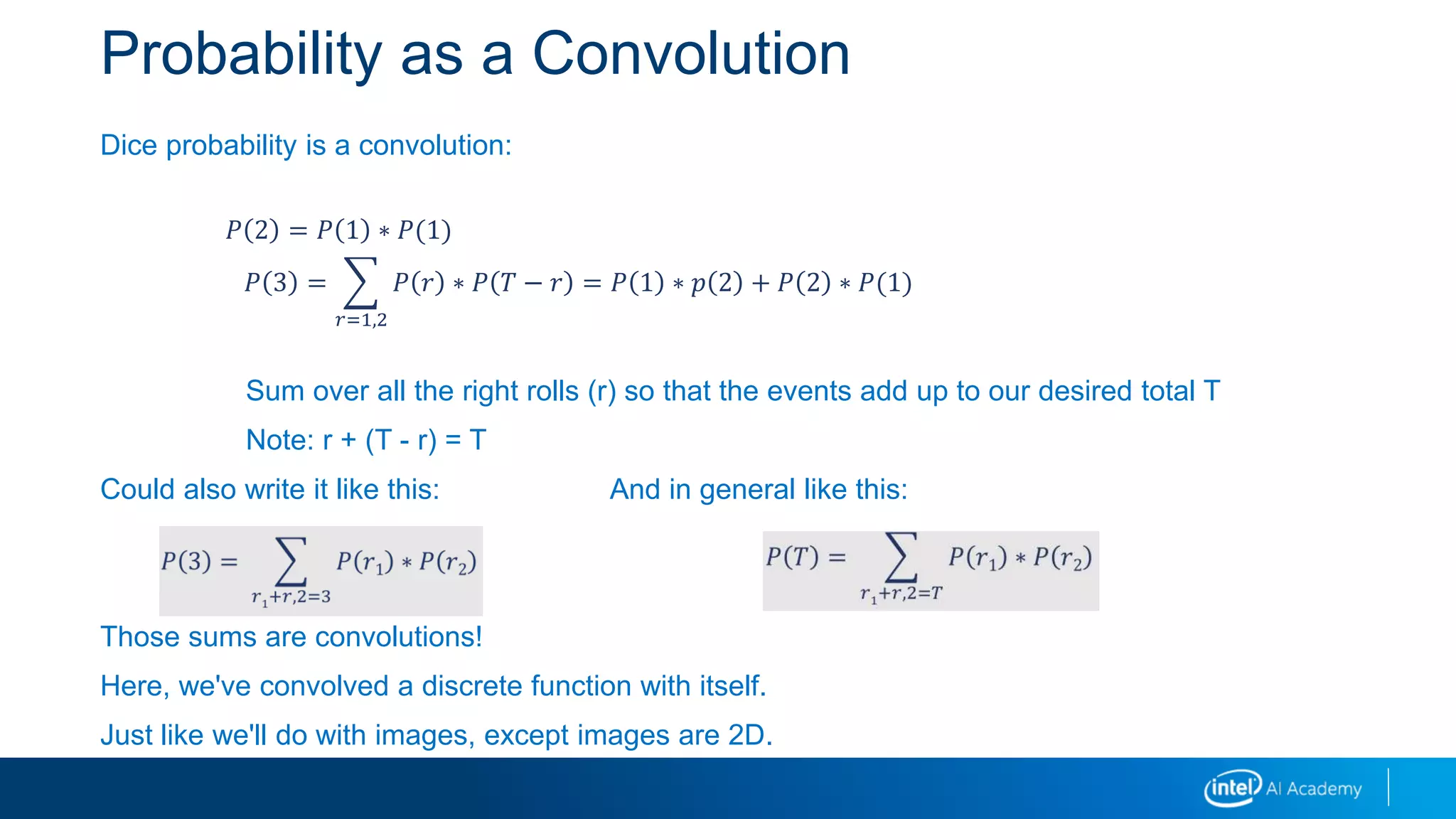

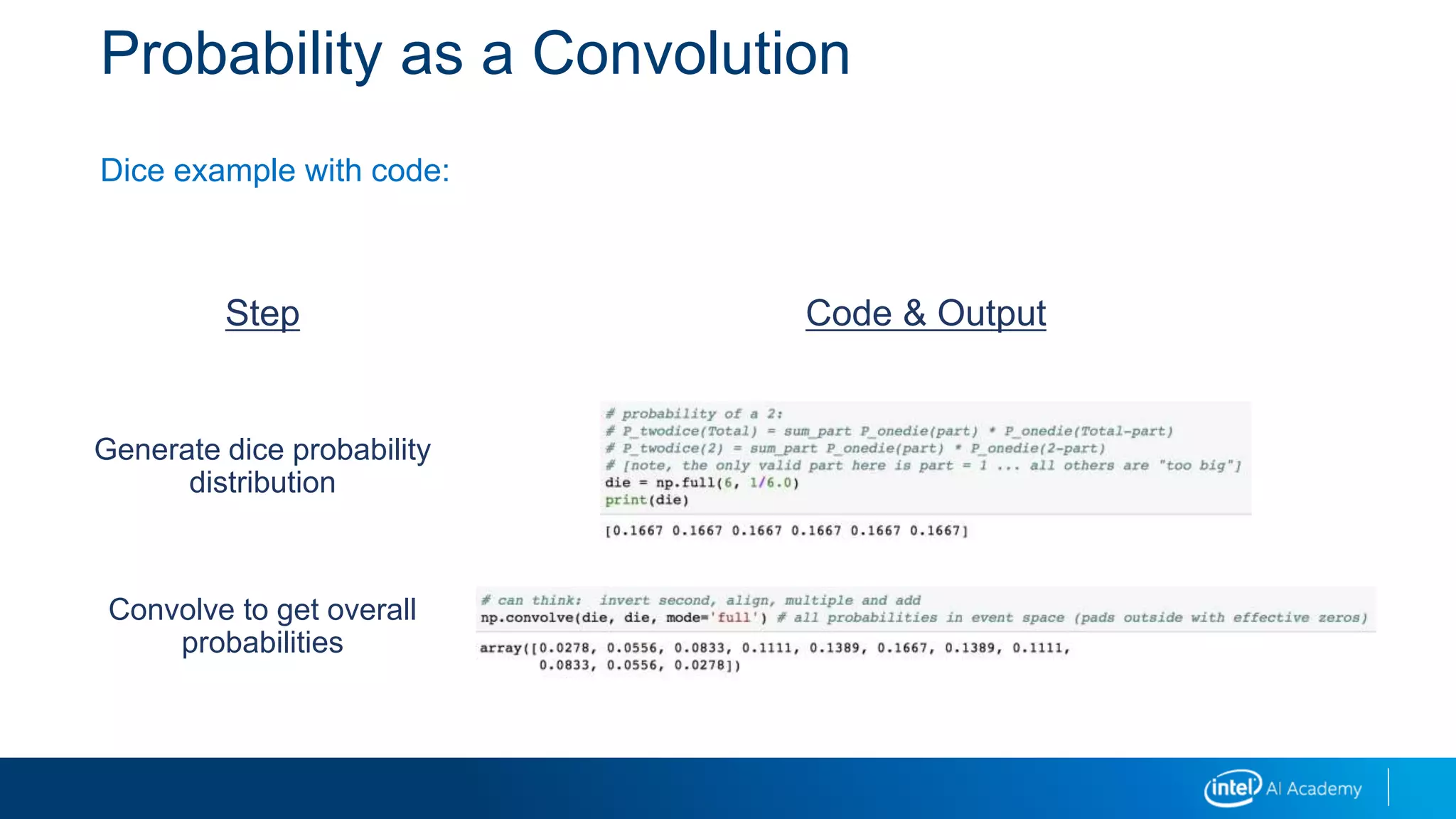

It explains that image derivatives detect edges by capturing changes in pixel intensity, and provides an example calculation. Integral images allow fast computation of box filters by precomputing pixel sums. Convolution is used to calculate probabilities as the sliding overlap of distributions. Morphology operations like erosion and dilation modify images based on pixel neighborhoods. Image pyramids create multiple resolution layers that aid in object detection across scales.

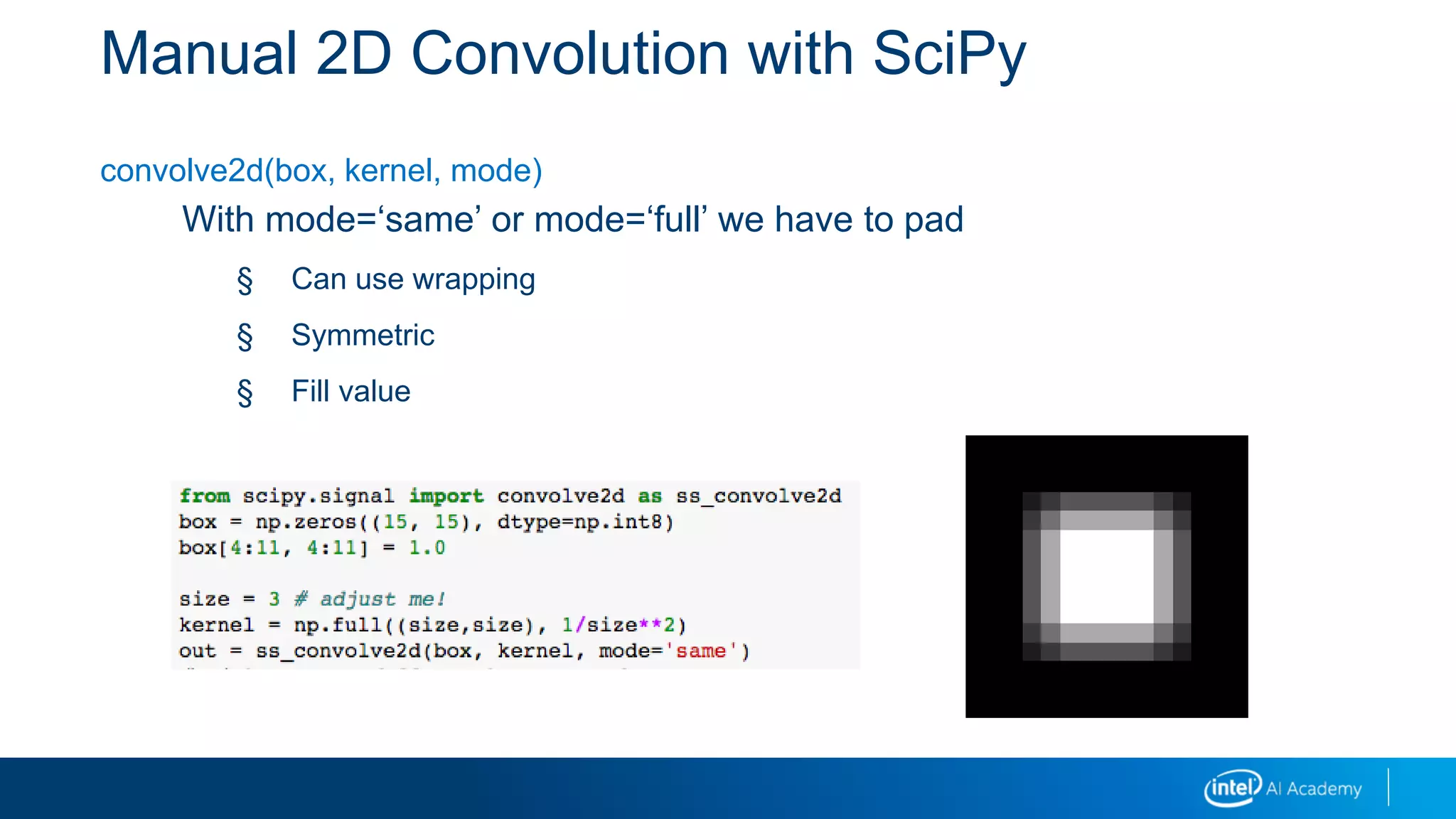

![A General 2D Convolution

The usual formula looks like this:

But, as an idea, that is less than clear!

𝑓 ⊗ 𝑔 𝑁, 𝑀 =

”𝑟𝑖𝑔ℎ𝑡”𝑖𝑗,𝑘𝑙

𝑓 𝑖, 𝑘 𝑔[𝑗, 𝑙]𝑓 ⊗ 𝑔 𝑁 =

𝑜𝑓𝑓𝑠𝑒𝑡𝑠

𝑓 𝑜𝑓𝑓𝑠𝑒𝑡 𝑔[𝑁 − 𝑜𝑓𝑓𝑠𝑒𝑡]

𝑖𝑚𝑔 ⊗ 𝑘𝑒𝑟𝑛 𝑅, 𝐶 =

𝑝𝑖𝑥𝑒𝑙_𝑝𝑎𝑖𝑟

𝑖𝑛𝑎𝑙𝑖𝑔𝑛𝑒𝑑

𝑛𝑒𝑖𝑔ℎ𝑏𝑜𝑟ℎ𝑜𝑜𝑑𝑠

(𝑘𝑒𝑟𝑛,𝑅,𝐶)

𝑖𝑚𝑔 𝑖𝑚𝑔𝑟, 𝑖𝑚𝑔𝑐 𝑘𝑒𝑟𝑛[𝑘𝑒𝑟𝑛𝑟, 𝑘𝑒𝑟𝑛𝑐]

result

imgr, imgc

kernr, kernc

given a kernel, and R, C…

this tells us the corresponding

pixels in img and elements in

kernel

a pixel](https://image.slidesharecdn.com/03imagetransformationsi-190218095658/75/03-image-transformations_i-15-2048.jpg)