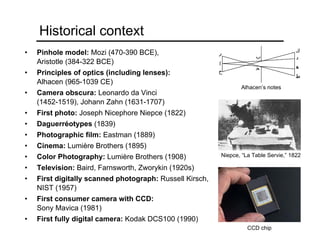

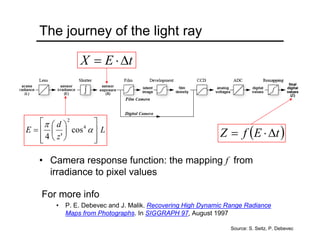

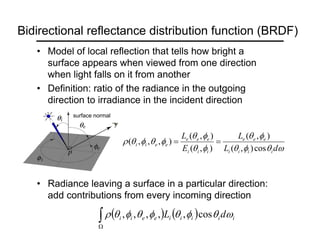

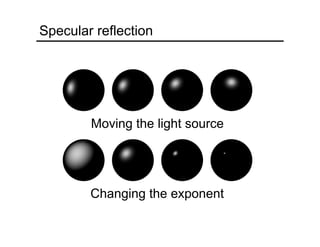

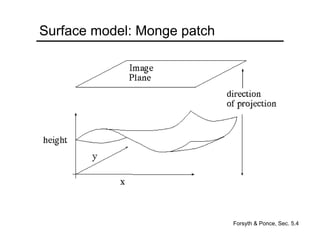

This document gives an overview of light, cameras, and image formation. It covers pinhole projection, lenses, depth of field, field of view, and lens aberrations, then turns to the two major types of digital camera sensors (CCD and CMOS), color capture, and demosaicing. A review of the history of photography and its important early innovations follows. The document concludes with radiometry, the camera response function, bidirectional reflectance distribution function (BRDF) models, and photometric stereo, which reconstructs shape from images taken under varying lighting.
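
As a rough illustration of two of the geometric ideas listed above, pinhole projection and field of view, here is a minimal Python sketch. It is not taken from the document itself: it assumes an ideal pinhole camera with the optical axis along +Z, the standard relations x = fX/Z and FOV = 2·atan(w / 2f), and the function names `project_pinhole` and `field_of_view_deg` are hypothetical, chosen only for this example.

```python
import math


def project_pinhole(point, focal_length):
    """Project a 3D point (X, Y, Z), given in camera coordinates,
    onto the image plane of an ideal pinhole camera.

    Assumption: the camera looks along +Z and the image plane sits at
    distance `focal_length` from the pinhole (un-inverted virtual image).
    """
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    # Similar triangles: image coordinates scale with f / Z.
    return (focal_length * X / Z, focal_length * Y / Z)


def field_of_view_deg(sensor_width, focal_length):
    """Horizontal field of view in degrees for a sensor of the given width.

    `sensor_width` and `focal_length` must use the same unit (e.g. mm).
    """
    return math.degrees(2 * math.atan(sensor_width / (2 * focal_length)))


if __name__ == "__main__":
    # A point 4 units in front of the camera, with focal length 2.
    print(project_pinhole((1.0, 2.0, 4.0), 2.0))
    # A 36 mm wide sensor behind a 50 mm lens.
    print(field_of_view_deg(36.0, 50.0))
```

Doubling the depth Z halves the projected coordinates, which is the perspective foreshortening that pinhole projection describes. Shortening the focal length for a fixed sensor width widens the field of view.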