
![Big–THETA ө (Same order)

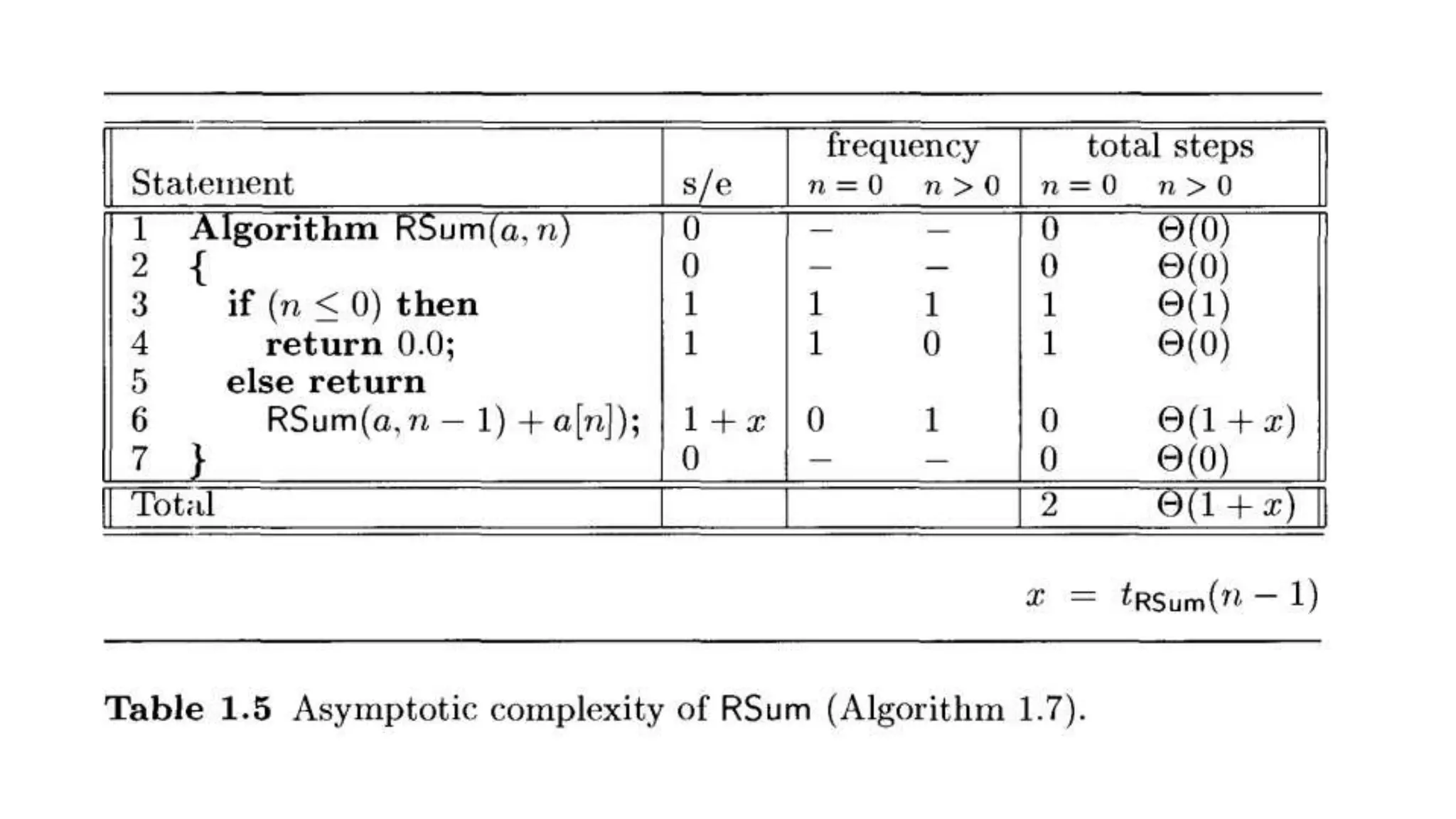

f(n) = ө (g(n)) (pronounced theta), says that the growth rate of f(n)

equals (=) the growth rate of g(n) [if f(n) = O(g(n)) and T(n) = ө

(g(n)].

Theta: the function f(n)=ө(g(n)) iff there exist positive constants

c1,c2 and no such that c1 g(n) ≤ f(n) ≤ c2 g(n) for all n, n ≥ no.](https://image.slidesharecdn.com/asyptoticnotations-210402151548/75/Asymptotic-notations-Big-O-Omega-Theta-6-2048.jpg)
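The two inequalities in the Theta definition can be checked numerically. The Python sketch below uses hypothetical helper names (`upper_bound_holds`, `lower_bound_holds`, `theta_witness_holds`) to test a proposed witness $(c_1, c_2, n_0)$ on a finite range of $n$: the upper inequality is the Big-O half and the lower one is the Big-Omega half. The functions $f(n) = 3n^2 + 2n$ and $g(n) = n^2$ are illustrative assumptions. A finite check can refute a witness but cannot prove the bound for all $n$, so treat it as a sanity check, not a proof.

```python
from typing import Callable

IntFn = Callable[[int], int]


def upper_bound_holds(f: IntFn, g: IntFn, c2: float, n0: int, n_max: int) -> bool:
    """Big-O half: f(n) <= c2 * g(n) for every integer n in [n0, n_max]."""
    return all(f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


def lower_bound_holds(f: IntFn, g: IntFn, c1: float, n0: int, n_max: int) -> bool:
    """Big-Omega half: c1 * g(n) <= f(n) for every integer n in [n0, n_max]."""
    return all(c1 * g(n) <= f(n) for n in range(n0, n_max + 1))


def theta_witness_holds(f: IntFn, g: IntFn, c1: float, c2: float,
                        n0: int, n_max: int = 10_000) -> bool:
    """Check c1*g(n) <= f(n) <= c2*g(n) on [n0, n_max] with positive c1, c2.

    Finite check only: False refutes the witness, True is merely evidence.
    """
    if c1 <= 0 or c2 <= 0:
        return False
    return (lower_bound_holds(f, g, c1, n0, n_max)
            and upper_bound_holds(f, g, c2, n0, n_max))


def f(n: int) -> int:
    return 3 * n**2 + 2 * n  # illustrative f(n)


def g(n: int) -> int:
    return n**2  # candidate g(n)


print(theta_witness_holds(f, g, c1=3, c2=5, n0=1))  # True
print(theta_witness_holds(f, g, c1=3, c2=4, n0=1))  # False: f(1) = 5 > 4
print(theta_witness_holds(f, g, c1=3, c2=4, n0=2))  # True
print(theta_witness_holds(f, lambda n: n, c1=1, c2=100, n0=1))  # False
```

With $c_2 = 4$ the witness fails at $n = 1$ but holds from $n_0 = 2$, because $2n \le n^2$ for $n \ge 2$: the constants are not unique, only their existence matters. The last call fails because $3n^2 + 2n > 100n$ once $n \ge 33$, consistent with $3n^2 + 2n$ not being $O(n)$.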

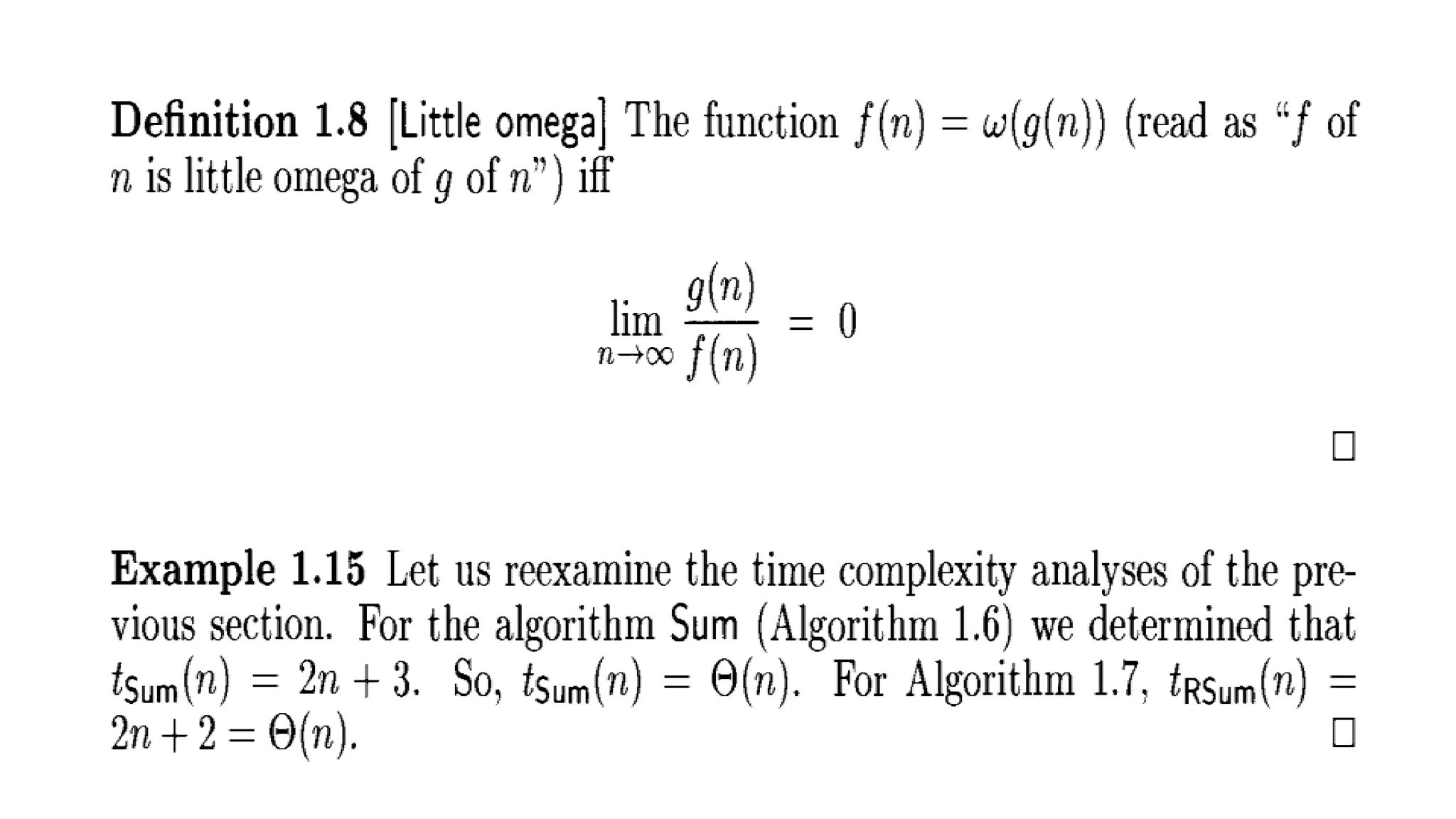

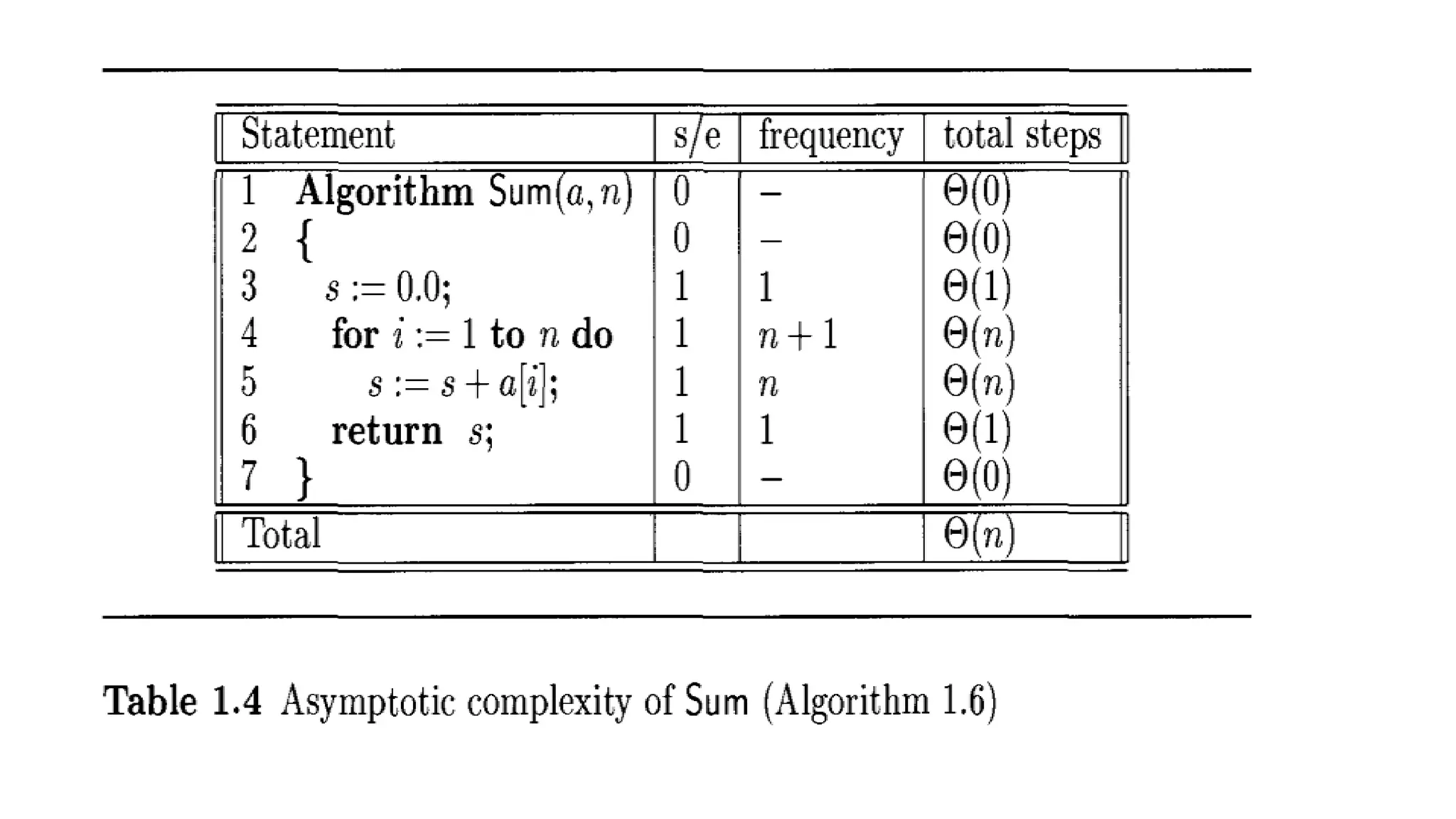

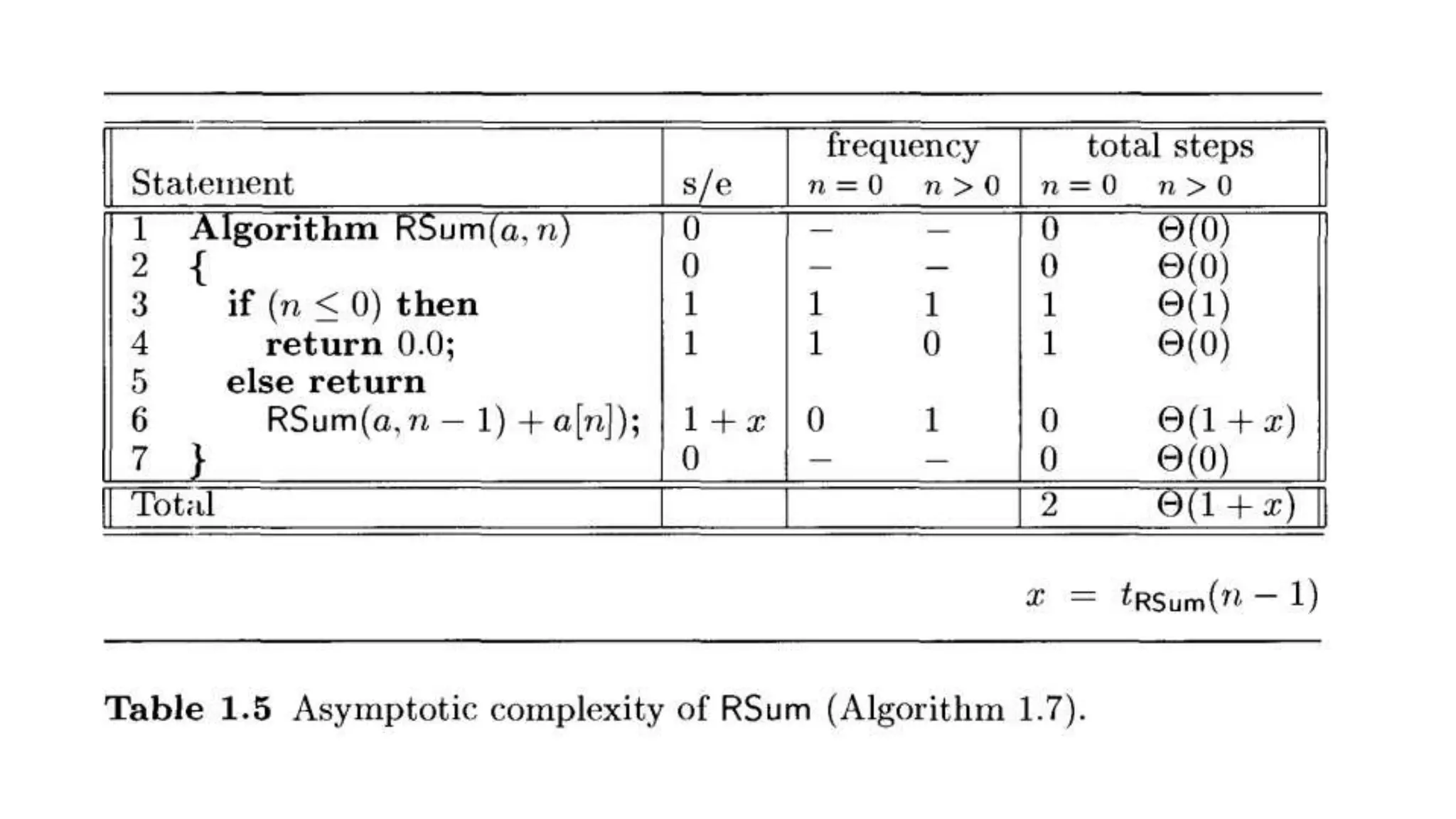

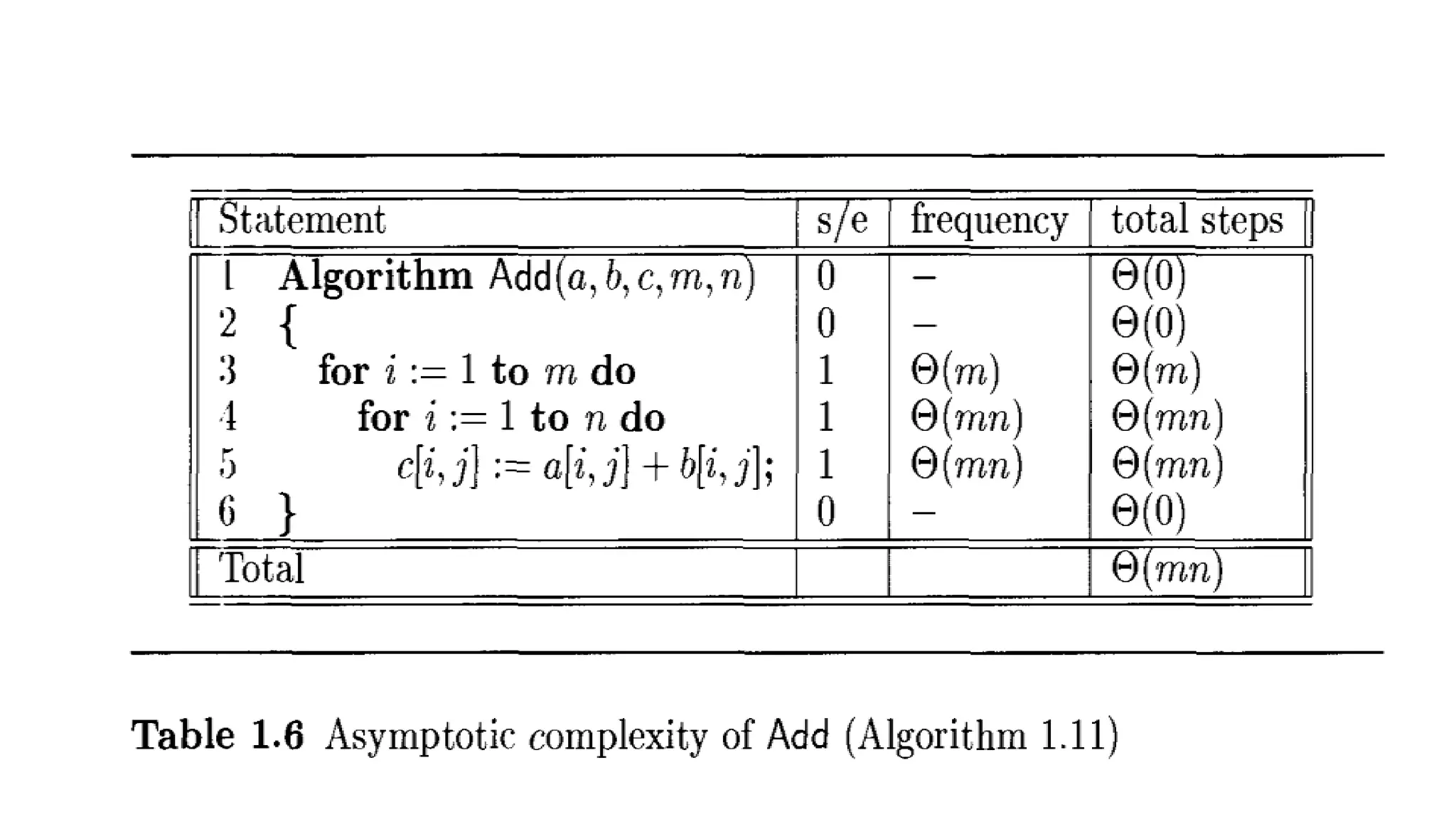

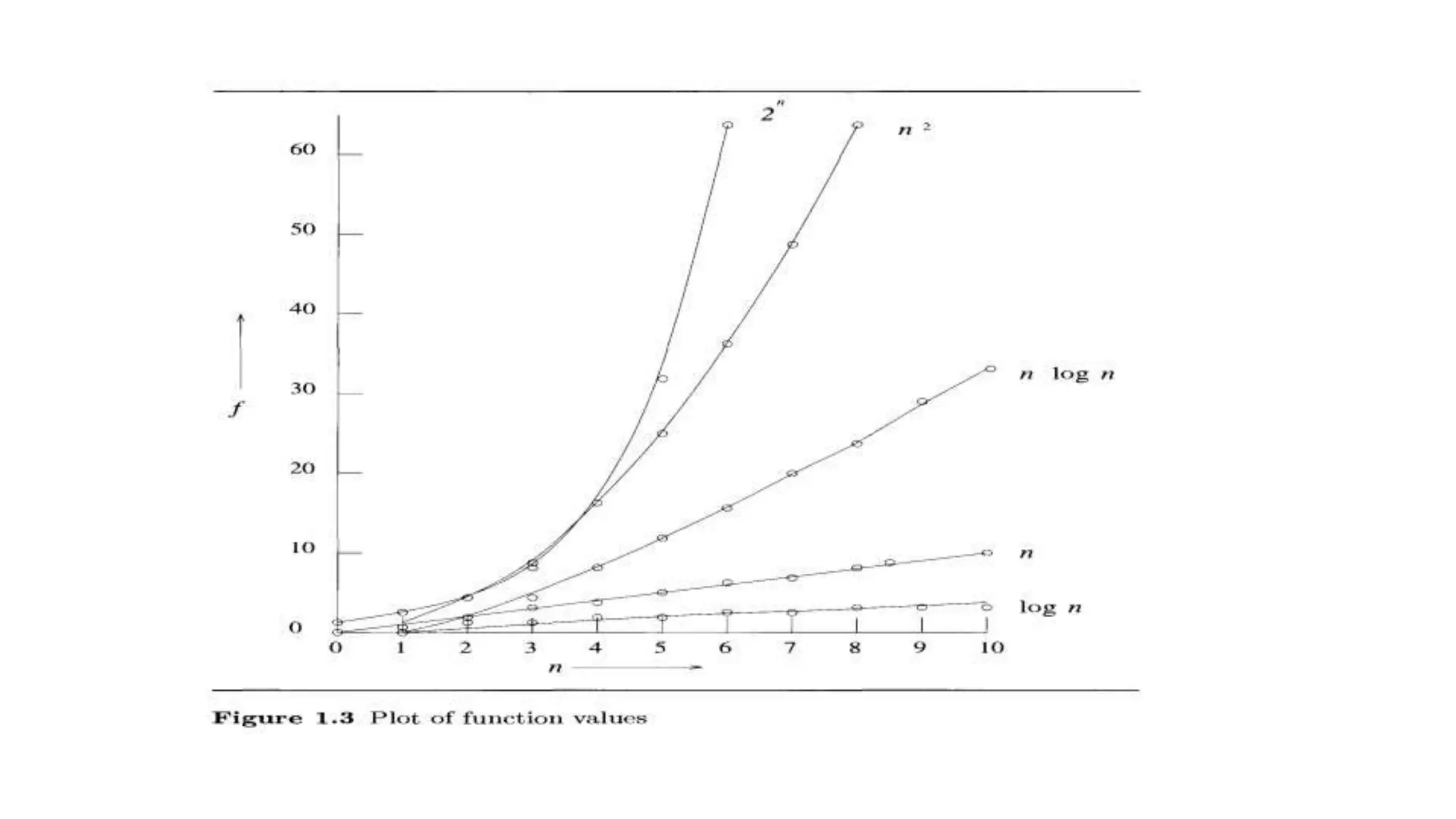

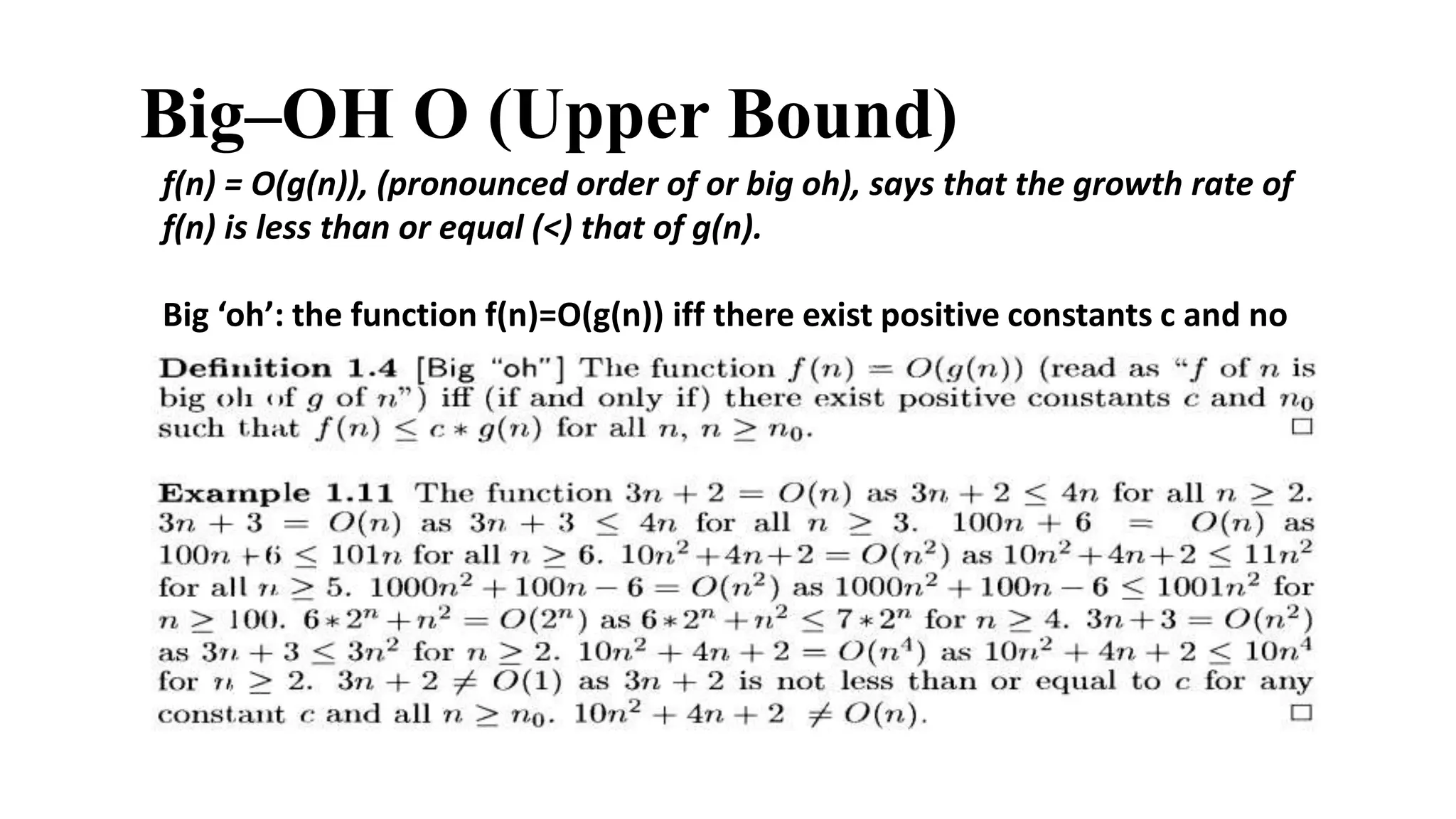

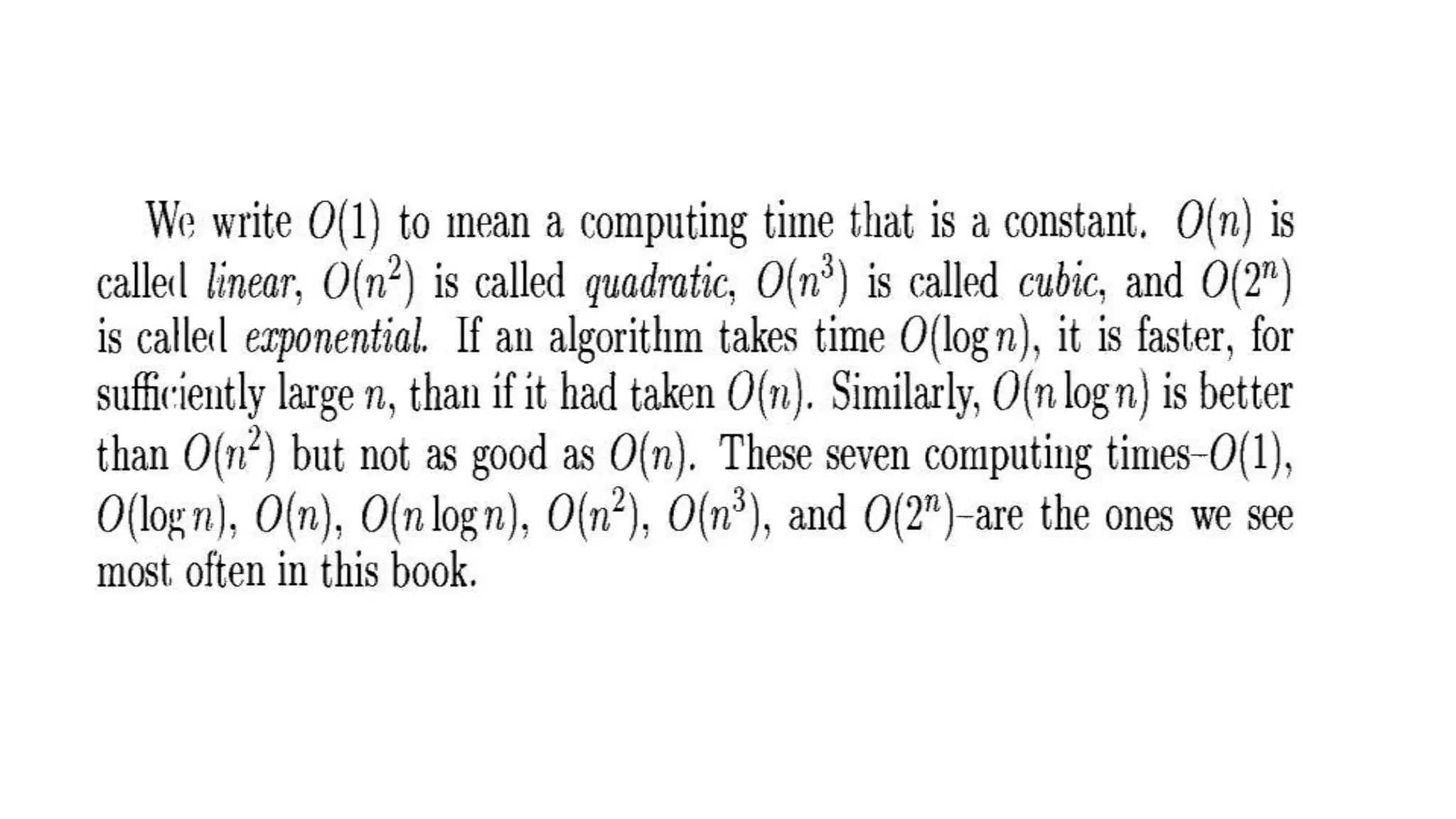

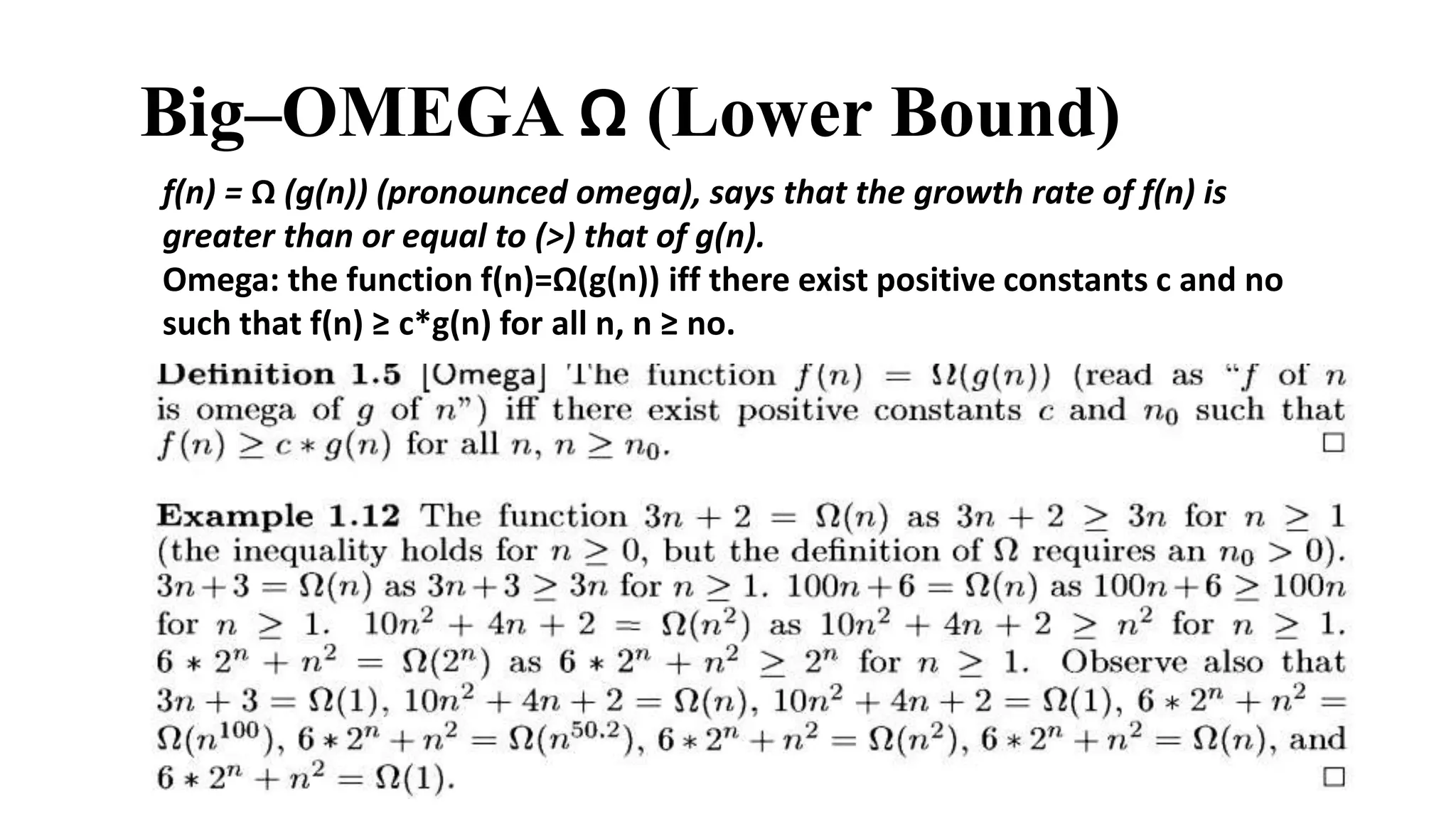

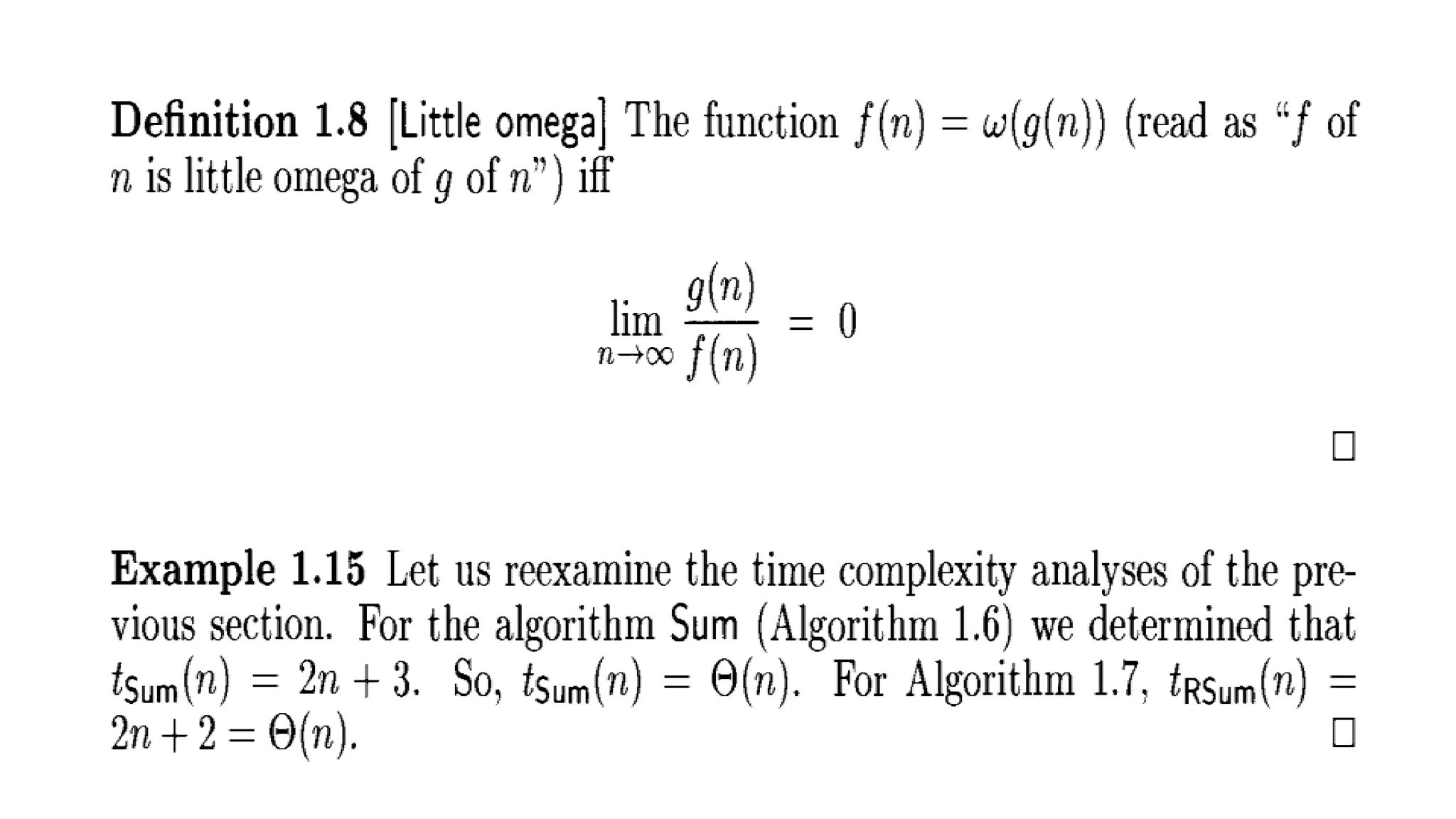

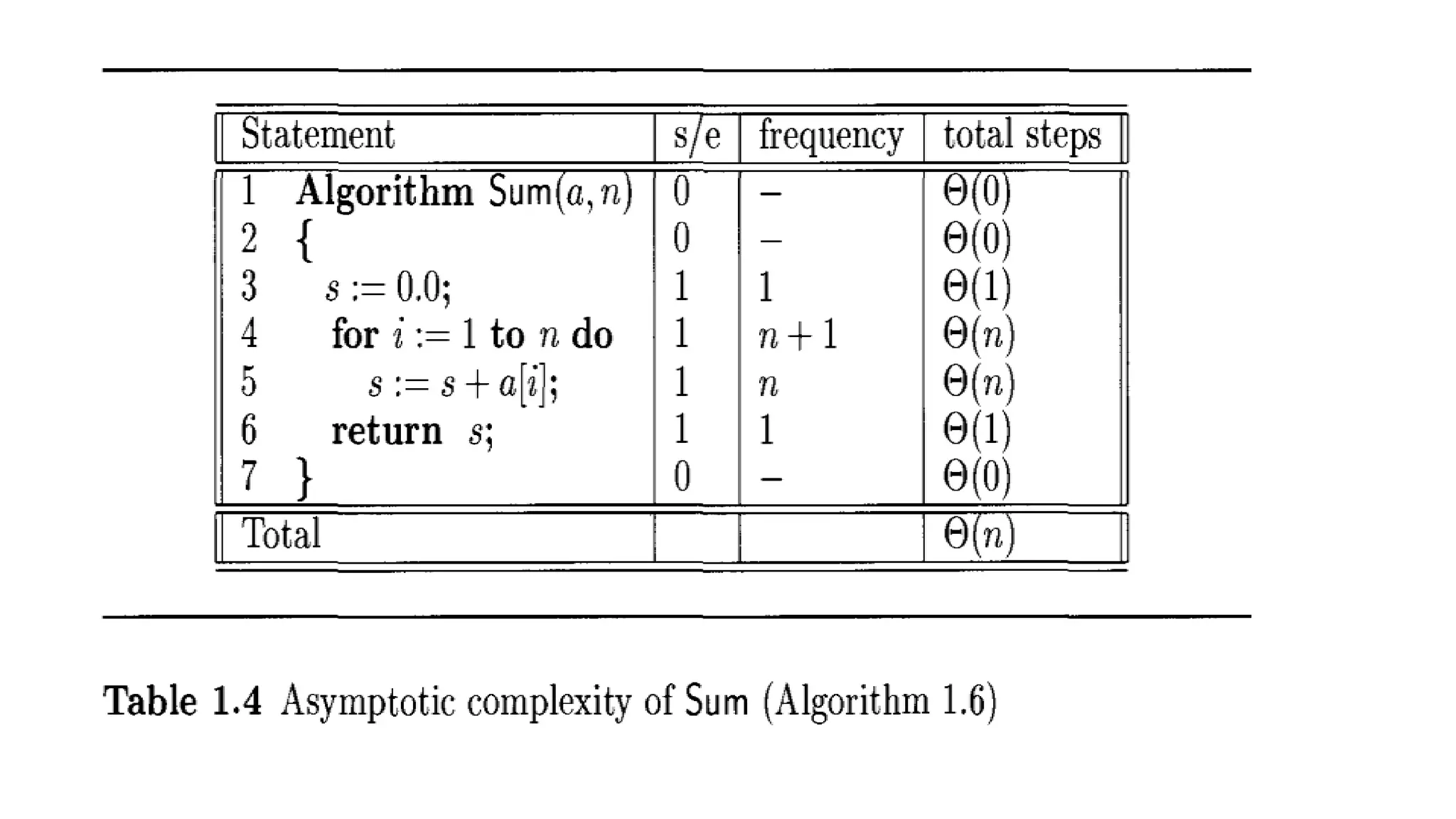

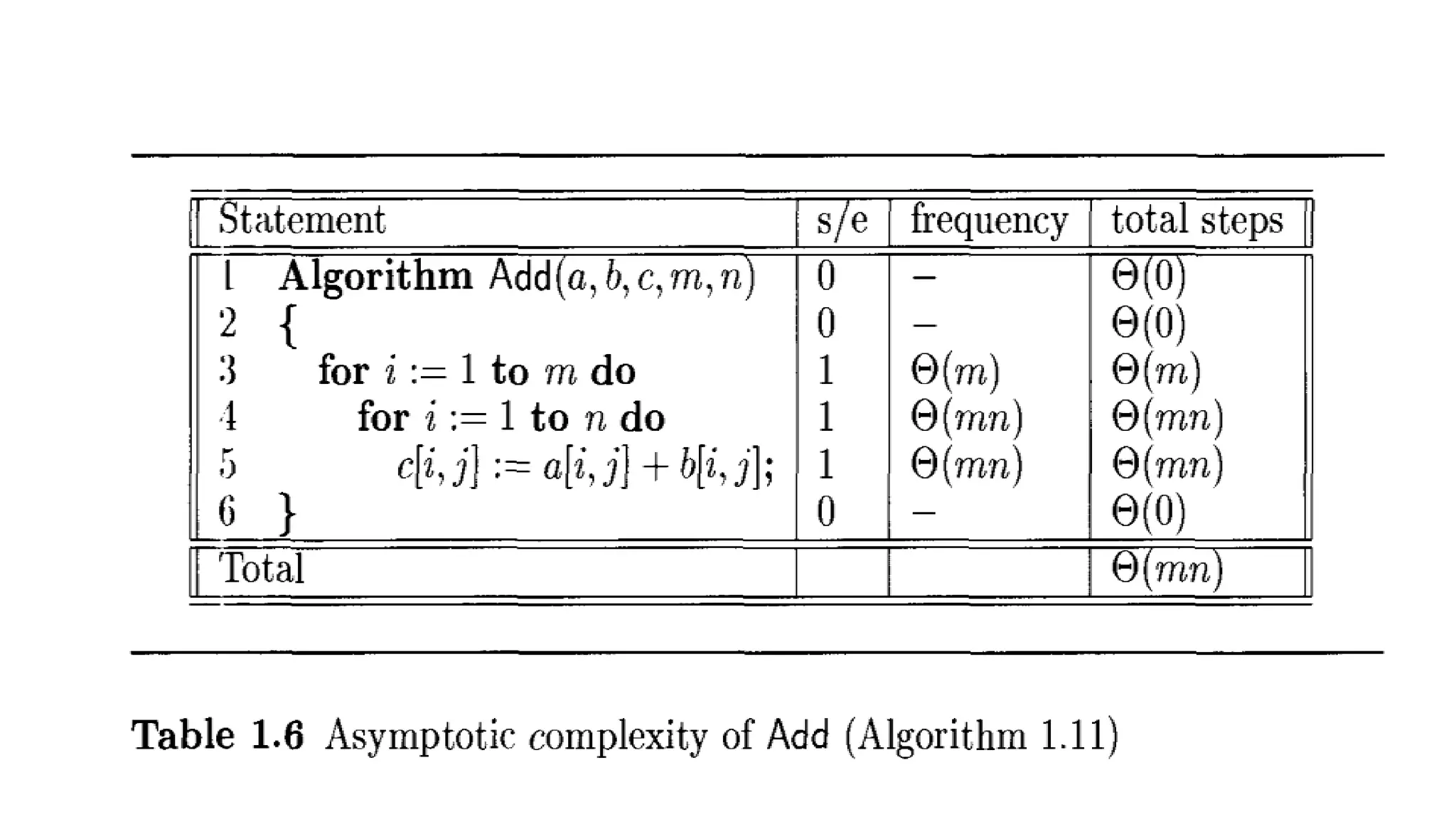

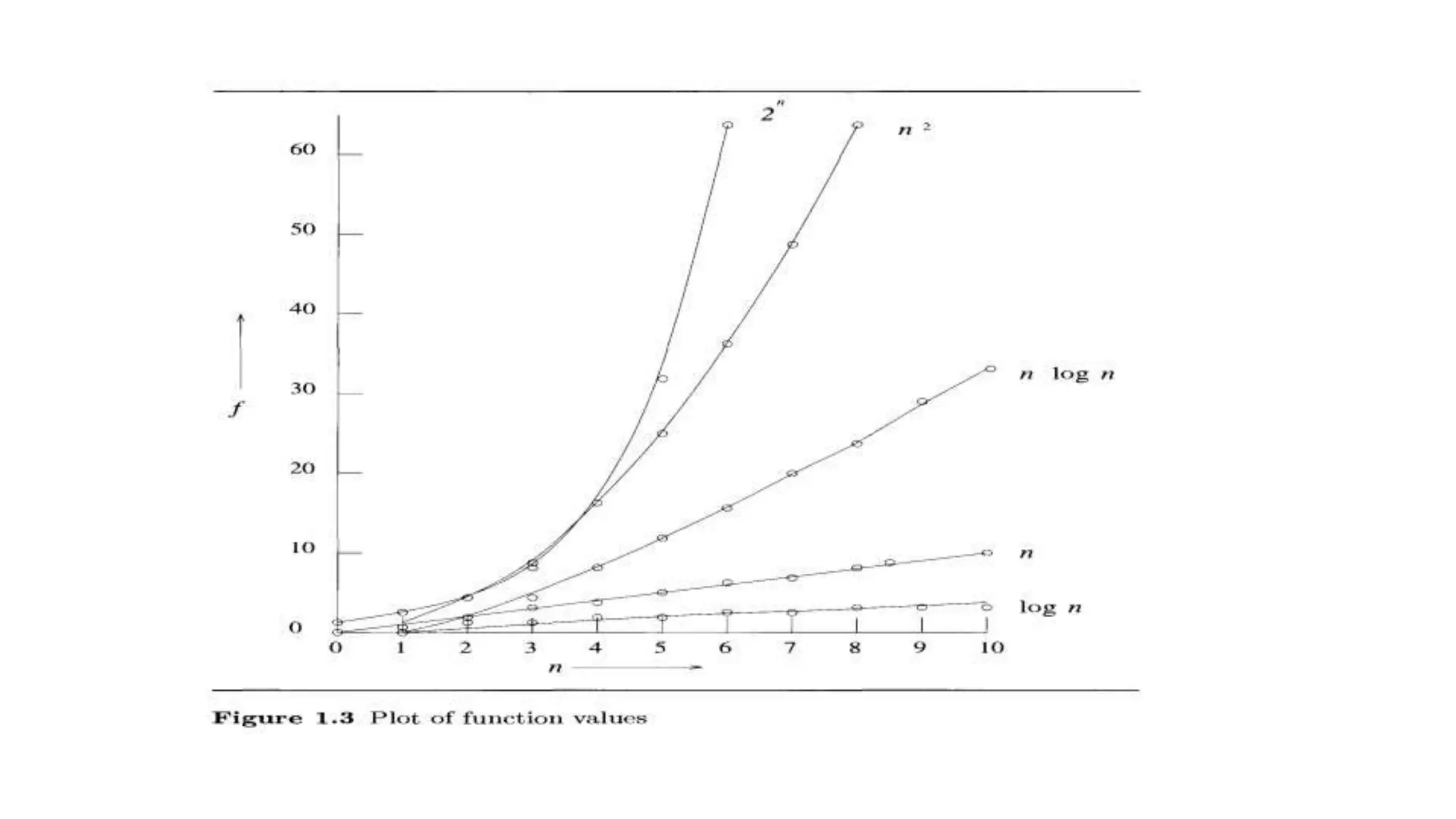

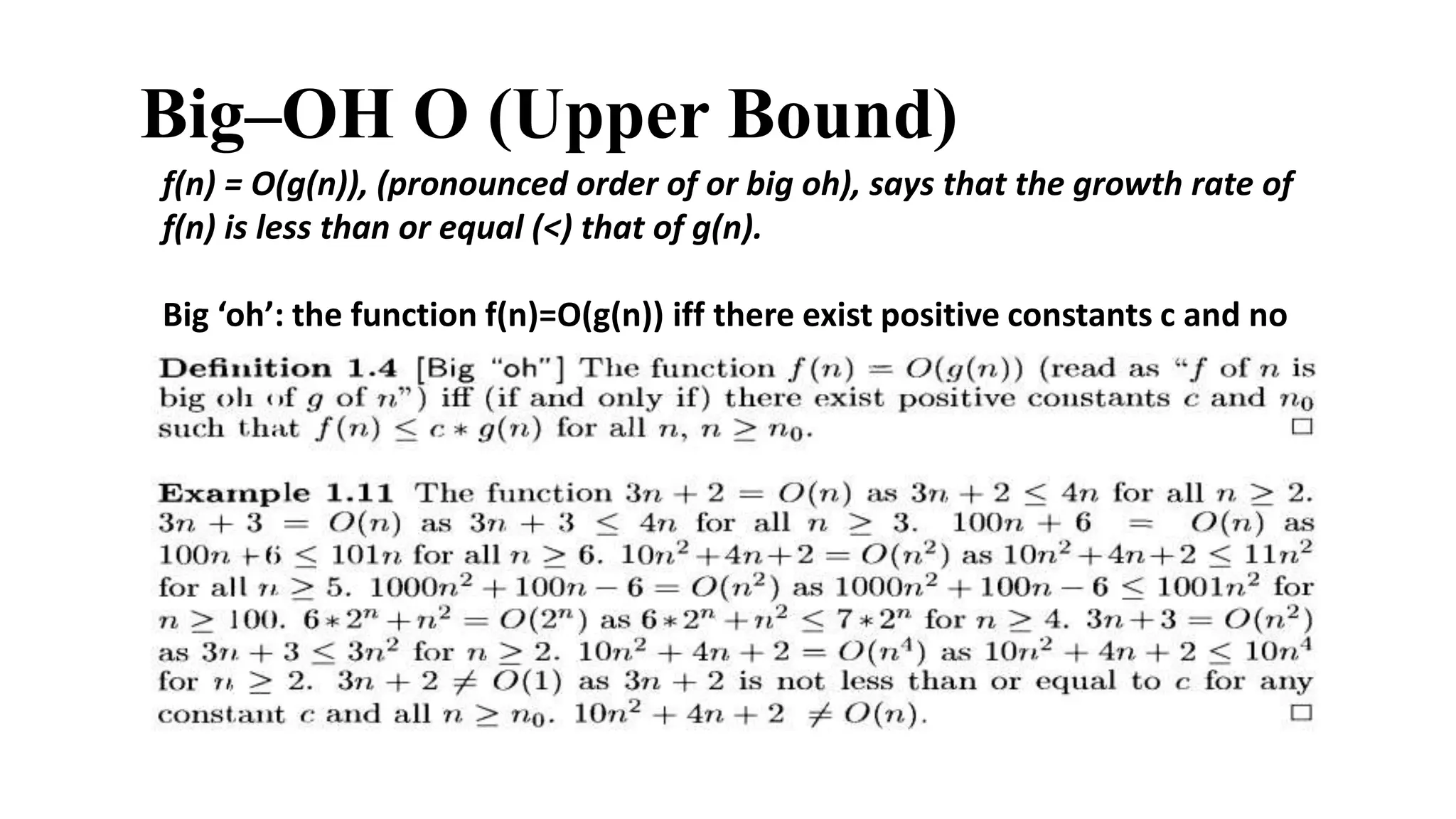

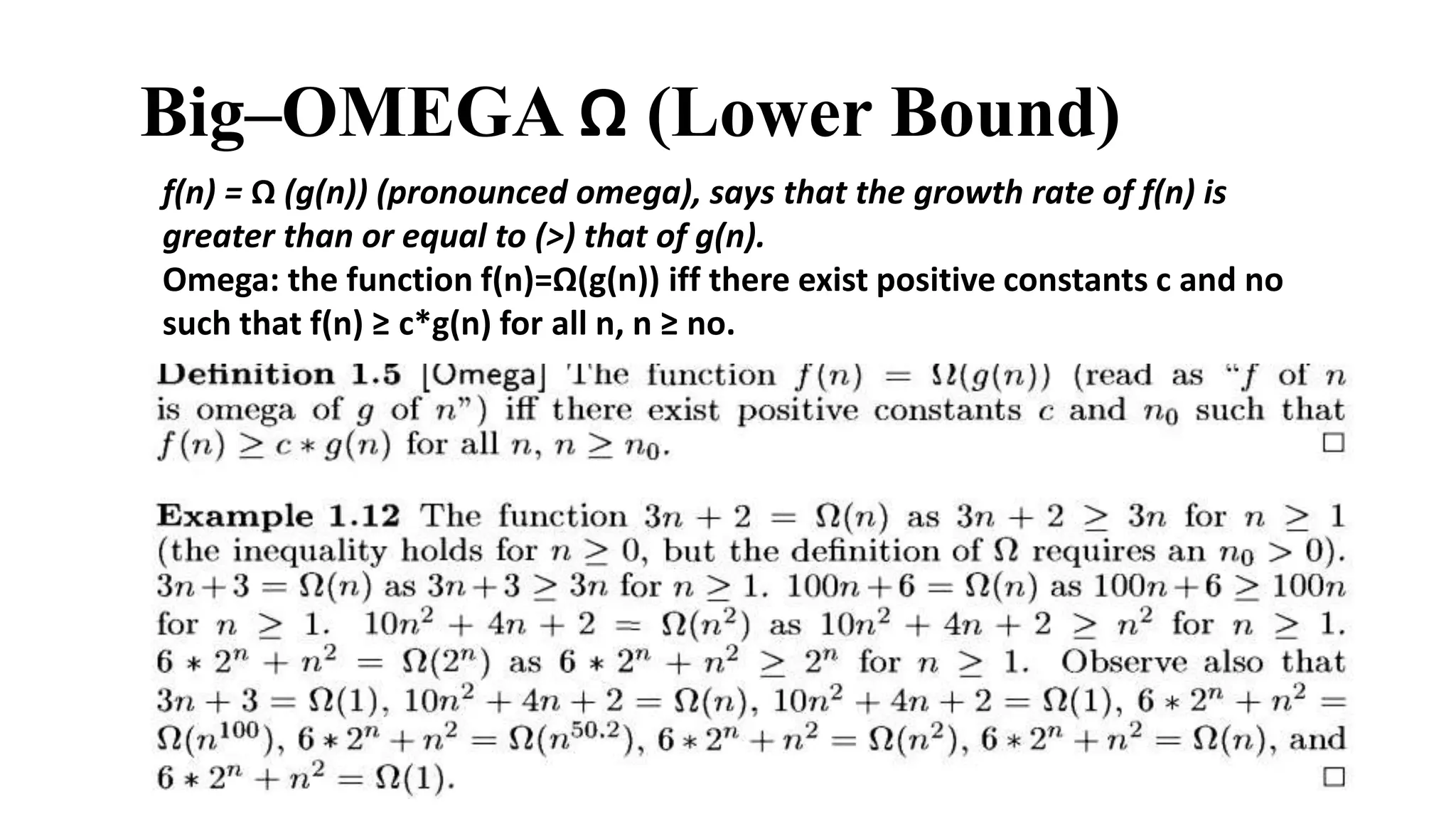

The document discusses the asymptotic notations used to characterize the complexity of algorithms: Big-O (O) notation gives an upper bound, Big-Omega (Ω) gives a lower bound, and Big-Theta (Θ) indicates the same order of growth. It defines each notation: f(n) = O(g(n)) means f(n) grows no faster than g(n), f(n) = Ω(g(n)) means f(n) grows at least as fast as g(n), and f(n) = Θ(g(n)) means f(n) grows at the same rate as g(n), up to constant factors. The document then covers the basics of probability theory, defining a sample space as the set of all possible outcomes of an experiment and an event as a subset of the sample space.
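Sample spaces and events map directly onto sets. The Python sketch below uses two tosses of a coin as an illustrative experiment (an assumption, not taken from the slides) and builds events as subsets of the sample space. The helper `uniform_probability` is hypothetical and assumes every outcome is equally likely, which the definition of a sample space does not itself require.

```python
from fractions import Fraction
from itertools import product

# Sample space for tossing a coin twice: every ordered pair of outcomes.
sample_space = {"".join(p) for p in product("HT", repeat=2)}

# Events are subsets of the sample space.
at_least_one_head = {s for s in sample_space if "H" in s}
both_same = {s for s in sample_space if s[0] == s[1]}

# Set operations give new events: intersection ("and") and complement ("not").
head_and_same = at_least_one_head & both_same   # {"HH"}
no_head = sample_space - at_least_one_head      # {"TT"}


def uniform_probability(event: set, space: set) -> Fraction:
    """P(event) under the assumption that all outcomes are equally likely."""
    if not event <= space:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(space))


print(sorted(sample_space))                                  # ['HH', 'HT', 'TH', 'TT']
print(uniform_probability(at_least_one_head, sample_space))  # 3/4
print(uniform_probability(both_same, sample_space))          # 1/2
```

Using `Fraction` keeps probabilities exact, so results such as 3/4 are not subject to floating-point rounding.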
