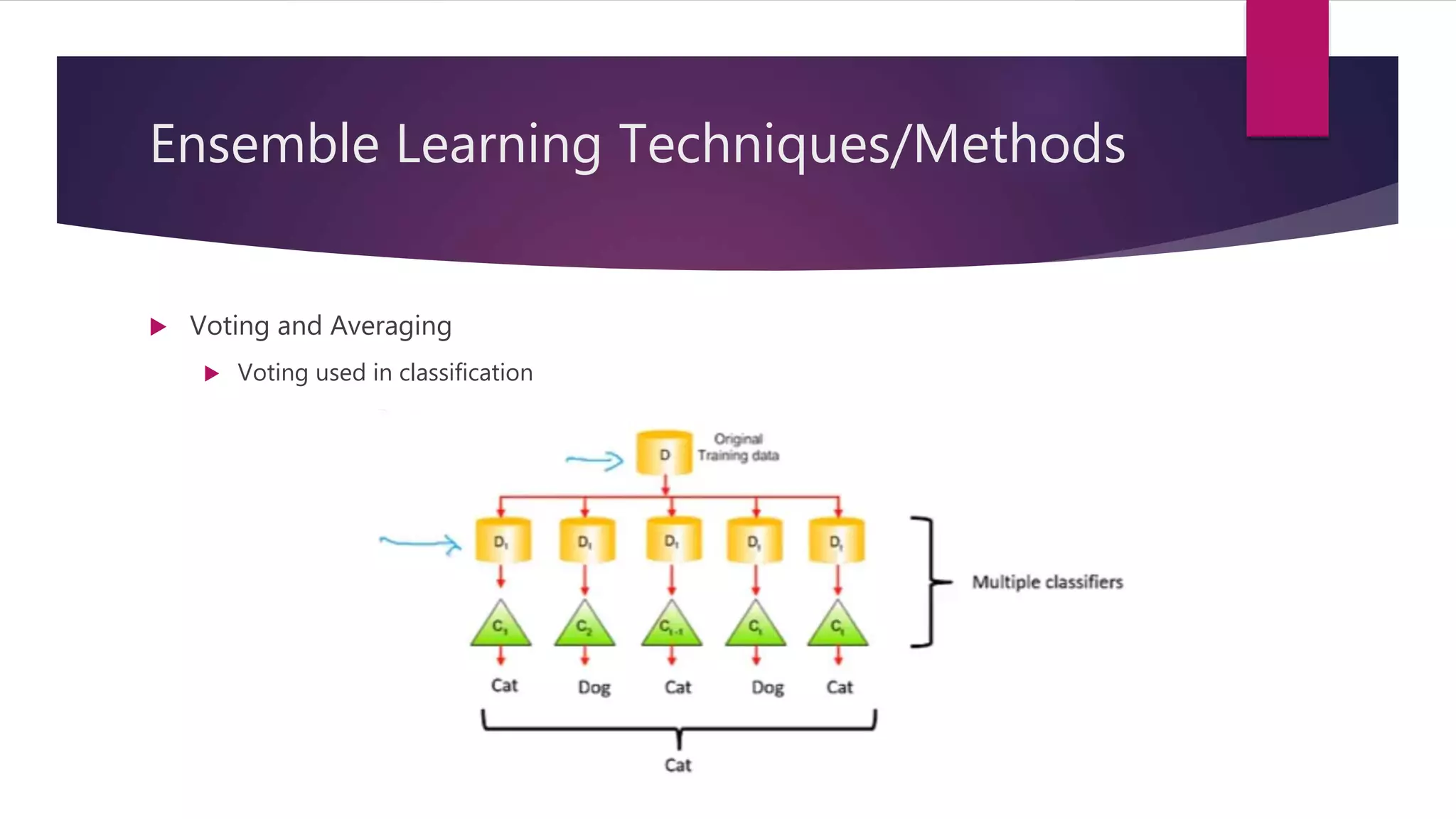

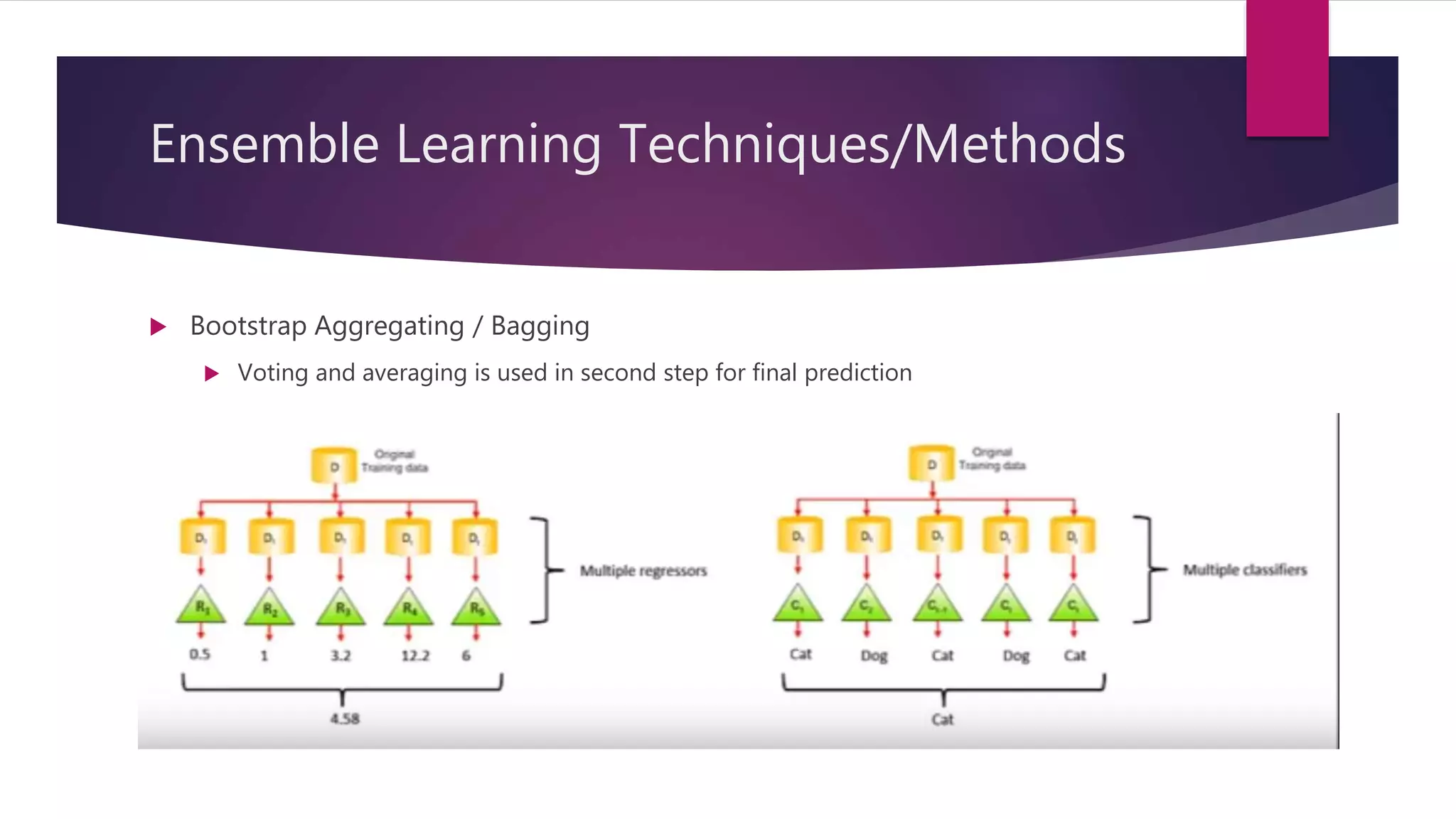

Ensemble learning combines the predictions of multiple models to produce results that are typically more accurate than those of any single model, in both regression and classification tasks. Common ensemble methods include voting, averaging, stacking, bagging, and boosting. Each combines base models in a different way: by aggregating their outputs (voting, averaging), by training a meta-model on their predictions (stacking), or by training them on resampled or reweighted data (bagging, boosting). Notably, Random Forest is a bagging-based algorithm, and AdaBoost is a boosting algorithm.
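To make the two simplest methods concrete, here is a minimal sketch of hard voting (for classification) and averaging (for regression) in plain Python. The function names `majority_vote` and `average` and all of the prediction values are hypothetical, chosen only for illustration; in practice the inputs would come from trained base models.

```python
from collections import Counter
from statistics import mean


def majority_vote(predictions):
    """Hard voting: return the most common class label for each sample.

    `predictions` is a list of per-model prediction lists of equal length.
    Ties are broken in favor of the label seen first.
    """
    return [Counter(sample).most_common(1)[0][0] for sample in zip(*predictions)]


def average(predictions):
    """Averaging: return the mean of the models' numeric outputs for each sample."""
    return [mean(sample) for sample in zip(*predictions)]


# Hypothetical outputs of three classifiers on four samples.
clf_preds = [
    ["cat", "dog", "dog", "cat"],
    ["cat", "cat", "dog", "dog"],
    ["dog", "dog", "dog", "cat"],
]
voted = majority_vote(clf_preds)

# Hypothetical outputs of three regressors on two samples.
reg_preds = [
    [2.0, 10.0],
    [3.0, 14.0],
    [4.0, 12.0],
]
averaged = average(reg_preds)

print(voted)
print(averaged)
```

In this example the second classifier is outvoted on the second and fourth samples, which shows how an ensemble can correct the mistakes of an individual model as long as the models do not all err in the same way.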