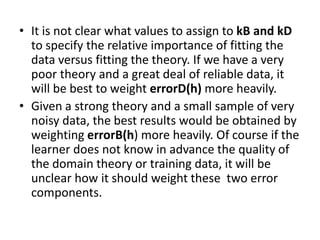

This document discusses two inductive-analytical approaches to learning from data: (1) choosing the hypothesis that minimizes a weighted sum of its error over the training examples and its error with respect to the domain theory, where the weights set the relative importance of each term, and (2) using Bayes' theorem to compute the posterior probability of a hypothesis given the data and prior knowledge. It also describes three ways prior knowledge can alter a hypothesis space search: by deriving the initial hypothesis from which the search begins, by altering the search objective so that the hypothesis fits both the data and the domain theory, and by altering the available search steps.
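
The first approach can be illustrated with a minimal sketch. It assumes a finite set of candidate hypotheses and measures each error as a simple misclassification rate. The names `combined_error`, `k_d`, `k_b`, the threshold classifiers, and the toy data are all hypothetical choices made for illustration and do not come from the document itself.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[float, int]            # (input x, class label)
Hypothesis = Callable[[float], int]    # maps an input to a class label


def error_rate(h: Hypothesis, labeled: Sequence[Example]) -> float:
    """Fraction of (x, y) pairs that h misclassifies."""
    return sum(h(x) != y for x, y in labeled) / len(labeled)


def combined_error(h: Hypothesis, data: Sequence[Example],
                   theory: Hypothesis, k_d: float, k_b: float) -> float:
    """Weighted sum: k_d * error on the data + k_b * disagreement with the theory."""
    # Assumption: theory disagreement is measured on the training inputs only.
    theory_labels = [(x, theory(x)) for x, _ in data]
    return k_d * error_rate(h, data) + k_b * error_rate(h, theory_labels)


def make_threshold(t: float) -> Hypothesis:
    """Toy hypothesis: predict class 1 when x >= t, else class 0."""
    return lambda x: int(x >= t)


# Toy training examples and an imperfect domain theory (both invented here).
data = [(1.0, 0), (2.0, 0), (3.0, 1), (4.0, 1)]
theory = make_threshold(3.5)
candidates = {t: make_threshold(t) for t in (1.5, 2.5, 3.5)}


def best_threshold(k_d: float, k_b: float) -> float:
    """Return the candidate threshold with the lowest combined error."""
    return min(candidates,
               key=lambda t: combined_error(candidates[t], data, theory, k_d, k_b))


print(best_threshold(0.8, 0.2))  # weights favour the training data
print(best_threshold(0.2, 0.8))  # weights favour the domain theory
```

In this toy setup, weighting the data heavily selects the threshold that fits every training example, while weighting the theory heavily selects the threshold that matches the theory even though it misclassifies one example. This is the trade-off the weights are meant to control.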