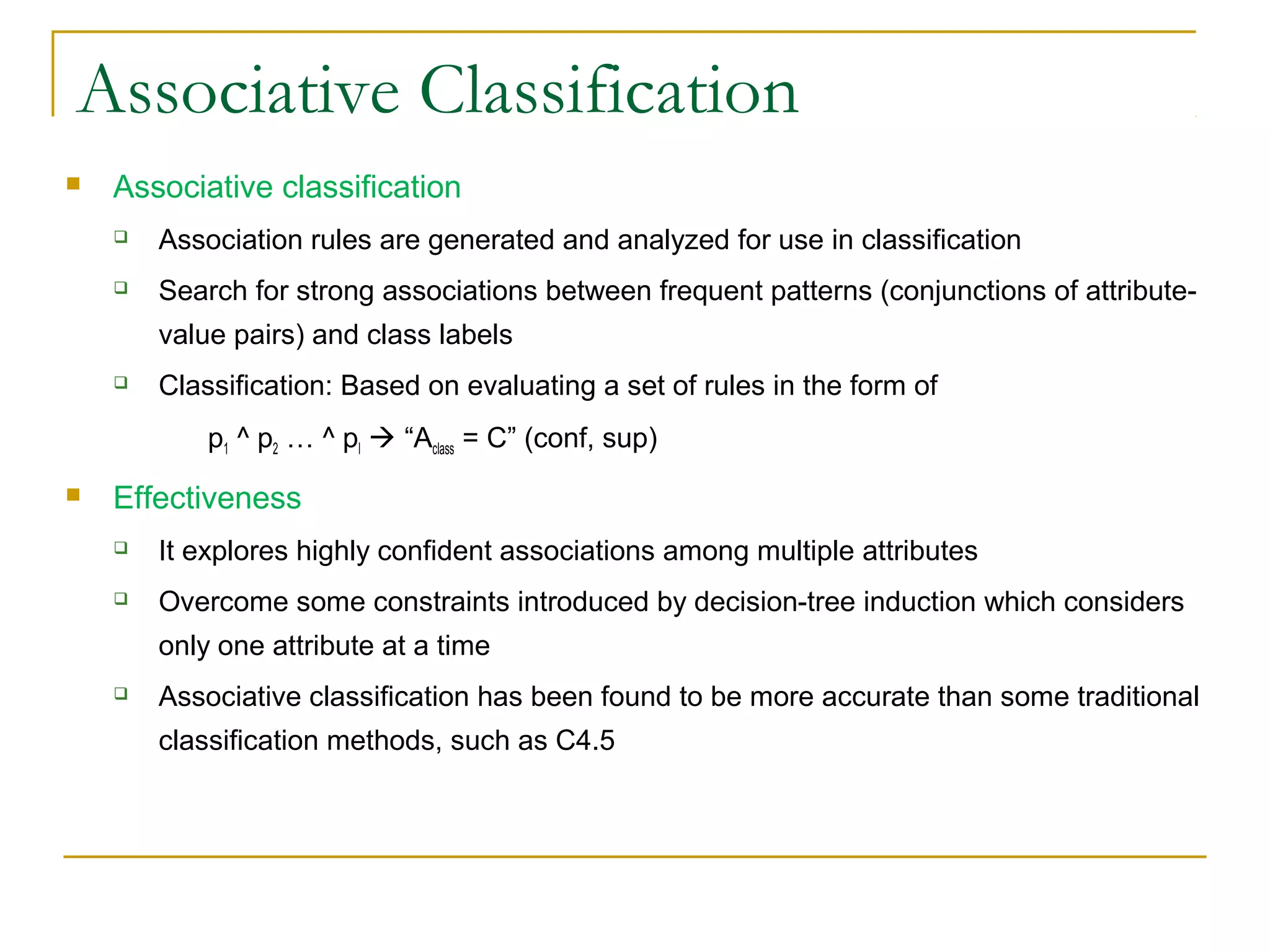

Support vector machines (SVMs) are supervised machine learning models that can be used for both classification and regression. For classification, an SVM finds the hyperplane in a multidimensional feature space that separates the classes with the largest margin, that is, the greatest distance to the nearest training points of each class. When the classes are not linearly separable, a nonlinear kernel can implicitly map the input data into a higher-dimensional space where a separating hyperplane exists, which lets the model capture complex patterns. Associative classification is an alternative approach: it uses association rule mining to generate rules that describe relationships between attribute values and class labels, and then applies those rules to classify new records.
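To make the kernel idea concrete, here is a minimal sketch in plain Python. It is not a trained SVM: the toy XOR-style data, the helper names (`phi`, `poly_kernel`, `decision`), and the hand-picked hyperplane `w`, `b` are all assumptions chosen for illustration. It shows two things: data that no line can separate in two dimensions becomes separable by a hyperplane after a degree-2 feature map, and a kernel function can compute the same inner product without ever building the mapped vectors.

```python
import math

def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))

def phi(x):
    """Explicit degree-2 feature map (illustrative): (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

def decision(x, w, b):
    """Return +1 or -1 depending on which side of the hyperplane phi(x) falls."""
    return 1 if dot(w, phi(x)) + b > 0 else -1

# XOR-style toy data: no straight line in 2D separates the two classes.
points = [(1, 1), (-1, -1), (1, -1), (-1, 1)]
labels = [1, 1, -1, -1]

# Hand-picked (not learned) hyperplane in the 3D feature space.
w, b = (0.0, 1.0, 0.0), 0.0
predictions = [decision(p, w, b) for p in points]

# The kernel gives the same value as the inner product of the mapped vectors.
x, z = (1, 2), (3, 4)
kernel_value = poly_kernel(x, z)
explicit_value = dot(phi(x), phi(z))

print(predictions == labels)
print(math.isclose(kernel_value, explicit_value))
```

In practice an SVM library would learn `w` and `b` from the data by maximizing the margin, and would work only with kernel values rather than the explicit map `phi`.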