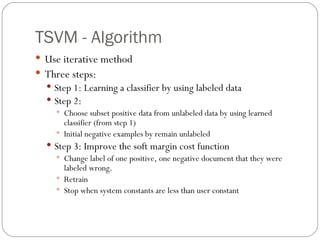

The document discusses text categorization and compares several machine learning algorithms for the task: Support Vector Machines (SVM), Transductive SVM (TSVM), and SVM combined with K-Nearest Neighbors (SVM-KNN). It gives an overview of text categorization and its challenges, then describes SVM; TSVM, which uses unlabeled data to improve classification; and SVM-KNN, which combines SVM with KNN to better handle unlabeled data. Pseudocode is presented for the algorithms.

![Semi-Supervised: Semi-supervised learning (SSL) is halfway between supervised and unsupervised learning [3]. The SSL data set X = (x_1, x_2, ..., x_n) can be separated into two parts: the points X_h = (x_1, ..., x_h), for which labels Y_h = (y_1, ..., y_h) are provided, and the points X_t = (x_{h+1}, ..., x_{h+t}), the labels of which are not known. SSL is most useful when there is much more unlabeled data than labeled data.](https://image.slidesharecdn.com/textcategorization-111031213457-phpapp02/85/Text-categorization-36-320.jpg)

![Algorithm KNN: The KNN algorithm was proposed by Cover and Hart (1968) [5]. The nearest neighbors are found using the Euclidean distance between X_i = (x_1, ..., x_i) and X_j = (x_1, ..., x_j). KNN is suitable for classifying example sets whose class boundaries intersect and whose examples overlap.](https://image.slidesharecdn.com/textcategorization-111031213457-phpapp02/85/Text-categorization-39-320.jpg)
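The KNN slide can be illustrated with a minimal sketch: Euclidean distance between two feature vectors, followed by a majority vote among the k nearest labeled examples. The function names, the value of `k`, and the toy data are assumptions made for illustration; they do not come from the slides.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    ranked = sorted(zip(train_x, train_y), key=lambda pair: euclidean(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy two-class data (hypothetical values, for illustration only).
train_x = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
train_y = ["A", "A", "A", "B", "B", "B"]

label_near_a = knn_predict(train_x, train_y, (0.5, 0.5))
label_near_b = knn_predict(train_x, train_y, (5.5, 5.5))
```

With an odd `k` and two classes the vote cannot tie, which is one reason small odd values are a common choice in sketches like this.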

![Pseudocode SVM-KNN [1]: Step 1: Use the labeled data available in the data set as the initial training set and construct a weak classifier SVM1 from it. Step 2: Use SVM1 to predict the labels of all the remaining unlabeled data, then pick out 2n examples located around the decision boundary as boundary vectors: choose an example x_i from class A (A is the label), calculate the Euclidean distance between x_i and all the examples of class B (B is the label), and pick out the n examples of B with the n smallest distances; similarly, choose y_j from class B and pick out the n nearest examples of A. Together the 2n boundary vectors form a new testing set. Step 3: A KNN classifier classifies the new testing set using the initial training set, so the boundary vectors get new labels. Step 4: Add the boundary vectors and their new labels to the initial training set to enlarge it, then retrain a new classifier SVM2. Step 5: Repeat the steps above until the number of training examples is m times the size of the whole data set.](https://image.slidesharecdn.com/textcategorization-111031213457-phpapp02/85/Text-categorization-46-320.jpg)
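Steps 2 to 4 of the pseudocode can be sketched for a single iteration as follows. This is an illustration under stated assumptions, not the authors' implementation: the SVM1 predictions are hard-coded stand-ins (no SVM is trained here), the reference points x_i and y_j are taken to be the first element of each predicted class because the slide does not say how they are chosen, and all names and data values are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def n_nearest(anchor, candidates, n):
    """Return the n candidates closest to `anchor`."""
    return sorted(candidates, key=lambda c: euclidean(anchor, c))[:n]


def boundary_vectors(class_a, class_b, n):
    """Step 2: n points of B nearest to x_i, plus n points of A nearest to y_j.

    Assumption: x_i and y_j are the first elements of class_a and class_b.
    """
    x_i, y_j = class_a[0], class_b[0]
    return n_nearest(x_i, class_b, n) + n_nearest(y_j, class_a, n)


def knn_label(train_x, train_y, query, k=3):
    """Step 3: majority vote among the k nearest labeled examples."""
    ranked = sorted(zip(train_x, train_y), key=lambda p: euclidean(p[0], query))
    return Counter(label for _, label in ranked[:k]).most_common(1)[0][0]


# Initial labeled training set (hypothetical values).
train_x = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (6.0, 6.0), (5.0, 6.0), (6.0, 5.0)]
train_y = ["A", "A", "A", "B", "B", "B"]

# Unlabeled points grouped by the label SVM1 would predict (stand-in for Step 2's prediction).
pred_a = [(1.0, 1.0), (2.0, 2.0), (0.5, 0.5)]
pred_b = [(5.0, 5.0), (4.0, 4.0), (3.4, 3.4)]

boundary = boundary_vectors(pred_a, pred_b, n=2)                      # Step 2
new_labels = [knn_label(train_x, train_y, v) for v in boundary]      # Step 3

# Step 4: enlarge the training set; SVM2 would be retrained on this.
enlarged_x = train_x + boundary
enlarged_y = train_y + new_labels
```

In a full loop, the enlarged set would feed the next SVM and the process would repeat until the stopping condition in Step 5 is met.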

![References: [1] Kunlun Li, Xuerong Luo, and Ming Jin. Semi-supervised Learning for SVM-KNN. Journal of Computers, 5(5): 671-678, May 2010. [2] X. J. Zhu. Semi-supervised learning literature survey. Technical Report 1530, Department of Computer Sciences, University of Wisconsin at Madison, Madison, WI, December 2007. [3] Fung, G., and Mangasarian, O. Semi-supervised support vector machines for unlabeled data classification. Technical Report 99-05, Data Mining Institute, University of Wisconsin Madison, 1999. [4] Zhou, Z.-H., Zhan, D.-C., and Yang, Q. Semi-supervised learning with very few labeled training examples. Twenty-Second AAAI Conference on Artificial Intelligence (AAAI-07), 2007. [5] Dasarathy, B. V. Nearest Neighbor (NN) Norms: NN Pattern Classification Techniques. IEEE Computer Society Press, 1990.](https://image.slidesharecdn.com/textcategorization-111031213457-phpapp02/85/Text-categorization-54-320.jpg)