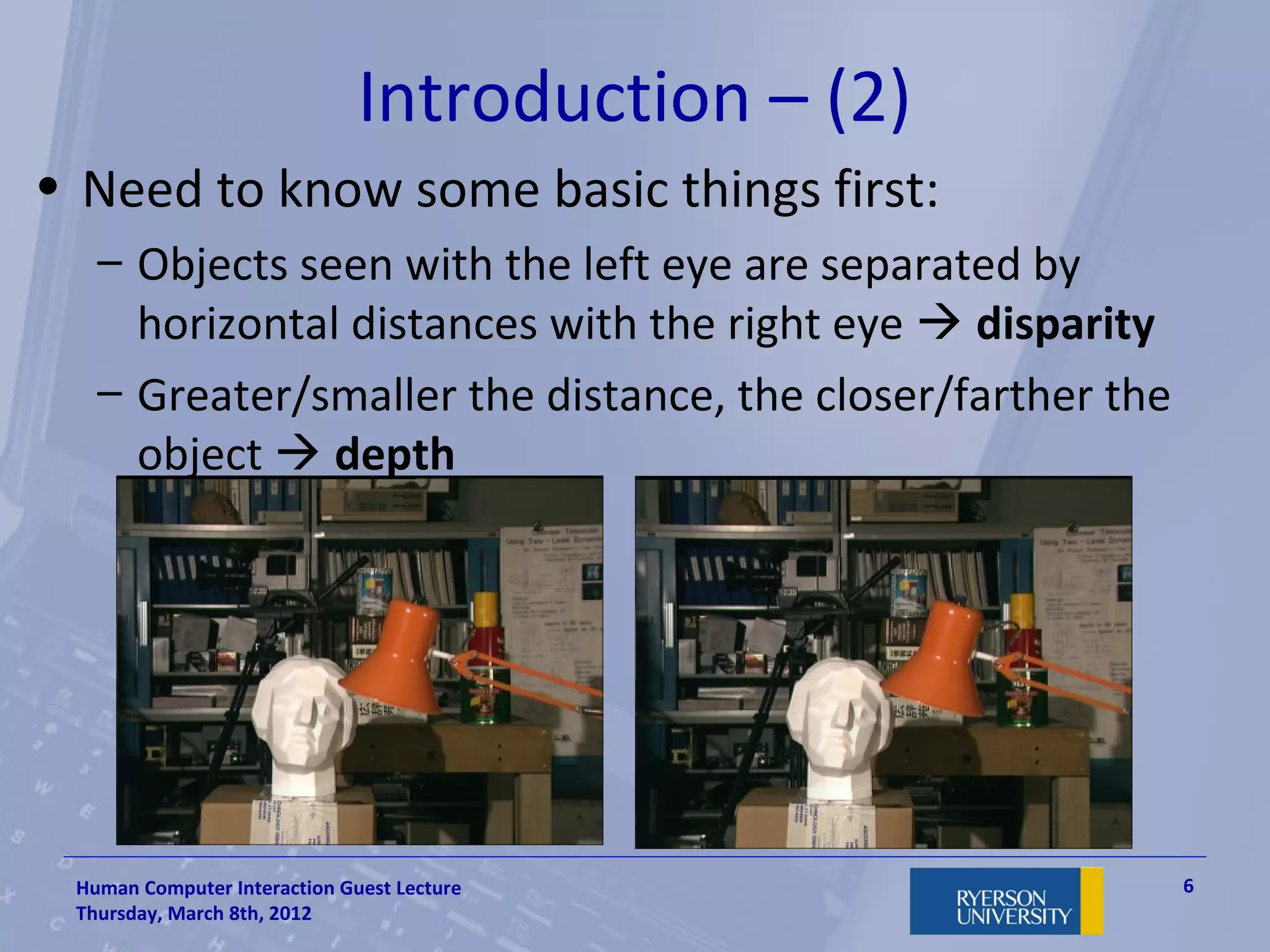

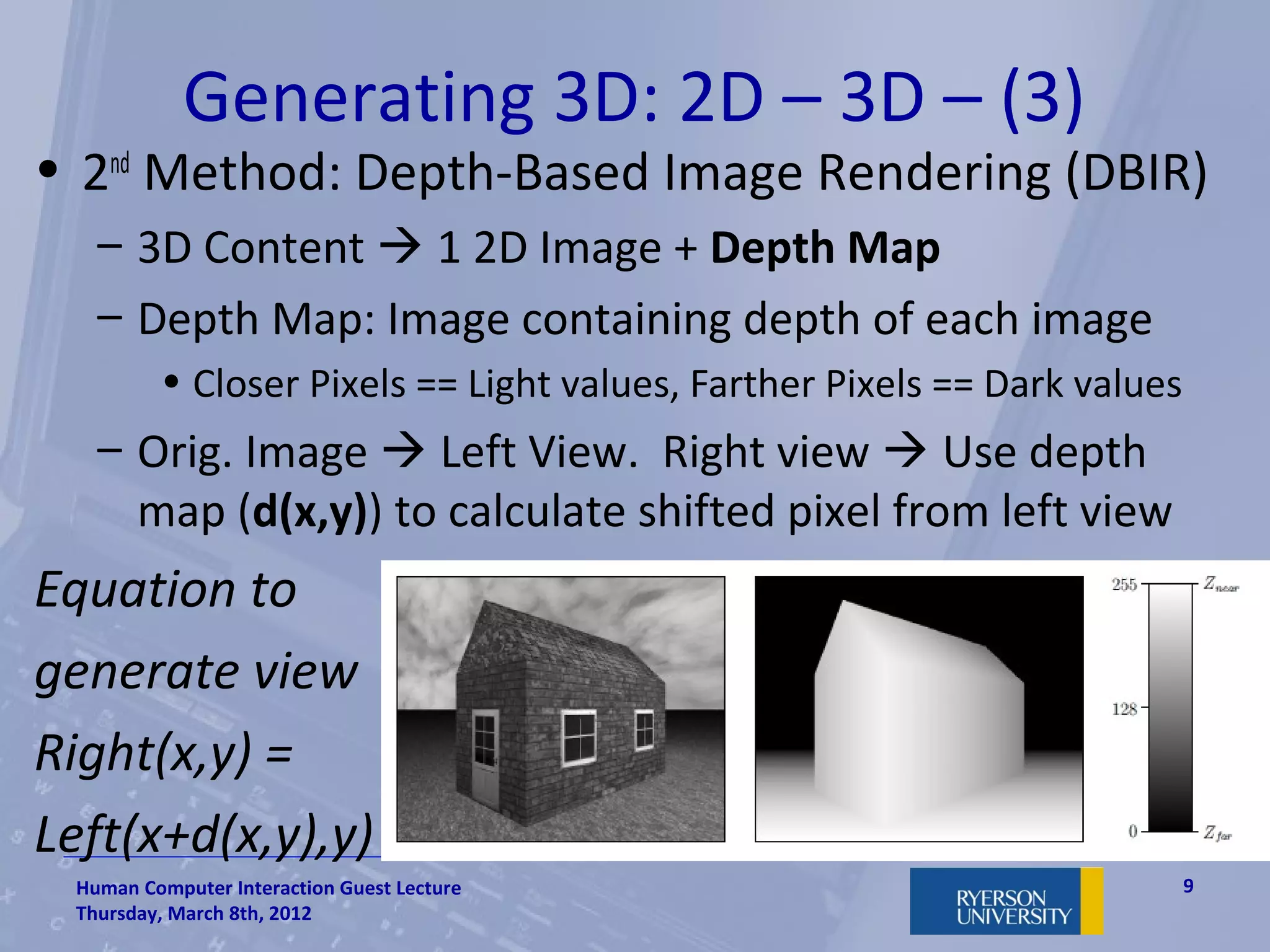

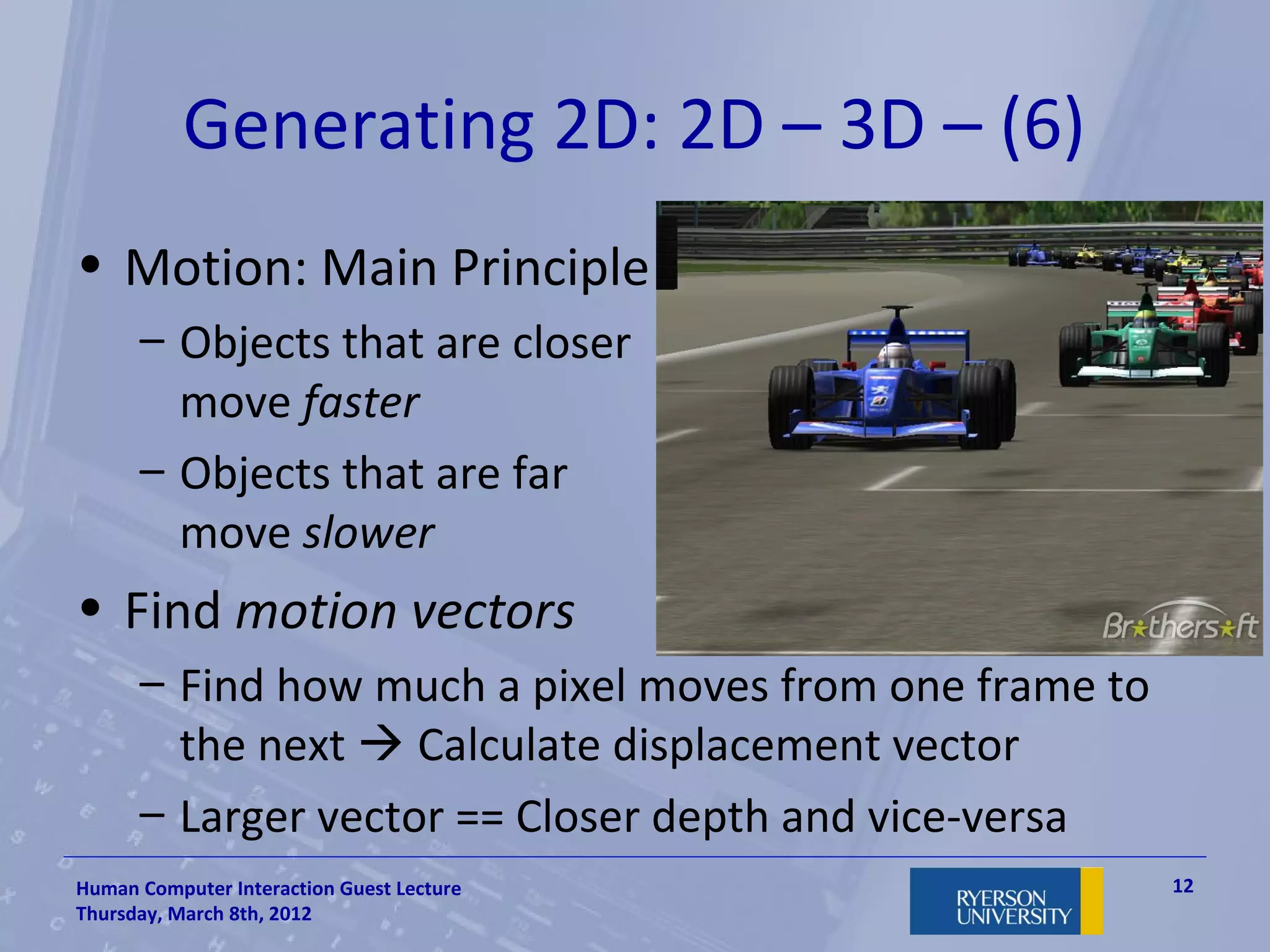

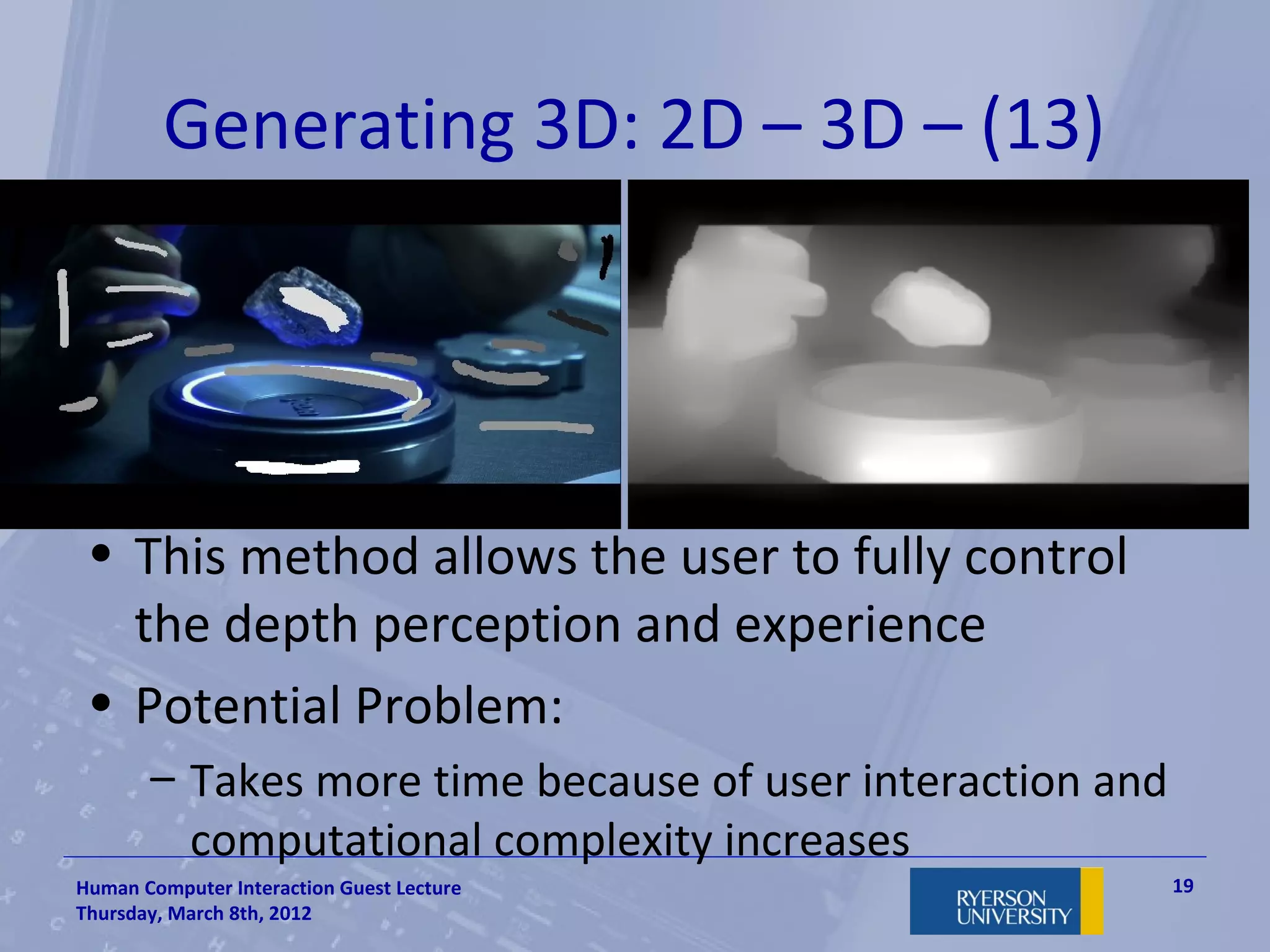

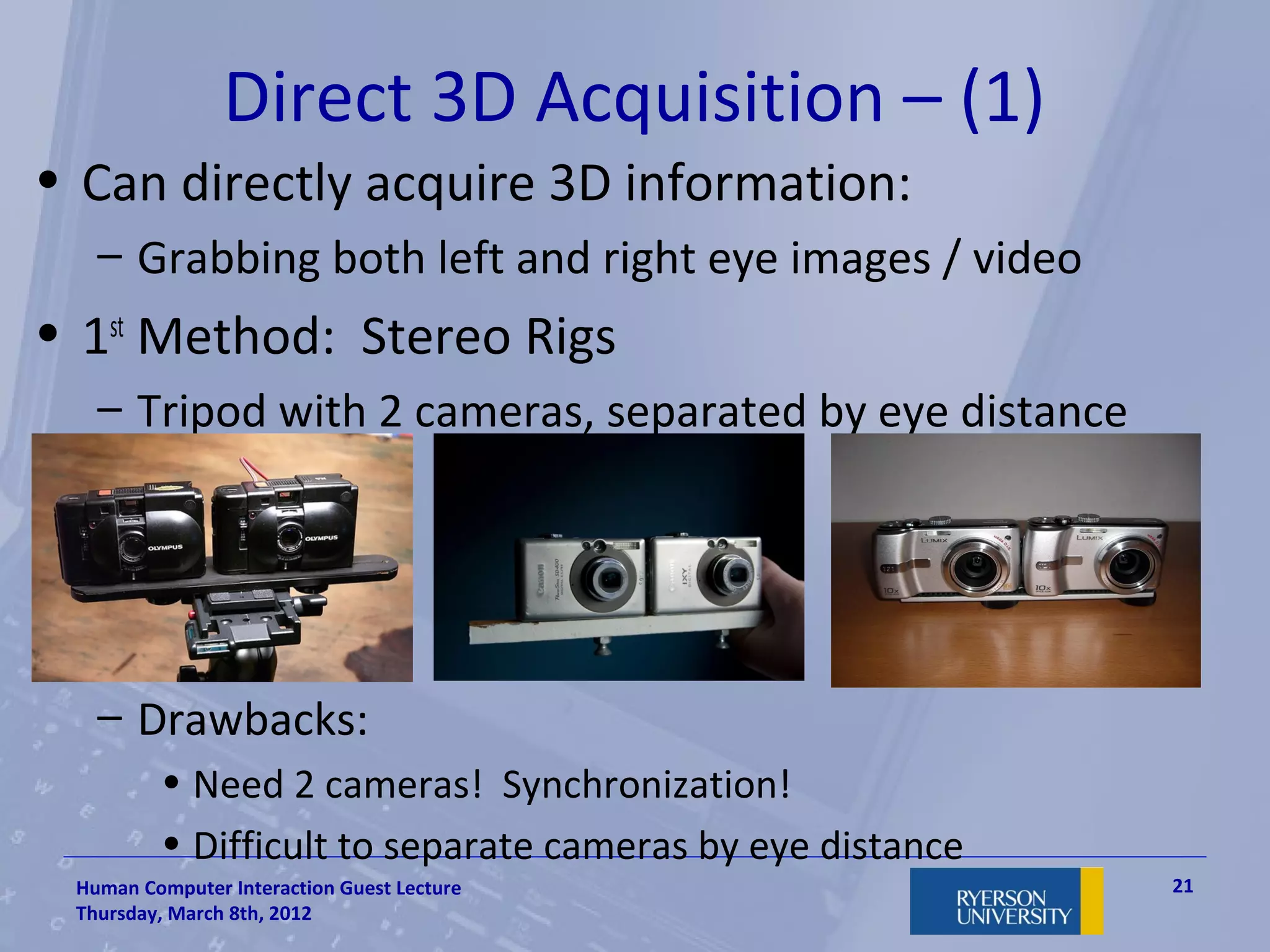

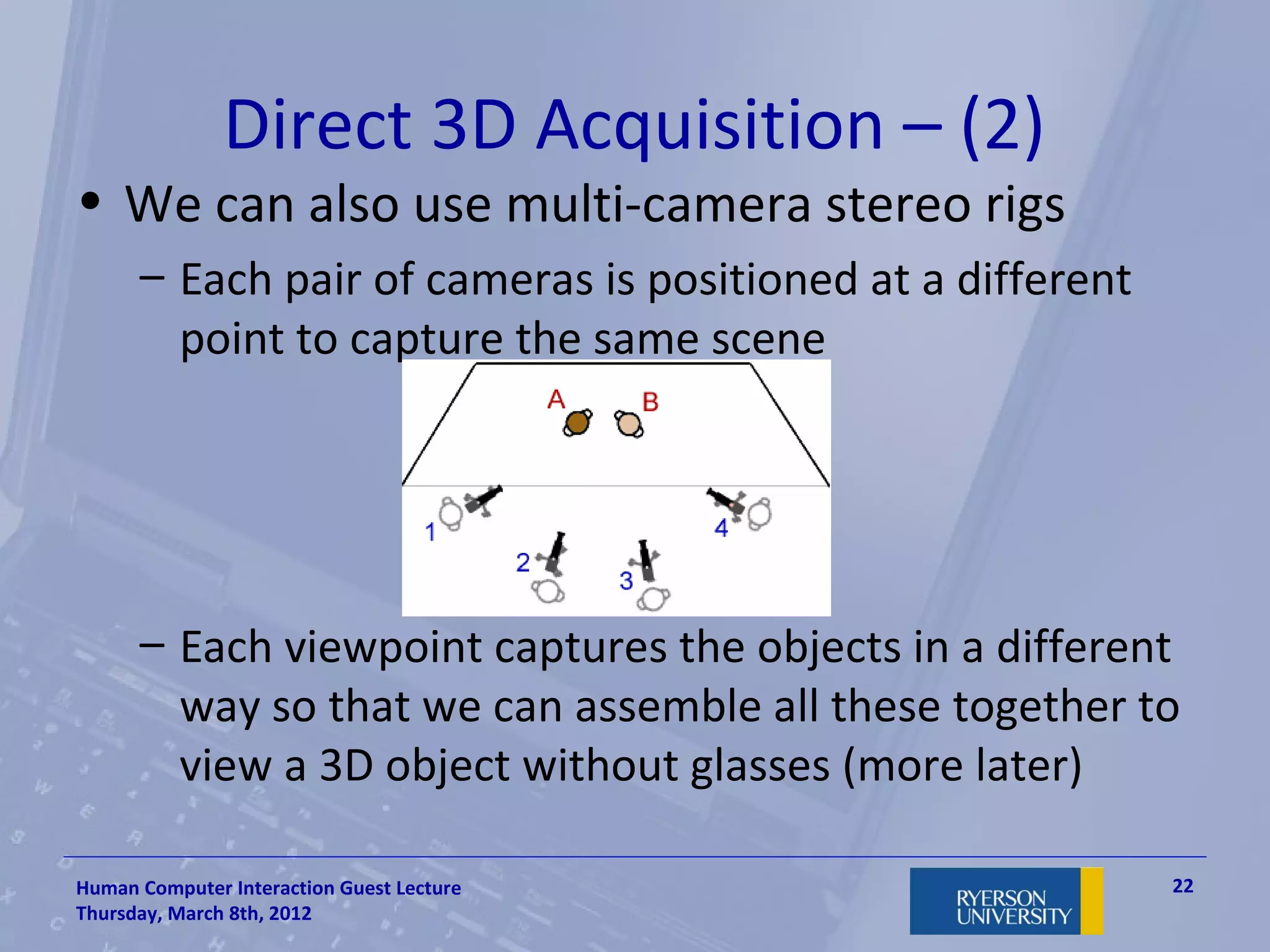

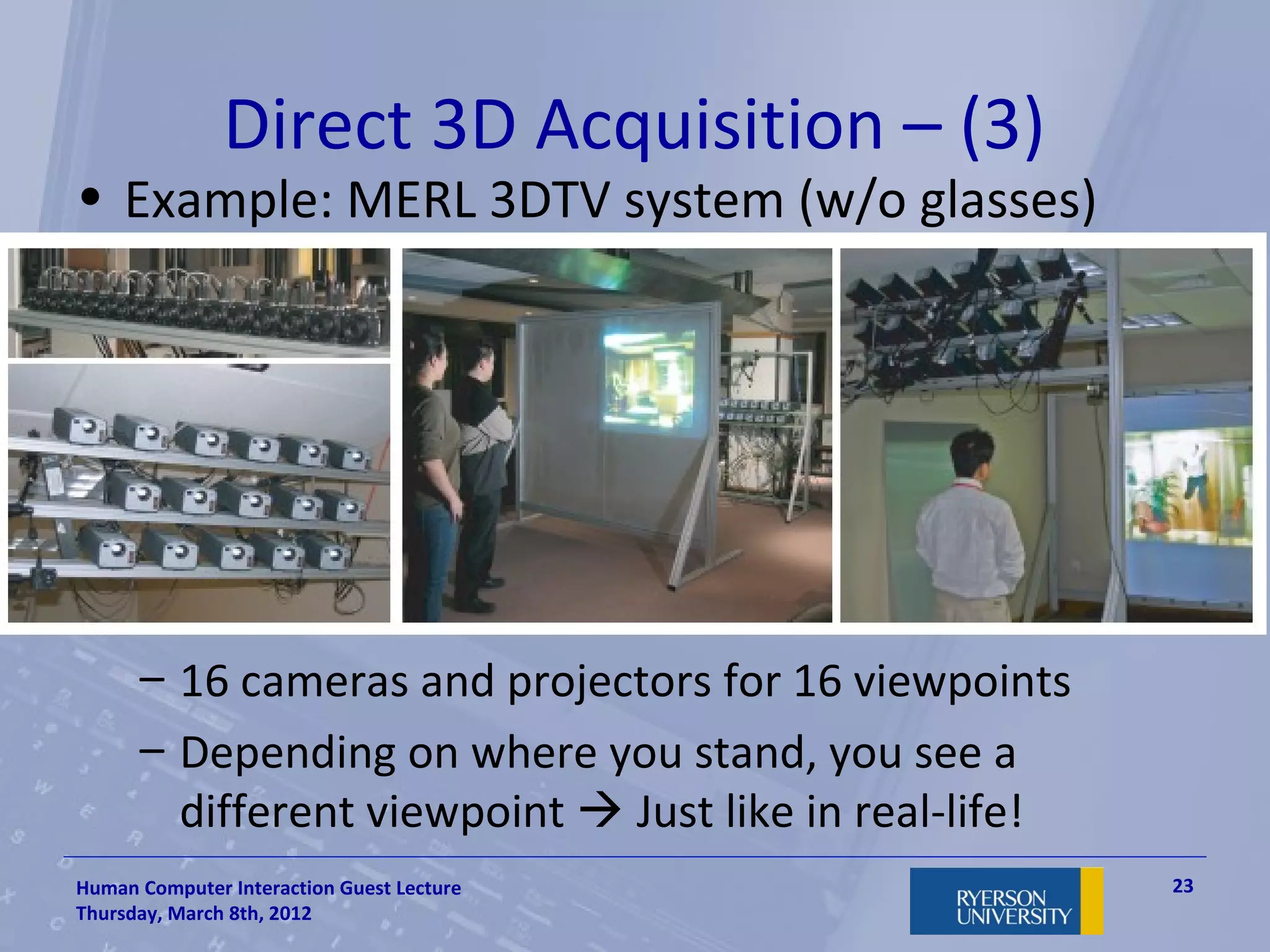

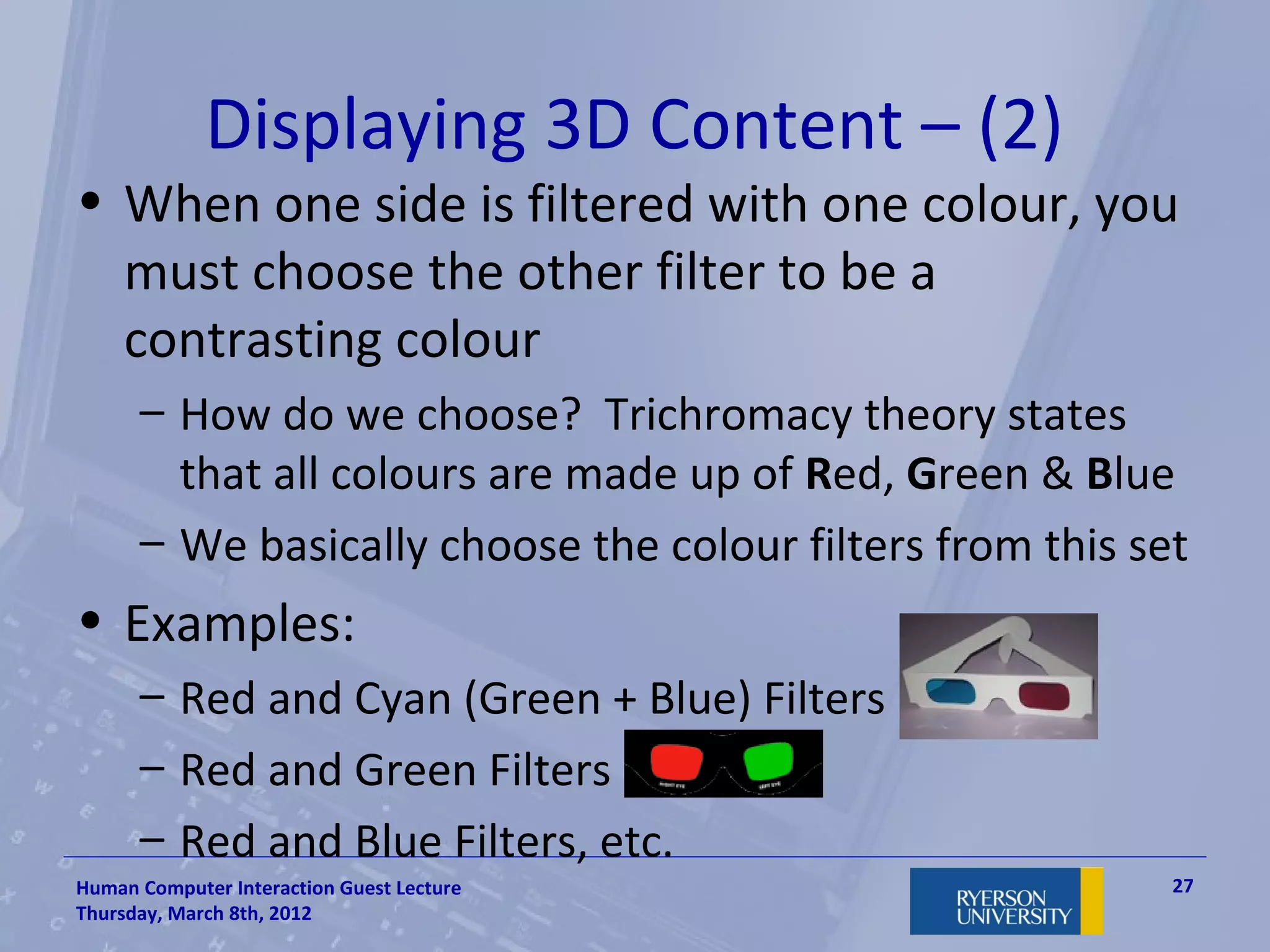

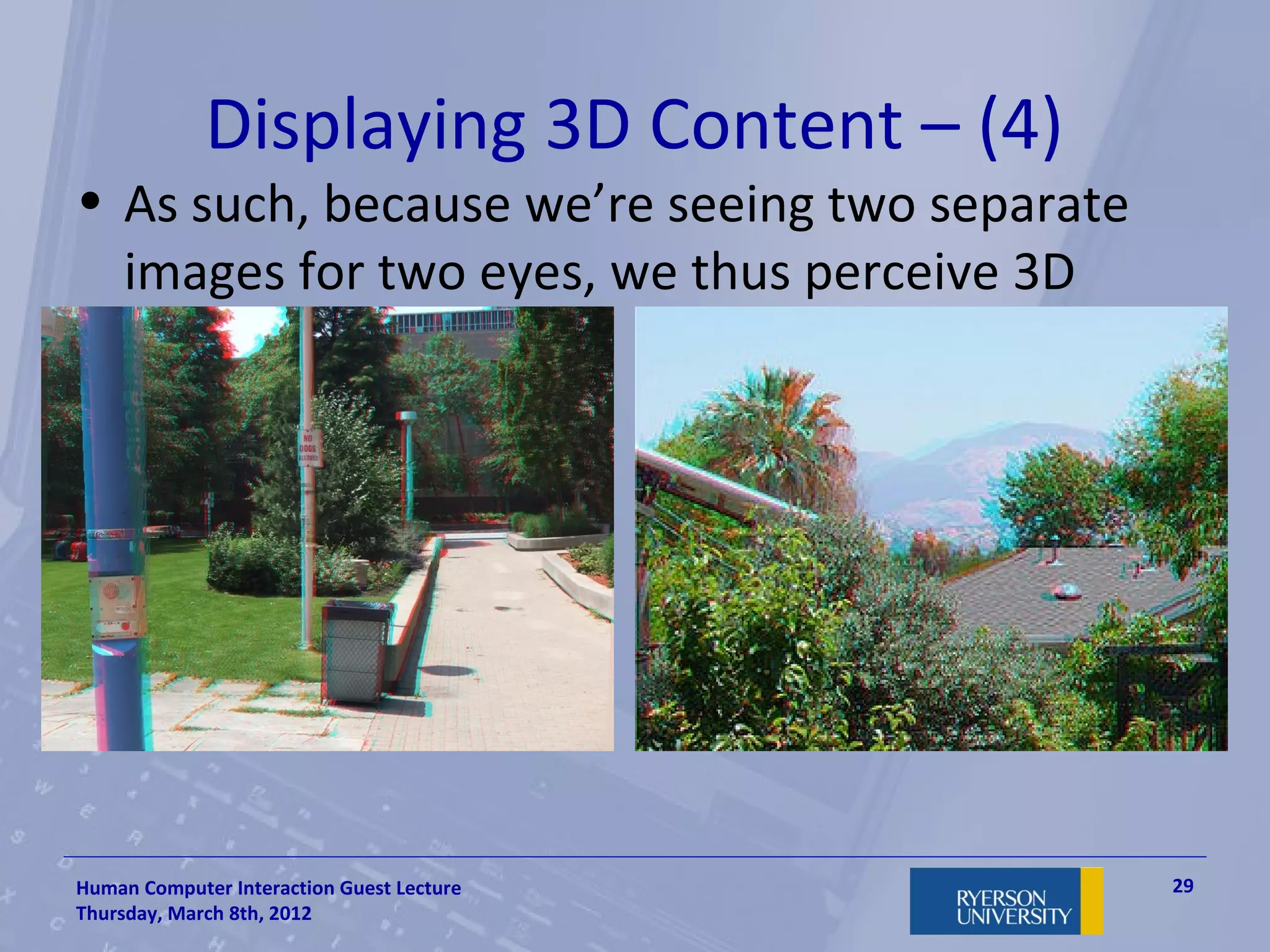

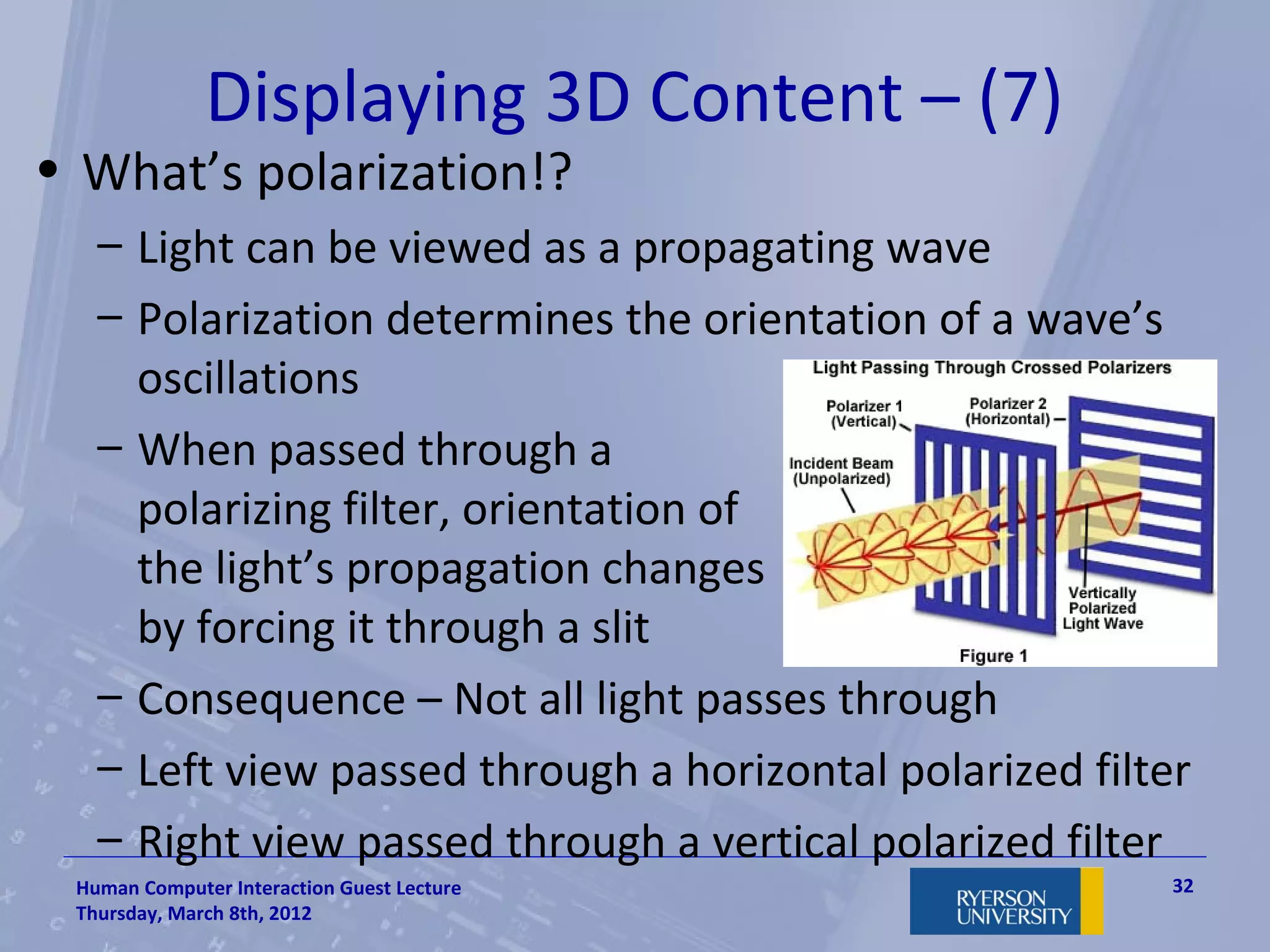

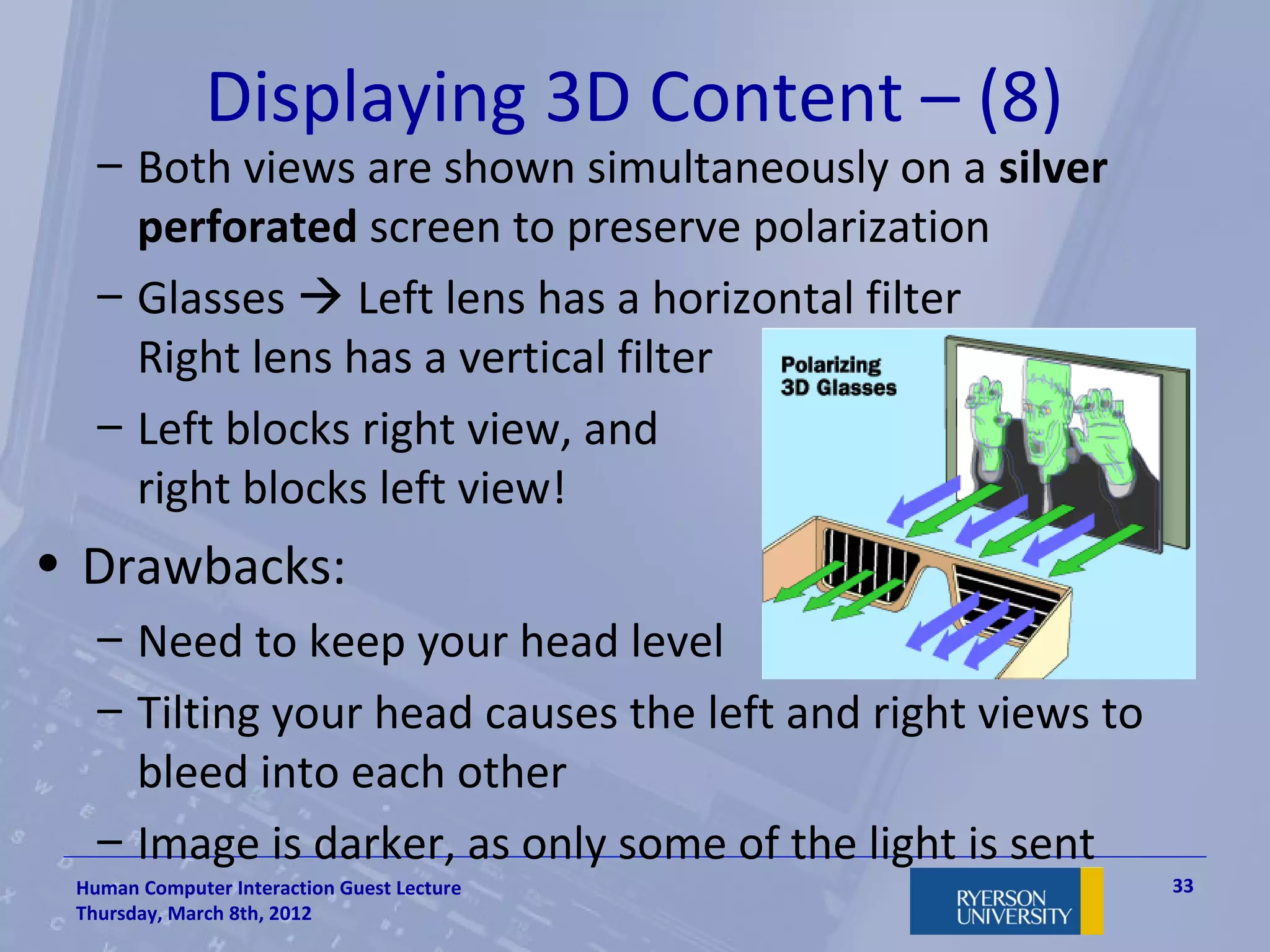

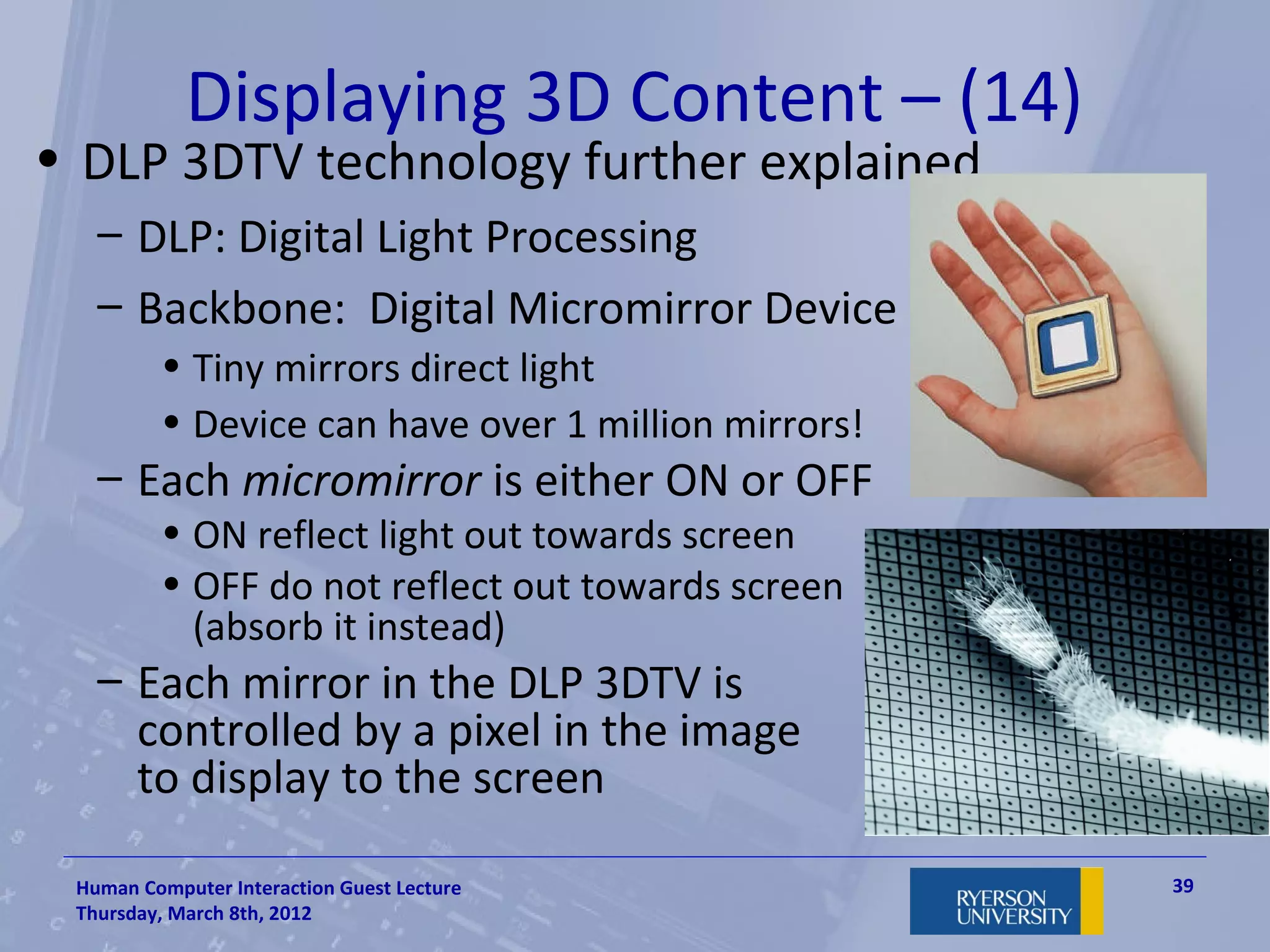

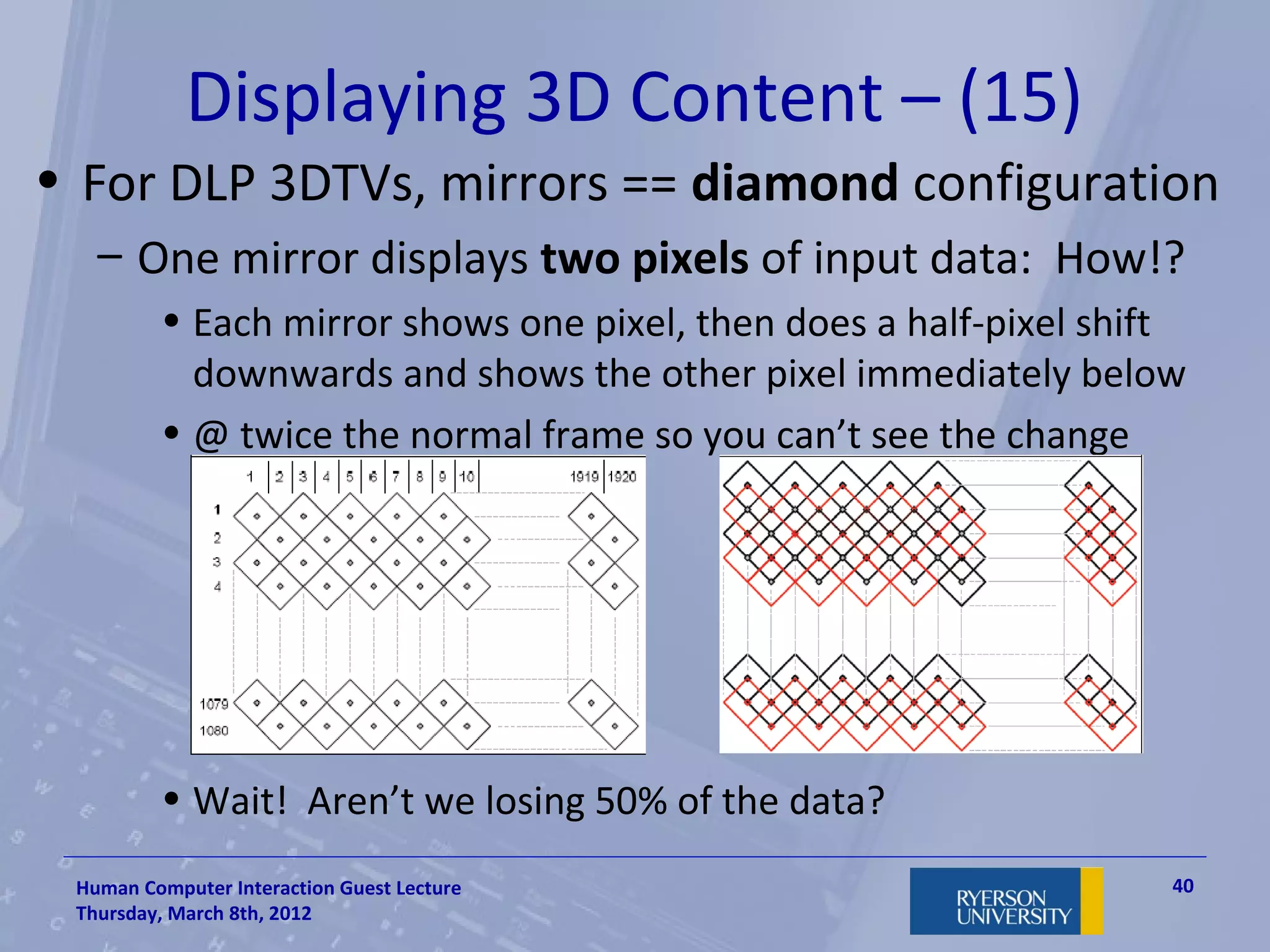

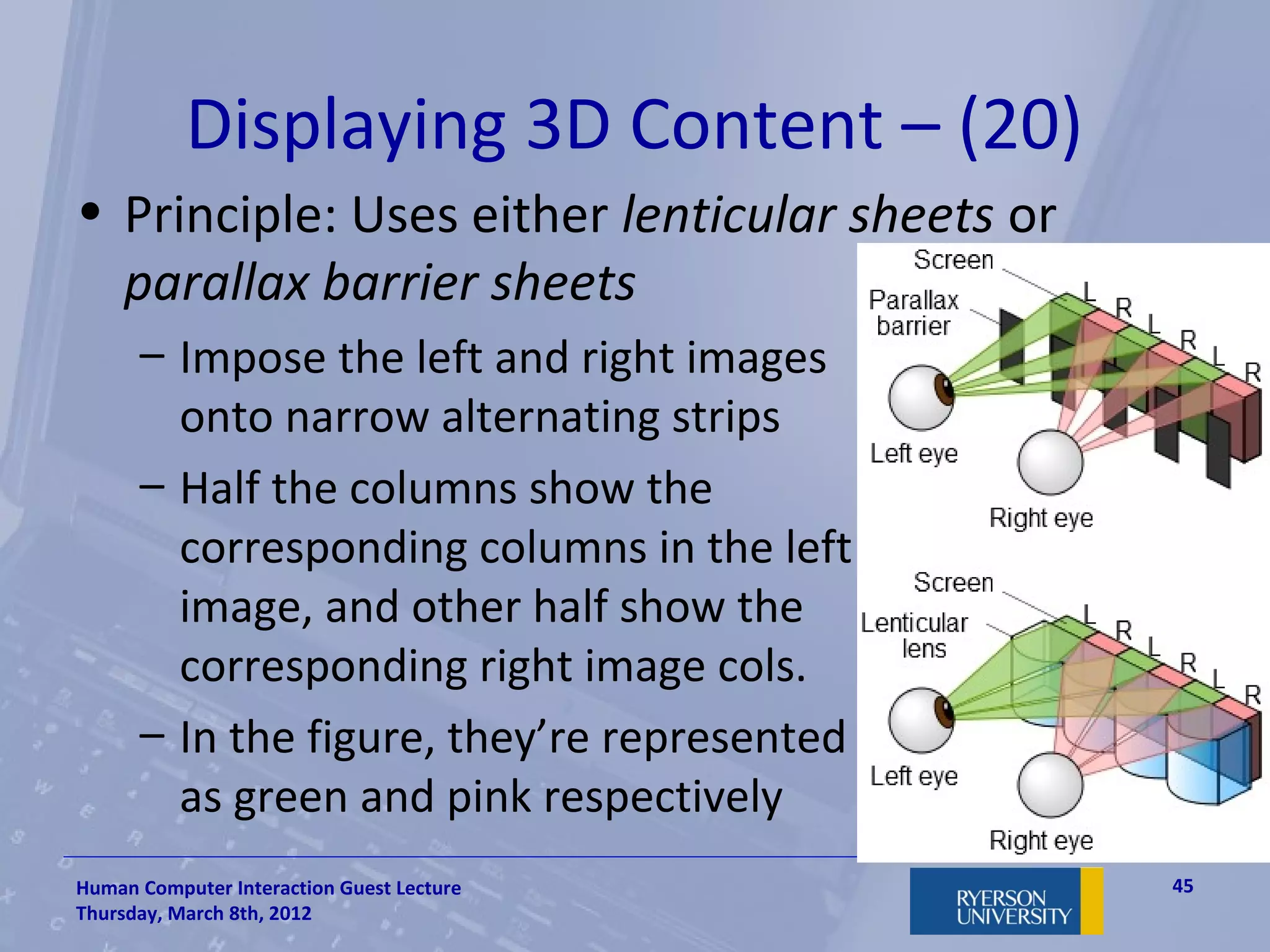

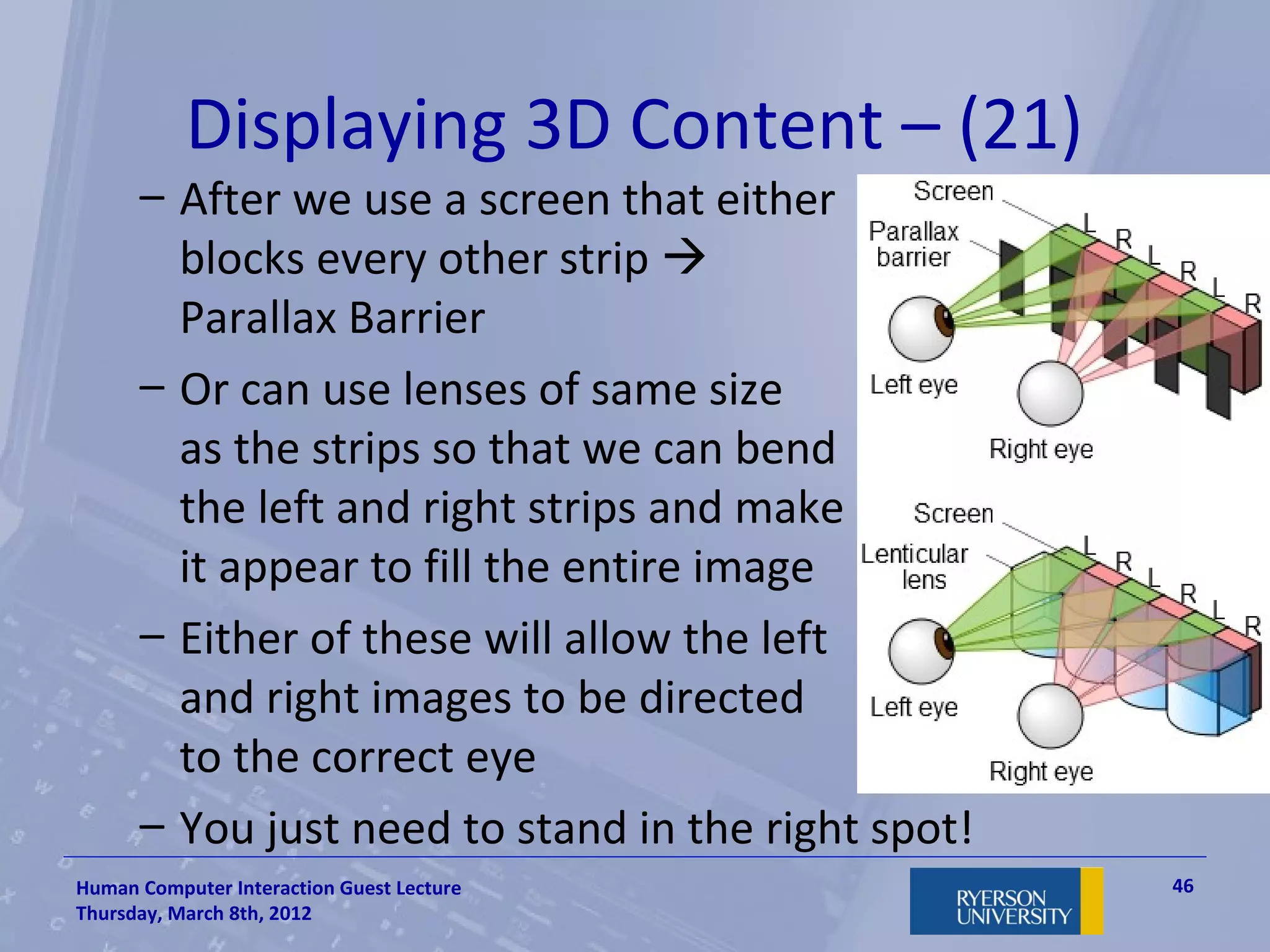

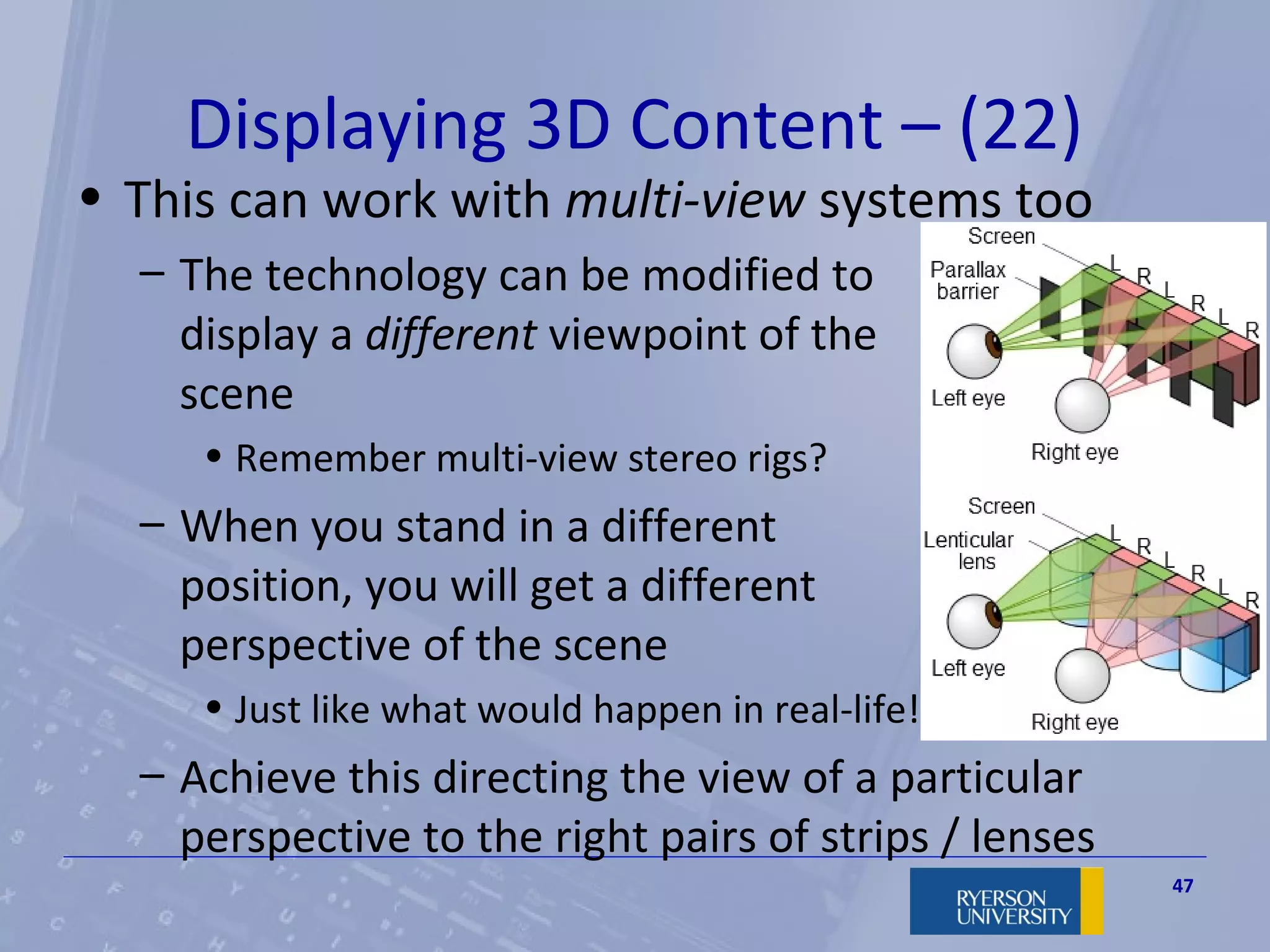

The document discusses stereoscopic 3D generation methods and the display technologies used in both industry and home entertainment. It covers techniques for converting 2D images to 3D, for acquiring 3D content directly, and for presenting stereoscopic images through anaglyphs, polarized glasses, and shutter glasses. The presentation emphasizes the algorithms and technologies that produce the perception of depth, and outlines advances and open challenges in the field.
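As a rough illustration of the anaglyph display method mentioned above, the sketch below composes a simple red-cyan color anaglyph from a stereo pair. The red channel is taken from the left-eye view and the green and blue (cyan) channels from the right-eye view, so red/cyan glasses route each view to the matching eye. It assumes images are given as equal-sized nested lists of `(R, G, B)` tuples. The function name `make_red_cyan_anaglyph` is hypothetical and not taken from the presentation.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def make_red_cyan_anaglyph(left: Image, right: Image) -> Image:
    """Hypothetical helper: build a red-cyan color anaglyph.

    Red comes from the left view; green and blue come from the right view.
    Both images must have identical dimensions.
    """
    if len(left) != len(right) or any(
        len(row_l) != len(row_r) for row_l, row_r in zip(left, right)
    ):
        raise ValueError("left and right images must have the same dimensions")

    result: Image = []
    for row_l, row_r in zip(left, right):
        # Per pixel: keep the left view's red, the right view's green and blue.
        result.append([(pl[0], pr[1], pr[2]) for pl, pr in zip(row_l, row_r)])
    return result


if __name__ == "__main__":
    left = [[(200, 10, 10), (50, 60, 70)]]
    right = [[(5, 120, 130), (90, 100, 110)]]
    print(make_red_cyan_anaglyph(left, right))
    # [[(200, 120, 130), (50, 100, 110)]]
```

Copying whole channels keeps some of the original color but can cause visible ghosting or eye strain on strongly saturated reds and cyans. Other anaglyph variants (for example gray or half-color anaglyphs) trade color fidelity for viewing comfort.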