The document provides an overview of probability theory, random variables, and random processes, including:

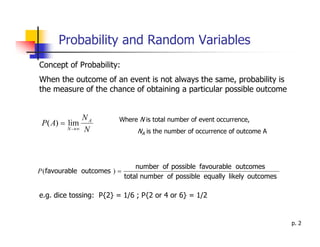

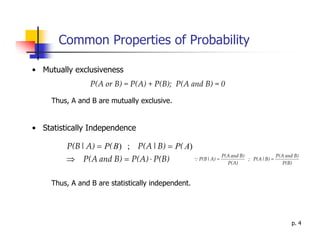

1) It defines probability as a measure of the chance of obtaining a particular outcome of an experiment. Basic properties of probability are also covered, including the case of mutually exclusive events and conditional probability.

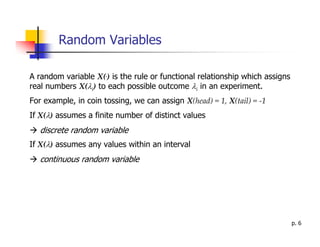

2) Random variables are introduced as rules that assign real numbers to possible outcomes of an experiment. Both discrete and continuous random variables are defined.

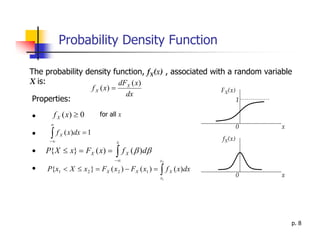

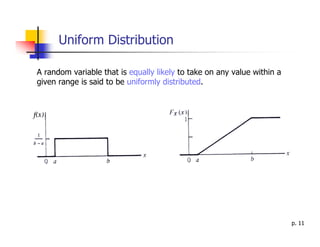

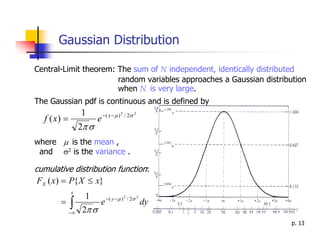

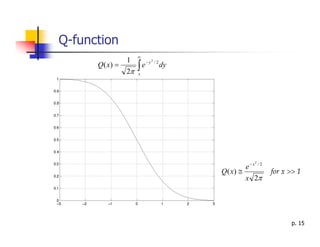

3) Key concepts related to random variables are summarized, including the cumulative distribution function, probability density function, expected value, variance, and common distributions such as the uniform, binomial, and Gaussian distributions.

4) Finally, random processes are defined as sets of random variables indexed by time, with properties like the mean, variance, autocorrelation, and autocovariance.

![Random Processes: Properties, p. 17](https://image.slidesharecdn.com/tele3113wk1wed-110604232830-phpapp01/85/Tele3113-wk1wed-17-320.jpg)

Random Processes: Properties

Consider a random process $X(t)$, and let $X(t_k)$ denote the random variable obtained by observing the process $X(t)$ at time $t_k$.

Mean: $m_X(t_k) = E\{X(t_k)\}$

Variance: $\sigma_X^2(t_k) = E\{X^2(t_k)\} - [m_X(t_k)]^2$

Autocorrelation: $R_X(t_k, t_j) = E\{X(t_k)\,X(t_j)\}$ for any $t_k$ and $t_j$

Autocovariance: $C_X(t_k, t_j) = E\{[X(t_k) - m_X(t_k)]\,[X(t_j) - m_X(t_j)]\}$
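The four properties on this slide can be estimated numerically by averaging over many realizations of a process. The sketch below is illustrative only and is not taken from the slides: it assumes a random-phase sinusoid $X(t) = A\cos(2\pi f t + \Theta)$ with $\Theta$ uniform on $[0, 2\pi)$ as the example process, and the names `sample_process` and `ensemble_stats` are hypothetical helpers introduced here.

```python
import math
import random


def sample_process(times, amplitude=1.0, freq=1.0, rng=random):
    """Draw one realization of X(t) = A*cos(2*pi*f*t + Theta).

    Theta is a single random phase, uniform on [0, 2*pi), shared by all
    time instants of this realization (an assumed example process).
    """
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return [amplitude * math.cos(2.0 * math.pi * freq * t + theta) for t in times]


def ensemble_stats(realizations, k, j):
    """Estimate the slide's quantities at time indices k and j.

    Expectations E{.} are replaced by averages over the realizations.
    Returns (mean at t_k, variance at t_k, autocorrelation, autocovariance).
    """
    n = len(realizations)
    xk = [r[k] for r in realizations]
    xj = [r[j] for r in realizations]
    mean_k = sum(xk) / n
    mean_j = sum(xj) / n
    # Variance: E{X^2(t_k)} - [m_X(t_k)]^2
    var_k = sum(x * x for x in xk) / n - mean_k ** 2
    # Autocorrelation: E{X(t_k) X(t_j)}
    autocorr = sum(a * b for a, b in zip(xk, xj)) / n
    # Autocovariance: E{[X(t_k) - m_X(t_k)][X(t_j) - m_X(t_j)]}
    autocov = sum((a - mean_k) * (b - mean_j) for a, b in zip(xk, xj)) / n
    return mean_k, var_k, autocorr, autocov


rng = random.Random(0)  # fixed seed so the run is repeatable
times = [0.0, 0.1, 0.2, 0.3]
realizations = [sample_process(times, rng=rng) for _ in range(20000)]
mean_k, var_k, autocorr, autocov = ensemble_stats(realizations, k=1, j=3)
print(mean_k, var_k, autocorr, autocov)
```

For this assumed process the theoretical values are a mean of 0, a variance of $A^2/2$, and an autocorrelation of $(A^2/2)\cos(2\pi f (t_k - t_j))$, so the estimates should land close to those values. Because the mean is zero, the autocovariance and autocorrelation estimates should nearly coincide.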