This document provides an overview of correlation and regression analysis concepts including:

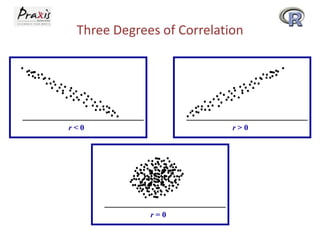

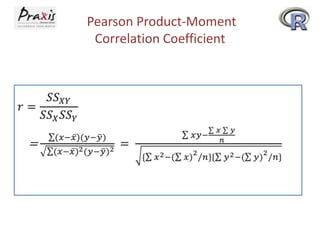

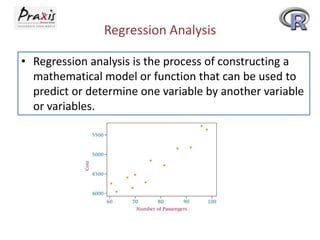

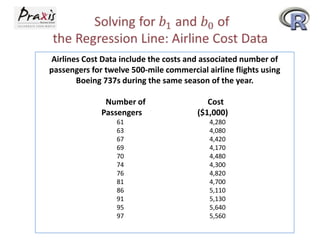

- Correlation measures the strength and direction of the linear relationship between two variables, while regression analysis models that relationship so that one variable can be predicted from another.
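
As an illustration of how correlation is quantified, here is a minimal sketch of the Pearson correlation coefficient in plain Python. The function name `pearson_r` and the sample data are assumptions made for this example and do not come from the slides.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations about the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)

# Illustrative data only (hypothetical, not taken from the document).
hours = [1, 2, 3, 4, 5]
scores = [2, 4, 5, 4, 5]
r = pearson_r(hours, scores)
print(round(r, 4))
```

The result always lies between -1 and +1. Values near either extreme indicate a strong linear relationship, and values near 0 indicate a weak one.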

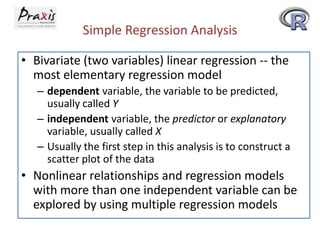

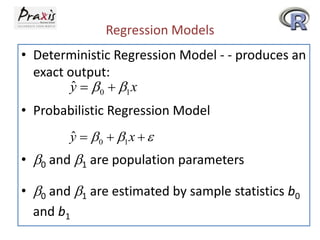

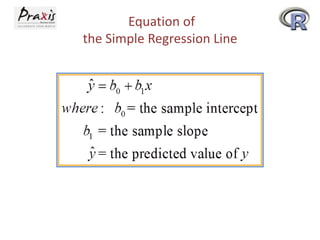

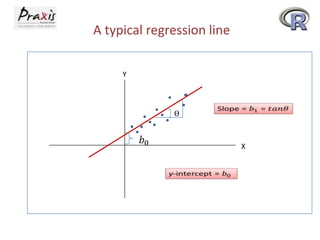

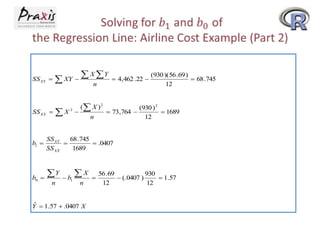

- Simple linear regression predicts a dependent variable Y from a single independent variable X by modeling their relationship as a straight line.
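
In the usual textbook notation (assumed here, since the slides' own symbols are not shown in this summary), the population model and the fitted line can be written as:

$$
Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i,
\qquad
\hat{Y}_i = b_0 + b_1 X_i
$$

Here $\beta_0$ and $\beta_1$ are the unknown intercept and slope, $\varepsilon_i$ is the random error term, and $b_0$ and $b_1$ are the sample estimates used to compute the predicted value $\hat{Y}_i$.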

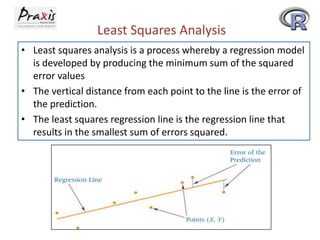

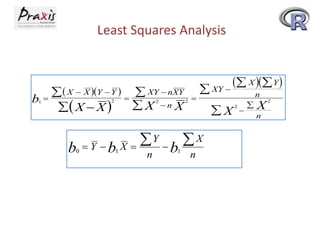

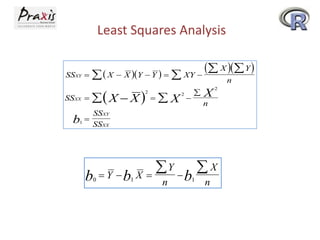

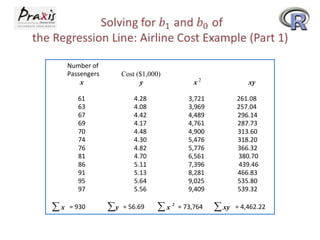

- The least squares method fits the regression line by minimizing the sum of squared errors between the observed and predicted Y values.
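
The least squares criterion has a closed-form solution for a single predictor: the slope is the sum of cross-products divided by the sum of squared deviations of X, and the intercept makes the line pass through the point of means. The sketch below assumes that standard formula. The function name `fit_line` and the data are hypothetical.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least squares line for ys on xs."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("slope is undefined when all X values are equal")
    slope = sxy / sxx
    # The fitted line always passes through (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Illustrative data only (hypothetical, not taken from the document).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b0, b1 = fit_line(x, y)
print(f"Y-hat = {b0:.2f} + {b1:.2f} * X")
```

For this sample data the fitted line is approximately Y-hat = 2.20 + 0.60 X.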

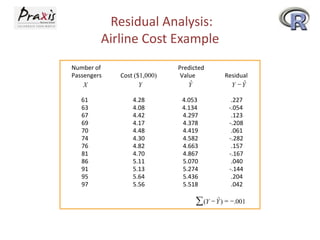

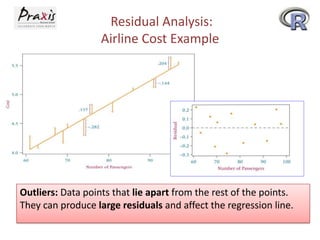

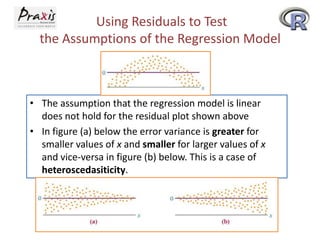

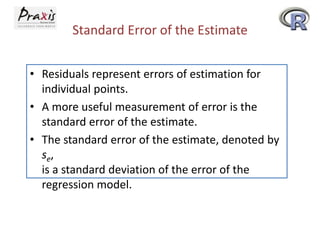

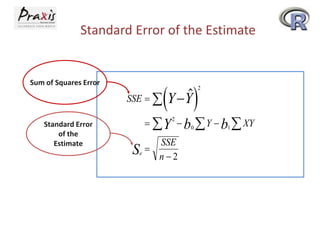

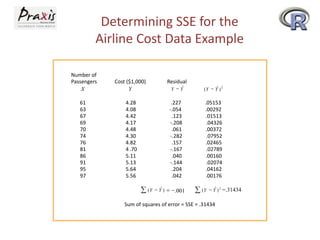

- Residual analysis and other statistical measures, such as the standard error of the estimate, can be used to evaluate how well the regression model fits the data.
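
To make these fit measures concrete, the following sketch computes residuals and the standard error of the estimate for a fitted line. It assumes the common definition sqrt(SSE / (n - 2)) for simple linear regression. The function names, the data, and the coefficients are illustrative assumptions. The coefficients used are the least squares values for this particular sample.

```python
import math

def residuals(xs, ys, intercept, slope):
    """Observed minus predicted Y for each data point."""
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

def standard_error_of_estimate(resids):
    """sqrt(SSE / (n - 2)); two degrees of freedom are used by the line."""
    n = len(resids)
    if n <= 2:
        raise ValueError("need more than 2 points to estimate the standard error")
    sse = sum(e ** 2 for e in resids)
    return math.sqrt(sse / (n - 2))

# Illustrative data only (hypothetical, not taken from the document).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b0, b1 = 2.2, 0.6  # least squares intercept and slope for this sample

e = residuals(x, y, b0, b1)
se = standard_error_of_estimate(e)
print([round(v, 2) for v in e])
print(round(se, 4))
```

Residuals from a least squares line with an intercept sum to zero, so the useful diagnostics are their spread and any pattern they show when plotted against X. A funnel shape or a curve in that plot suggests the linear model's assumptions may not hold.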