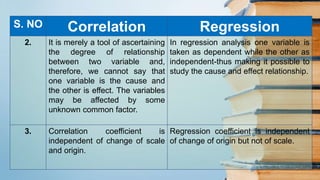

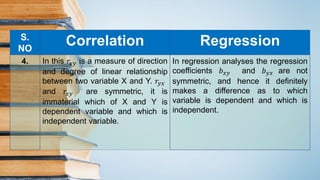

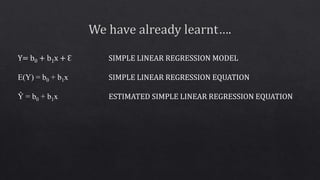

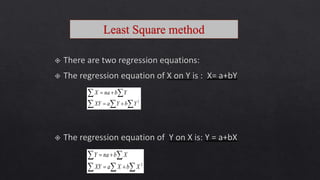

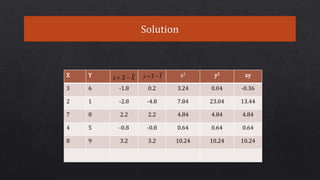

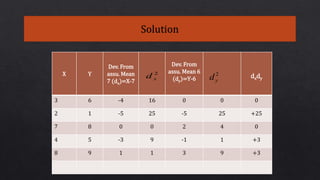

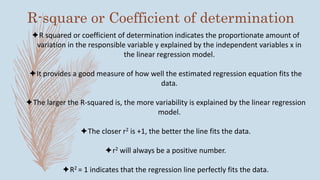

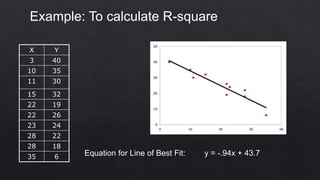

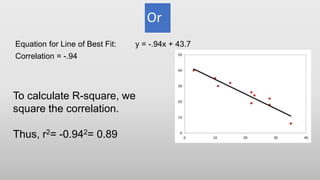

This document defines and explains several types of regression analysis: linear, logistic, polynomial, stepwise, ridge, and lasso regression. It discusses the key differences between correlation and regression, and covers the least squares method, R-squared (the coefficient of determination), adjusted R-squared, and the limitations and applications of regression analysis.
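As a rough illustration of three of the topics named above (the least squares method, R-squared, and adjusted R-squared), here is a minimal sketch for simple linear regression with one predictor. It is not taken from the slides: the function names `fit_line`, `r_squared`, and `adjusted_r_squared` are hypothetical, and the sample data is made up purely for demonstration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and cross-deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(xs, ys, slope, intercept):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R-squared for n observations and p predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


if __name__ == "__main__":
    # Made-up illustrative data, not from the source document.
    xs = [1, 2, 3, 4]
    ys = [1, 3, 2, 4]
    slope, intercept = fit_line(xs, ys)
    r2 = r_squared(xs, ys, slope, intercept)
    print(slope, intercept, r2, adjusted_r_squared(r2, len(xs), 1))
```

Adjusted R-squared penalizes the number of predictors, so for the same fit it is never larger than plain R-squared. That makes it the more cautious measure when comparing models with different numbers of predictors.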