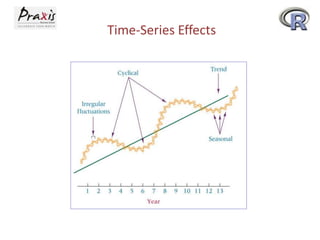

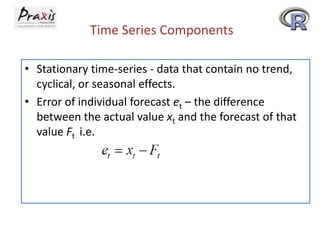

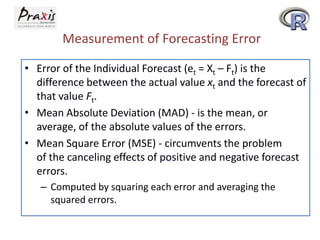

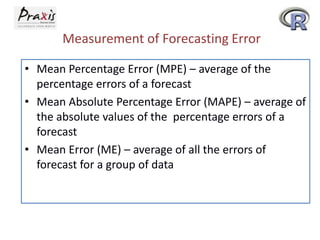

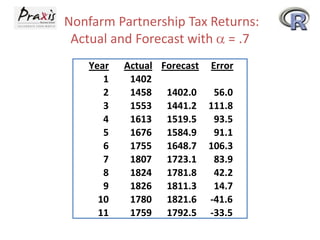

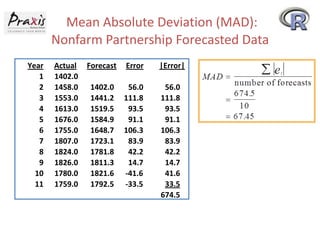

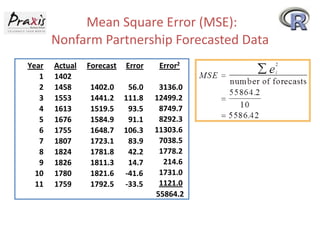

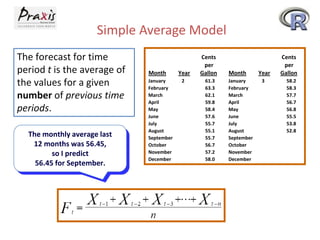

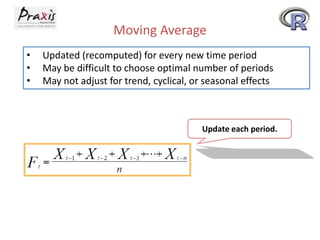

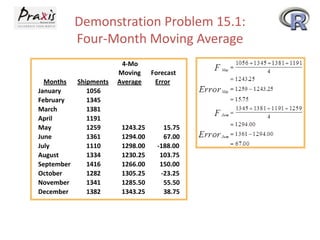

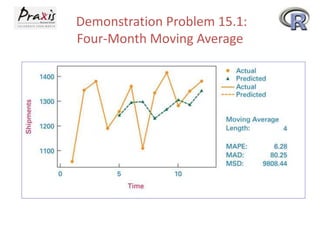

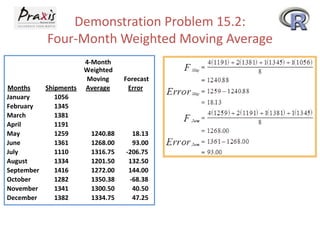

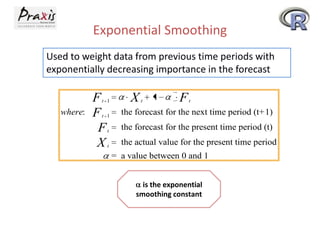

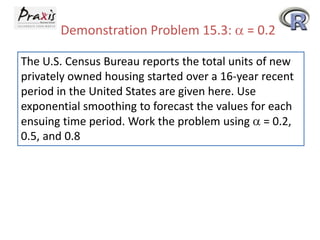

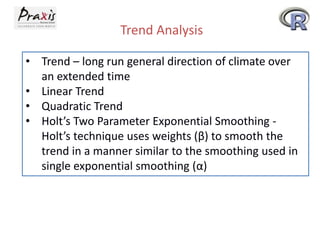

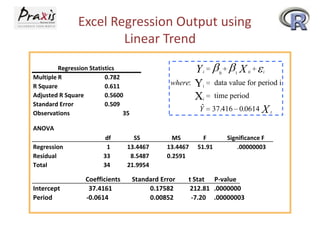

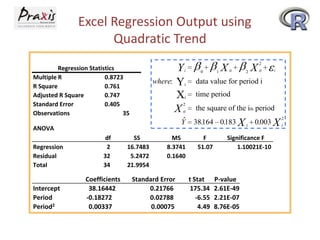

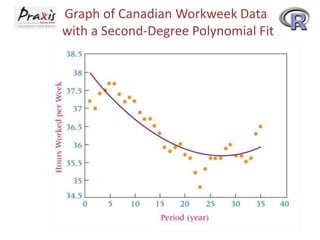

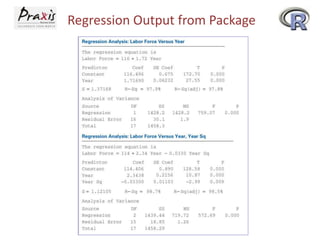

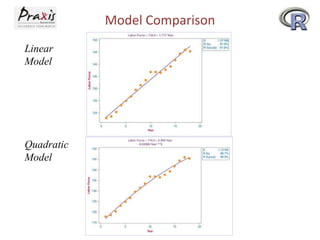

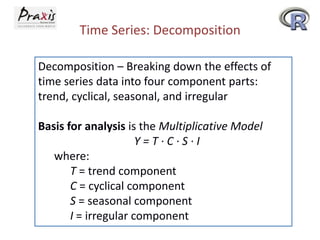

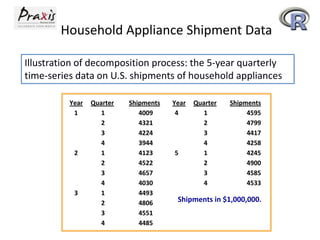

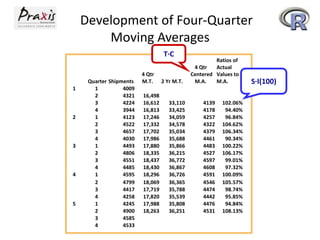

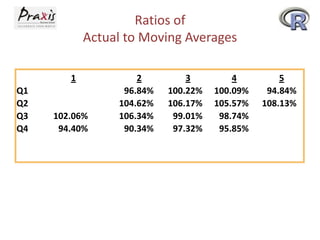

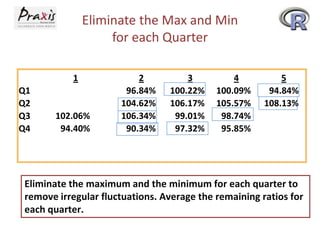

This document provides an overview of time series forecasting techniques. It discusses the components of time series data: trends, cycles, seasonality, and irregular fluctuations. It also distinguishes stationary from non-stationary time series. The forecasting techniques covered include naive methods, smoothing techniques such as moving averages and exponential smoothing, and decomposition methods. Regression models for trend analysis and measures of forecast accuracy are also discussed.
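To make the named techniques concrete, here is a minimal plain-Python sketch of several of them. It does not reproduce the slides. The function names (`naive_forecast`, `moving_average_forecast`, `exponential_smoothing`, `linear_trend`, `accuracy`) and the `sales` series are hypothetical and exist only for illustration. The sketch assumes two conventions: exponential smoothing is initialised with the first observation (S_1 = y_1), and accuracy is reported as MAD (mean absolute deviation of the errors), MSE, and MAPE. Decomposition is omitted to keep the example short.

```python
# Illustrative sketches of common forecasting techniques (hypothetical helpers, not from the slides).

def naive_forecast(series):
    """Naive method: next period's forecast equals the last observed value."""
    return series[-1]

def moving_average_forecast(series, window):
    """Simple moving average: forecast is the mean of the last `window` observations."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return sum(series[-window:]) / window

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing: S_t = alpha*y_t + (1 - alpha)*S_{t-1}.
    Assumes S_1 = y_1; the last smoothed value is the one-step-ahead forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [series[0]]
    for y in series[1:]:
        smoothed.append(alpha * y + (1 - alpha) * smoothed[-1])
    return smoothed

def linear_trend(series):
    """Least-squares trend line y = a + b*t with t = 1..n; returns (a, b)."""
    n = len(series)
    t = range(1, n + 1)
    t_mean = (n + 1) / 2
    y_mean = sum(series) / n
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, series))
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    b = sxy / sxx
    a = y_mean - b * t_mean
    return a, b

def accuracy(actual, forecast):
    """Returns (MAD, MSE, MAPE in percent) for paired actual and forecast values."""
    errors = [a - f for a, f in zip(actual, forecast)]
    n = len(errors)
    mad = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mape = 100 * sum(abs(e) / abs(a) for e, a in zip(errors, actual)) / n
    return mad, mse, mape

if __name__ == "__main__":
    sales = [10, 12, 13, 12, 15, 16, 18]  # hypothetical data
    print(naive_forecast(sales))                # 18
    print(moving_average_forecast(sales, 3))    # mean of 15, 16, 18
    print(exponential_smoothing(sales, 0.5)[-1])
    print(linear_trend(sales))                  # (intercept, slope)
    # Evaluate in-sample naive forecasts: each value predicts the next one.
    print(accuracy(sales[1:], sales[:-1]))
```

A smaller `alpha` or a longer moving-average `window` gives a smoother but slower-reacting forecast. The trend line's slope `b` estimates the average change per period.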