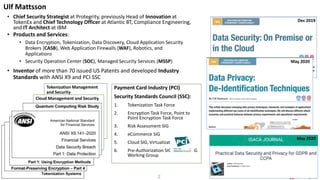

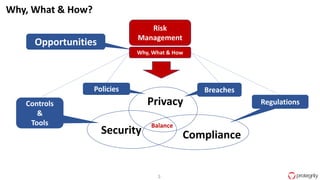

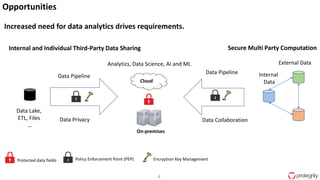

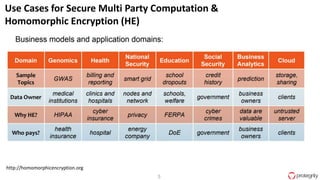

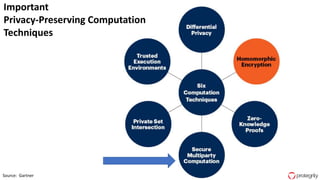

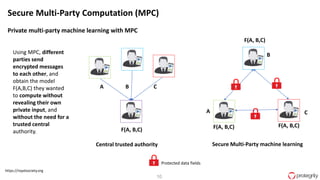

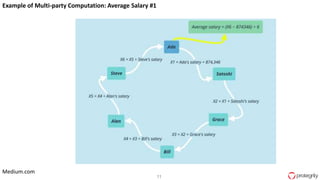

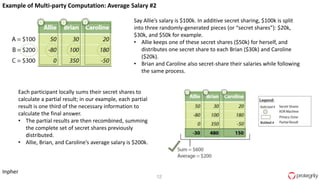

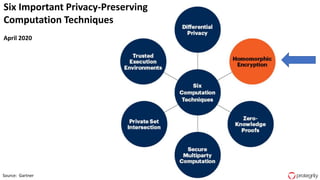

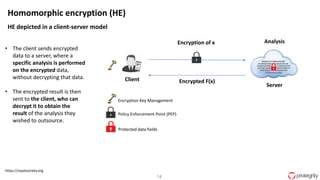

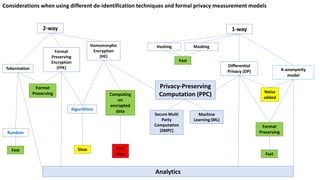

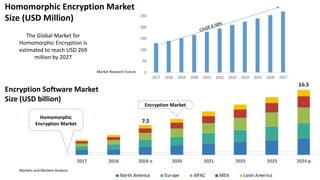

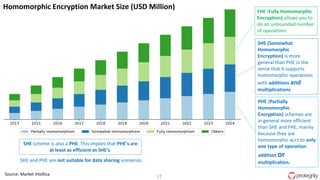

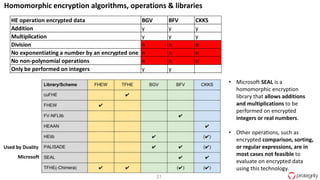

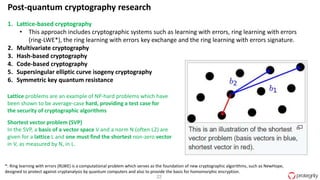

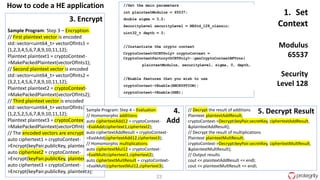

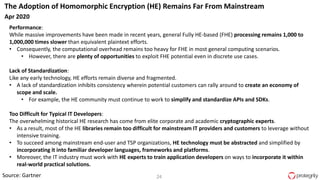

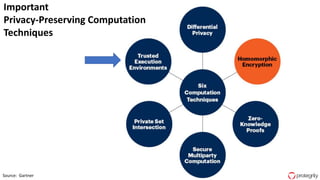

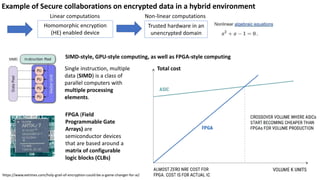

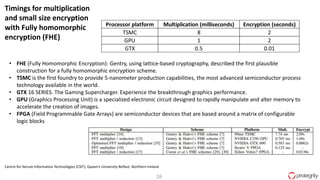

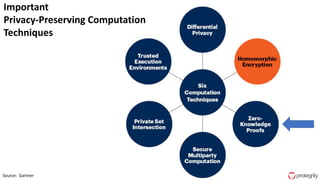

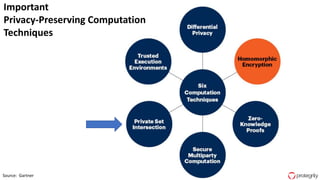

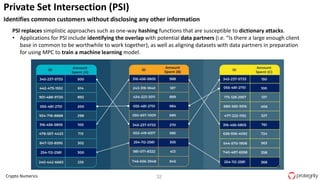

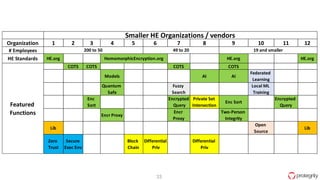

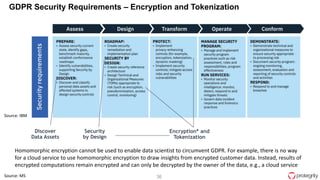

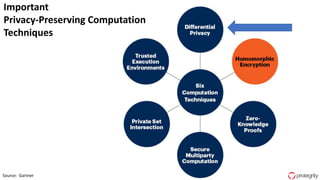

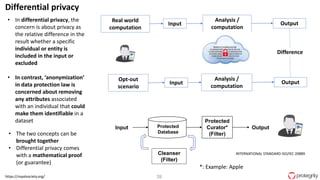

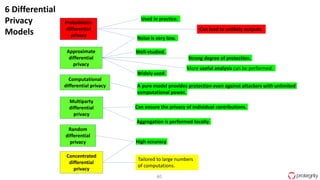

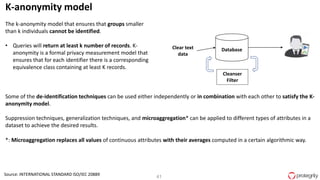

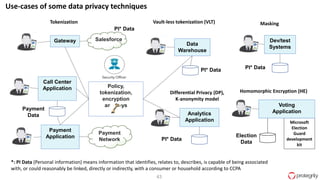

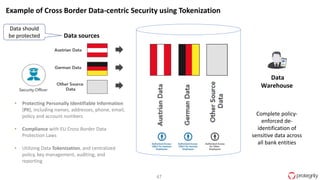

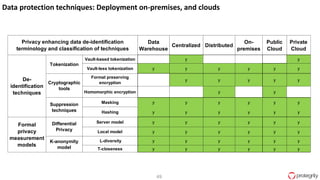

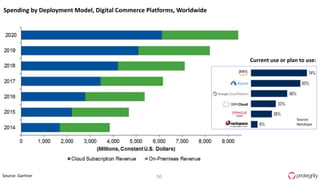

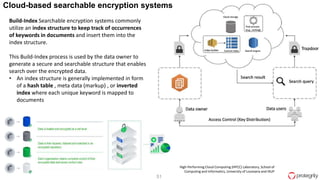

Ulf Mattsson is the Chief Security Strategist at Protegrity and has extensive experience in data encryption, tokenization, data privacy tools, and security compliance. The document discusses several use cases for secure multi-party computation and homomorphic encryption: sharing financial data between institutions while preserving privacy, using retail transaction data for secondary purposes such as advertising while protecting privacy, and enabling internal data sharing within a bank for analytics while complying with regulations. It also gives overviews of privacy-preserving computation techniques, including homomorphic encryption, secure multi-party computation, and differential privacy, and describes the growth of the homomorphic encryption market.