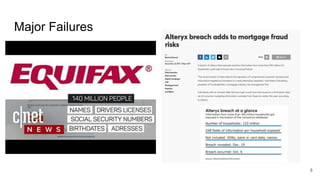

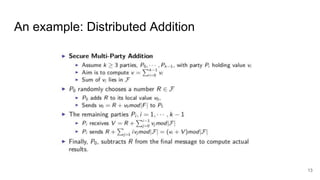

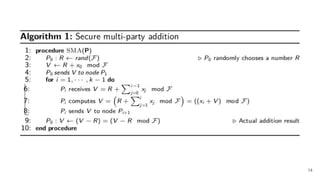

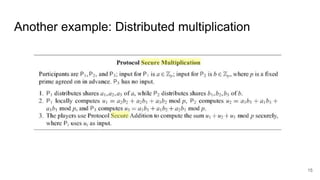

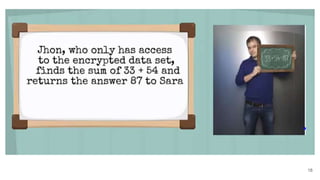

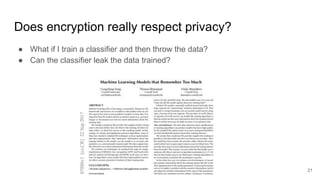

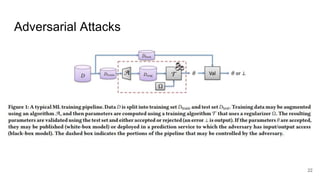

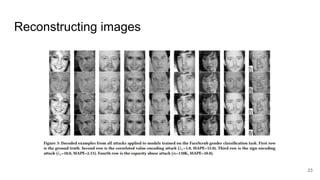

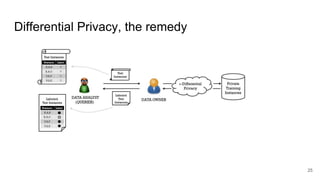

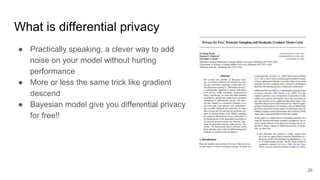

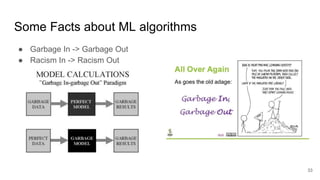

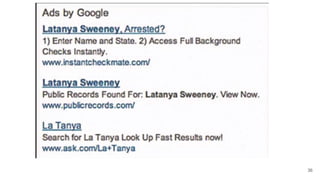

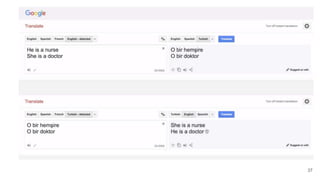

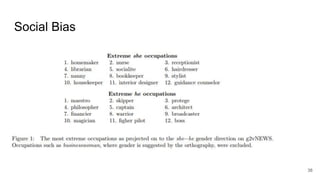

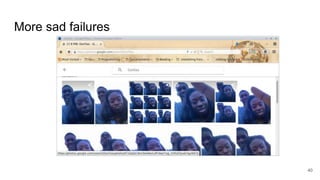

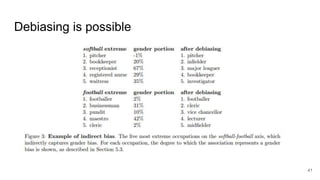

This document discusses privacy, security, and ethics in data science. It covers anonymizing data and computations, securing personal data, and the unethical surprises that can arise in data science work. It also describes how to respect privacy by storing data securely, adding layers of protection such as encryption, and using techniques such as distributed computing and differential privacy to better protect sensitive information. The document cautions that biases in data can propagate into models, and it highlights the importance of addressing social bias, redacting sensitive information, and debiasing models to support ethical practice in the field.