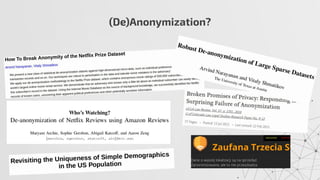

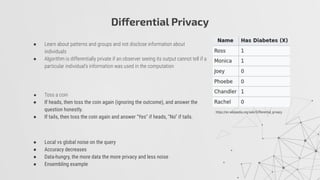

This document surveys privacy-preserving machine learning techniques, motivated by the need to protect sensitive data such as medical records under regulations like the GDPR. It covers federated learning, differential privacy, and homomorphic encryption, which protect data while still allowing useful insights to be extracted from it. Key challenges include data correlation, bias, and security vulnerabilities, all of which call for improved frameworks and algorithms.
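As a concrete illustration of one of the techniques named above, the following is a minimal sketch of differential privacy using the standard Laplace mechanism on a counting query. It is not taken from the document: the function names (`laplace_noise`, `private_count`), the `ages` data, and the choice of `epsilon` are all hypothetical, chosen only to show how calibrated noise hides any single record's contribution.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate` (hypothetical helper).

    A counting query has sensitivity 1: adding or removing one record changes
    the true count by at most 1, so Laplace noise with scale 1/epsilon is the
    usual calibration for epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical example data: patient ages in a small medical dataset.
ages = [34, 51, 67, 45, 72, 29, 80, 63]

# Noisy answer to "how many patients are 60 or older?"
noisy = private_count(ages, lambda a: a >= 60, epsilon=0.5, rng=random.Random(0))
print(f"noisy count: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives a more accurate but less private answer. In practice the total privacy budget across repeated queries also has to be tracked, which this sketch does not attempt.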