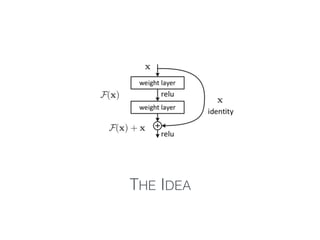

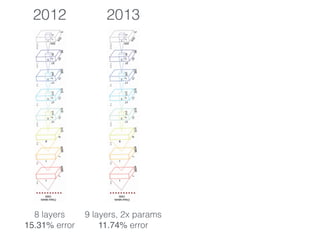

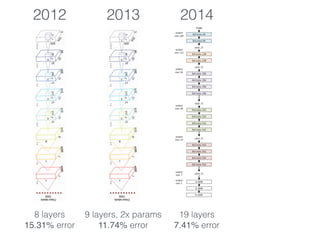

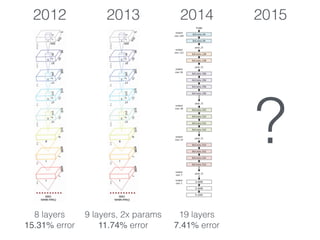

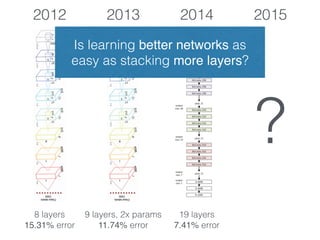

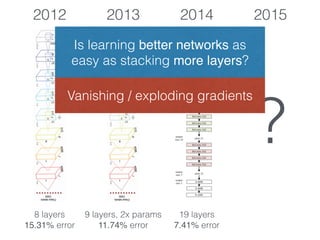

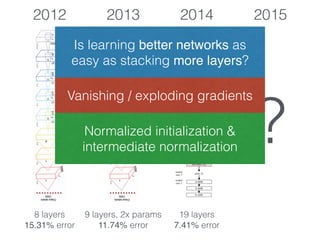

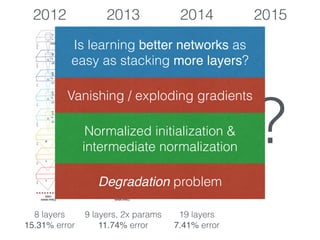

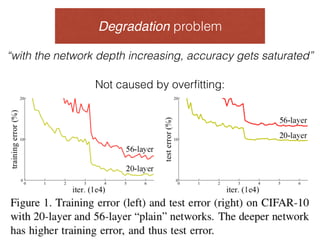

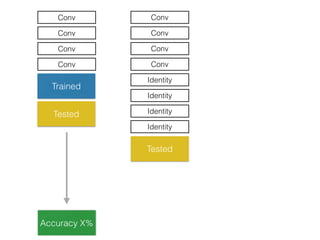

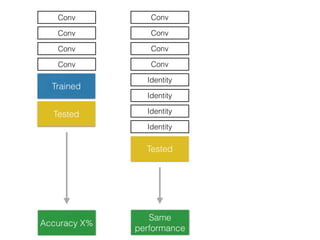

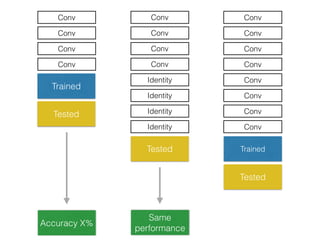

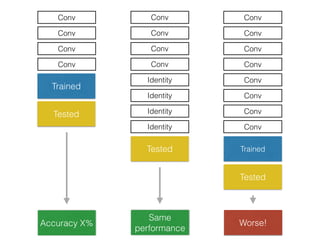

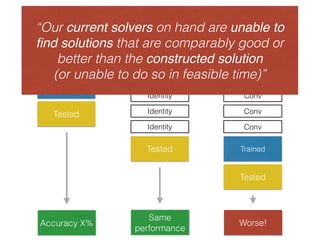

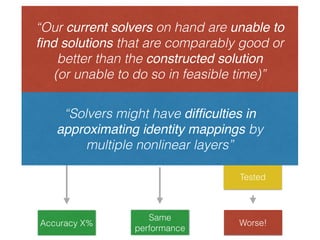

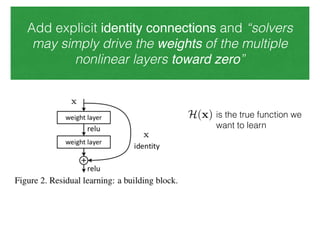

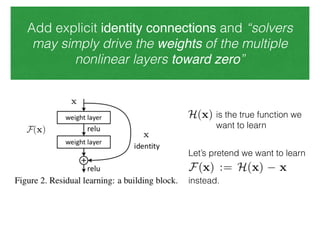

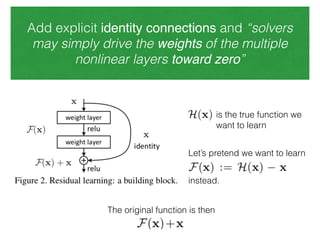

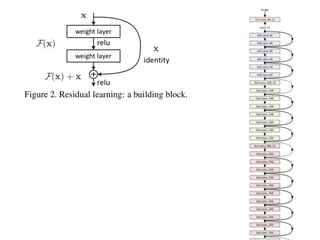

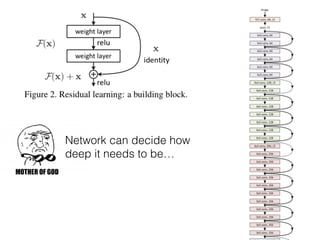

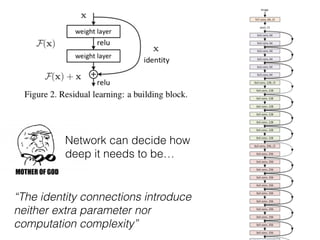

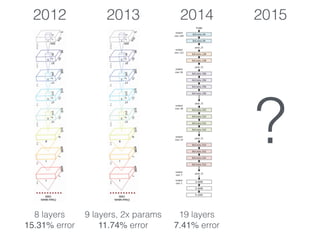

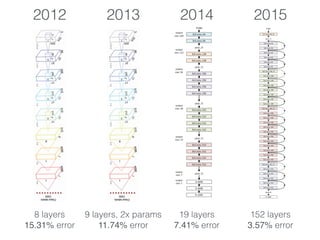

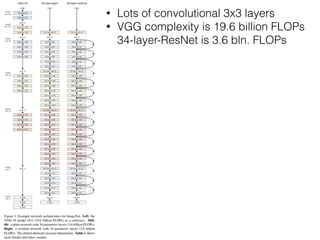

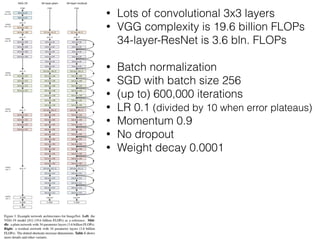

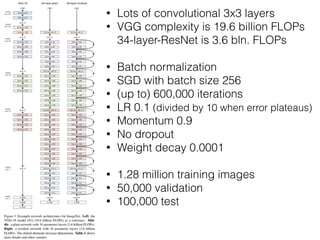

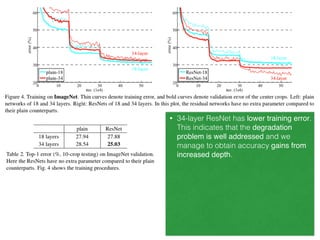

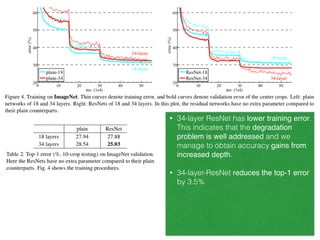

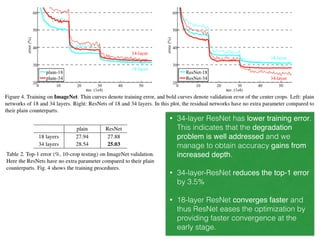

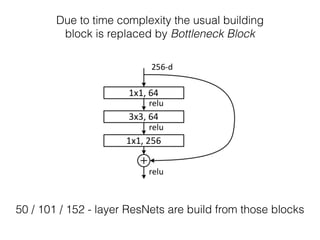

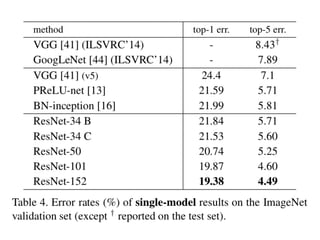

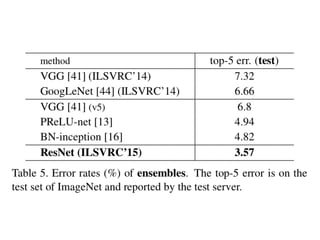

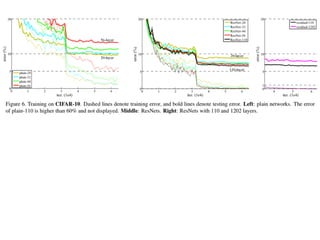

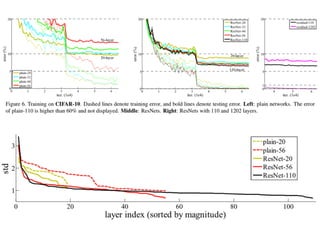

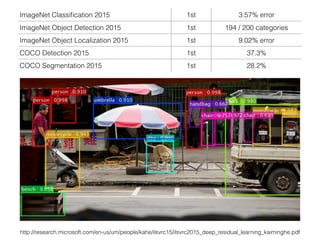

The document discusses deep residual learning for image recognition, tracing the evolution of neural network architectures and their performance on benchmarks such as ILSVRC and MS COCO. It highlights two obstacles to training deeper networks: vanishing gradients and the degradation problem, in which adding layers to a plain network raises training error instead of lowering it. Identity shortcut connections are proposed to mitigate these issues. The findings indicate that ResNet architectures, particularly the deeper variants, can achieve lower error rates and better training accuracy by addressing the degradation problem.
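To make the idea of an identity connection concrete, here is a minimal sketch in plain Python. It assumes a fully connected residual function F(x) = W2 · relu(W1 · x) with no biases, and it omits the activation usually applied after the addition. The names `residual_block`, `plain_block`, `w1`, and `w2` are illustrative and do not come from the slides. The point it shows is that when the weights are zero, the residual block reduces to the identity mapping, whereas a plain block of the same shape outputs zeros.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, a) for a in v]


def matvec(w: Matrix, v: Vector) -> Vector:
    """Multiply matrix w (given as a list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers without a shortcut: returns F(x)."""
    return matvec(w2, relu(matvec(w1, x)))


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Same two layers plus an identity shortcut: returns F(x) + x."""
    fx = plain_block(x, w1, w2)
    return [f + xi for f, xi in zip(fx, x)]


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    zeros = [[0.0] * 3 for _ in range(3)]
    # With zero weights, F(x) is zero, so the shortcut passes x through unchanged.
    print(residual_block(x, zeros, zeros))
    # The plain block has no shortcut, so the input is lost.
    print(plain_block(x, zeros, zeros))
```

Because the shortcut carries the input forward unchanged, extra layers only need to learn a correction to the identity. This is one way to read the claim that deeper residual networks avoid the degradation seen in plain networks.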