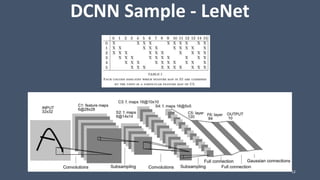

This document outlines Anusua Trivedi's talk on transfer learning and fine-tuning of deep neural networks. The talk compares traditional machine learning with deep learning, then covers deep convolutional neural networks (DCNNs) for image analysis, transfer learning and fine-tuning of DCNNs, and recurrent neural networks (RNNs). It also presents case studies that apply these techniques to diabetic retinopathy prediction and fashion image caption generation.