Deep Learning and Business Models

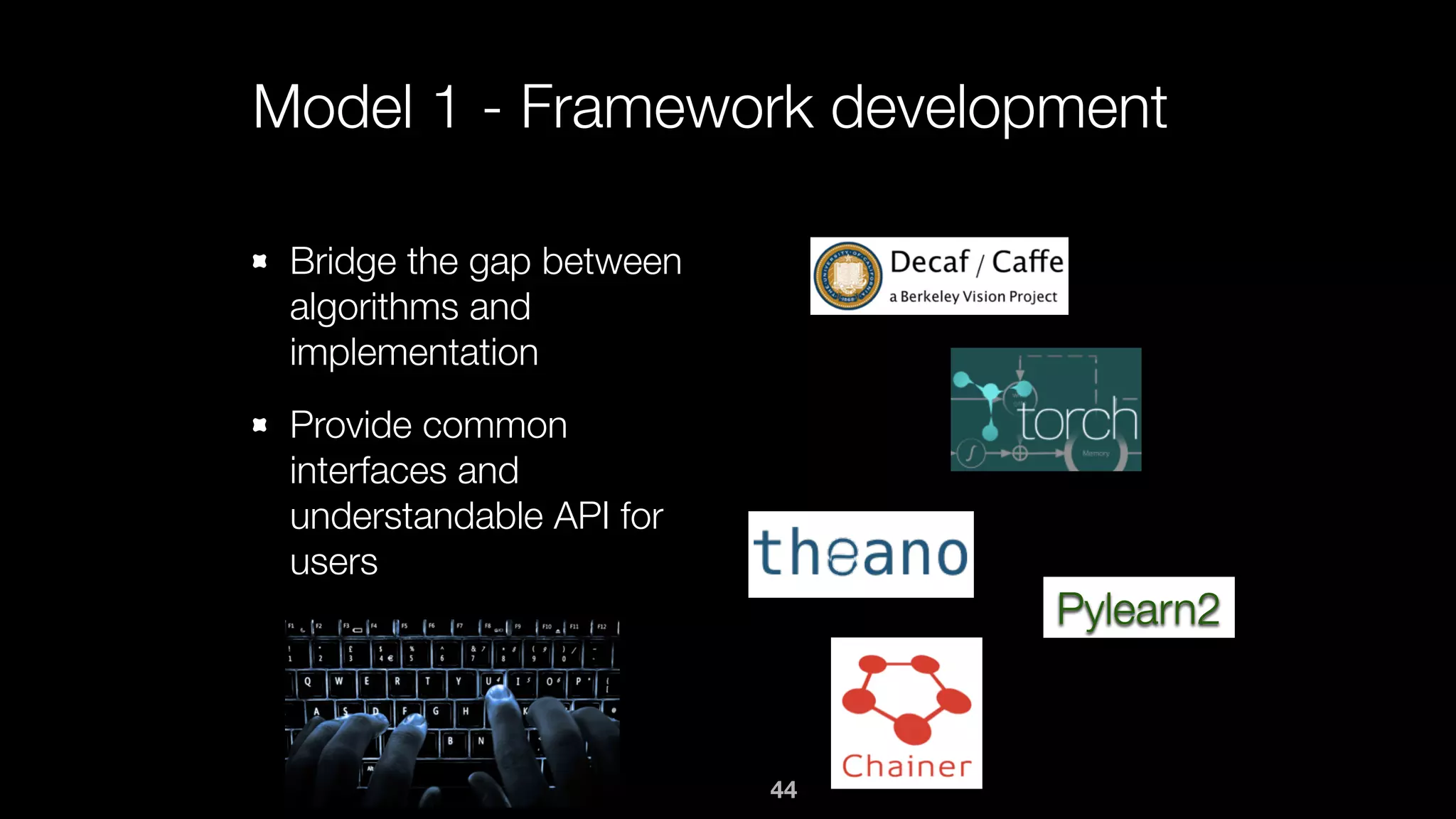

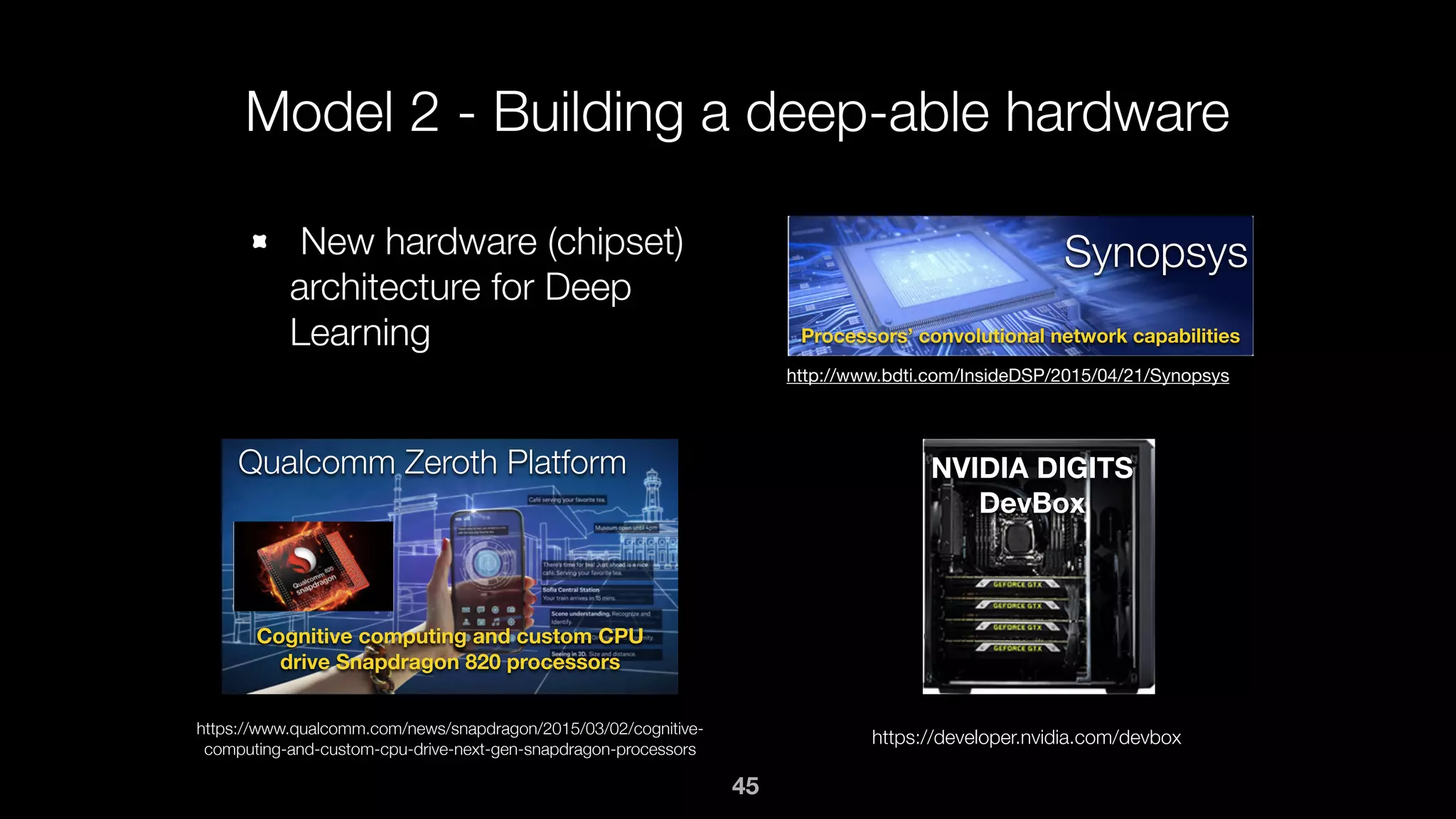

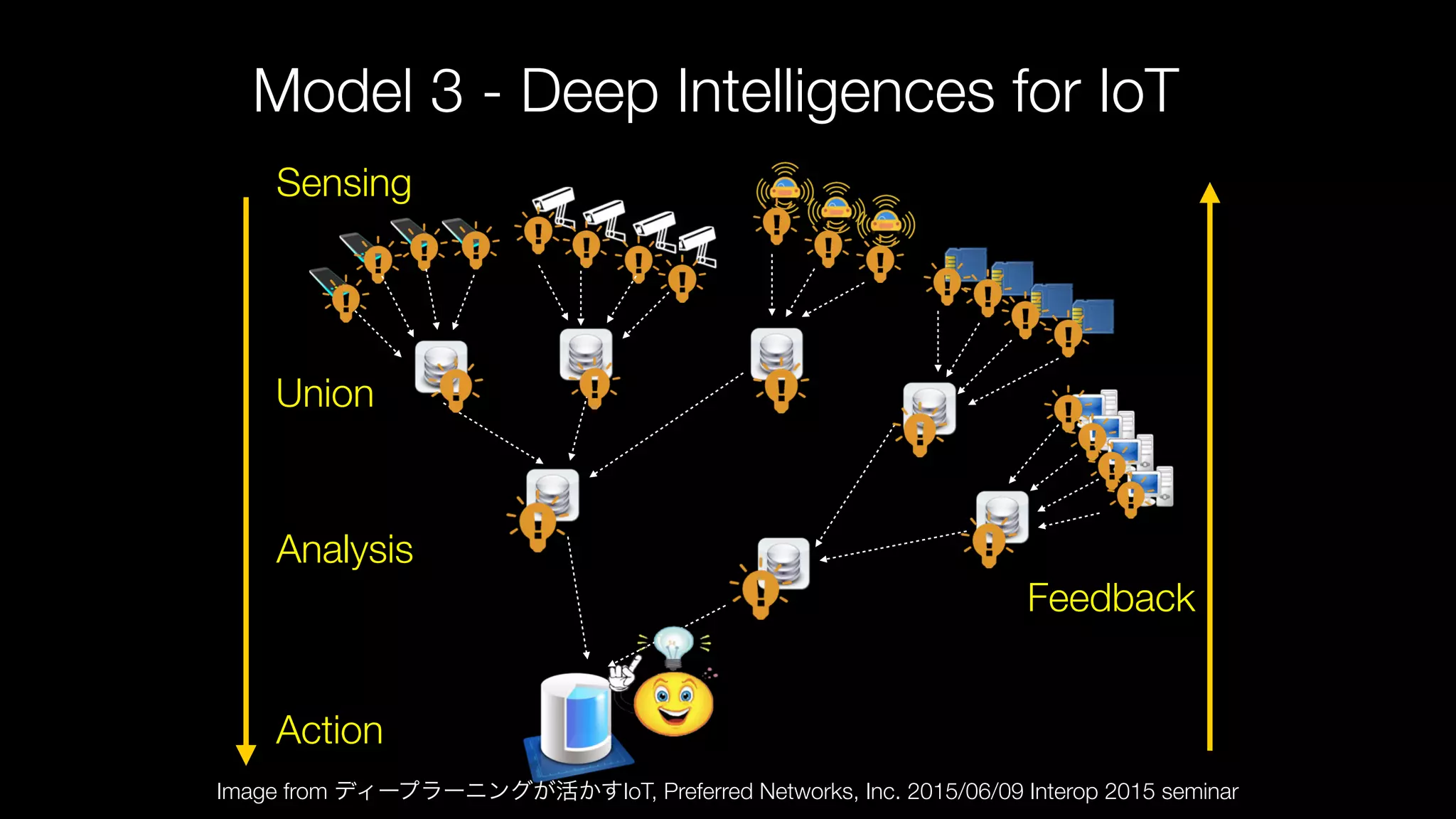

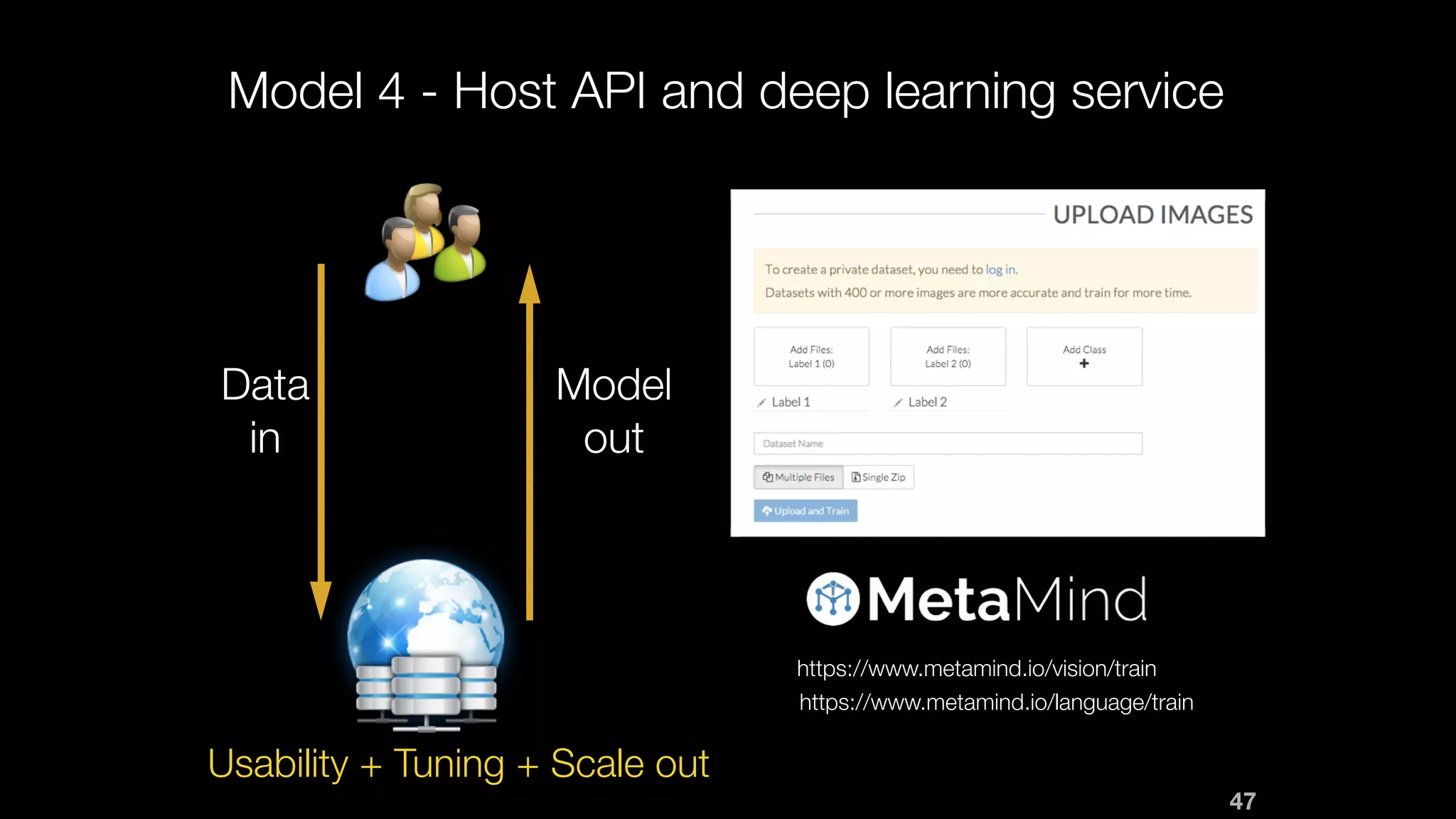

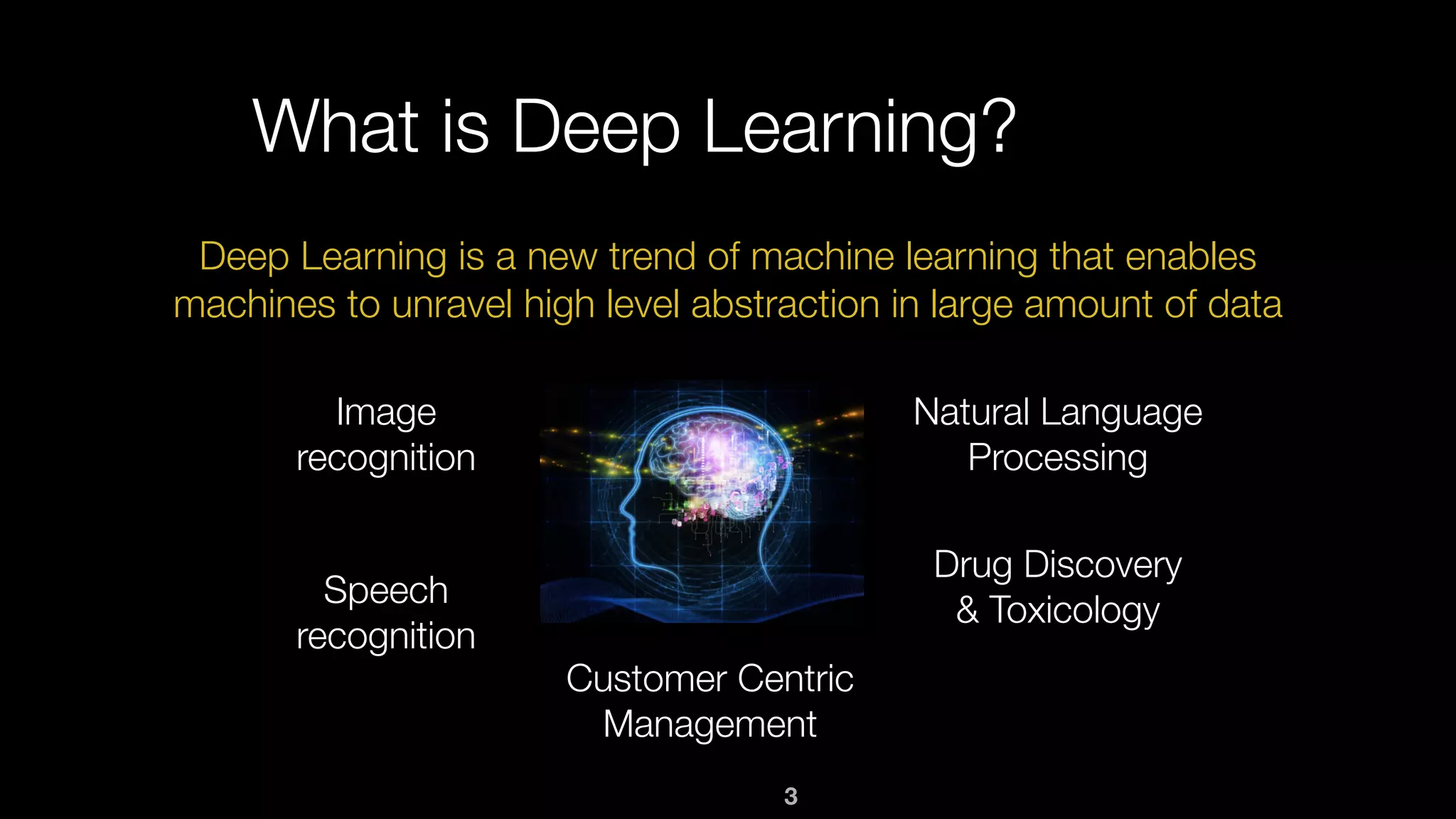

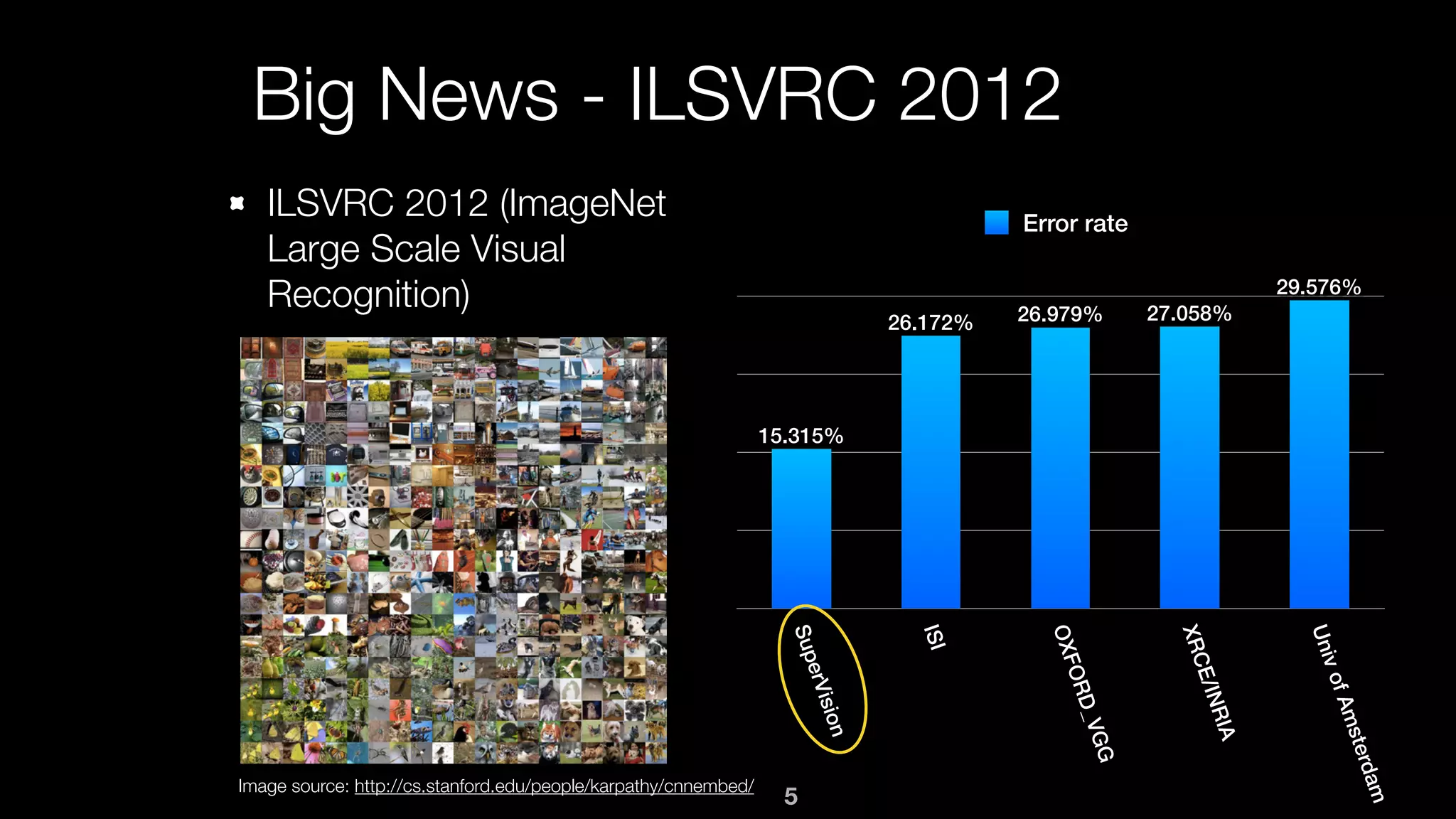

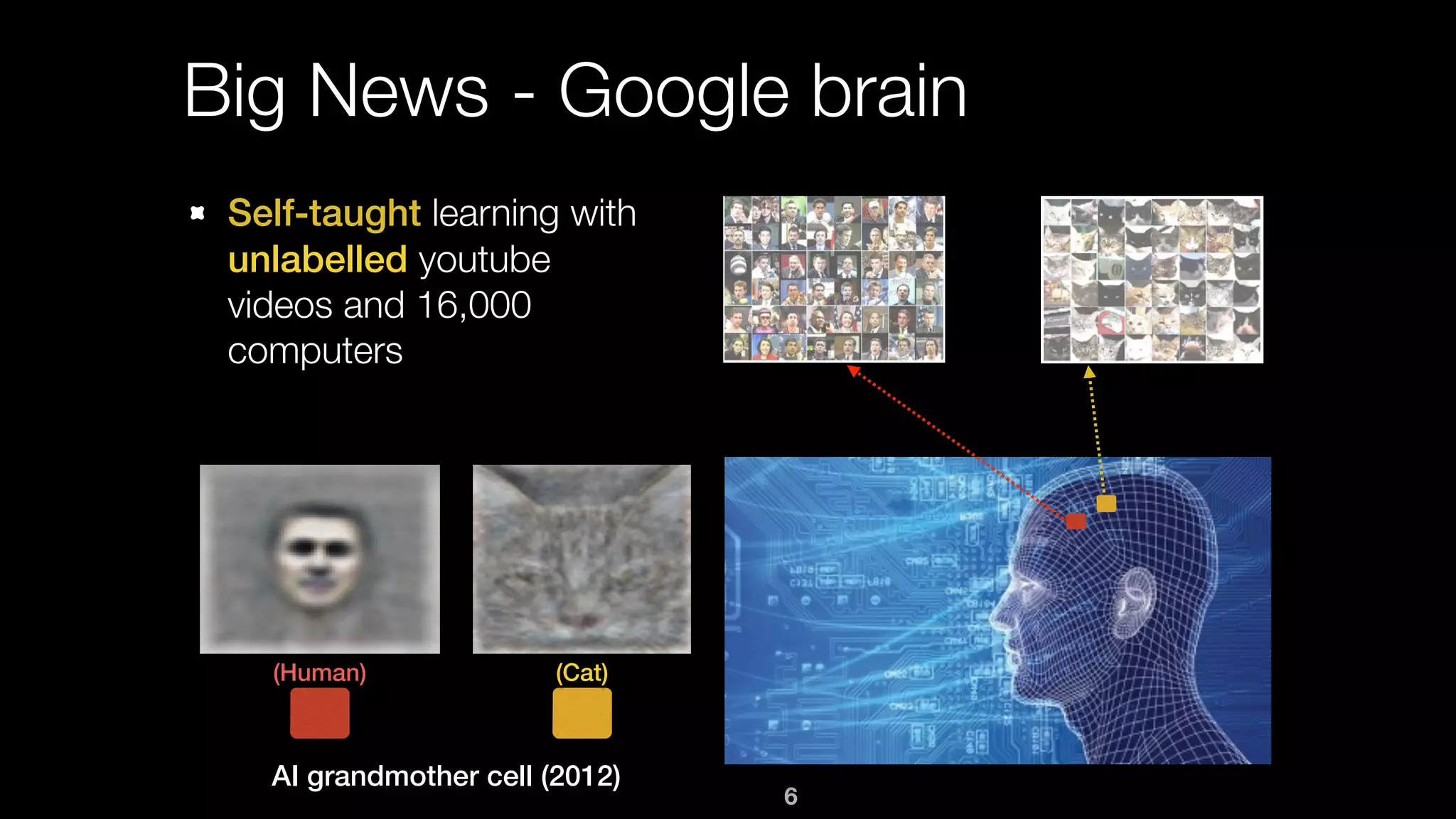

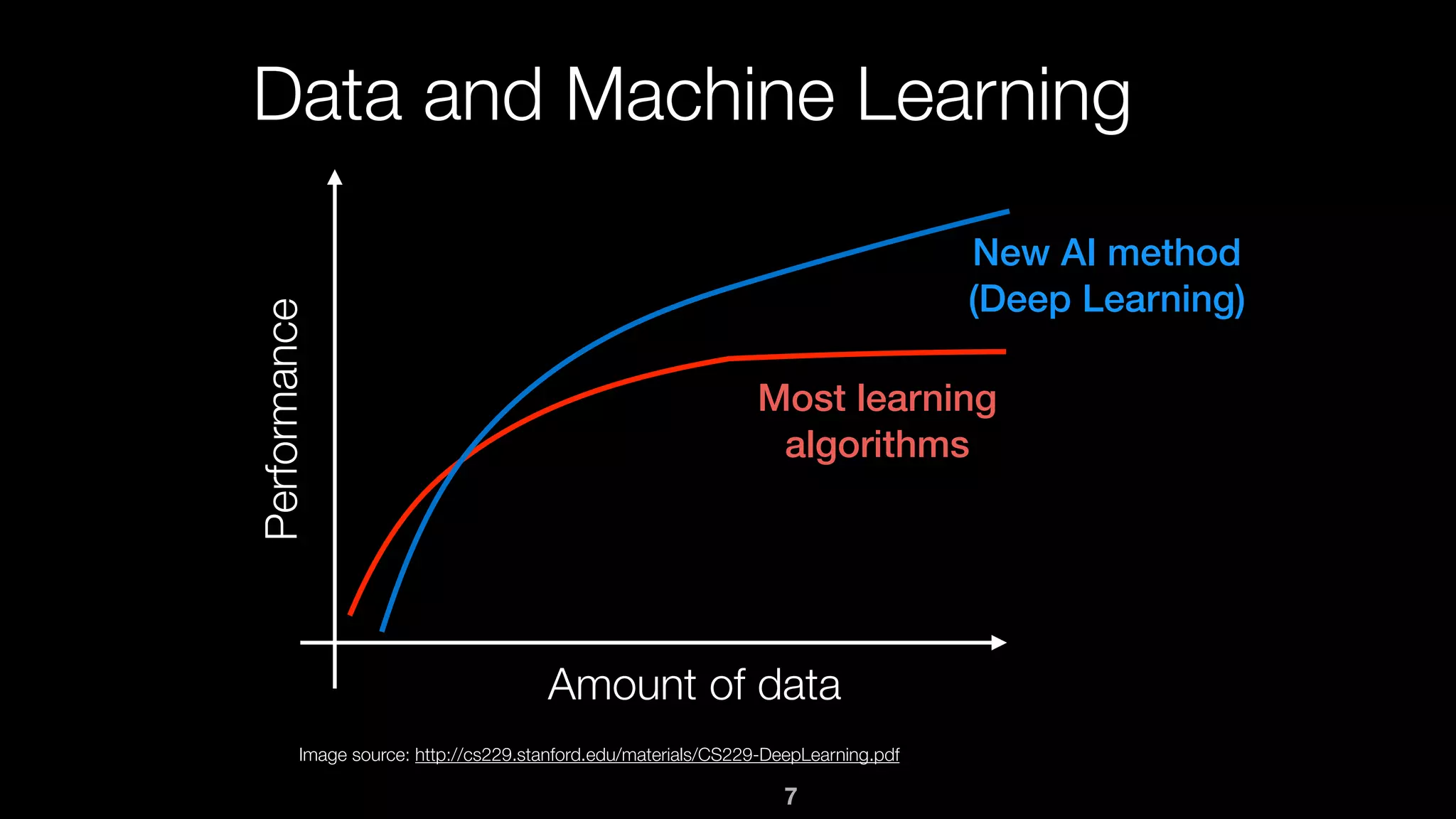

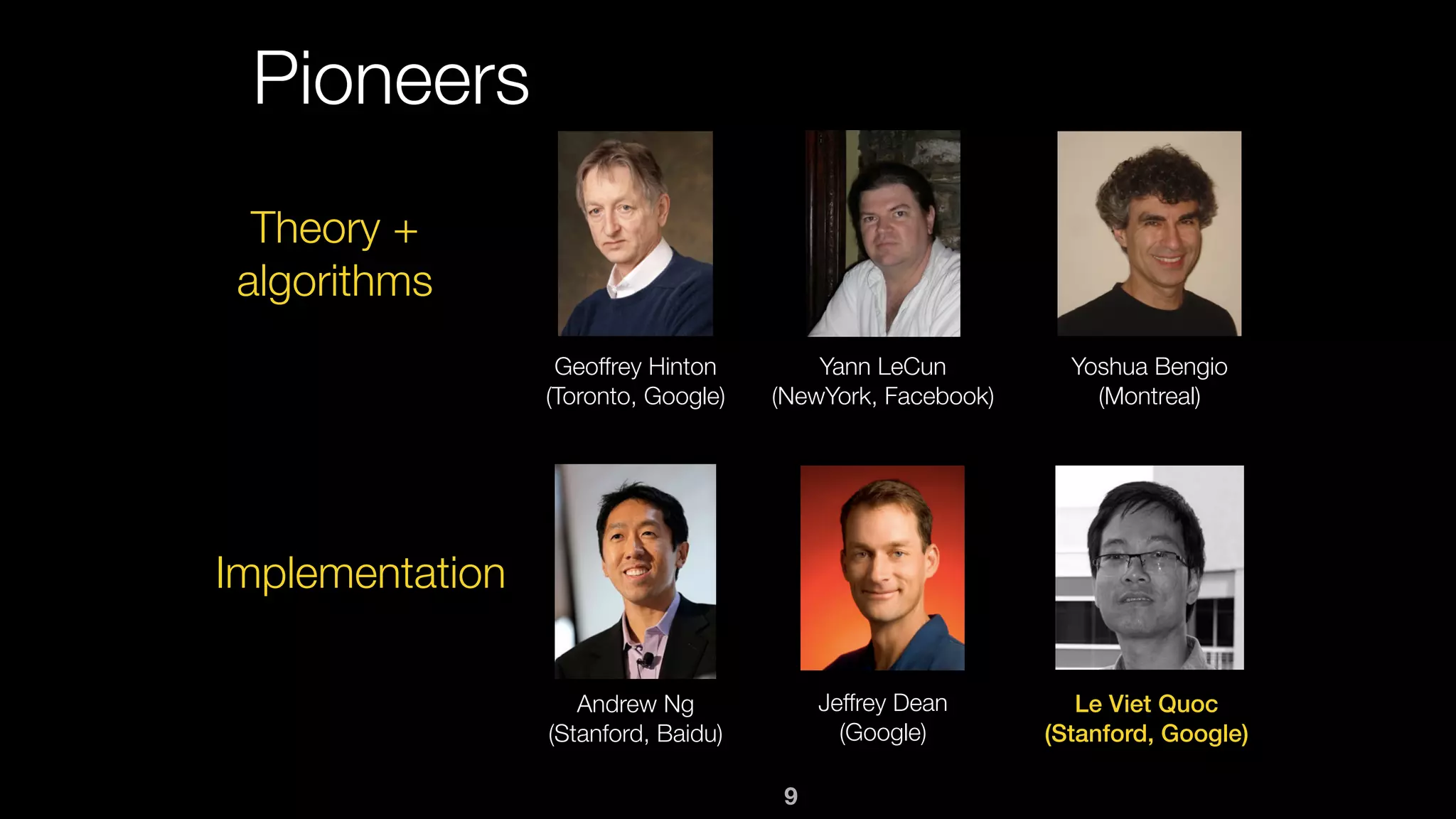

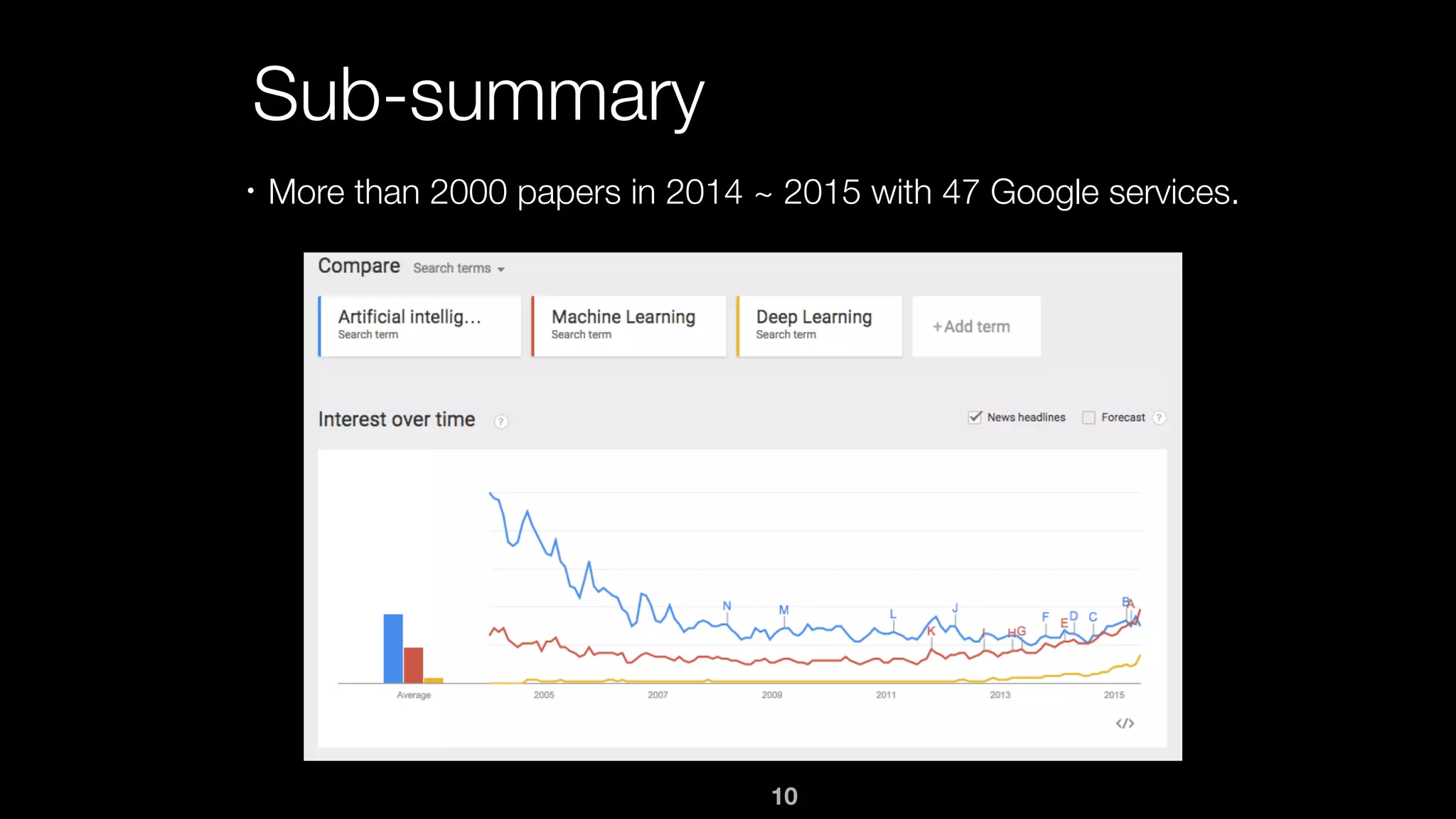

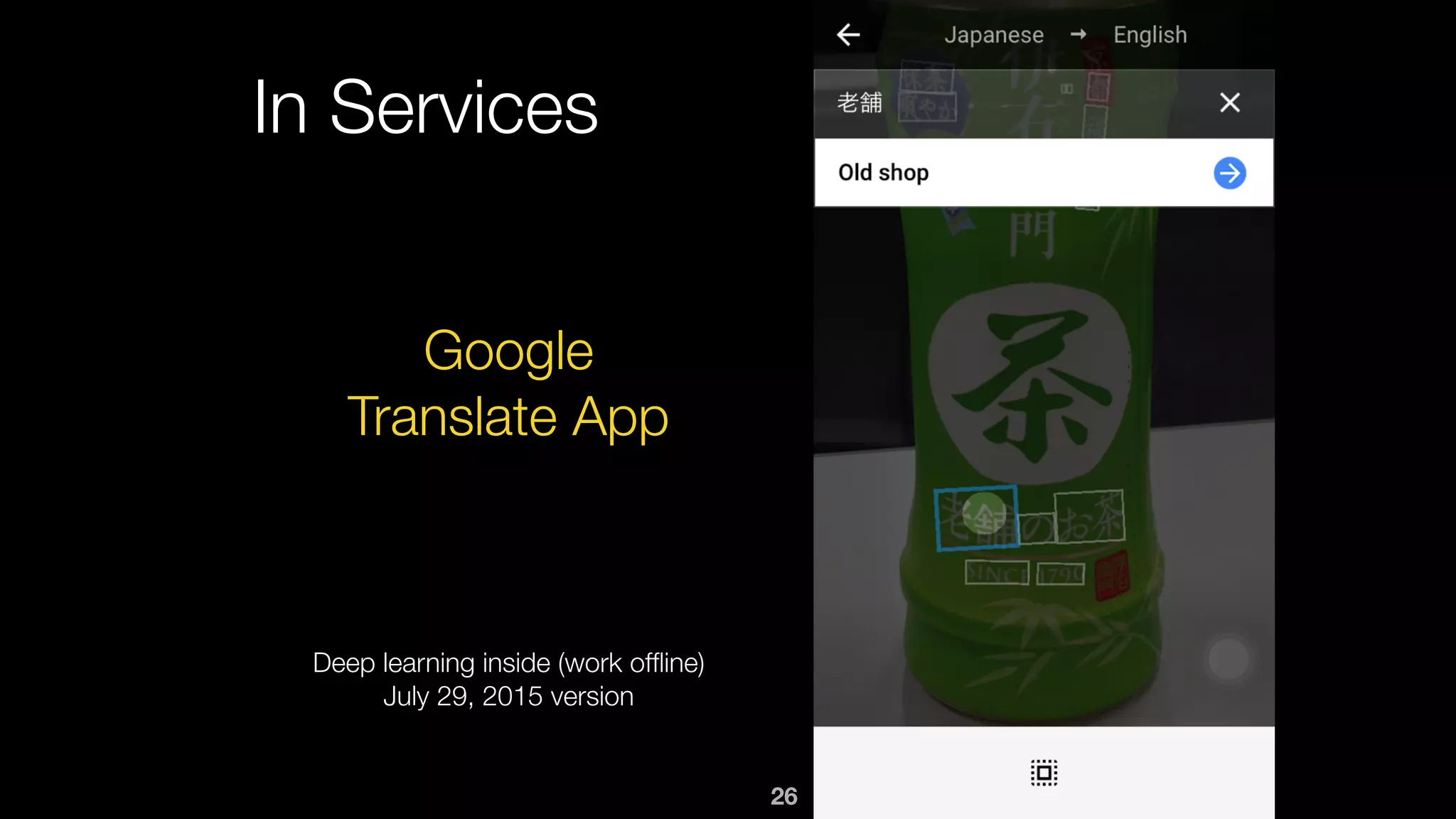

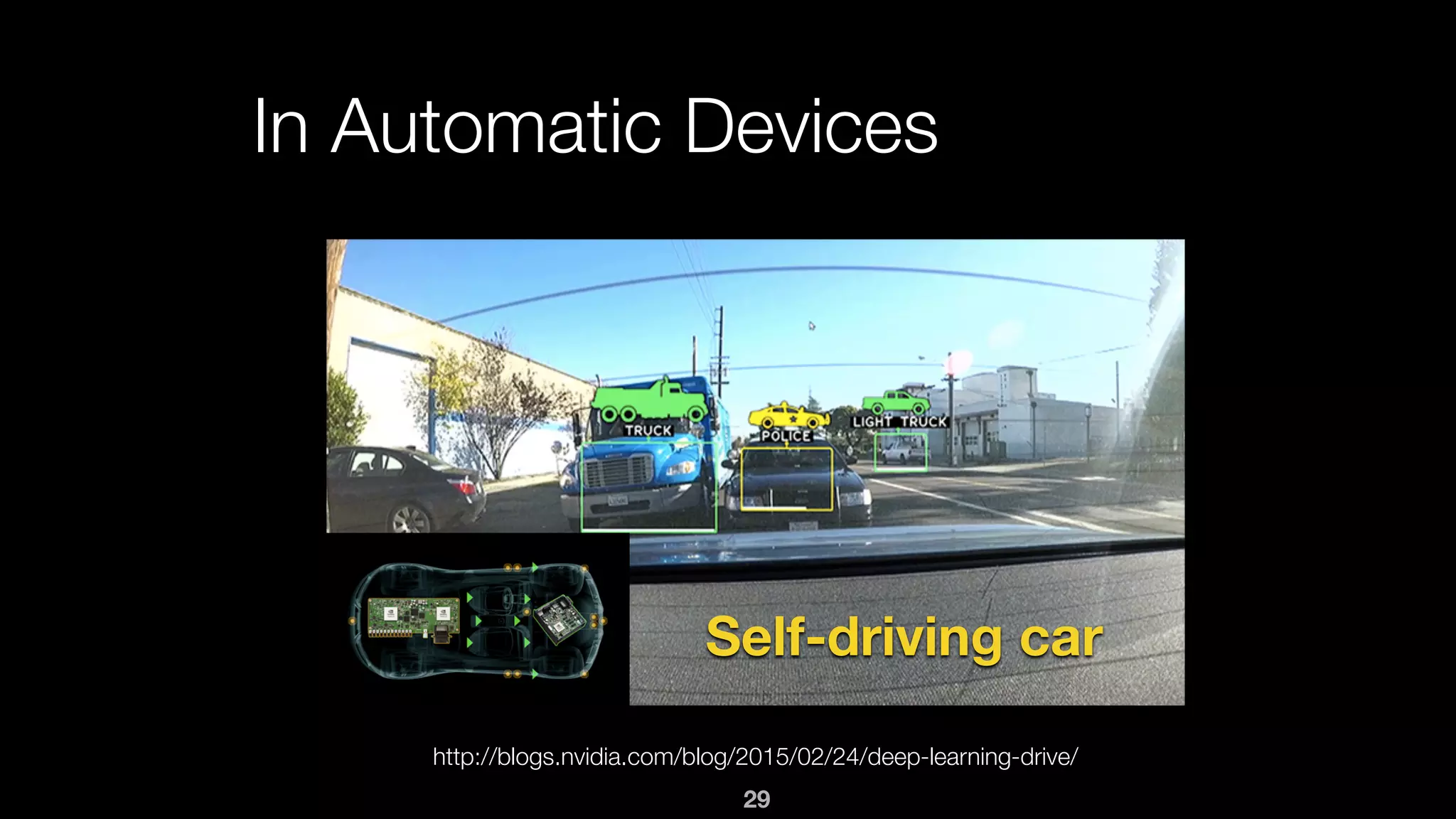

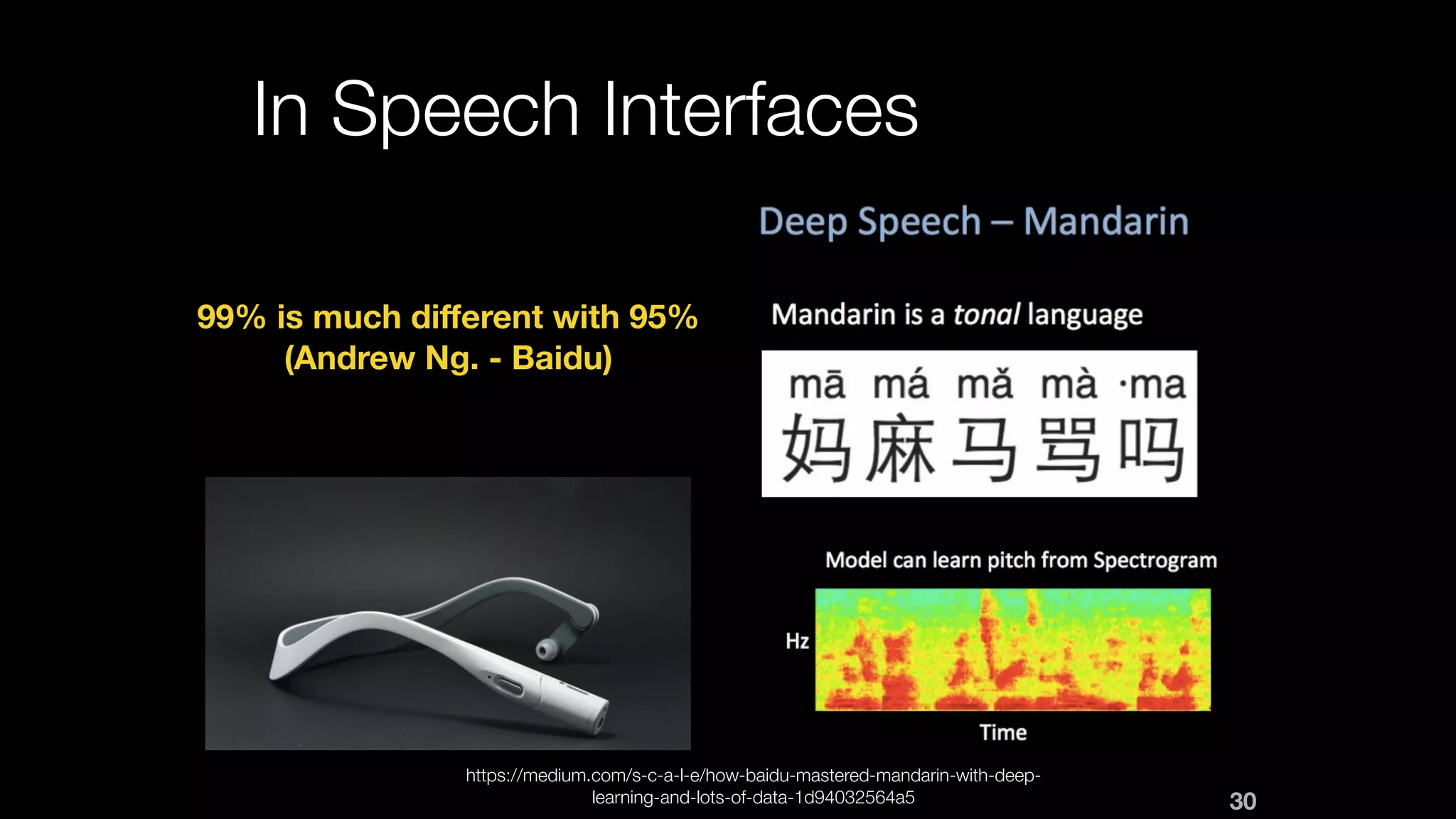

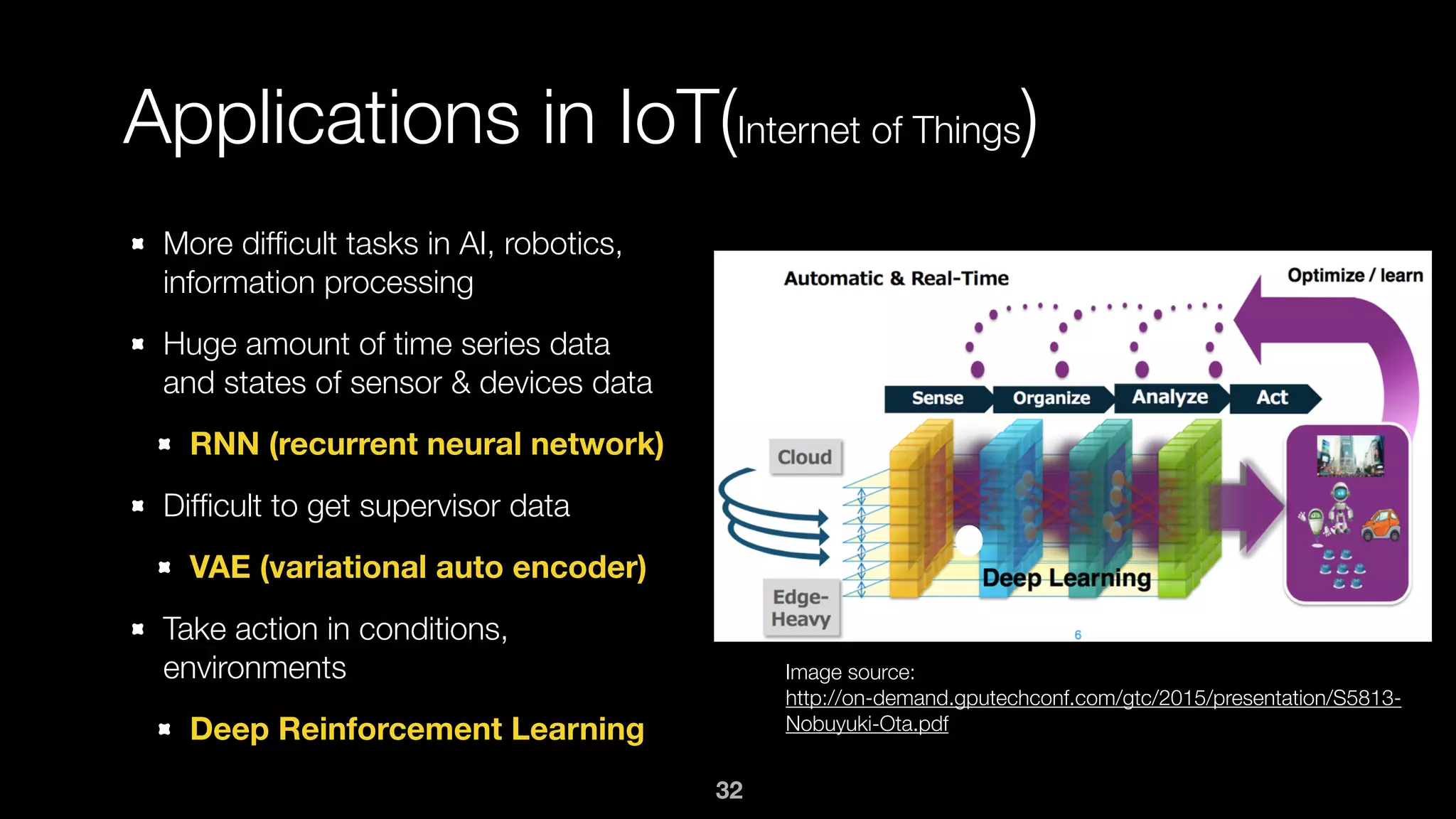

Tran Quoc Hoan discusses deep learning and its applications, as well as potential business models. Deep learning has led to significant improvements in areas like image and speech recognition compared to traditional machine learning. Some business models highlighted include developing deep learning frameworks, building hardware optimized for deep learning, using deep learning for IoT applications, and providing deep learning APIs and services. Deep learning shows promise across many sectors but also faces challenges in fully realizing its potential.

![RNN (3/3): Neural Turing Machine](https://image.slidesharecdn.com/deeplearningbusinessmodelsvnitc-2015-09-13-150912180100-lva1-app6891/75/Deep-Learning-And-Business-Models-VNITC-2015-09-13-35-2048.jpg)

RNN (3/3)

Neural Turing Machine [A. Graves, 2014]

A neural network that can be coupled to external memory.

Applications: COPY, PRIORITY SORT

Figure label: NN with parameters for coupling to external memories

![40

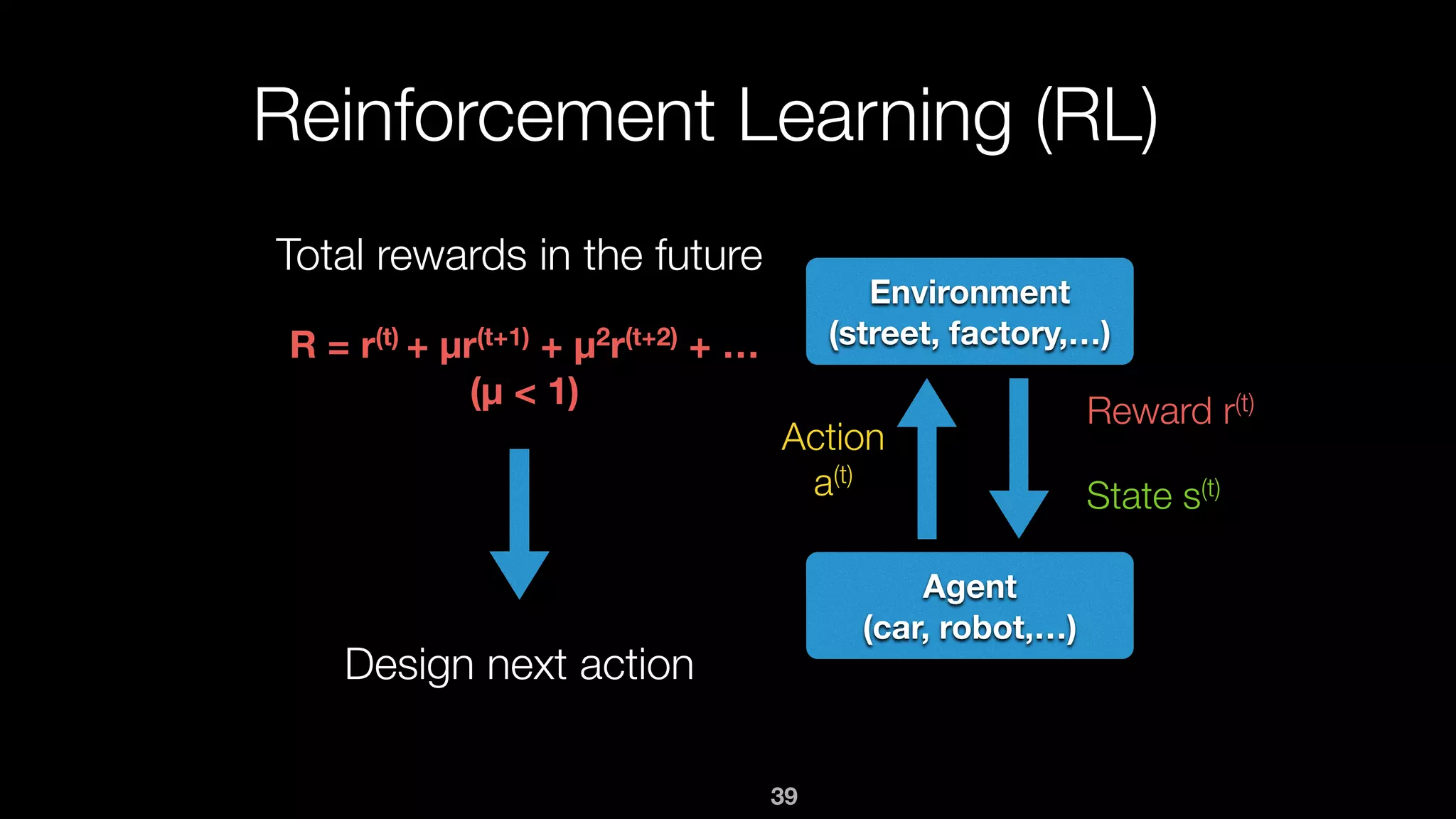

Deep Reinforcement Learning (RL)

Q - learning: need to know future expectation reward Q(s, a)

of action a at state s (Bellman update equation)

Q(s, a) <— Q(s, a) + ß ( r + µ maxa’ Q(s’, a’) - Q(s, a) )

Deep Q-Learning Network [V. Mnih, 2015]

Get Q(s, a) by a deep neural network Q(s, a, w)

Maximize: L(w) = E[ (r + µ max Q(s’, a’, w) - Q(s, a, w))2 ]

Useful when there are many states](https://image.slidesharecdn.com/deeplearningbusinessmodelsvnitc-2015-09-13-150912180100-lva1-app6891/75/Deep-Learning-And-Business-Models-VNITC-2015-09-13-40-2048.jpg)
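The Q-learning update on this slide can be illustrated with a minimal tabular sketch. This is not code from the talk: the function name `q_update`, the toy table of 3 states and 2 actions, and the default values `beta=0.1` (learning rate) and `mu=0.9` (discount factor) are all assumptions chosen for illustration.

```python
def q_update(Q, s, a, r, s_next, beta=0.1, mu=0.9):
    """Apply one tabular Q-learning step in place and return the TD error.

    Q is a list of rows, one per state, each holding one value per action.
    beta (learning rate) and mu (discount factor) are illustrative defaults.
    """
    td_target = r + mu * max(Q[s_next])   # r + mu * max_a' Q(s', a')
    td_error = td_target - Q[s][a]        # difference from the current estimate
    Q[s][a] += beta * td_error            # move Q(s, a) toward the target
    return td_error


# Hypothetical toy problem: 3 states, 2 actions, all values start at zero.
Q = [[0.0, 0.0] for _ in range(3)]

# One observed transition: in state 0, take action 1, get reward 1.0, land in state 2.
td_error = q_update(Q, s=0, a=1, r=1.0, s_next=2)

# The squared TD error is the per-sample quantity inside the expectation L(w)
# when the table is replaced by a network Q(s, a, w).
squared_td_error = td_error ** 2
```

A table like `Q` needs one entry per state-action pair, which is why the slide suggests approximating Q(s, a) with a neural network when there are many states.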