This document outlines a 65-hour course on neural networks and deep learning taught by Andres Mendez Vazquez at Cinvestav Guadalajara. The course introduces students to the concepts behind neural networks, focusing on the main network architectures and their applications. Topics include traditional neural networks, deep learning, optimization techniques for training deep models, and specific deep architectures such as convolutional and recurrent neural networks. Grades are based on midterm exams, homework assignments, and a final project.

Cinvestav Guadalajara

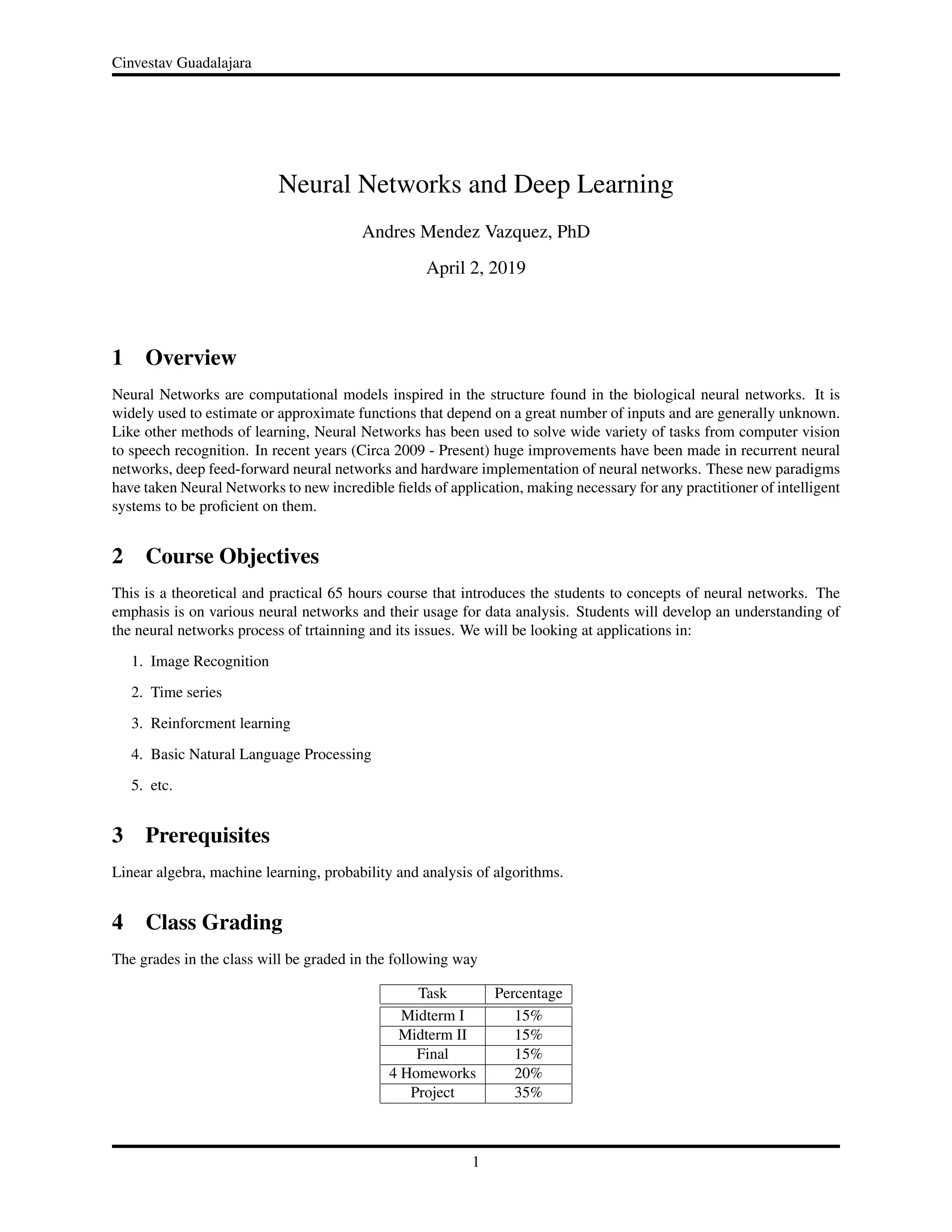

5 Course Topics

5.1 Traditional Neural Networks

1. Introduction [1, 2, 3]

(a) Definition

(b) Models of a Neuron

(c) Neural networks as directed graphs

(d) Knowledge representation

2. Learning Process [1, 2, 3]

(a) Error-correction learning

(b) Memory-based learning

(c) Hebbian learning

(d) Competitive learning

3. Single Layer Perceptrons [1, 2, 3]

(a) Introduction

(b) Basic Theory

(c) Perceptron model

(d) Perceptron convergence theorem

4. Multilayer Perceptron [1, 2, 3]

(a) Introduction

(b) XOR problem

(c) Architecture

(d) Back-propagation algorithm

(e) Virtues and limitations of back-propagation

5. Hopfield Networks [2, 4]

(a) Energy-Based Models

(b) Training Strategies

i. Hebbian Learning

ii. Storkey Learning Rule

6. Radial-Basis Function Networks [1, 2, 5]

(a) Introduction

(b) Cover’s theorem

(c) Regularization theory

(d) Generalized Radial-Basis Function Networks

(e) Comparison with Multilayer Perceptrons

(f) Learning Strategies

i. Recursive Least-Squares Estimation

ii. Forward Selection Strategies


7. Self-Organizing Maps [1]

(a) Introduction

(b) Two Basic Feature-Mapping Models

(c) Self-Organizing Map

(d) Properties of the Feature Map

5.2 Deep Learning

1. Introduction [6]

2. Deep Feed Forward Networks [6]

(a) The XOR problem

(b) Gradient-Based Learning

(c) Architecture Design

(d) Back-Propagation and Other Differentiation Algorithms

(e) The Vanishing Gradient Problem

3. Optimization for Training Deep Models [7, 8, 9, 10, 11, 12, 6, 13, 14, 15]

(a) Basic Algorithms

i. Stochastic Gradient Descent

ii. Comparison of Gradient Descent with Stochastic Gradient Descent

iii. Automatic Differentiation

(b) Batch Normalization

(c) Parameter Initialization Strategies

(d) Algorithms with Adaptive Learning Rates

(e) Approximate Second-Order Methods

(f) Sampling and Monte Carlo Methods

4. Regularization for Deep Learning [6, 16, 17, 18, 19, 1]

(a) Norm Penalties as Constrained Optimization

(b) Regularization and Under-Constrained Problems

(c) Noise Robustness

(d) Early Stopping

(e) Sparse Representations

(f) Adversarial Training

5. Convolutional Networks [20, 6, 21]

(a) The Convolution Operation

(b) Convolution and Pooling as an Infinitely Strong Prior

(c) Variants of the Basic Convolution Function

(d) Efficient Convolution Algorithms

6. Recurrent and Recursive Nets [22, 18, 6, 1]

(a) Introduction

(b) Recurrent Neural Networks


(c) Deep Recurrent Networks

(d) Recursive Neural Networks

(e) The Long Short-Term Memory

7. Auto-encoders [6, 23, 24, 25, 26]

(a) Purpose

(b) Structure

(c) Variations

i. Denoising autoencoders

ii. Sparse autoencoders

iii. Undercomplete autoencoders

iv. Variational autoencoders

8. Deep Boltzmann Machines [6, 27, 28, 29]

(a) Introduction

(b) Restricted Boltzmann Machines

(c) Deep Boltzmann Machines

(d) Boltzmann Machines for Real-Valued Data


References

[1] S. Haykin, Neural Networks and Learning Machines. Prentice Hall, 2009.

[2] R. Rojas, Neural networks: a systematic introduction. Springer Science & Business Media, 2013.

[3] T. Hastie, R. Tibshirani, and J. Friedman, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition. Springer Series in Statistics, Springer New York, 2009.

[4] H. Wang and B. Raj, “A survey: Time travel in deep learning space: An introduction to deep learning models and how deep learning models evolved from the initial ideas,” arXiv preprint arXiv:1510.04781, 2015.

[5] L. Zhang and P. N. Suganthan, “A survey of randomized algorithms for training neural networks,” Information Sciences, vol. 364, pp. 146–155, 2016.

[6] I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning. The MIT Press, 2016.

[7] L. Bottou, “Large-scale machine learning with stochastic gradient descent,” in Proceedings of COMPSTAT’2010, pp. 177–186, Springer, 2010.

[8] J. Duchi, E. Hazan, and Y. Singer, “Adaptive subgradient methods for online learning and stochastic optimization,” Journal of Machine Learning Research, vol. 12, no. Jul, pp. 2121–2159, 2011.

[9] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

[10] Q. V. Le, J. Ngiam, A. Coates, A. Lahiri, B. Prochnow, and A. Y. Ng, “On optimization methods for deep learning,” in Proceedings of the 28th International Conference on Machine Learning, pp. 265–272, Omnipress, 2011.

[11] J. A. Snyman, Practical mathematical optimization. Springer, 2018.

[12] L. Bottou, F. E. Curtis, and J. Nocedal, “Optimization methods for large-scale machine learning,” SIAM Review, vol. 60, no. 2, pp. 223–311, 2018.

[13] A. G. Baydin, B. A. Pearlmutter, A. A. Radul, and J. M. Siskind, “Automatic differentiation in machine learning: a survey,” Journal of Machine Learning Research, vol. 18, no. 153, pp. 1–43, 2018.

[14] A. Griewank and A. Walther, Evaluating derivatives: principles and techniques of algorithmic differentiation, vol. 105. SIAM, 2008.

[15] A. Wibisono, A. C. Wilson, and M. I. Jordan, “A variational perspective on accelerated methods in optimization,” Proceedings of the National Academy of Sciences, vol. 113, no. 47, pp. E7351–E7358, 2016.

[16] C. Zhang, S. Bengio, M. Hardt, B. Recht, and O. Vinyals, “Understanding deep learning requires rethinking generalization,” arXiv preprint arXiv:1611.03530, 2016.

[17] M. D. Zeiler and R. Fergus, “Stochastic pooling for regularization of deep convolutional neural networks,” arXiv preprint arXiv:1301.3557, 2013.

[18] W. Zaremba, I. Sutskever, and O. Vinyals, “Recurrent neural network regularization,” arXiv preprint arXiv:1409.2329, 2014.

[19] M. J. Kochenderfer and T. A. Wheeler, Algorithms for Optimization. MIT Press, 2019.

[20] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, and L. D. Jackel, “Backpropagation applied to handwritten zip code recognition,” Neural Computation, vol. 1, no. 4, pp. 541–551, 1989.

[21] Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, et al., “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.


[22] T. Mikolov, M. Karafiát, L. Burget, J. Černocký, and S. Khudanpur, “Recurrent neural network based language model,” in Eleventh Annual Conference of the International Speech Communication Association, 2010.

[23] D. P. Kingma and M. Welling, “Auto-encoding variational Bayes,” arXiv preprint arXiv:1312.6114, 2013.

[24] Y. Bengio, “Learning deep architectures for AI,” Foundations and Trends in Machine Learning, vol. 2, no. 1, pp. 1–127, 2009.

[25] A. B. L. Larsen, S. K. Sønderby, H. Larochelle, and O. Winther, “Autoencoding beyond pixels using a learned similarity metric,” in Proceedings of The 33rd International Conference on Machine Learning (M. F. Balcan and K. Q. Weinberger, eds.), vol. 48 of Proceedings of Machine Learning Research, (New York, New York, USA), pp. 1558–1566, PMLR, 20–22 Jun 2016.

[26] A. Makhzani and B. Frey, “K-sparse autoencoders,” arXiv preprint arXiv:1312.5663, 2013.

[27] E. Aarts and J. Korst, “Simulated annealing and Boltzmann machines,” 1988.

[28] H. Larochelle, M. Mandel, R. Pascanu, and Y. Bengio, “Learning algorithms for the classification restricted Boltzmann machine,” Journal of Machine Learning Research, vol. 13, no. Mar, pp. 643–669, 2012.

[29] R. Salakhutdinov and G. Hinton, “Deep Boltzmann machines,” in Artificial Intelligence and Statistics, pp. 448–455, 2009.
