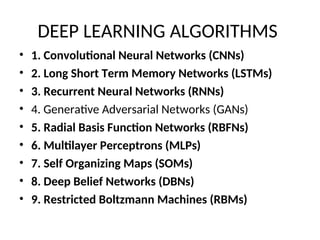

The document outlines the syllabus for a deep learning course, covering historical trends, basics of machine learning, and various deep learning algorithms. It details early developments in neural networks, significant milestones, and various types of machine learning, including supervised, unsupervised, and reinforcement learning. Additionally, it emphasizes the importance of linear algebra in machine learning and provides insights into specific algorithms such as CNNs, LSTMs, and GANS.