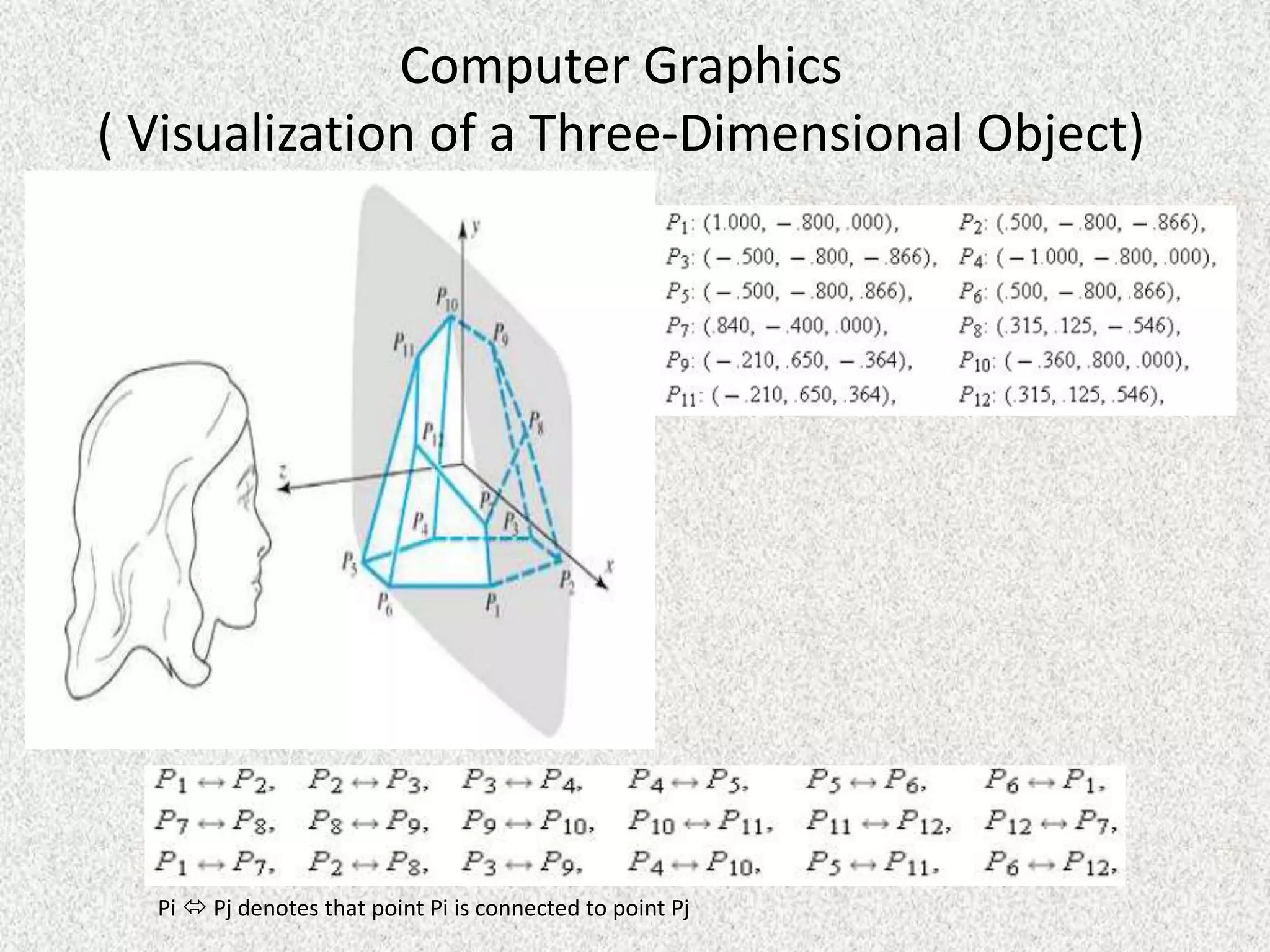

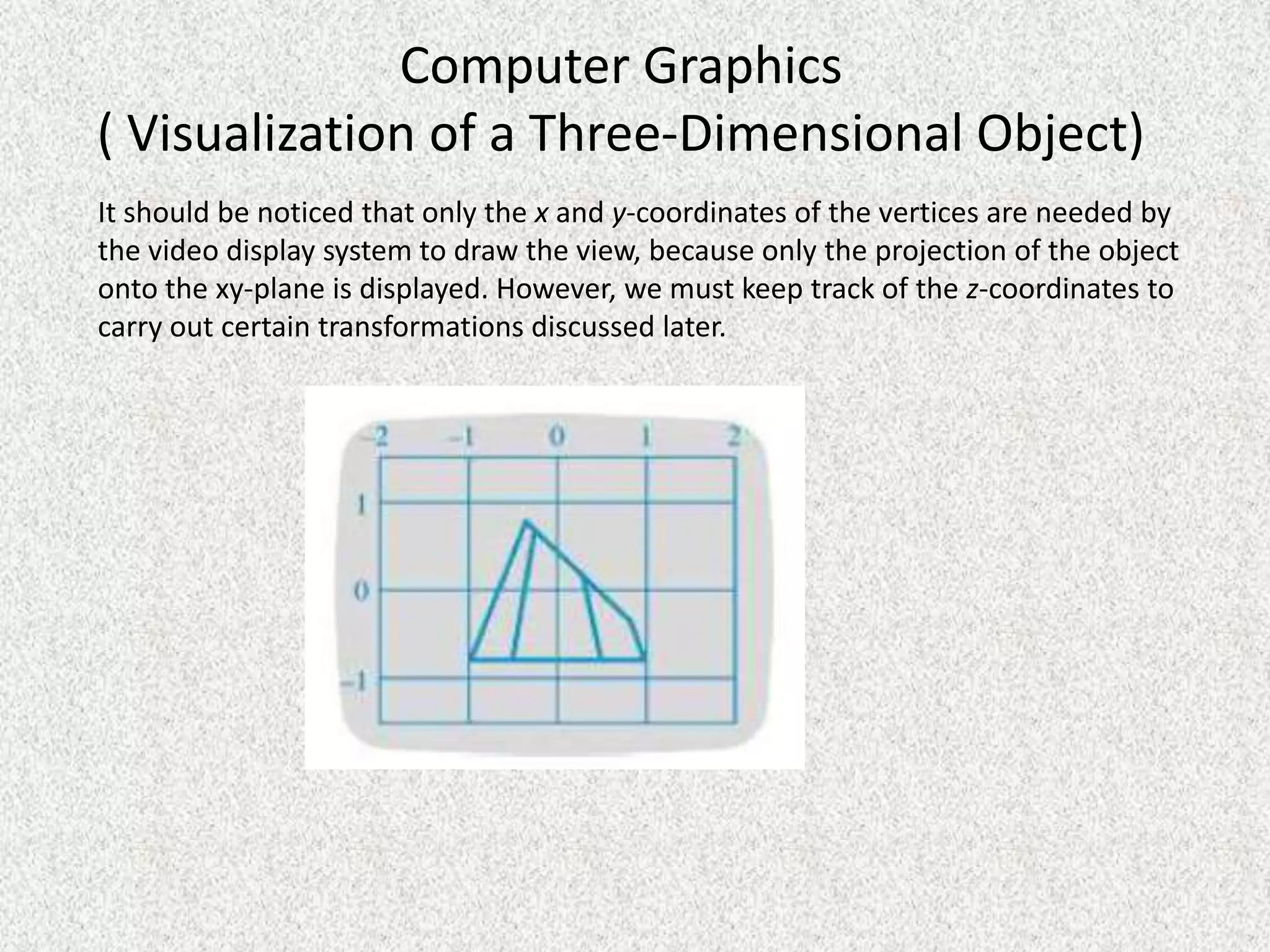

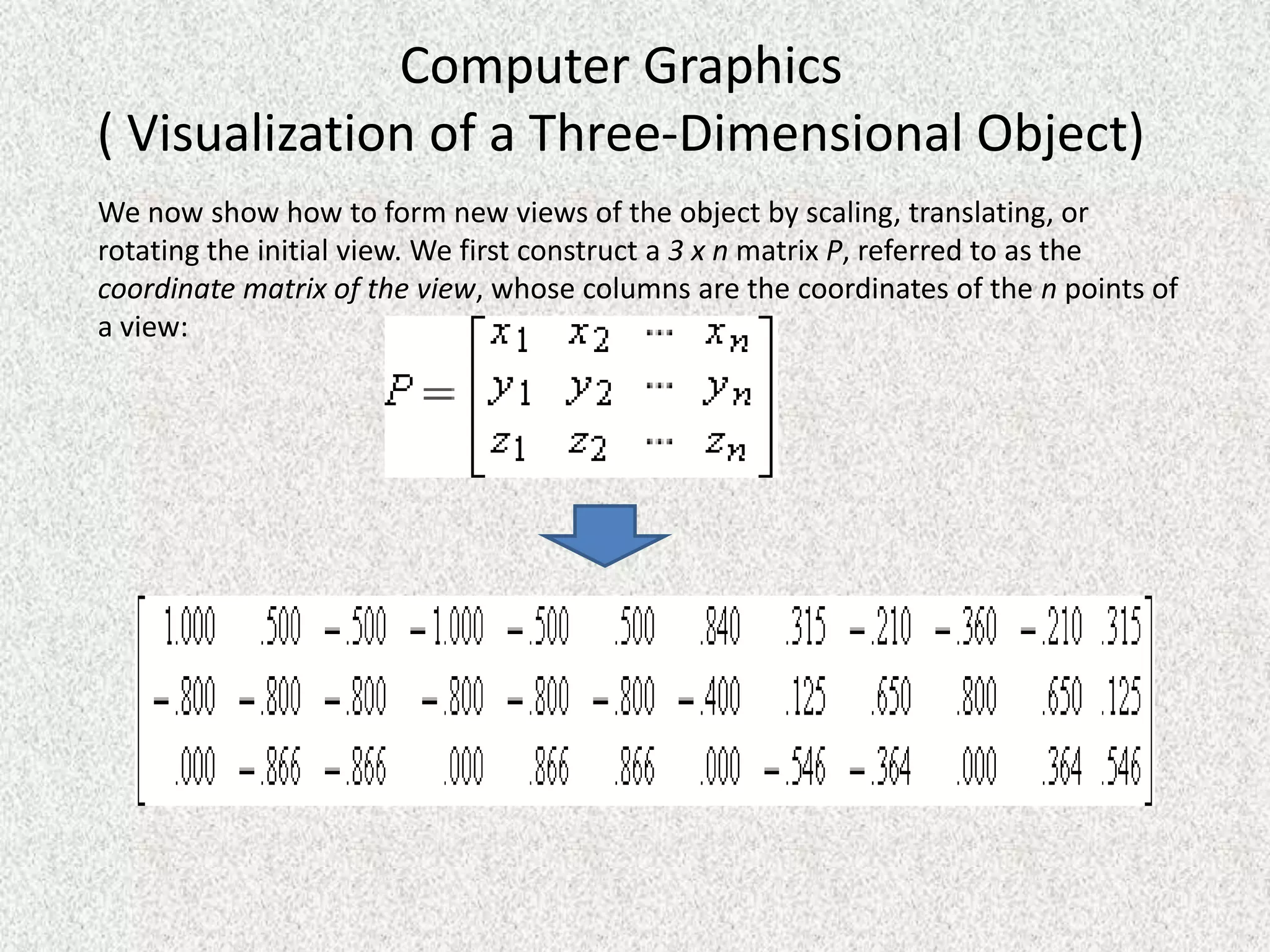

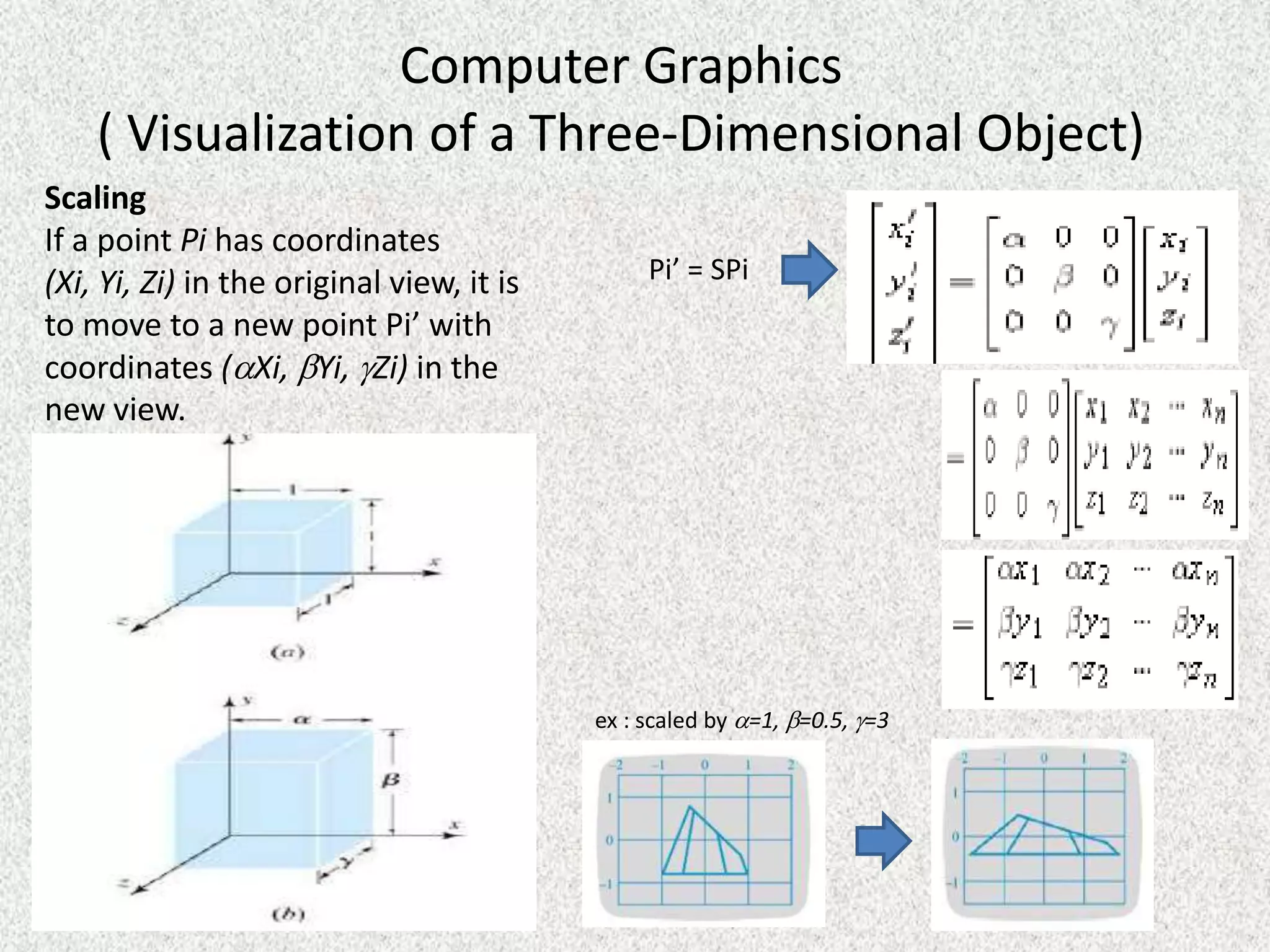

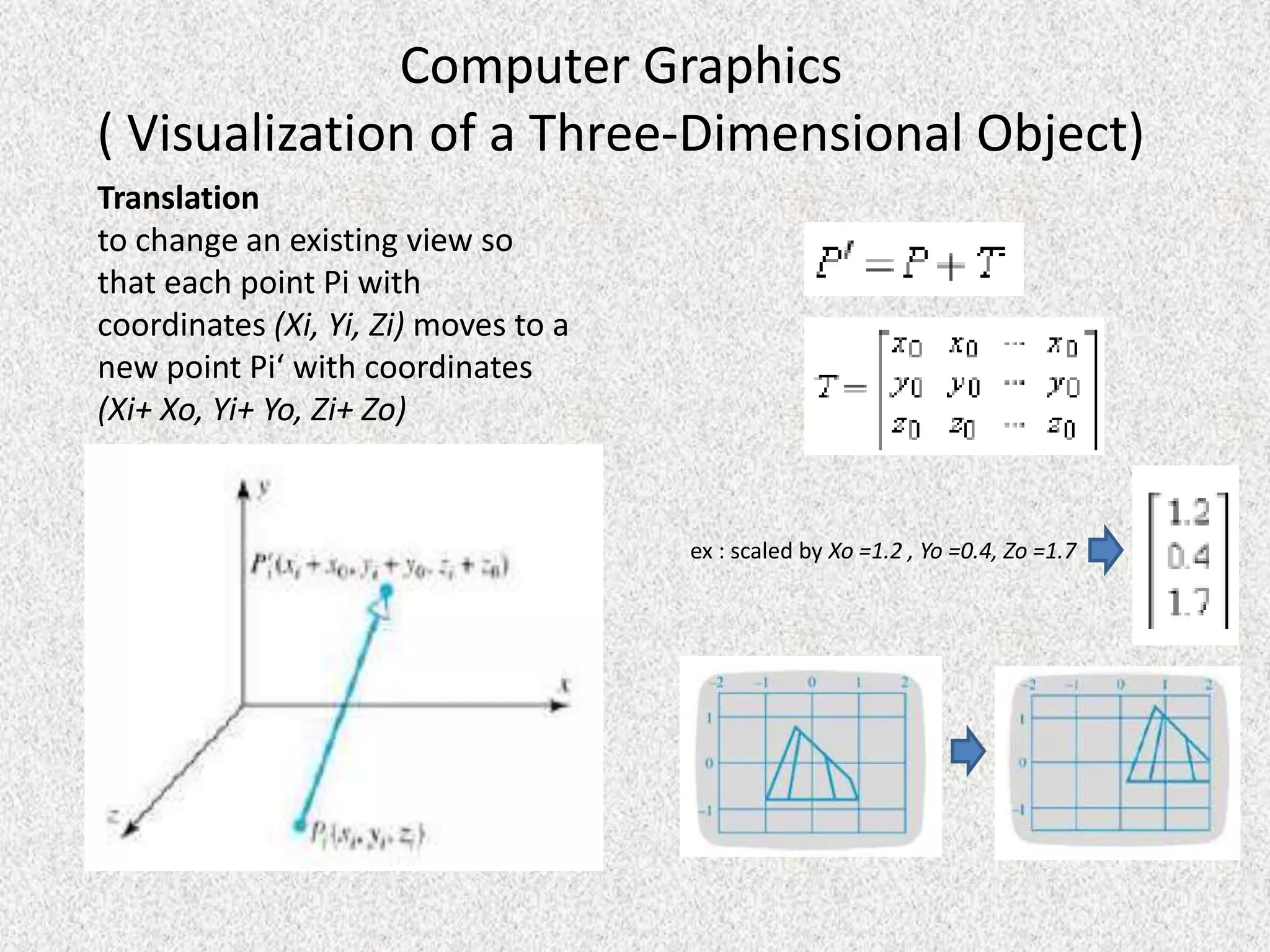

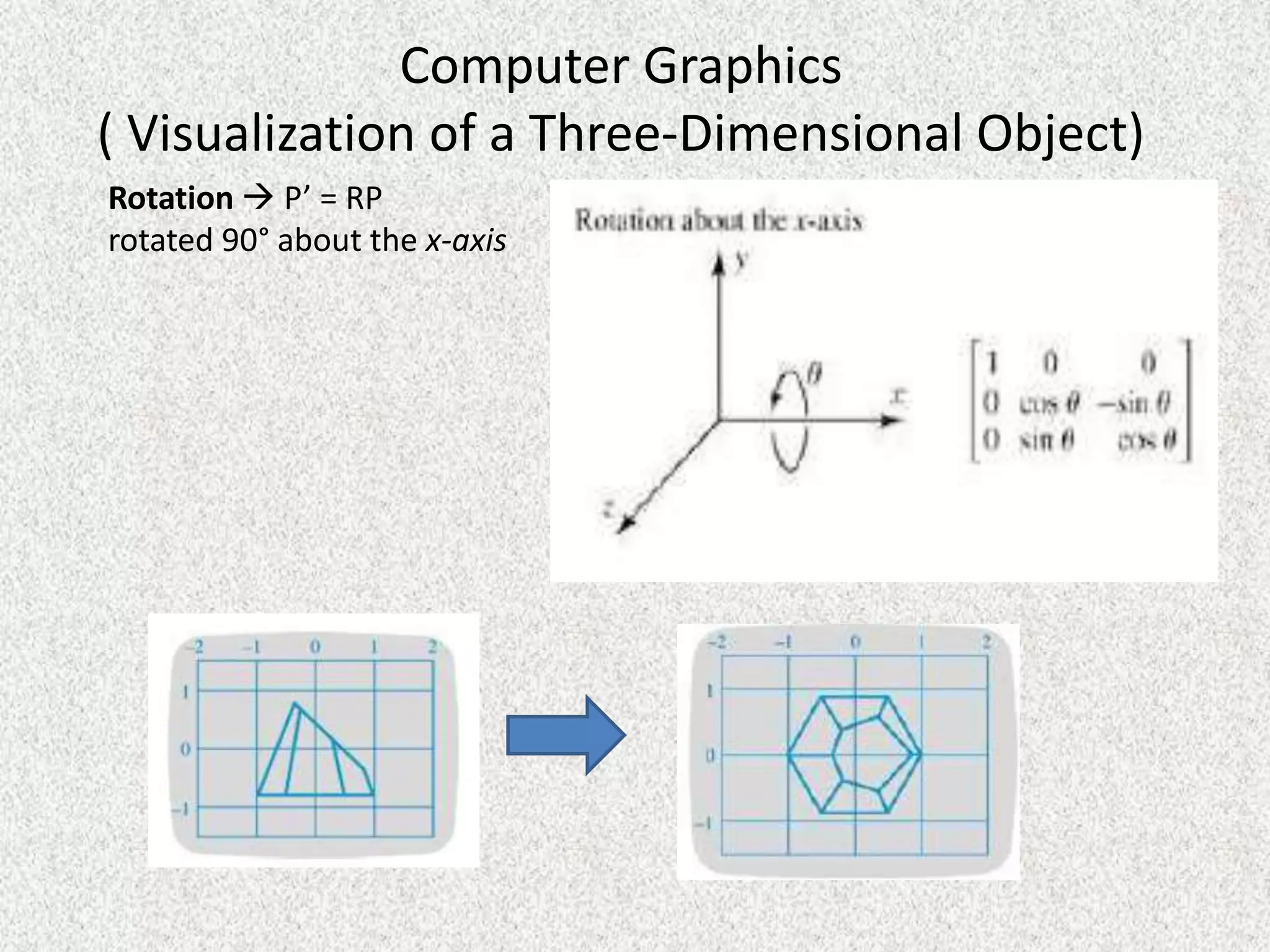

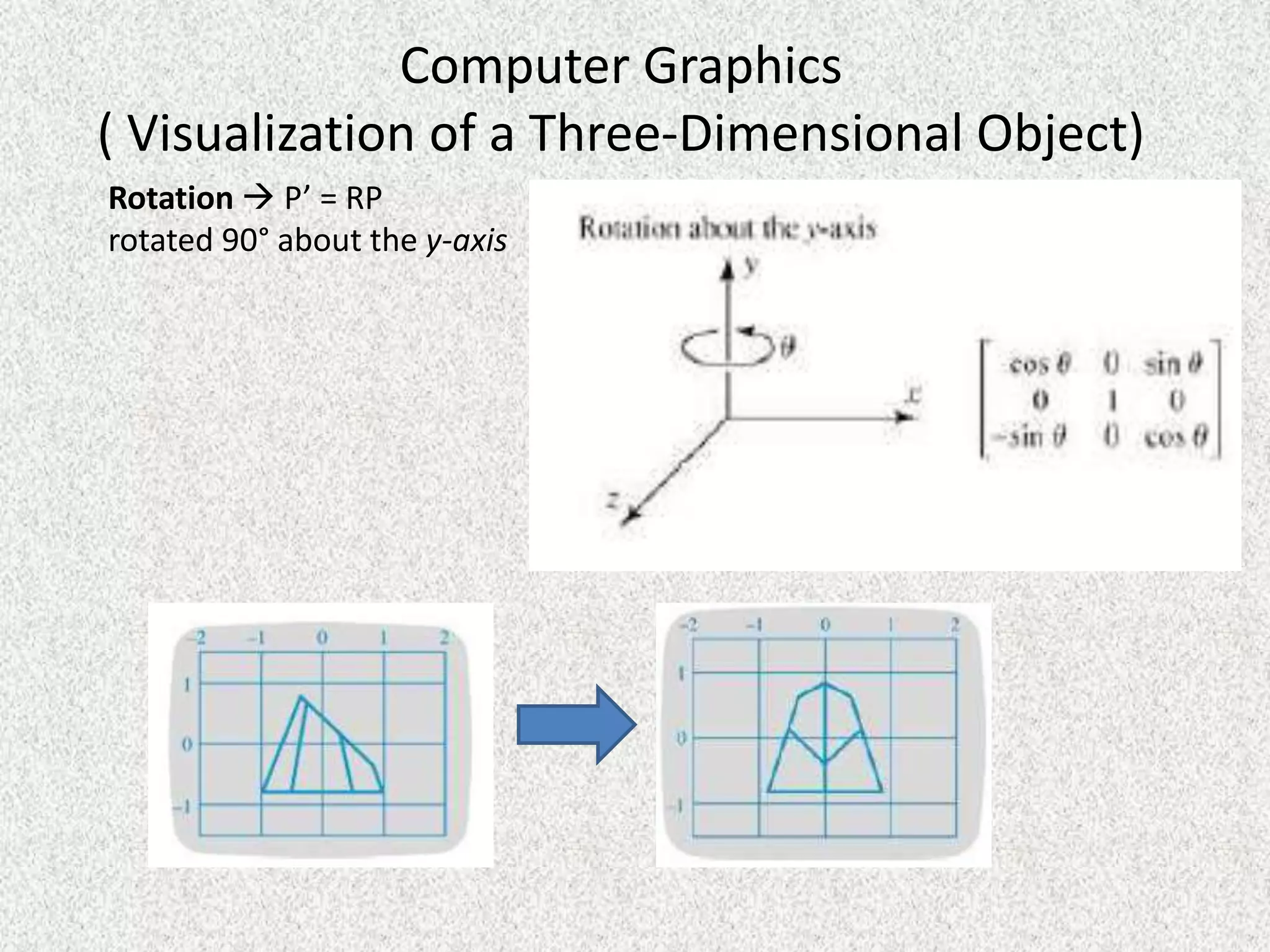

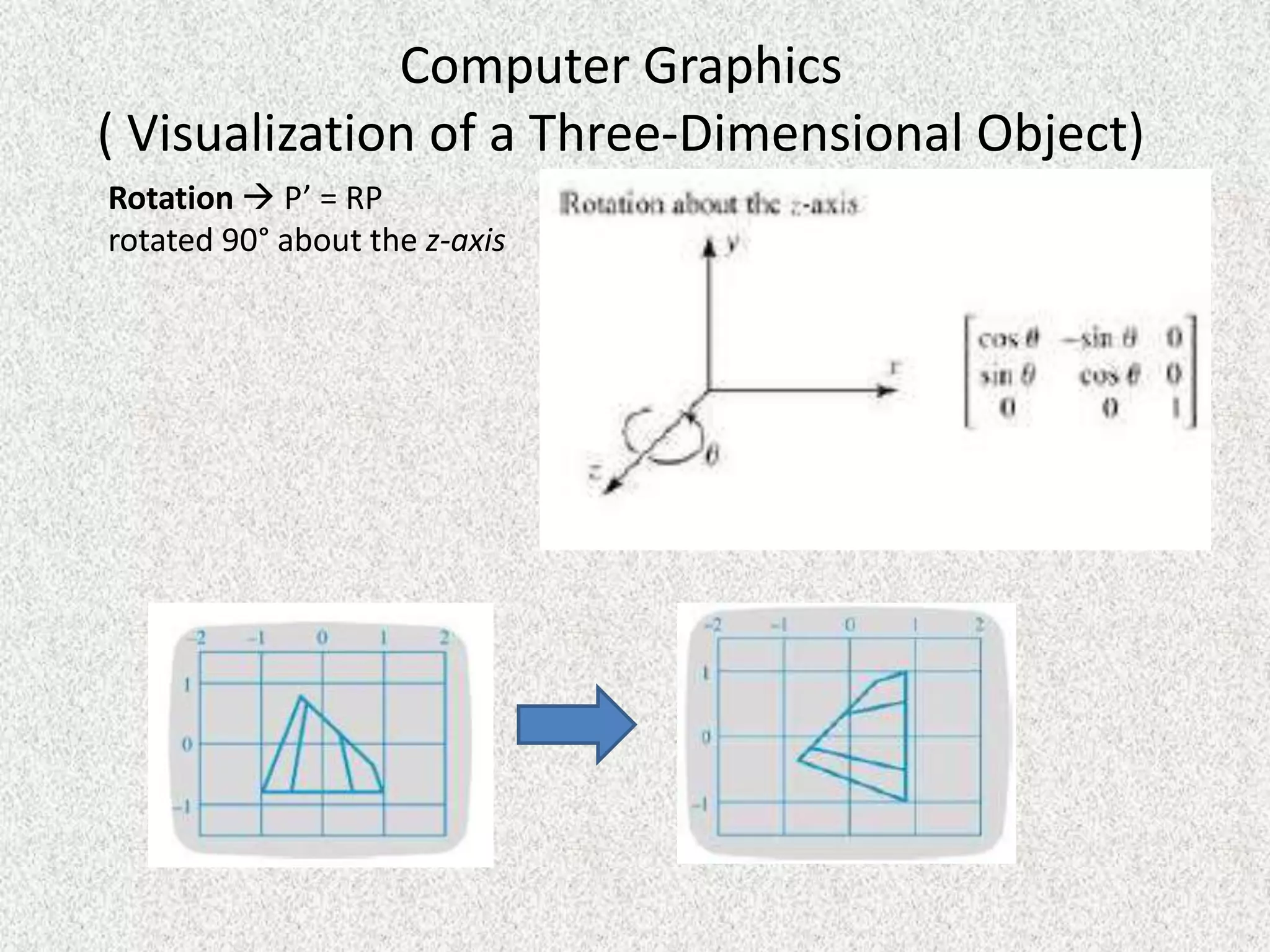

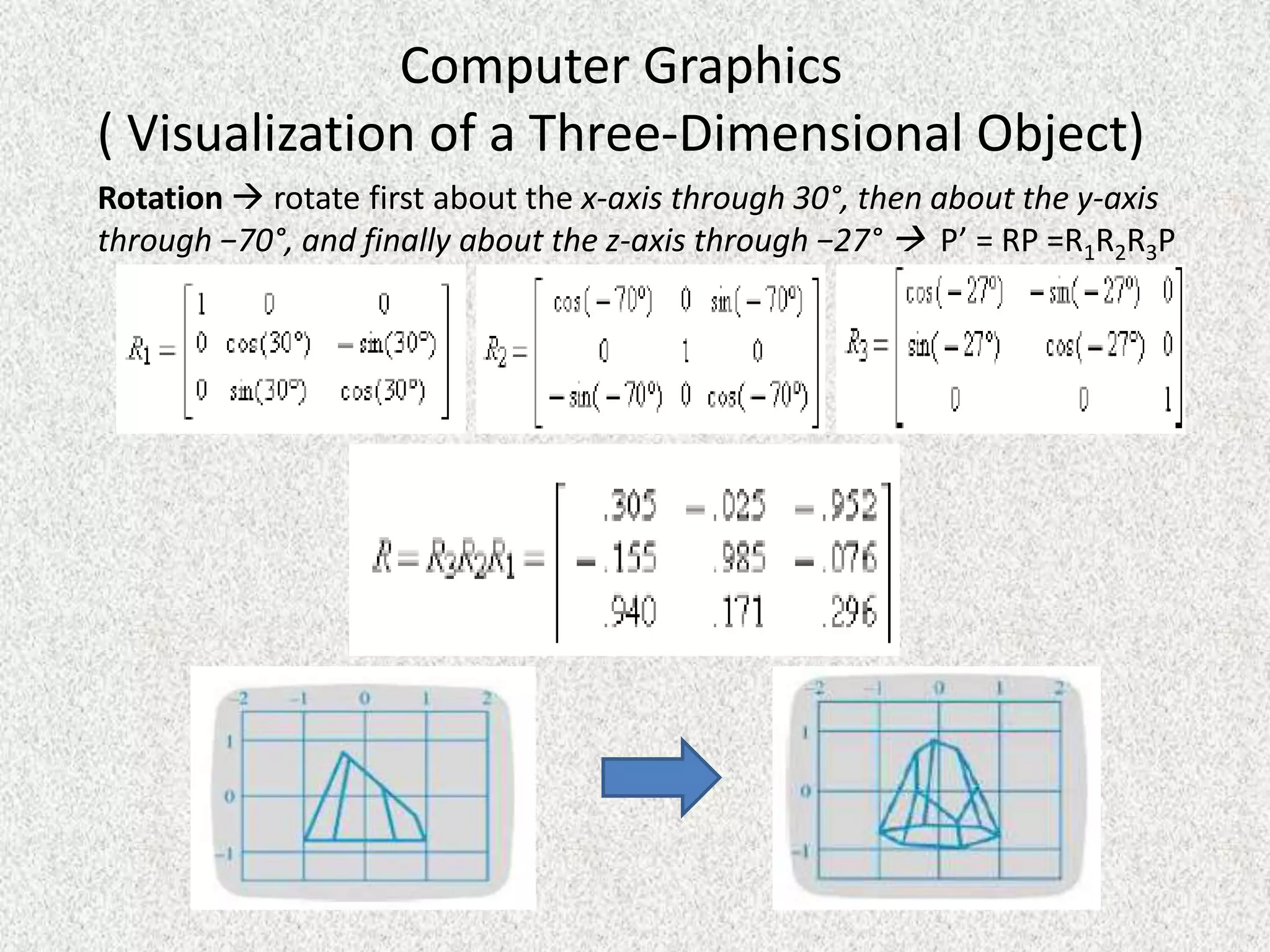

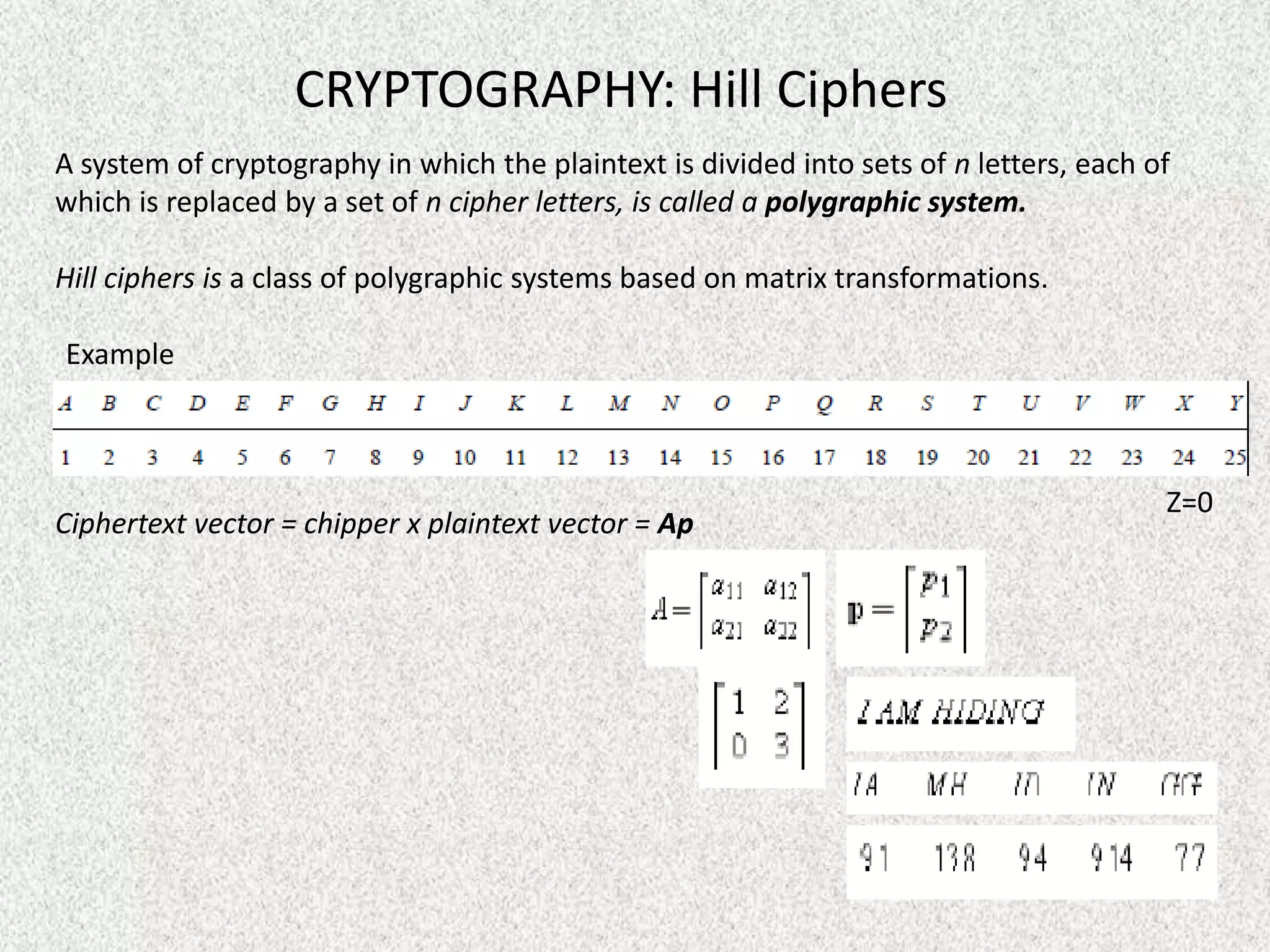

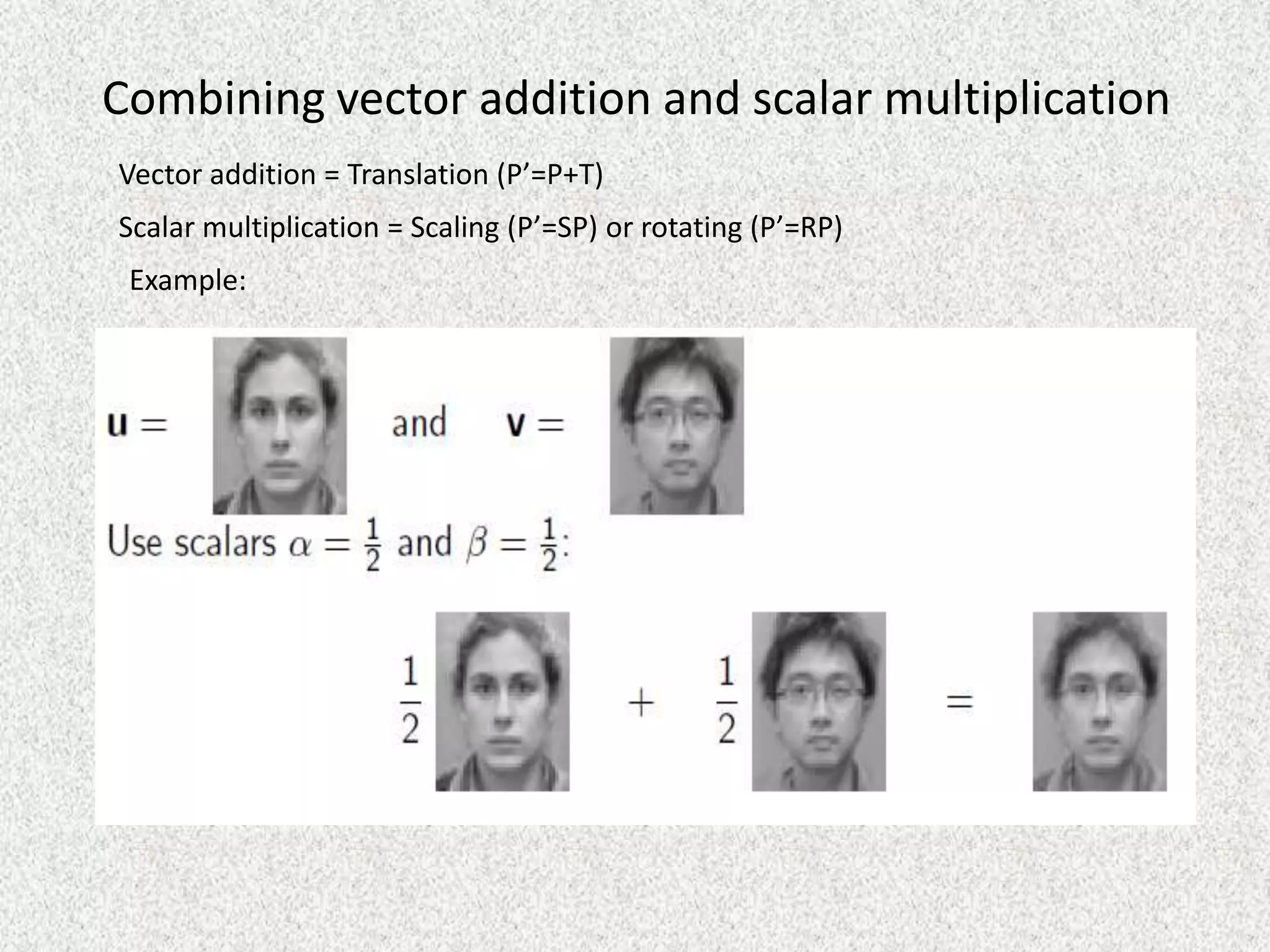

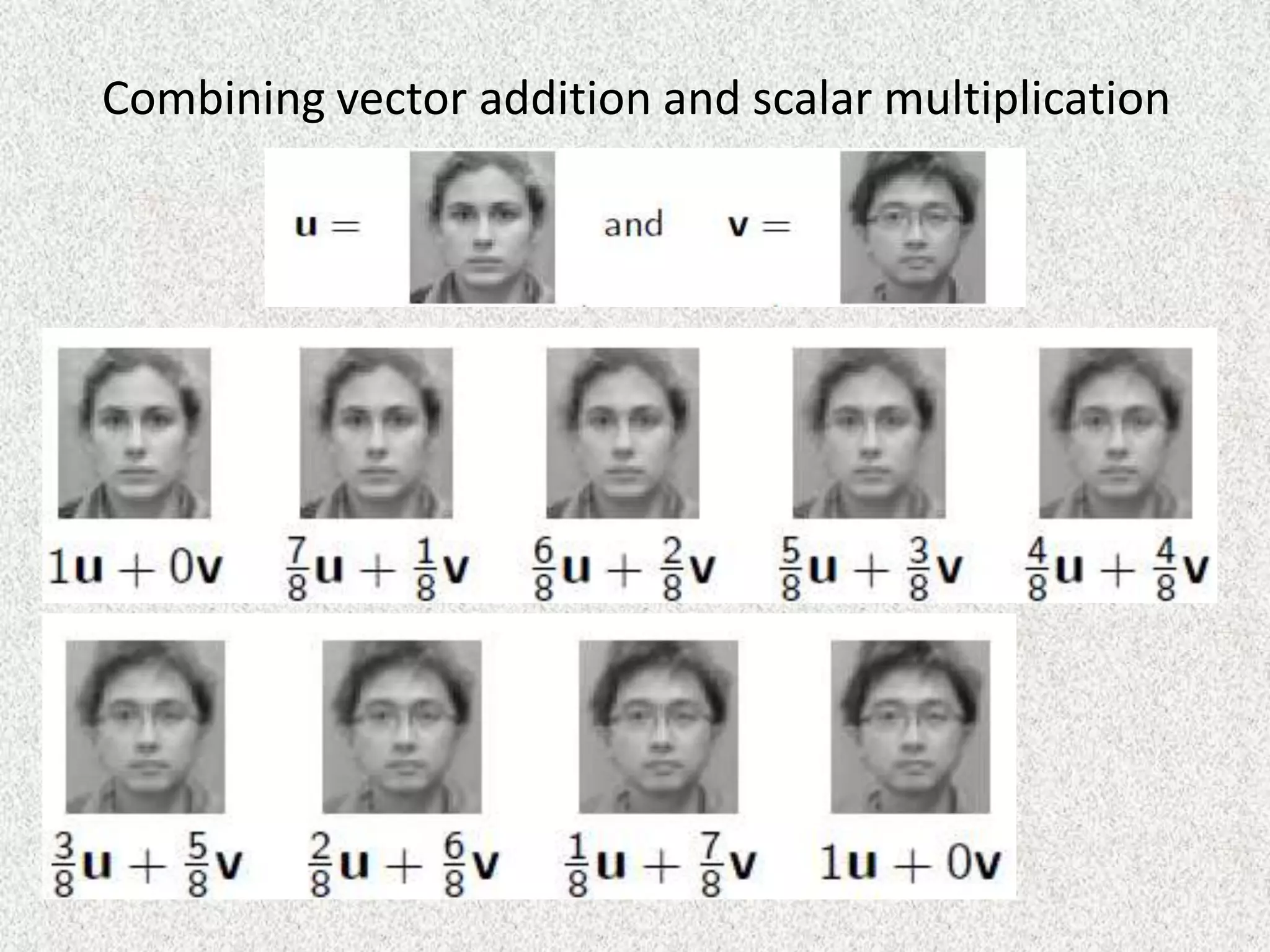

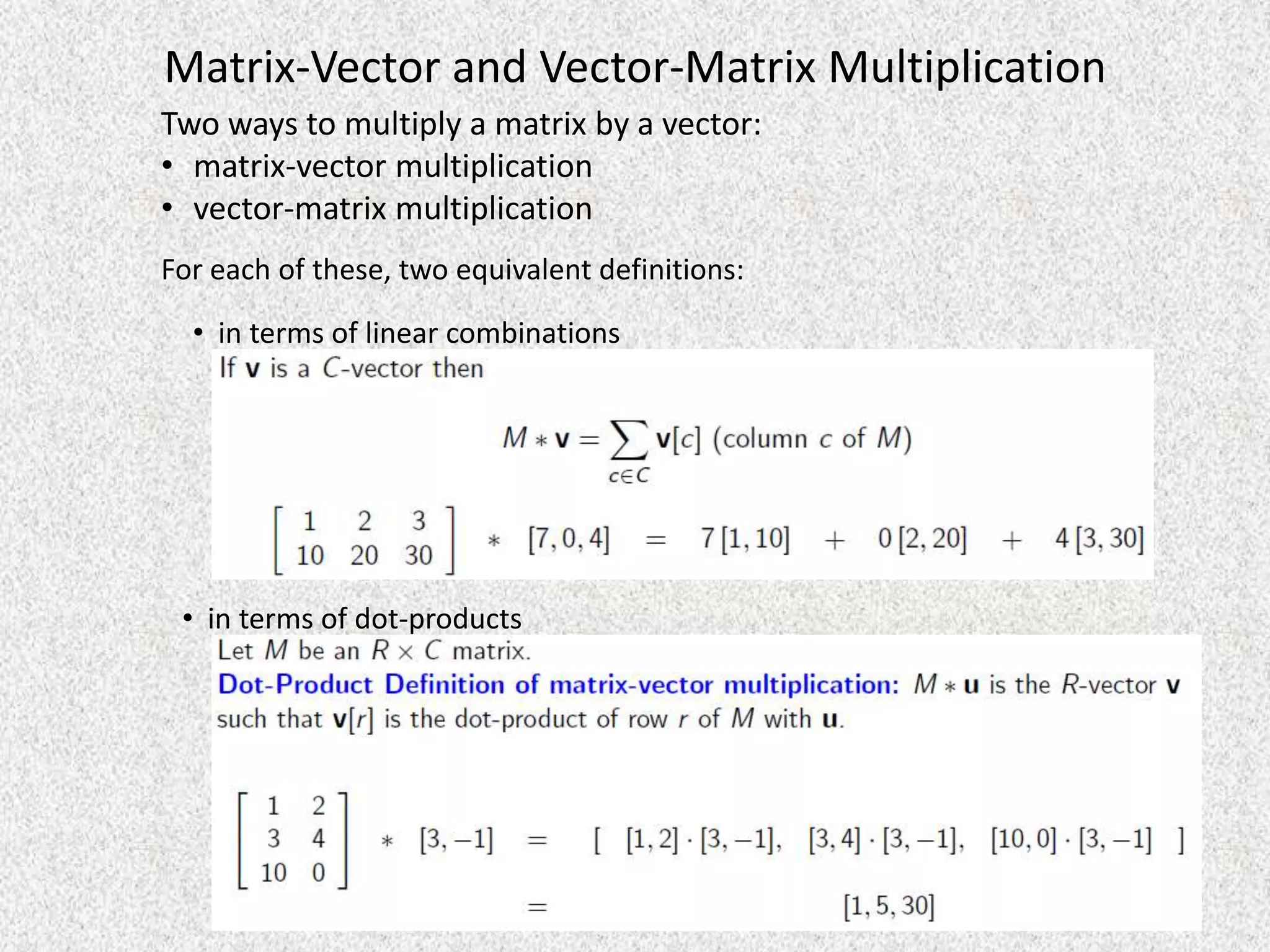

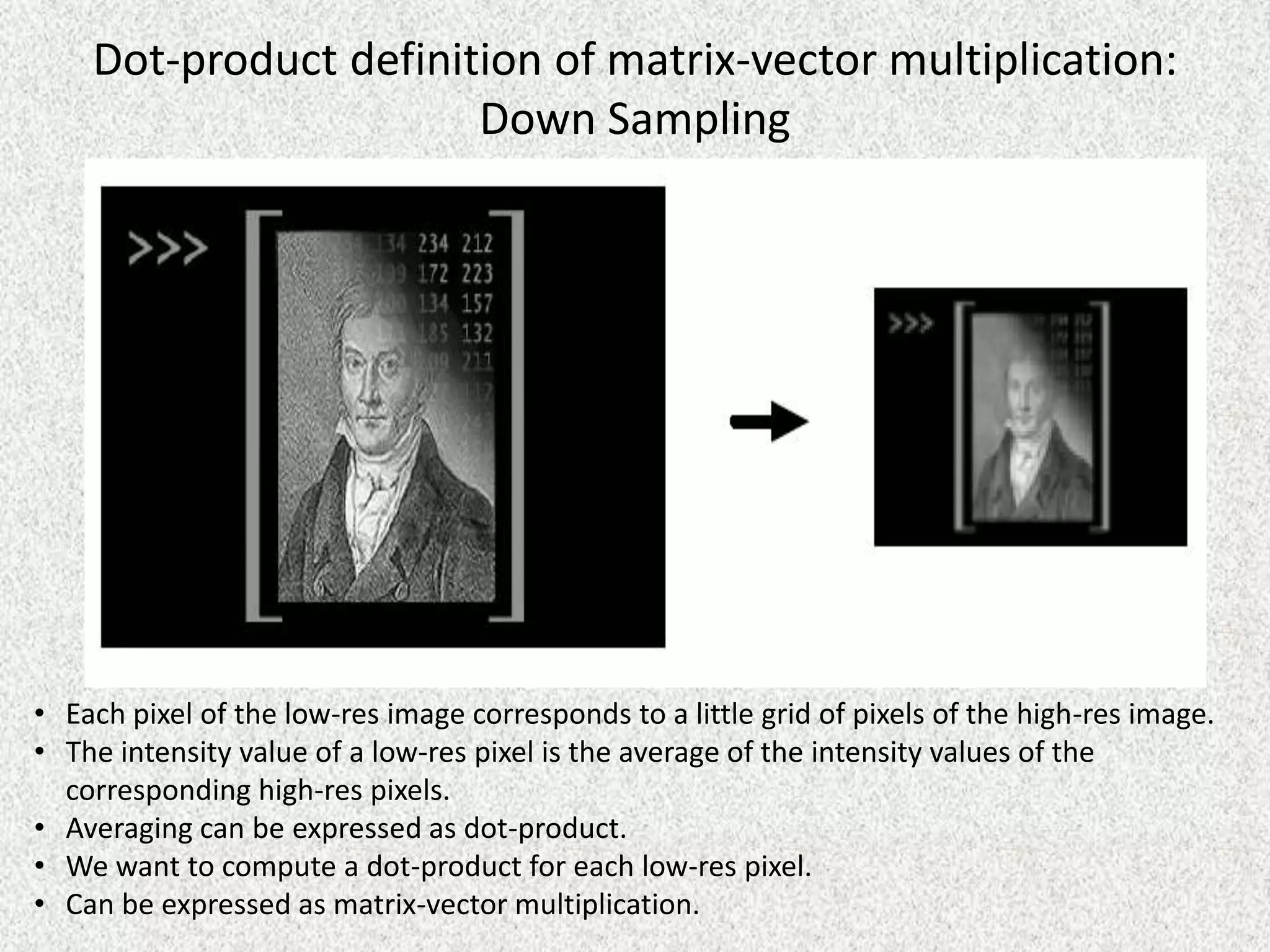

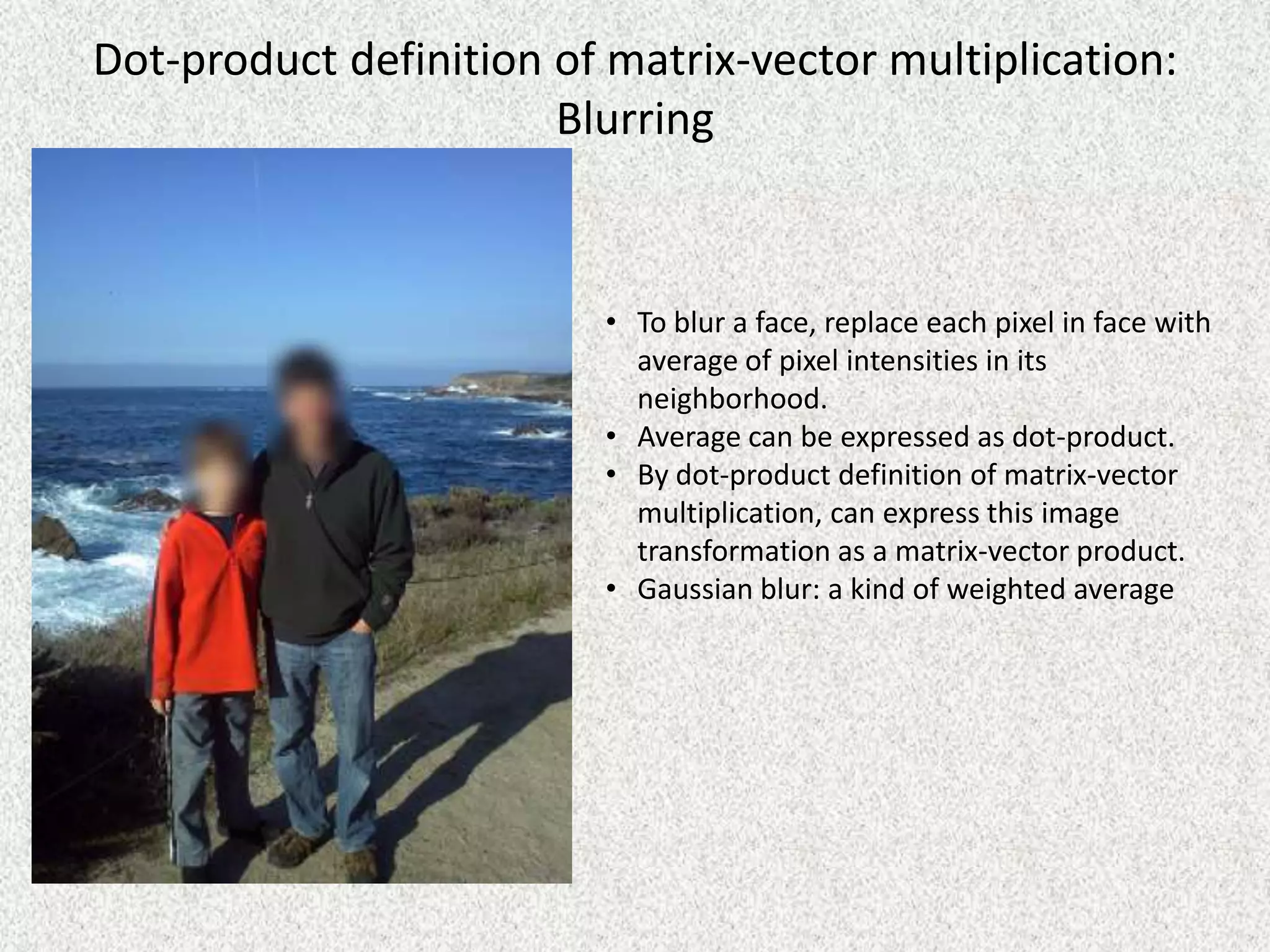

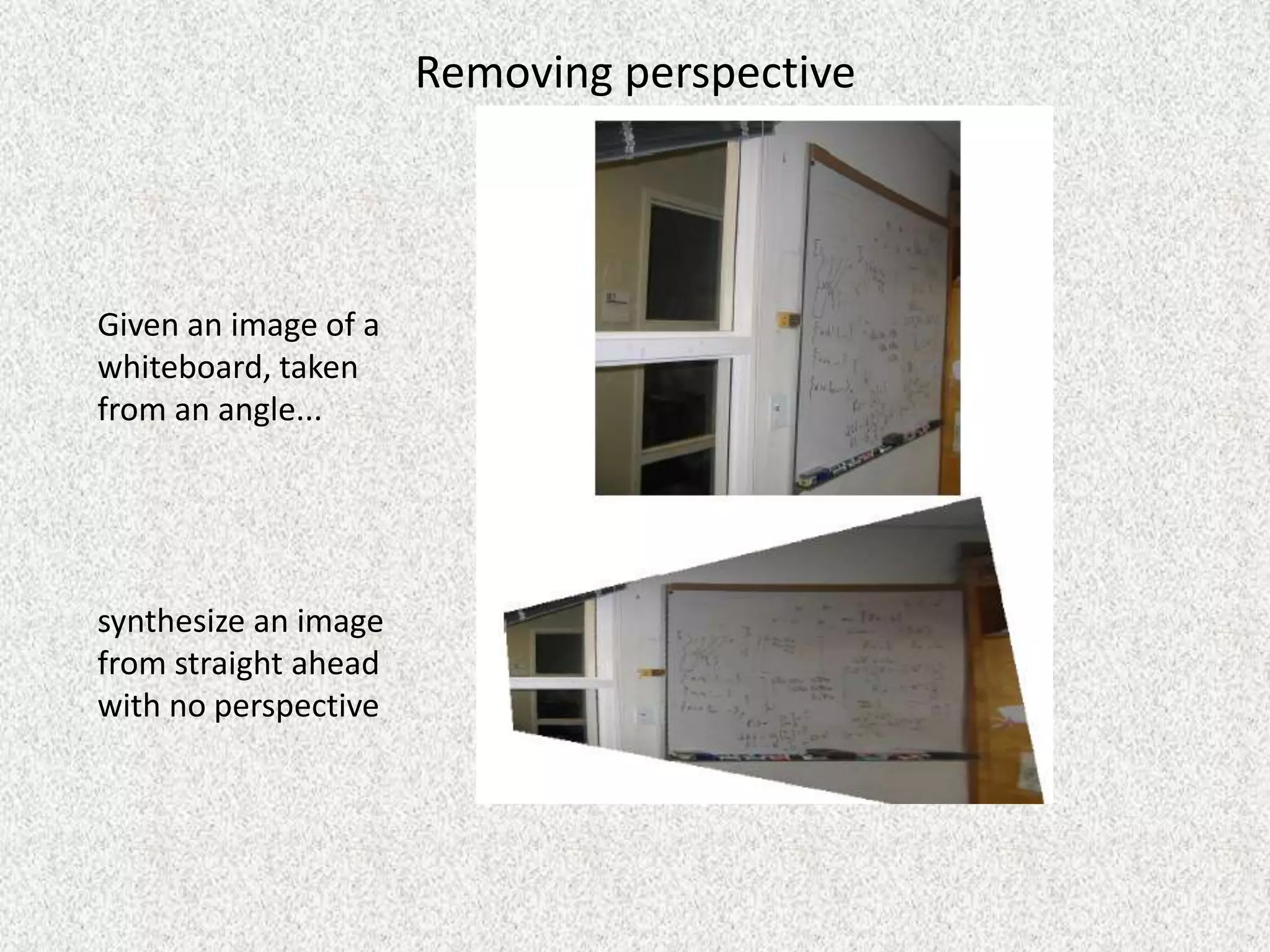

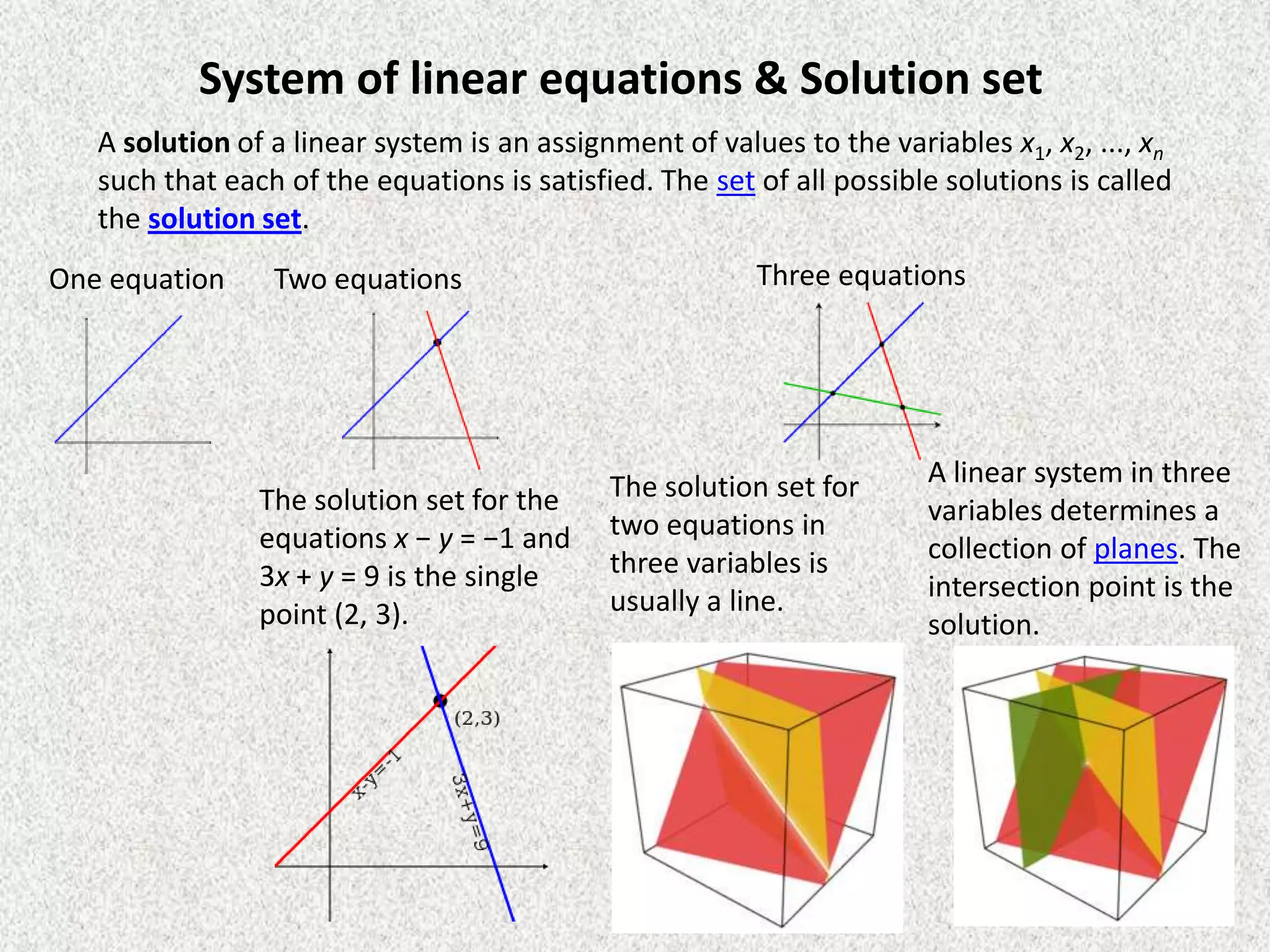

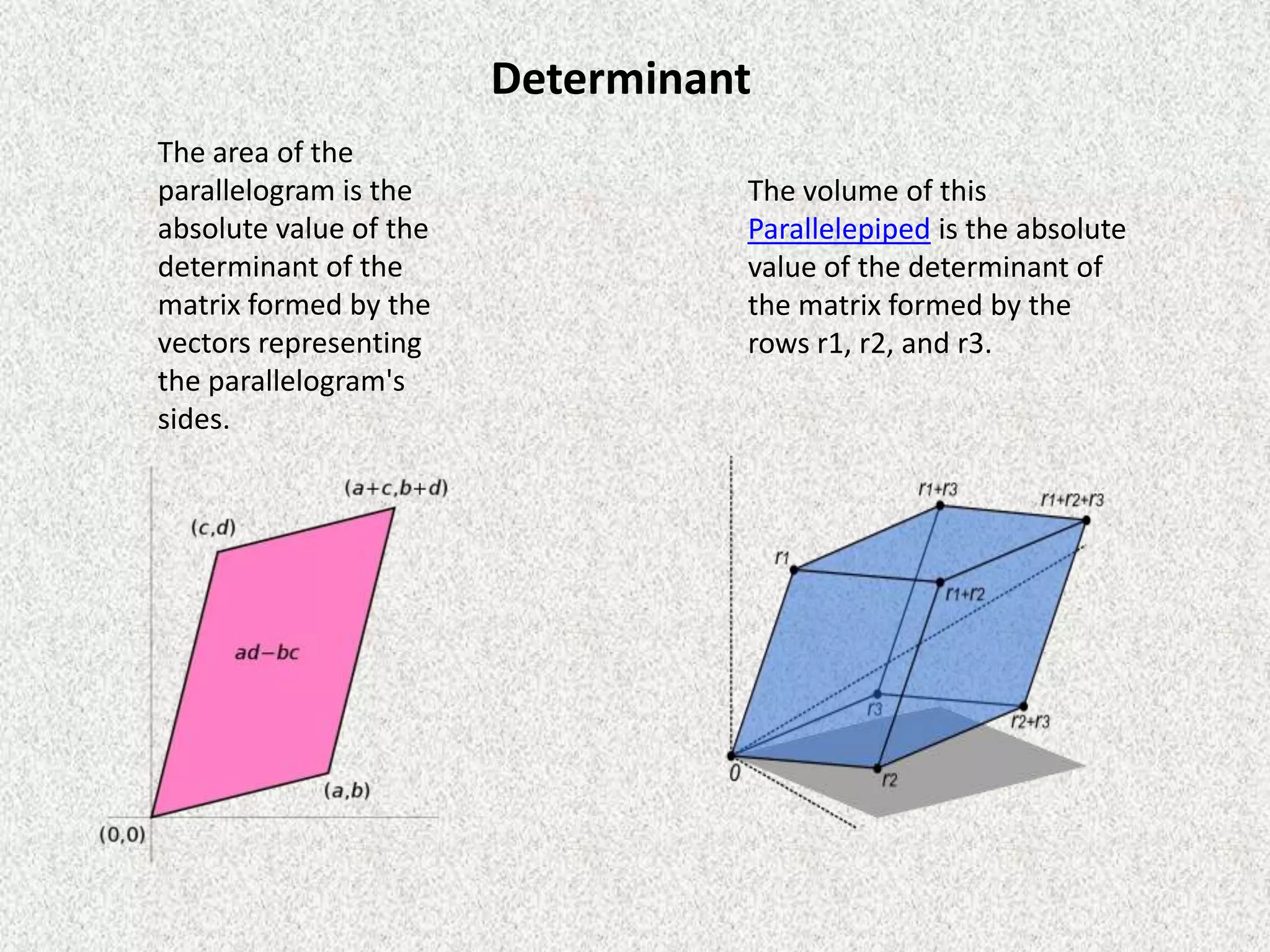

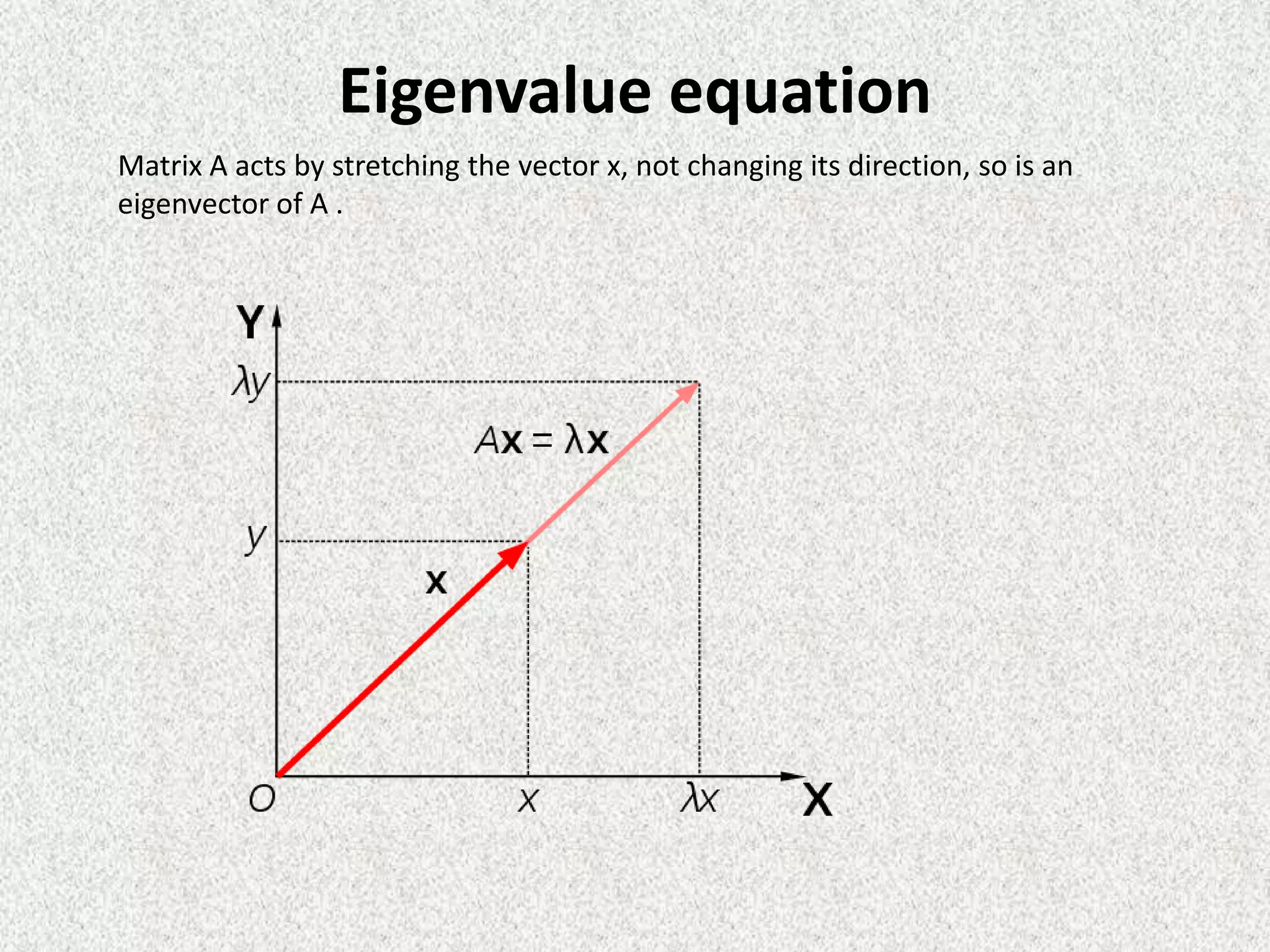

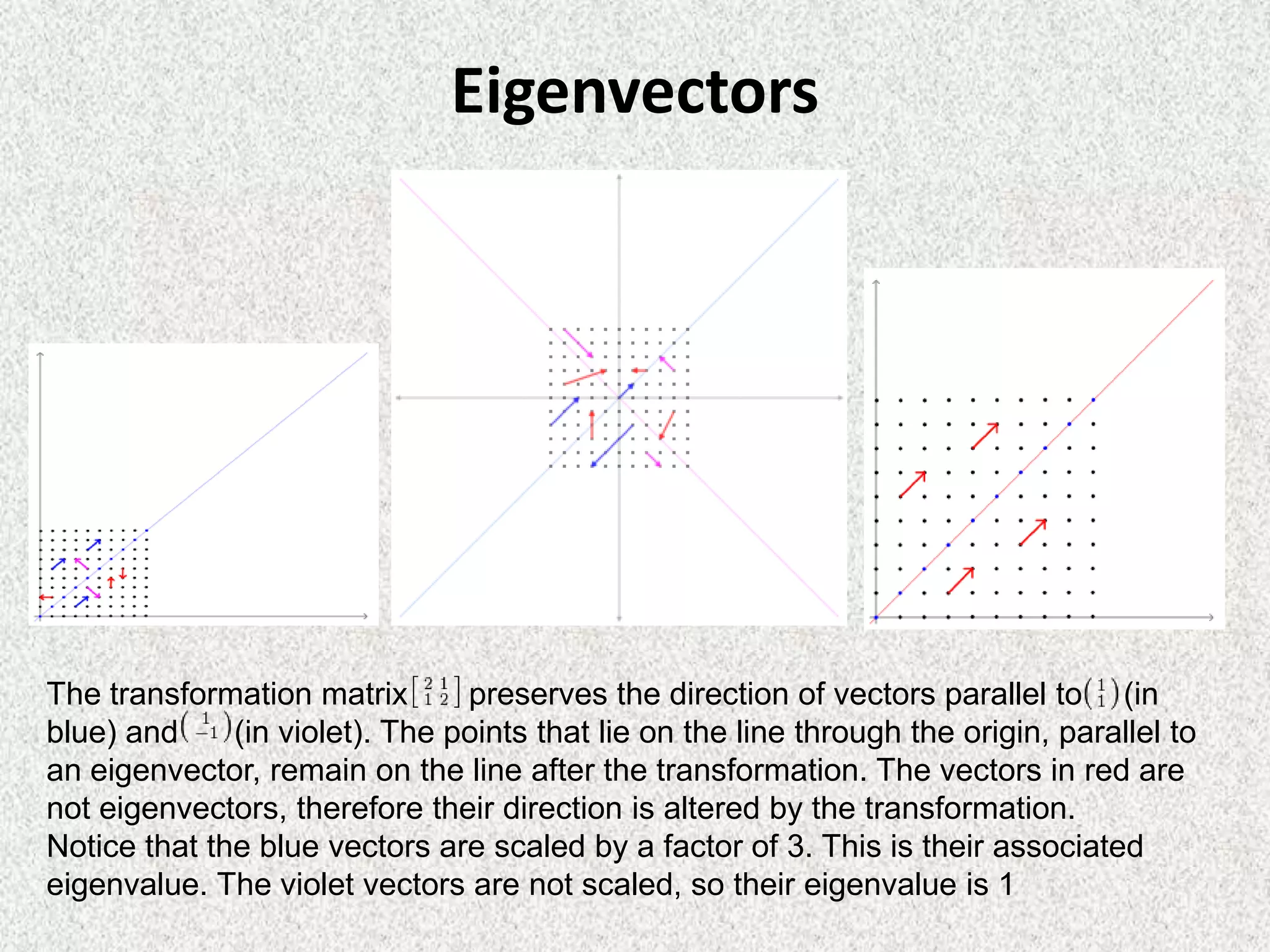

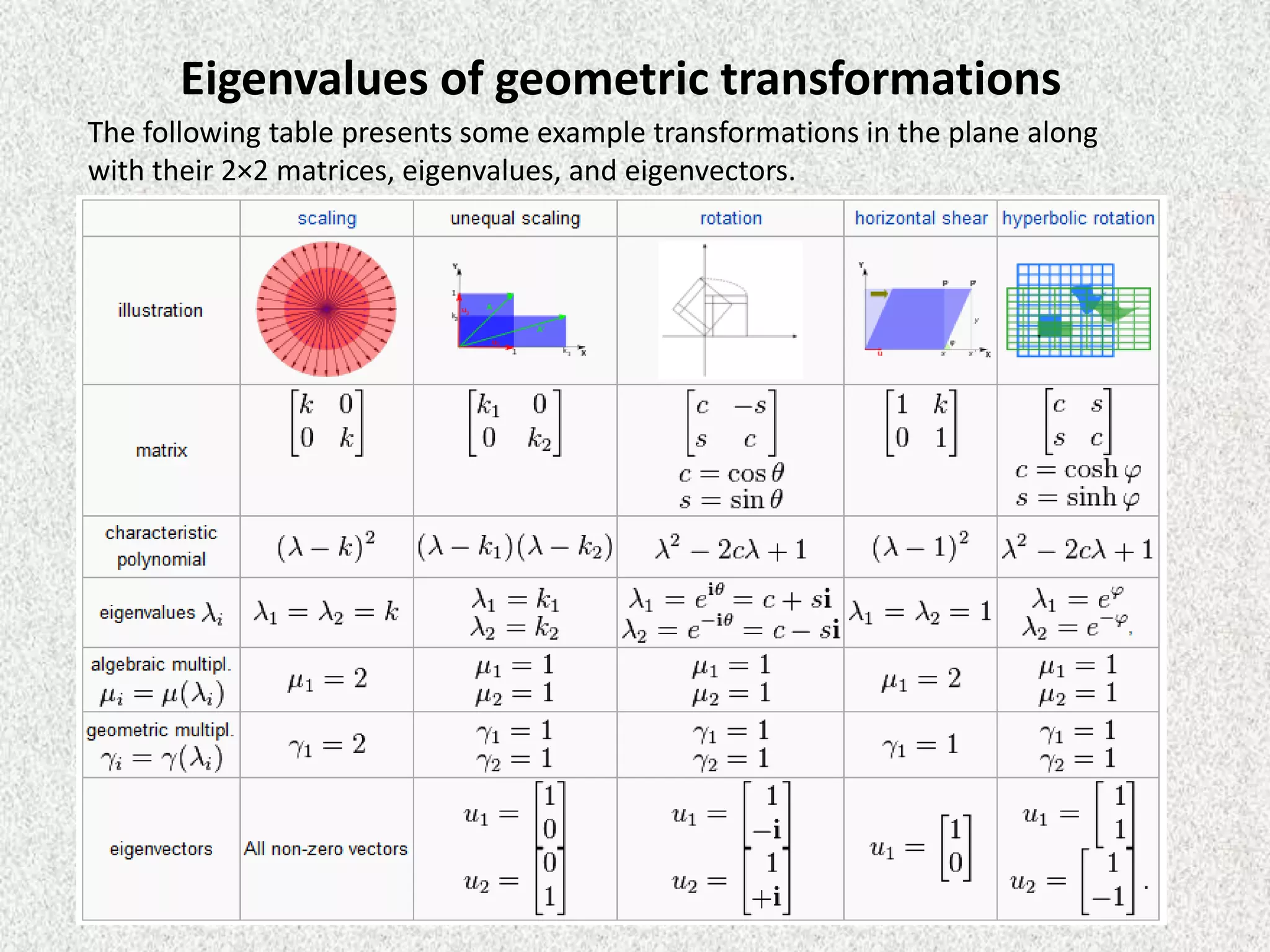

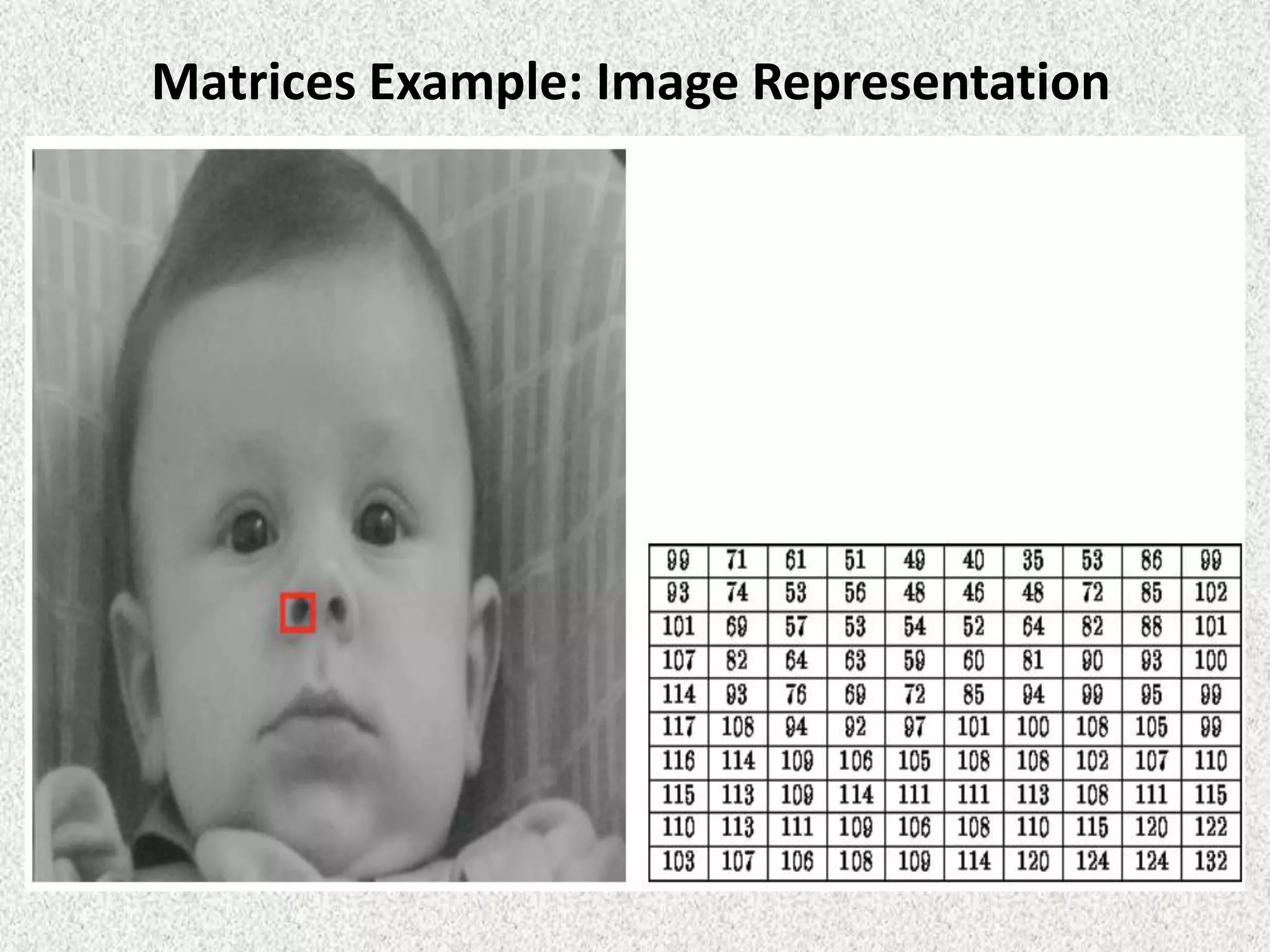

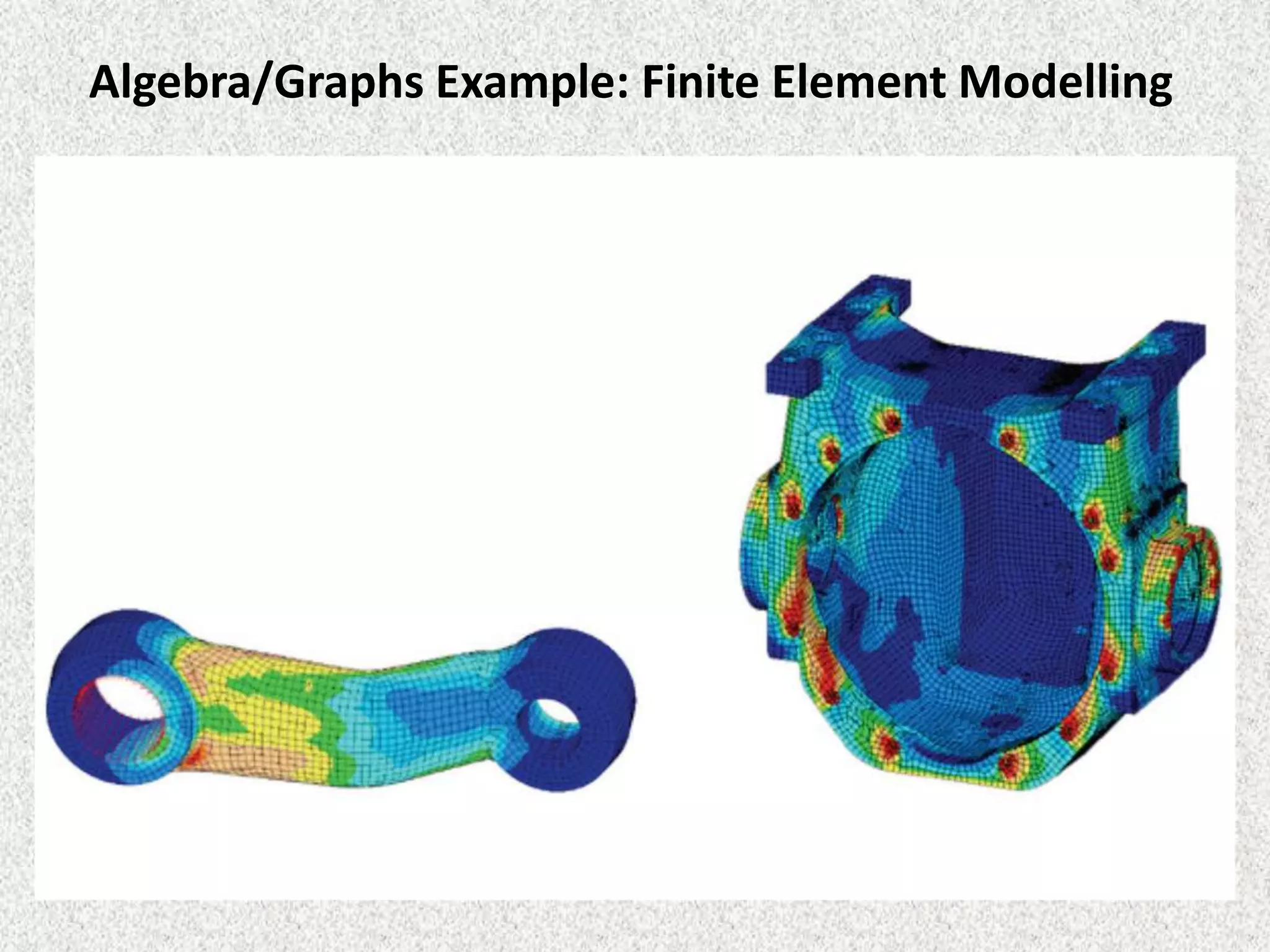

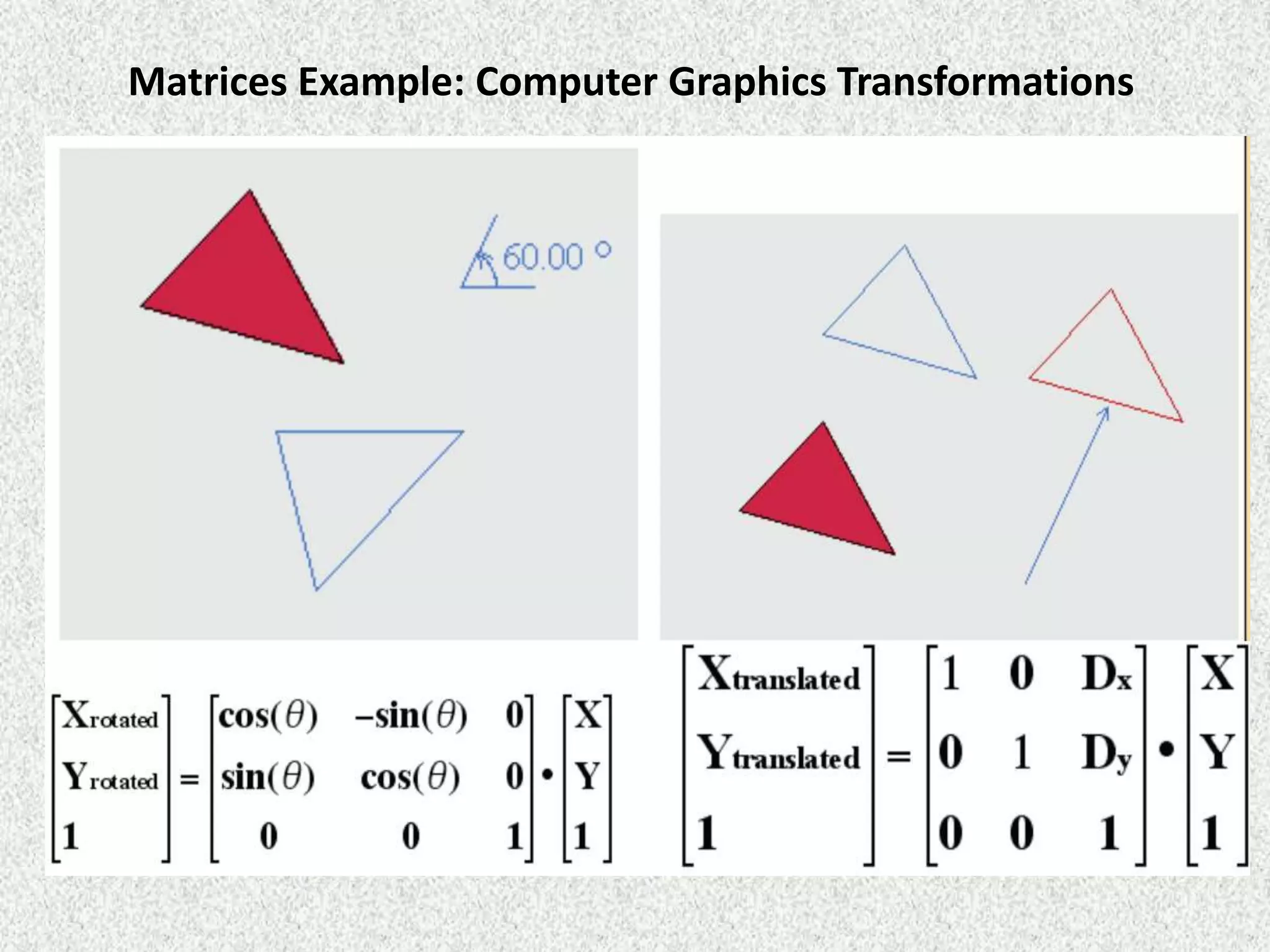

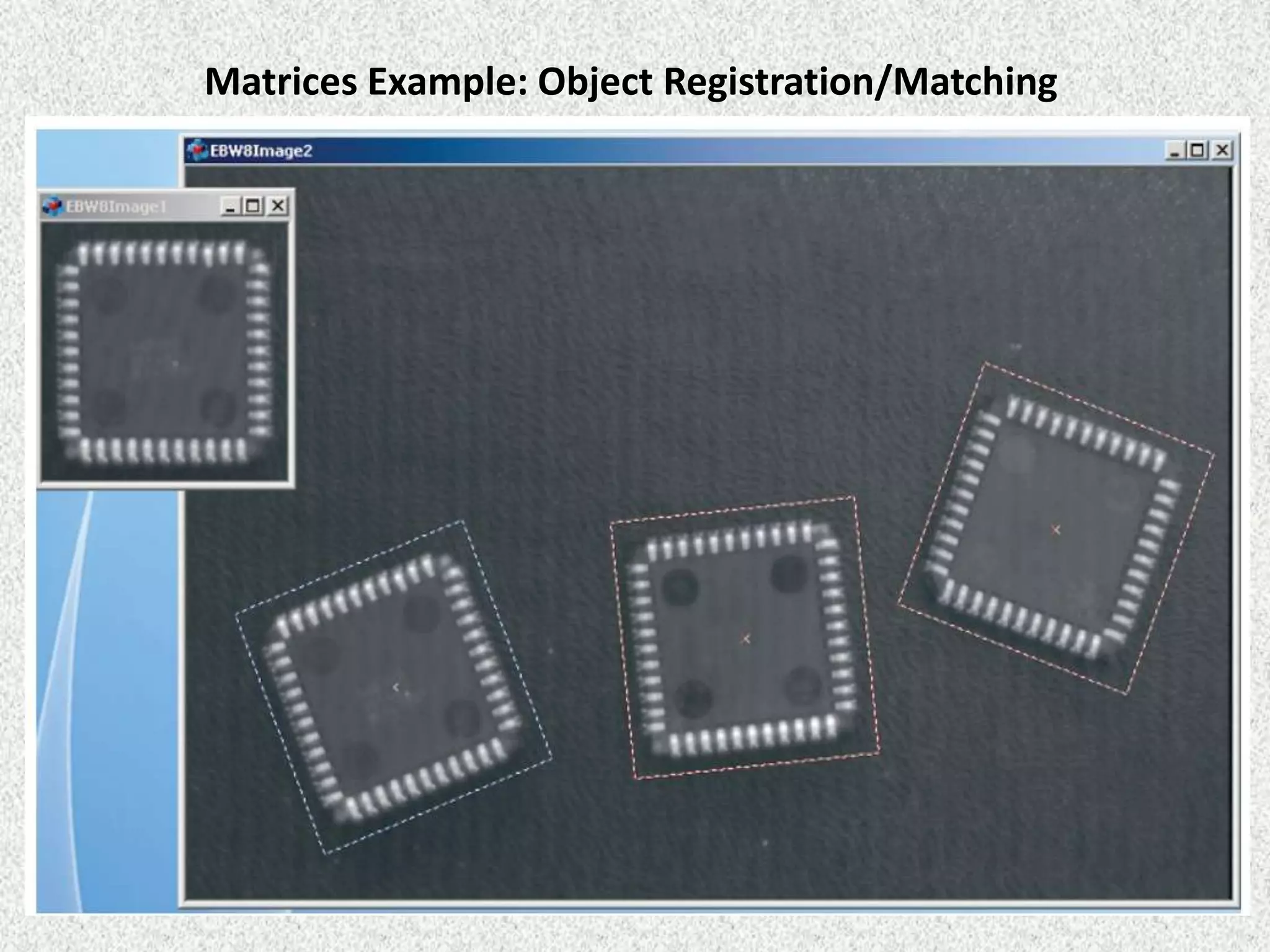

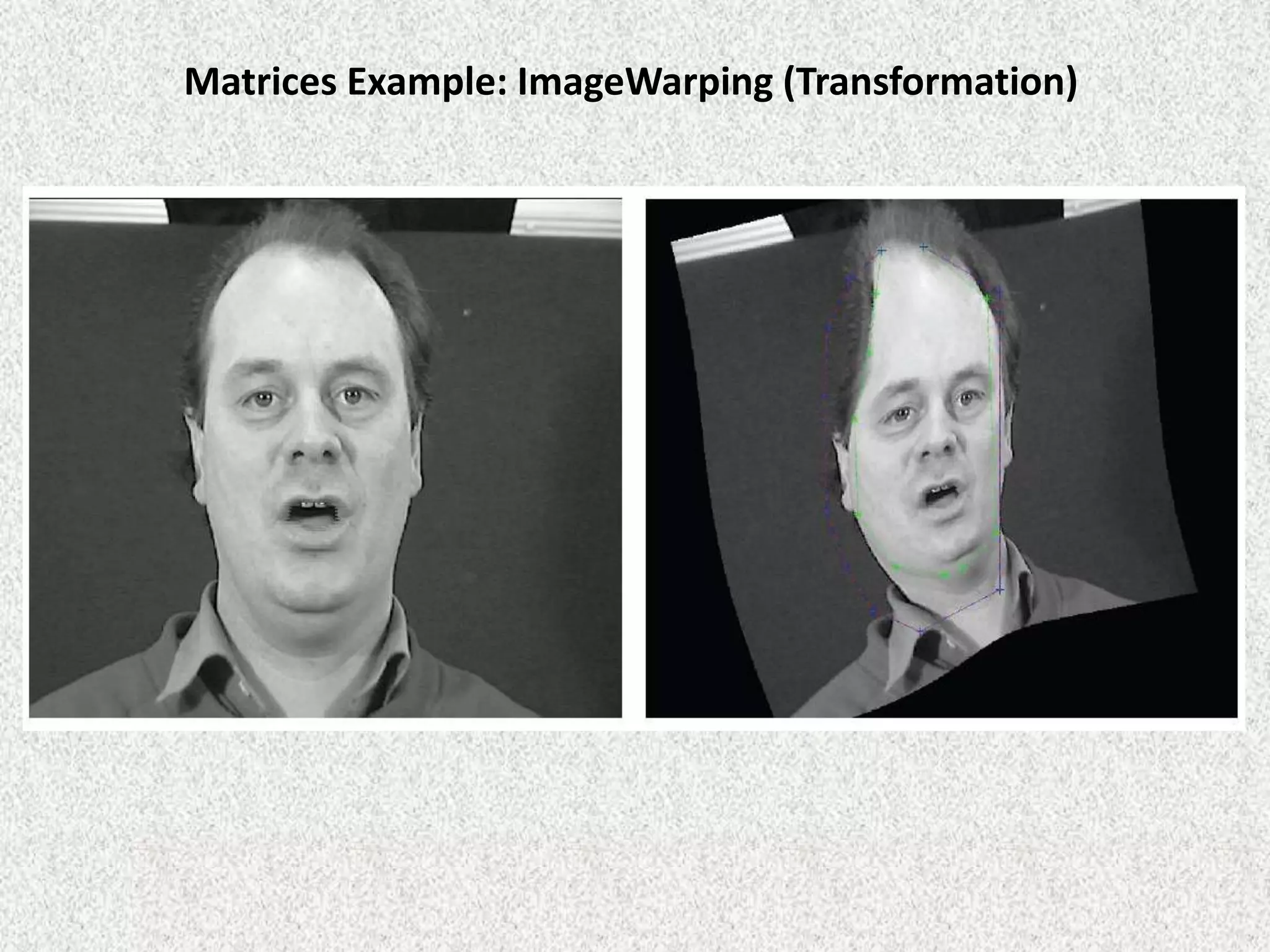

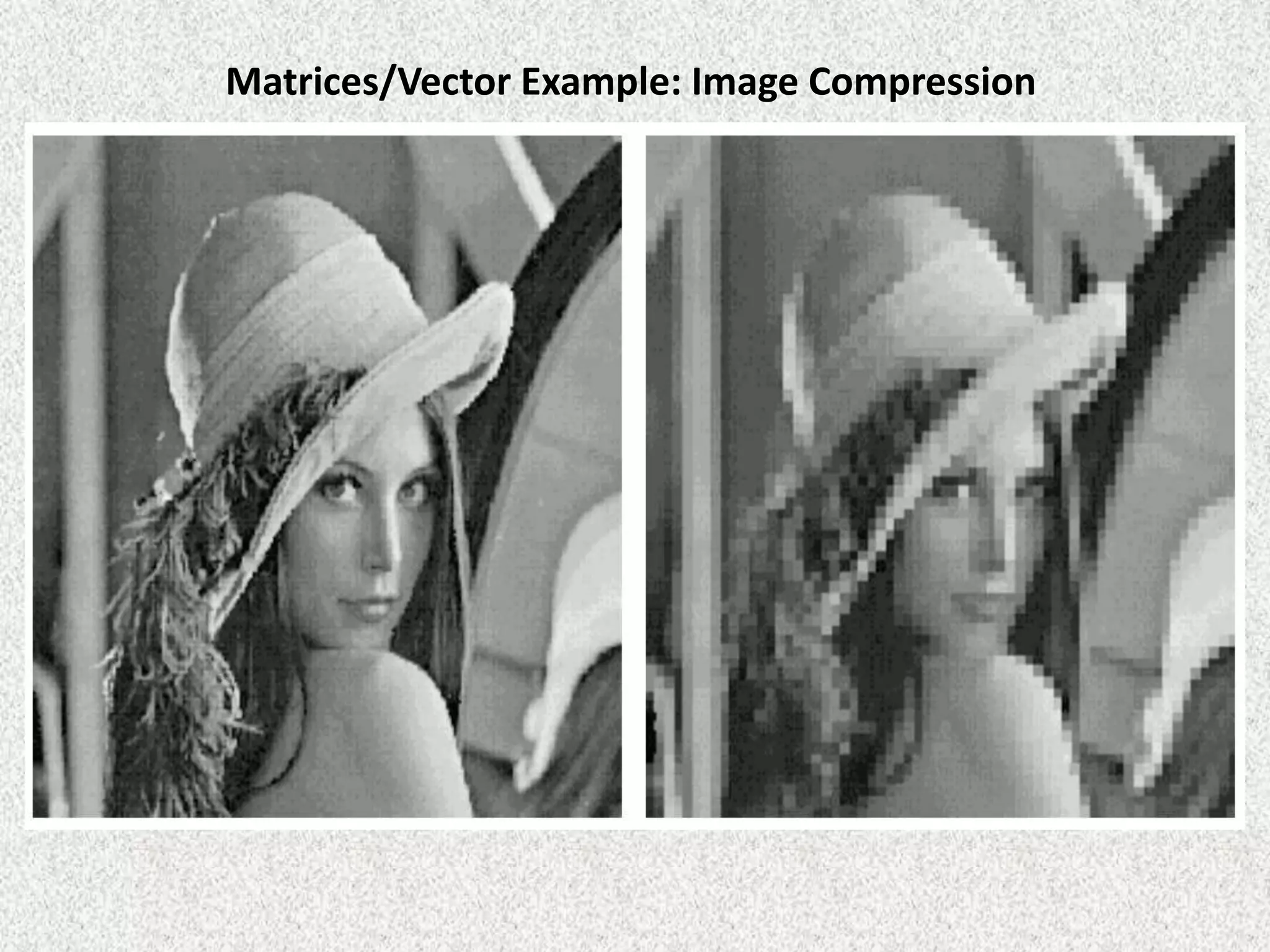

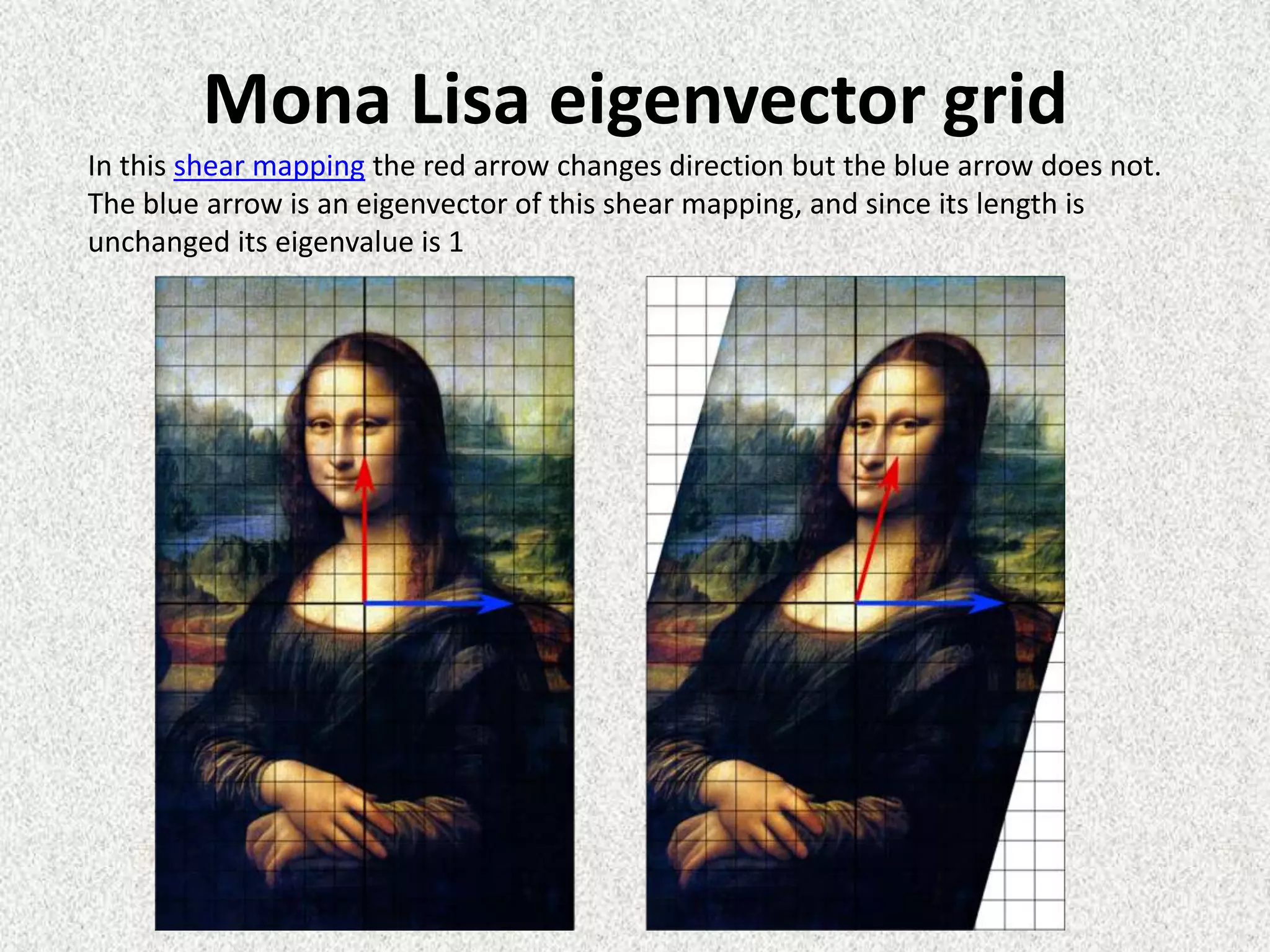

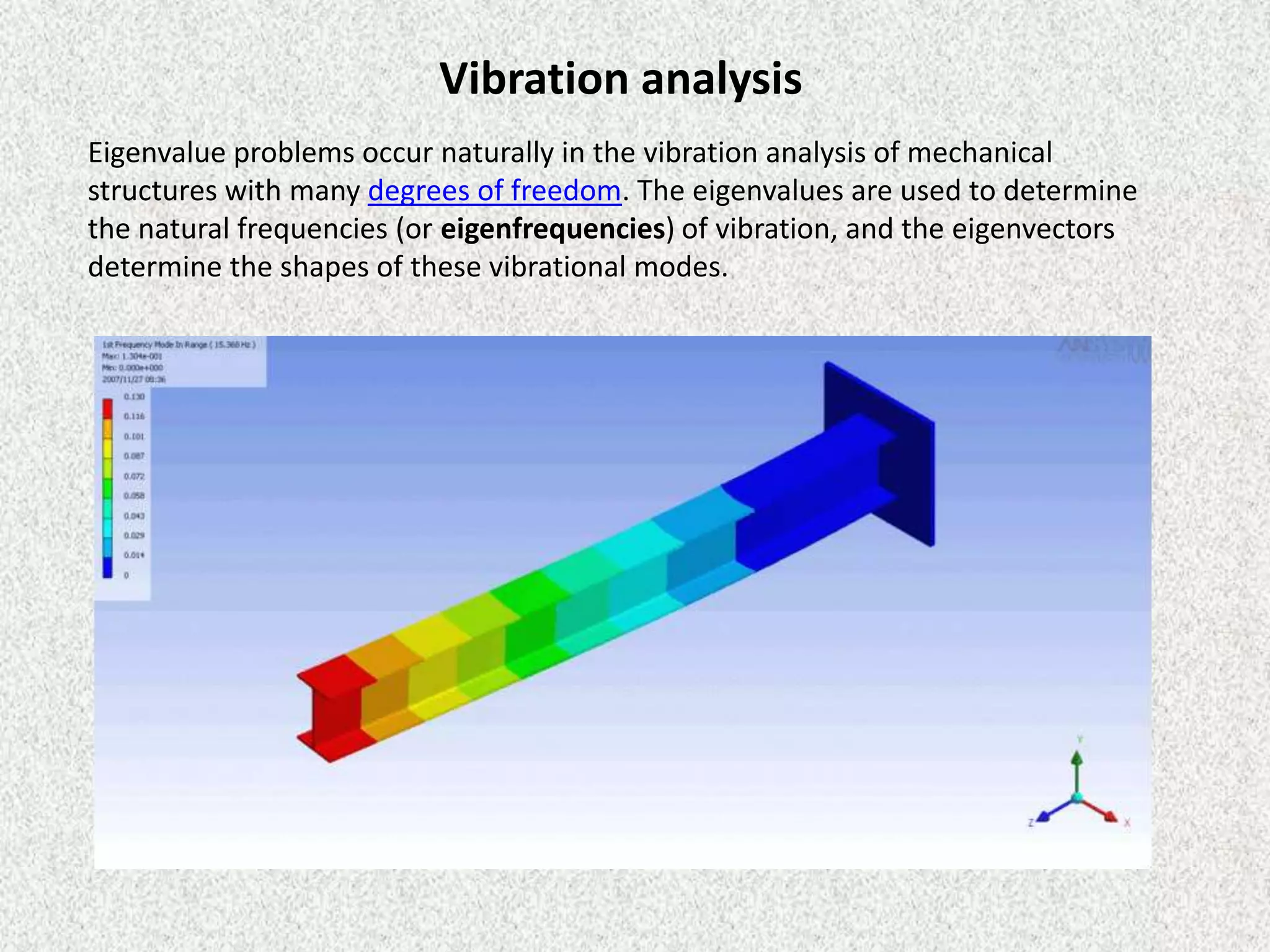

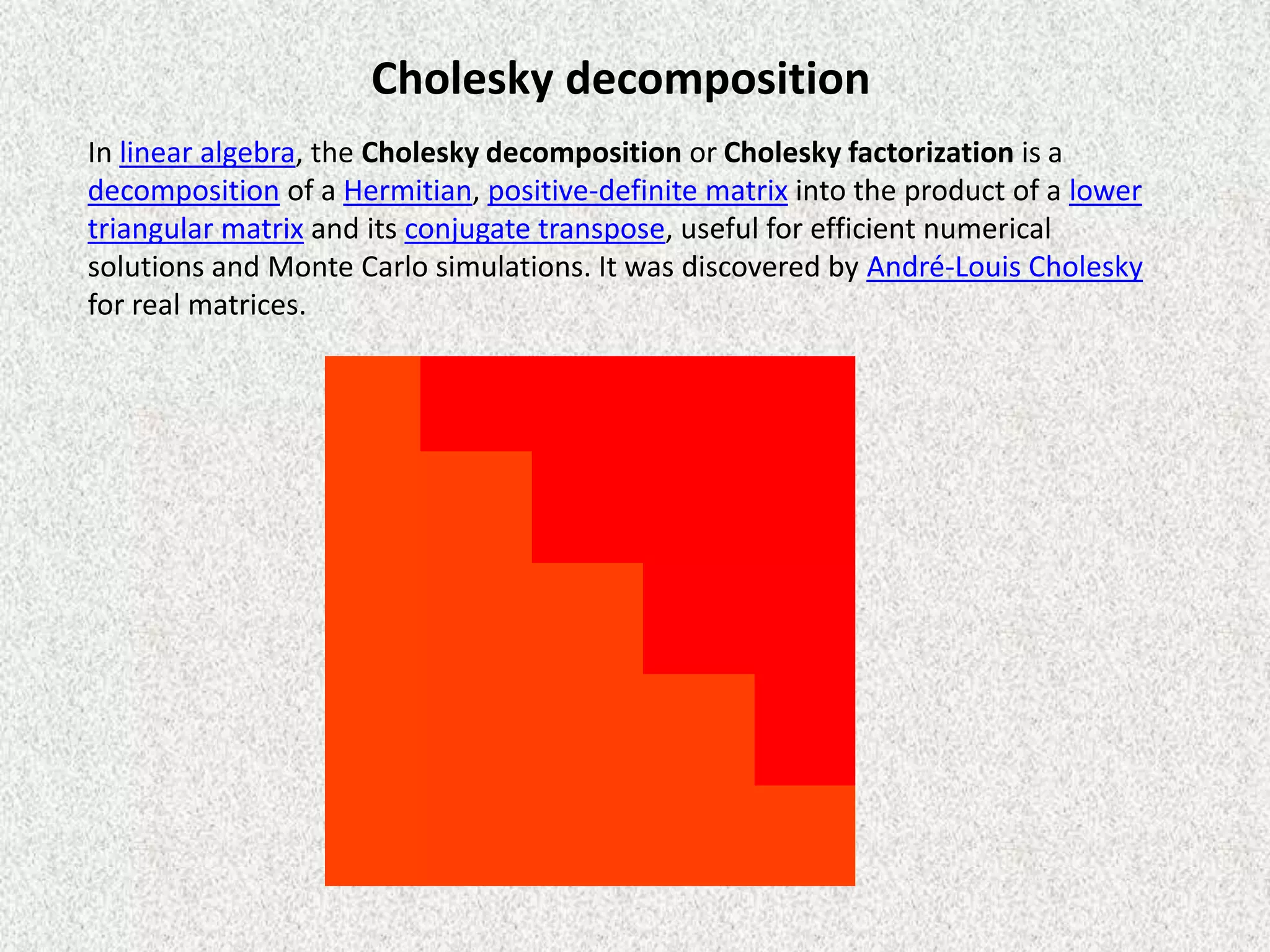

Rolly Rochmad Purnomo gave a public lecture on linear algebra at Serang Raya University on January 11, 2014. The lecture covered systems of linear equations, matrices, determinants, vectors in two- and three-dimensional space, vector spaces, eigenvectors and eigenvalues, linear transformations, and applications of linear algebra. He emphasized that linear algebra is widely used in fields such as computer graphics, image processing, machine learning, and data compression.