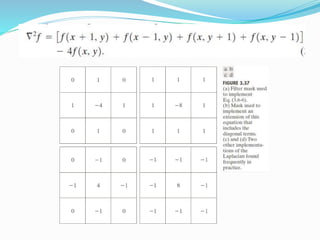

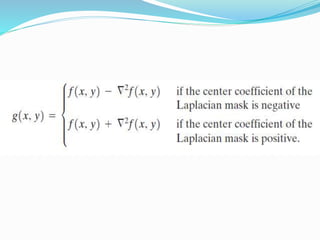

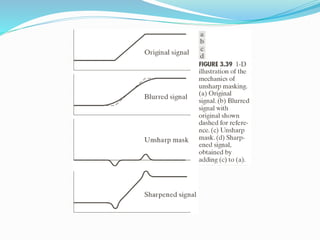

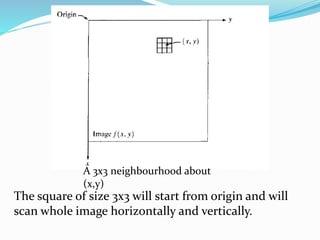

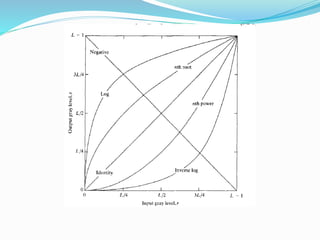

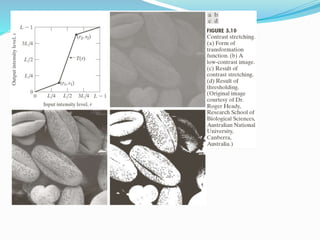

Spatial domain filtering and intensity transformations are image processing techniques that operate directly on pixel values. The spatial domain is the image plane itself, that is, the grid of pixels that makes up the image. Spatial filtering computes each output pixel from the corresponding input pixel and its neighbors; common operators include averaging, median filtering, and contrast adjustment. Smoothing filters reduce noise, while sharpening filters enhance edges through differentiation. Intensity transformations map each input pixel value to an output value using functions such as logarithms, power laws, and piecewise-linear functions to modify image contrast and highlight selected intensity ranges.
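The log and power-law mappings mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes 8-bit images (256 levels) stored as lists of rows, and the function names and the scaling constant (chosen so the maximum level maps to itself) are assumptions made for this example.

```python
import math

LEVELS = 256  # assumption: 8-bit image, intensities in [0, 255]


def log_transform(r, levels=LEVELS):
    """s = c * log(1 + r), with c scaled so that levels-1 maps to levels-1."""
    c = (levels - 1) / math.log(levels)
    return round(c * math.log(1 + r))


def power_law_transform(r, gamma, levels=LEVELS):
    """s = (levels-1) * (r / (levels-1)) ** gamma (gamma correction)."""
    return round((levels - 1) * (r / (levels - 1)) ** gamma)


def apply_pointwise(image, transform):
    """Apply a one-pixel intensity transformation to every pixel."""
    return [[transform(r) for r in row] for row in image]


if __name__ == "__main__":
    image = [[0, 10, 64], [128, 200, 255]]
    print(apply_pointwise(image, log_transform))
    print(apply_pointwise(image, lambda r: power_law_transform(r, 0.5)))
```

The log mapping stretches dark values and compresses bright ones. In the power-law mapping, a gamma below 1 brightens the image and a gamma above 1 darkens it.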

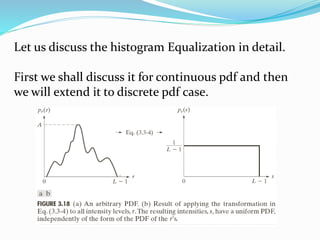

![The spatial domain process is explained by following

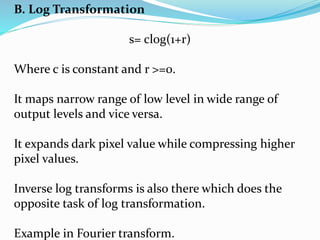

expression.

g(x,y) = T[f(x,y)]

Where f(x,y) is the input image and g(x,y) is the image

at output.

T is an operator on f(x,y) defined over neighborhood

of point (x,y).

The image shows one such transformation.

An example of such transformation is image averaging.](https://image.slidesharecdn.com/3rdunit-230319183231-05a17dcb/85/3rd-unit-pptx-2-320.jpg)
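As a rough sketch of g(x,y) = T[f(x,y)] with T taken to be neighborhood averaging, the following plain-Python function replaces each pixel with the mean of a square window centred on it. The function name, the default 3x3 window, and the border handling (averaging only the neighbors that lie inside the image) are assumptions made for this example; the slide does not specify them.

```python
def average_filter(f, size=3):
    """Return g where g[x][y] is the mean of f over a size-by-size
    neighborhood of (x, y). Border pixels average only the neighbors
    that fall inside the image (an assumed border policy)."""
    rows, cols = len(f), len(f[0])
    half = size // 2
    g = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            total, count = 0, 0
            for i in range(max(0, x - half), min(rows, x + half + 1)):
                for j in range(max(0, y - half), min(cols, y + half + 1)):
                    total += f[i][j]
                    count += 1
            g[x][y] = total / count
    return g


if __name__ == "__main__":
    f = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
    for row in average_filter(f):
        print(row)
```

A single bright pixel is spread over its neighbors, which is the smoothing effect that makes averaging useful for noise reduction.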

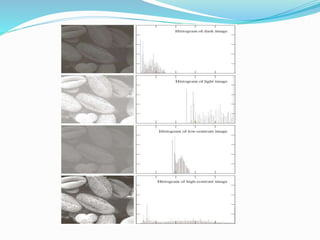

![Histogram Processing: The histogram of a digital image with intensity levels in the range [0, L-1] is a discrete function h(rk) = nk, where rk is the kth intensity value and nk is the number of pixels in the image with intensity rk. A histogram can be normalized by dividing each of its components by the total number of pixels in the image. The total number of pixels is MN, where M is the number of rows in the image and N is the number of columns.](https://image.slidesharecdn.com/3rdunit-230319183231-05a17dcb/85/3rd-unit-pptx-22-320.jpg)
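The histogram definition h(rk) = nk and its normalization by MN can be sketched as follows. This is a minimal plain-Python illustration; the function names and the list-of-rows image representation are assumptions made for this example, and the image is assumed to be non-empty with integer intensities in [0, levels-1].

```python
def histogram(image, levels=256):
    """h[k] = number of pixels whose intensity equals k."""
    h = [0] * levels
    for row in image:
        for r in row:
            h[r] += 1
    return h


def normalized_histogram(image, levels=256):
    """Divide each histogram component by MN, the total number of pixels,
    where M is the number of rows and N is the number of columns."""
    M, N = len(image), len(image[0])
    return [n_k / (M * N) for n_k in histogram(image, levels)]


if __name__ == "__main__":
    image = [[0, 1, 1],
             [2, 1, 0]]
    print(histogram(image, levels=4))
    print(normalized_histogram(image, levels=4))
```

The components of the normalized histogram sum to 1, so they can be read as an estimate of the probability of each intensity level occurring in the image.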