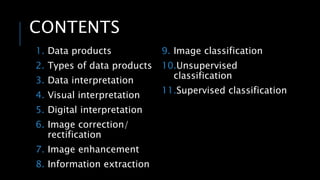

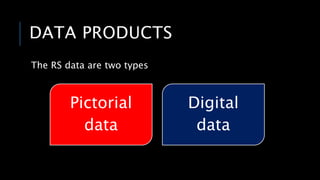

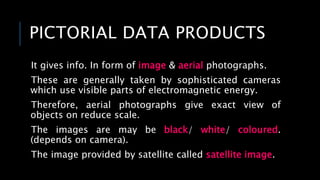

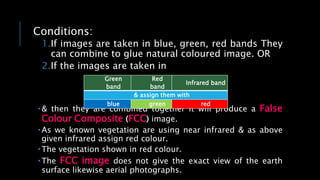

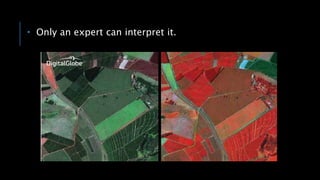

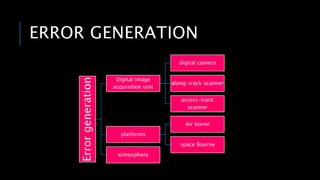

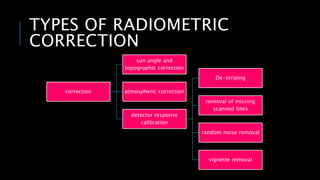

The document covers several aspects of digital image processing. It describes the types of data products, digital and pictorial, and the methods used to interpret each. It then covers image correction, enhancement, and classification, and contrasts visual interpretation with digital interpretation. Finally, it explains how unsupervised and supervised classification techniques extract information from images.
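To make the unsupervised/supervised distinction concrete, here is a minimal sketch. It assumes each pixel is a tuple of band values, for example (red, near-infrared). The source does not name specific algorithms, so two common stand-ins are used: k-means clustering for the unsupervised case and a minimum-distance-to-means classifier for the supervised case. The pixel values and the class names `water` and `vegetation` are invented for illustration.

```python
import math

def distance(a, b):
    """Euclidean distance between two pixel vectors (one value per band)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def mean_vector(pixels):
    """Per-band mean of a non-empty list of pixel vectors."""
    n = len(pixels)
    return tuple(sum(band) / n for band in zip(*pixels))

def kmeans(pixels, k, iterations=10):
    """Unsupervised: group pixels into k spectral clusters without any labels.

    Returns the labels from the last assignment step and the cluster centres.
    """
    # Deterministic start (an assumption): the first k pixels seed the centres.
    centres = [tuple(p) for p in pixels[:k]]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest centre.
        labels = [min(range(k), key=lambda c: distance(p, centres[c])) for p in pixels]
        # Update step: move each centre to the mean of its members
        # (an empty cluster keeps its old centre).
        new_centres = []
        for c in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == c]
            new_centres.append(mean_vector(members) if members else centres[c])
        if new_centres == centres:  # converged: assignments no longer change
            break
        centres = new_centres
    return labels, centres

def train_minimum_distance(training):
    """Supervised: compute one mean spectral signature per labelled class."""
    return {name: mean_vector(samples) for name, samples in training.items()}

def classify_minimum_distance(pixels, signatures):
    """Assign each pixel to the class whose mean signature is closest."""
    return [min(signatures, key=lambda name: distance(p, signatures[name])) for p in pixels]

# Toy 2-band image; values are made up for illustration.
image = [(20, 30), (22, 28), (80, 120), (85, 118), (21, 31), (82, 121)]

labels, centres = kmeans(image, k=2)
print(labels)  # [1, 1, 0, 0, 1, 0] (cluster numbers are arbitrary)

training = {
    "water": [(20, 30), (22, 28)],
    "vegetation": [(80, 120), (85, 118)],
}
signatures = train_minimum_distance(training)
print(classify_minimum_distance(image, signatures))
```

The difference shows in what each approach needs. k-means only finds spectral groups, and an analyst must still decide what cluster 0 or cluster 1 represents. The supervised classifier needs labelled training pixels up front, but its output is already expressed in named classes.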