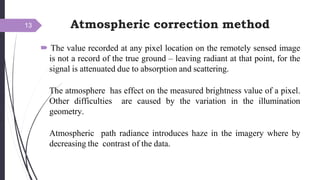

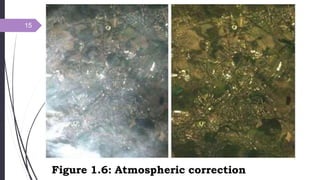

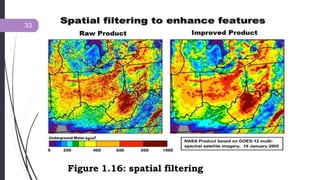

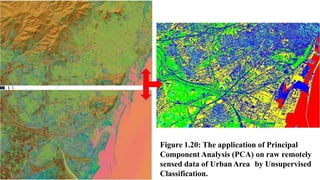

The document provides an overview of digital image processing techniques used in remote sensing, contrasting the limitations of visual image interpretation with the advantages of digital data analysis. It discusses the stages of digital image processing, from pre-processing methods that correct errors and improve image quality to operations such as registration, filtering, and classification. It also examines how geometric and radiometric corrections make the data more useful for natural resource mapping.