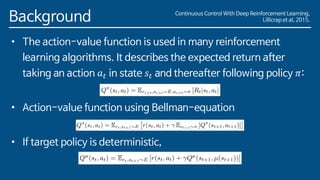

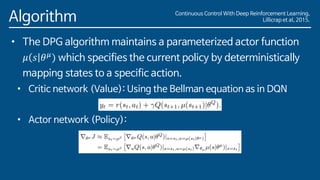

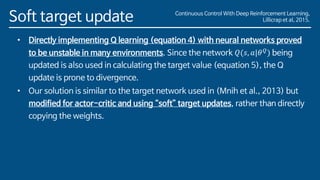

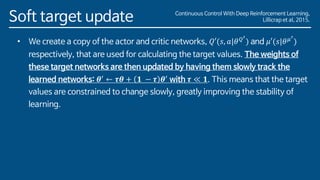

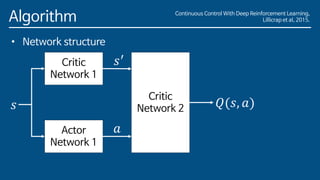

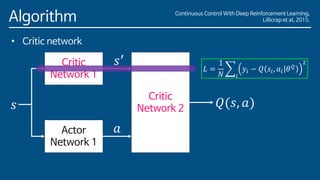

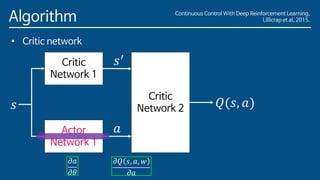

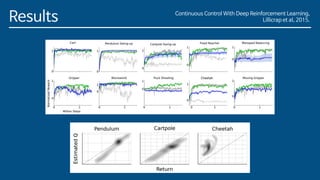

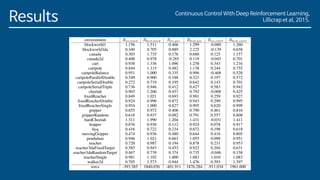

The paper introduces Deep Deterministic Policy Gradient (DDPG), a model-free, off-policy reinforcement learning algorithm for problems with continuous action spaces. DDPG combines an actor-critic architecture with two techniques borrowed from DQN: experience replay and target networks. The replay buffer reduces correlations between training samples, and the target networks keep the learning targets stable while the online networks change. The algorithm solved challenging control problems with high-dimensional observation and action spaces, showing that deep reinforcement learning can handle complex, continuous control tasks.
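The two stabilizing pieces described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the names `ReplayBuffer`, `soft_update`, and `td_target` are hypothetical, parameters are represented as flat lists of floats rather than network weights, and the slow "soft" target update with a small rate `tau` is assumed as the way the target networks track the online ones.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size FIFO store of (state, action, reward, next_state, done) tuples.

    Hypothetical helper for illustration; not an API from the paper.
    """

    def __init__(self, capacity, seed=None):
        # deque with maxlen silently drops the oldest transition when full
        self._storage = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self._storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement: consecutive (correlated)
        # transitions are unlikely to land in the same minibatch.
        return self._rng.sample(list(self._storage), batch_size)

    def __len__(self):
        return len(self._storage)


def soft_update(target_params, online_params, tau):
    """Move each target parameter a fraction tau toward its online counterpart."""
    return [
        tau * w_online + (1.0 - tau) * w_target
        for w_online, w_target in zip(online_params, target_params)
    ]


def td_target(reward, done, next_q, gamma):
    """Critic regression target: r + gamma * Q_target(s', actor_target(s')).

    next_q is assumed to be the target critic's value for the next state and
    the target actor's action; bootstrapping stops at terminal transitions.
    """
    return reward + (0.0 if done else gamma * next_q)
```

In a training loop, each environment step would call `add`, each update would draw a minibatch with `sample`, regress the critic toward `td_target`, and then apply `soft_update` to both target networks. A small `tau` makes the targets drift slowly, which is what keeps them stable.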