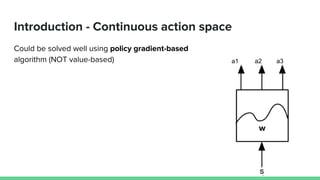

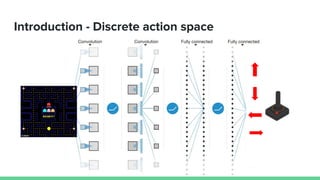

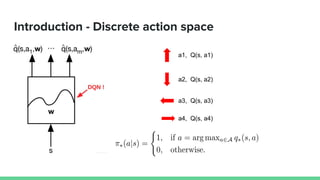

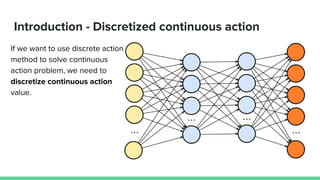

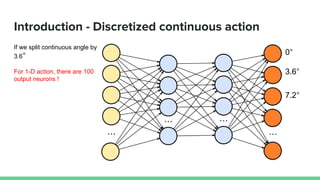

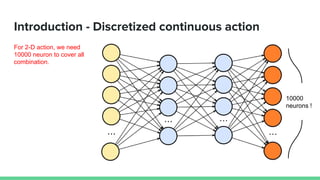

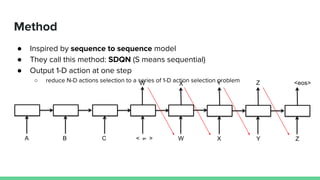

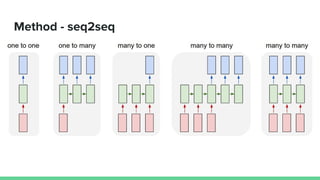

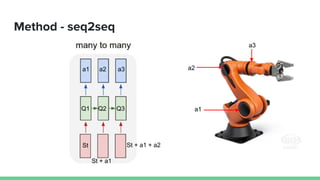

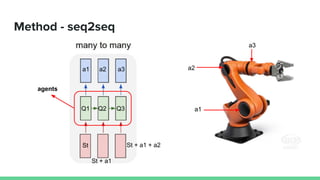

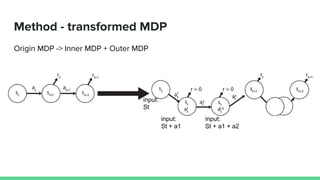

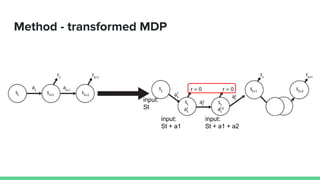

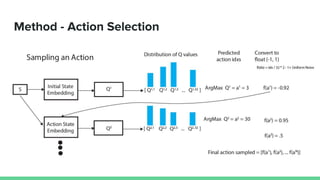

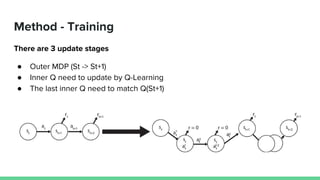

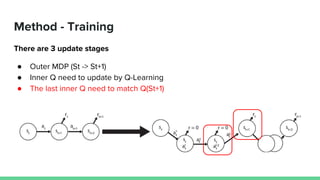

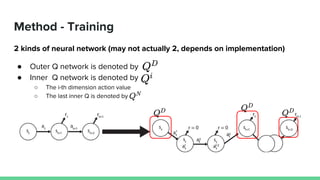

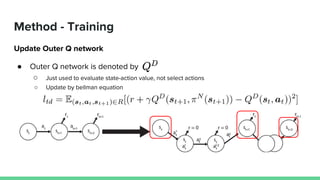

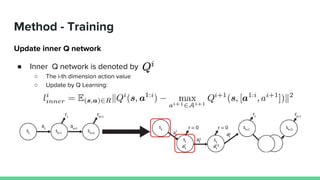

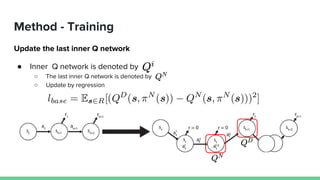

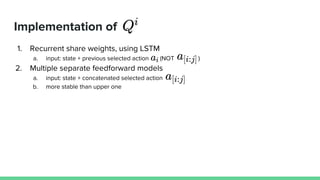

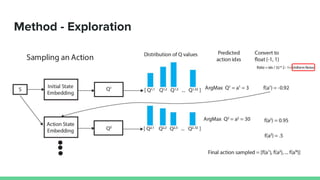

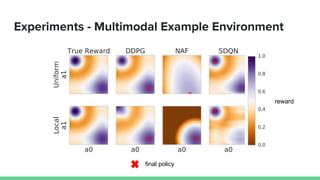

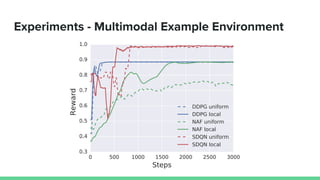

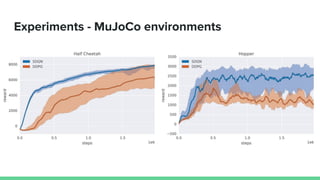

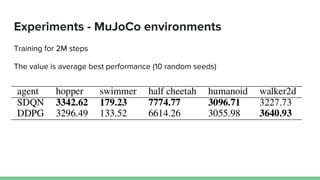

This paper proposes SDQN (Sequential Deep Q-Network), a value-based reinforcement learning method for continuous-action problems. SDQN discretizes a continuous action into a sequence of discrete steps. It transforms the original MDP into an "inner MDP" that runs between consecutive discrete steps and an "outer MDP" that runs between states. SDQN uses two Q-networks: an inner Q-network that estimates state-action values at each discrete step, and an outer Q-network that estimates values between states. The inner Q-values are updated with Q-learning, and the last inner Q-value is regressed to match the outer Q-value. The method is tested on a multimodal environment and several MuJoCo tasks, outperform
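The sequential selection and the inner-network targets described in this summary can be illustrated with a minimal sketch. It is not the paper's implementation. It assumes that each discrete step picks one bin for one action dimension, that inner steps carry no reward or discount, and that the inner Q-network can be queried as a function of the state, the bins chosen so far, and the step index. All names (`bin_centers`, `select_action`, `inner_targets`, `toy_inner_q`) are hypothetical, and the toy Q-function stands in for a trained network.

```python
import numpy as np

def bin_centers(low, high, n_bins):
    """Centers of n_bins equal-width bins covering [low, high]."""
    edges = np.linspace(low, high, n_bins + 1)
    return (edges[:-1] + edges[1:]) / 2.0

def select_action(inner_q, state, n_steps, centers):
    """Greedy sequential selection: pick one bin per step,
    conditioning each step on the bins already chosen."""
    chosen = []
    for step in range(n_steps):
        q_values = inner_q(state, tuple(chosen), step)  # shape (n_bins,)
        chosen.append(int(np.argmax(q_values)))
    return centers[chosen], chosen

def inner_targets(q_rows, outer_q_value):
    """Regression targets for the inner Q-values of the chosen bins.

    q_rows[i] holds the inner Q-values at step i given the chosen prefix.
    Steps before the last bootstrap from the max of the next step
    (assumed: zero reward and no discount inside the inner MDP);
    the last step is matched to the outer Q-value.
    """
    targets = [float(np.max(q_rows[i + 1])) for i in range(len(q_rows) - 1)]
    targets.append(float(outer_q_value))
    return targets

# Toy stand-in for a trained inner Q-network (hypothetical):
# each step prefers the bin closest to a fixed peak.
CENTERS = bin_centers(-1.0, 1.0, 5)
PEAKS = np.array([0.5, -0.5])

def toy_inner_q(state, prefix, step):
    return -(CENTERS - PEAKS[step]) ** 2

action, bins = select_action(toy_inner_q, state=None, n_steps=2, centers=CENTERS)
```

Under these assumptions, the cost of choosing an action grows linearly with the number of steps rather than exponentially with the number of joint bins, which is the usual motivation for selecting discretized actions one step at a time.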