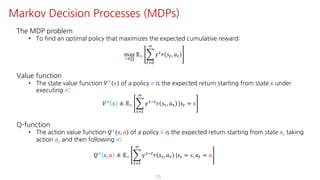

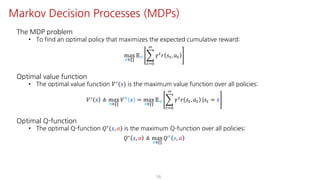

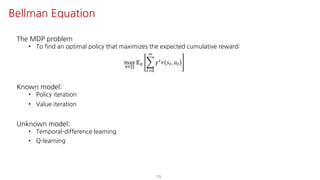

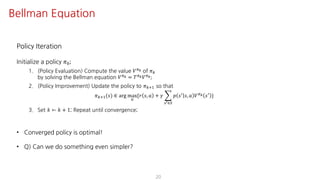

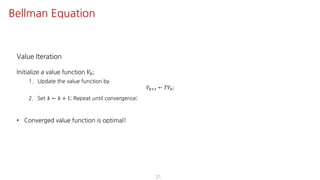

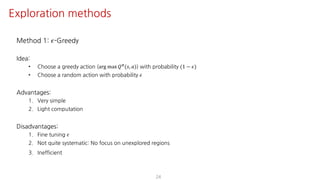

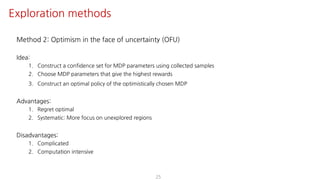

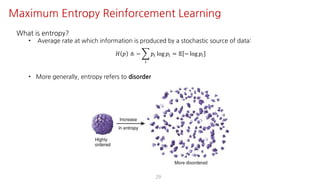

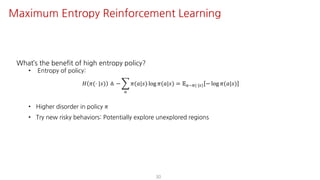

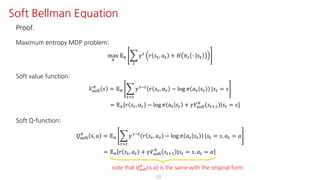

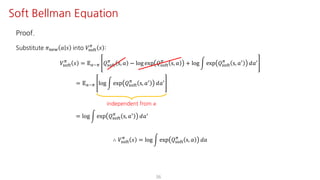

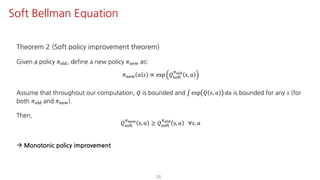

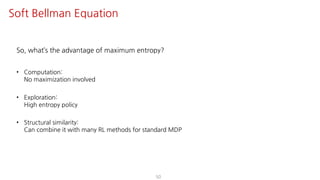

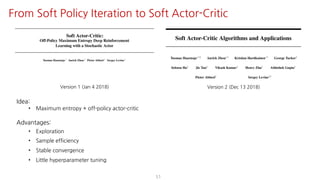

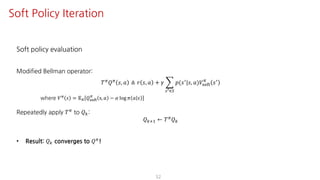

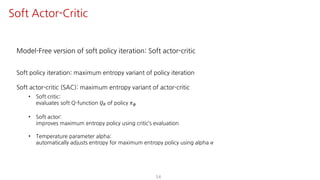

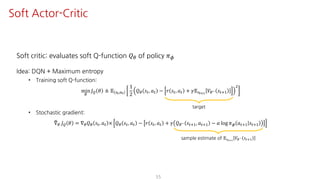

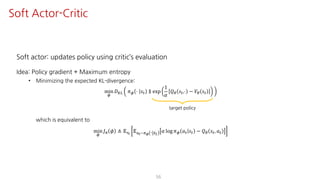

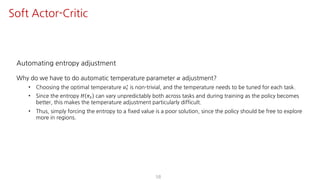

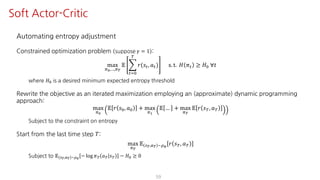

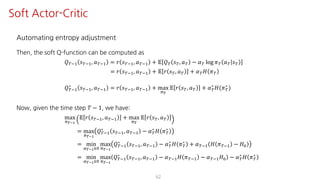

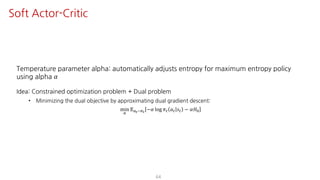

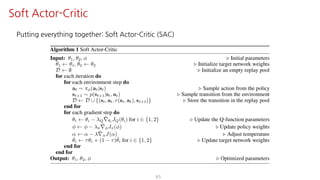

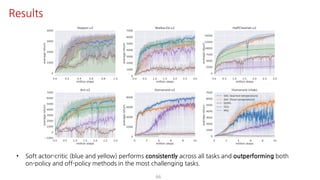

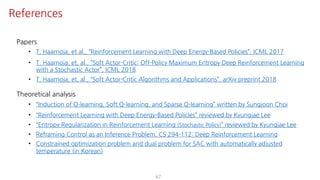

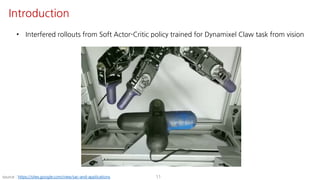

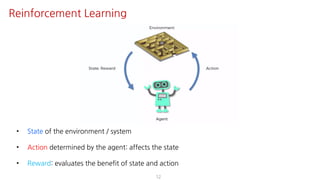

The document presents an overview of maximum entropy reinforcement learning, exploring key concepts such as Markov decision processes, the Bellman equation, and various exploration methods. It highlights the importance of adapting to uncertainty in environments while employing deep learning techniques for policy optimization. The Soft Actor-Critic algorithm is discussed in detail, emphasizing its applications and benefits in reinforcement learning scenarios.

![Markov Decision Processes (MDPs)](https://image.slidesharecdn.com/maximumentropyrl-190824142117/85/Maximum-Entropy-Reinforcement-Learning-Stochastic-Control-14-320.jpg)

Markov Decision Processes (MDPs)

A Markov decision process (MDP) is a tuple $\langle S, A, p, r, \gamma \rangle$, consisting of

• $S$: set of states (state space), e.g., $S = \{1, \dots, n\}$ (discrete), $S = \mathbb{R}^n$ (continuous)

• $A$: set of actions (action space), e.g., $A = \{1, \dots, m\}$ (discrete), $A = \mathbb{R}^m$ (continuous)

• $p$: state transition probability, $p(s' \mid s, a) \triangleq \mathrm{Prob}(s_{t+1} = s' \mid s_t = s, a_t = a)$

• $r$: reward function, $r(s_t, a_t) = r_t$

• $\gamma \in (0, 1]$: discount factor
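The tuple above can be written down directly for the discrete case. The following is a minimal sketch, not code from the slides: the class name `TabularMDP`, the helper `discounted_return`, and the toy numbers are all hypothetical, and states and actions are indexed from 0 rather than from 1 as on the slide.

```python
import random
from dataclasses import dataclass


@dataclass
class TabularMDP:
    """Hypothetical container for a discrete MDP <S, A, p, r, gamma>."""
    n_states: int   # |S|, states are 0 .. n_states - 1
    n_actions: int  # |A|, actions are 0 .. n_actions - 1
    p: list         # p[s][a][s_next] = Prob(s_{t+1} = s_next | s_t = s, a_t = a)
    r: list         # r[s][a] = reward for taking action a in state s
    gamma: float    # discount factor in (0, 1]

    def validate(self):
        # gamma must lie in (0, 1] and every p[s][a] must be a distribution over S.
        assert 0.0 < self.gamma <= 1.0
        for s in range(self.n_states):
            for a in range(self.n_actions):
                row = self.p[s][a]
                assert len(row) == self.n_states
                assert abs(sum(row) - 1.0) < 1e-9

    def step(self, s, a, rng):
        # Sample s_{t+1} ~ p(. | s, a) and return it with the reward r(s, a).
        s_next = rng.choices(range(self.n_states), weights=self.p[s][a])[0]
        return s_next, self.r[s][a]


def discounted_return(rewards, gamma):
    # Computes sum_t gamma^t * r_t by accumulating from the last reward backwards.
    g = 0.0
    for r_t in reversed(rewards):
        g = r_t + gamma * g
    return g


# Toy two-state, two-action MDP (numbers are made up for illustration).
toy = TabularMDP(
    n_states=2,
    n_actions=2,
    p=[[[0.9, 0.1], [0.2, 0.8]],
       [[0.0, 1.0], [0.5, 0.5]]],
    r=[[0.0, 1.0], [2.0, 0.0]],
    gamma=0.9,
)
toy.validate()
```

Calling `toy.step(s, a, random.Random(0))` draws one transition, and `discounted_return` illustrates how $\gamma$ weights later rewards less than earlier ones. A continuous state or action space would need a sampler or density function in place of the nested lists.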