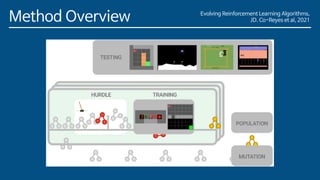

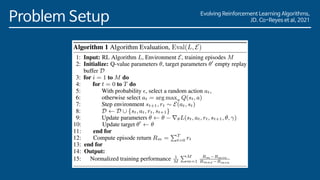

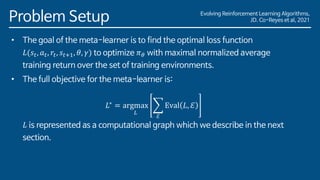

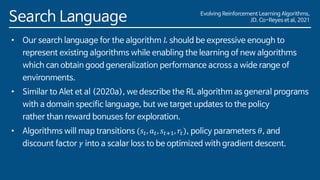

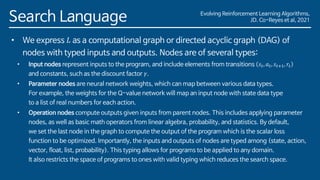

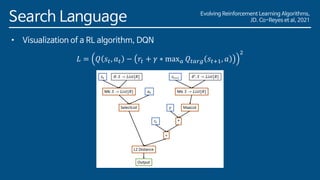

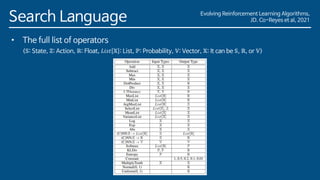

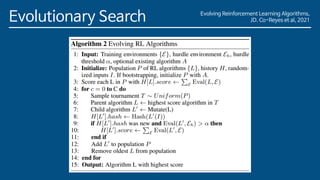

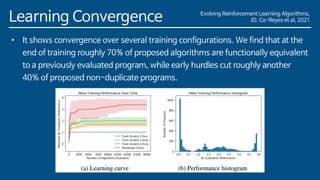

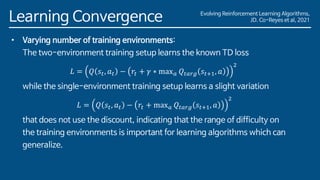

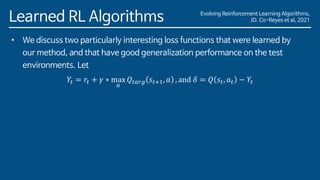

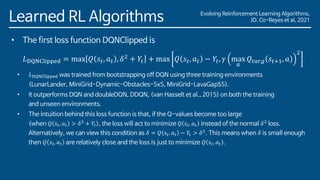

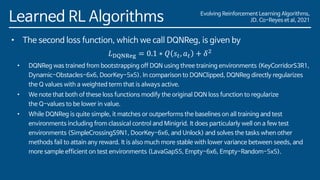

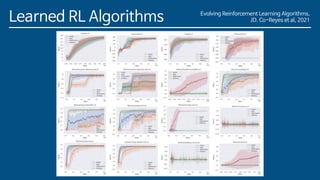

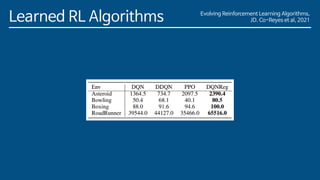

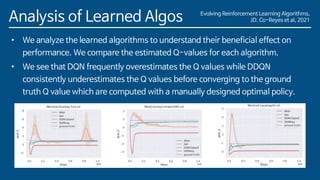

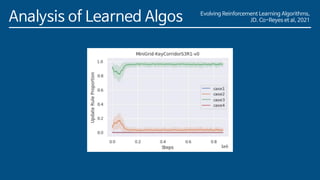

This document presents a meta-learning framework that designs reinforcement learning algorithms automatically, with the aim of reducing manual effort and producing efficient, domain-agnostic algorithms. The authors propose a search language, based on genetic programming, for expressing symbolic loss functions, and use regularized evolution to optimize candidate algorithms across a range of environments. They report that the method learns two new algorithms that outperform existing algorithms and generalize well to unseen environments.
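
To make the search procedure concrete, here is a minimal sketch of regularized (aging) evolution in Python. It is not the authors' implementation. The candidate encoding, the `toy_*` helpers, and all parameter values are hypothetical, and the toy fitness function stands in for the expensive step of training an agent with a candidate loss function and scoring it across environments.

```python
import collections
import random


def regularized_evolution(init, mutate, fitness, population_size=20,
                          tournament_size=5, cycles=200, seed=0):
    """Aging evolution: mutate the tournament winner, then drop the OLDEST member."""
    rng = random.Random(seed)
    population = collections.deque()
    best = None

    # Fill the initial population with random candidates.
    for _ in range(population_size):
        candidate = init(rng)
        scored = (fitness(candidate), candidate)
        population.append(scored)
        if best is None or scored[0] > best[0]:
            best = scored

    for _ in range(cycles):
        # Tournament selection: the fittest of a random sample becomes the parent.
        sample = rng.sample(list(population), tournament_size)
        parent = max(sample, key=lambda s: s[0])
        child = mutate(parent[1], rng)
        scored = (fitness(child), child)
        population.append(scored)
        # Remove the oldest member regardless of its fitness (the "regularization").
        population.popleft()
        if scored[0] > best[0]:
            best = scored
    return best


# Hypothetical toy problem: a candidate is a tuple of three integer coefficients.
TOY_TARGET = (1, -2, 3)


def toy_init(rng):
    return tuple(rng.randint(-5, 5) for _ in range(3))


def toy_mutate(candidate, rng):
    # Change one randomly chosen coefficient by +1 or -1.
    i = rng.randrange(len(candidate))
    delta = rng.choice((-1, 1))
    return candidate[:i] + (candidate[i] + delta,) + candidate[i + 1:]


def toy_fitness(candidate):
    # Stand-in for "train an agent with this loss and measure its return".
    return -sum(abs(c - t) for c, t in zip(candidate, TOY_TARGET))


if __name__ == "__main__":
    score, candidate = regularized_evolution(toy_init, toy_mutate, toy_fitness)
    print(score, candidate)
```

Removing the oldest member rather than the worst one means every candidate eventually leaves the population, so a candidate that scored well by chance cannot dominate the search indefinitely. In a real setting each candidate would be a symbolic loss function expressed in the search language rather than a tuple of integers.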