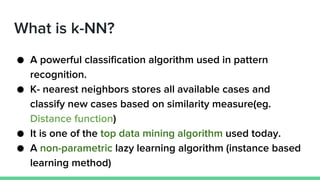

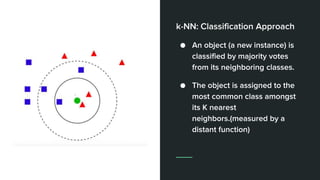

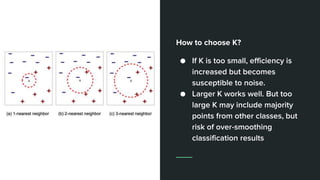

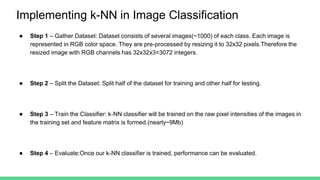

k-Nearest Neighbors (k-NN) is a simple machine learning algorithm that classifies a new data point by its similarity to existing, labeled data points. It stores the entire training set and, for each new point, uses a distance function to find the k nearest stored points; the new point is then assigned the most common class among those neighbors. k-NN is a non-parametric, lazy learning algorithm: it assumes no fixed form for the underlying data distribution and defers all computation to prediction time. It is widely used for classification and pattern recognition problems. It tends to perform well when a large amount of sample data is available, but prediction can be slow because each query is compared against the stored data, and the choice of k affects accuracy.
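The procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `knn_predict`, the toy data, the Euclidean distance, and the tie-breaking rule (the label seen first among the nearest neighbors wins) are all assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # "Lazy" learning: there is no training step, so all the work happens here.
    # Compute the Euclidean distance from the query to every stored point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Sort by distance so the closest points come first.
    distances.sort(key=lambda pair: pair[0])
    # Keep the labels of the k closest points.
    nearest_labels = [label for _, label in distances[:k]]
    # Majority vote; on a tie, the label encountered first (nearest) wins.
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy data (hypothetical): two well-separated clusters labeled "a" and "b".
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 7.5), (6.5, 8.0)]
labels = ["a", "a", "a", "b", "b", "b"]

prediction = knn_predict(points, labels, (2.0, 2.0), k=3)
print(prediction)
```

Because every query scans all stored points, the cost of a single prediction grows with the size of the training set, which is the slowness noted above. Changing `k` changes how many neighbors vote, so it is worth trying several values on held-out data.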