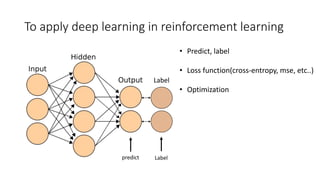

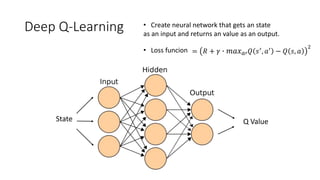

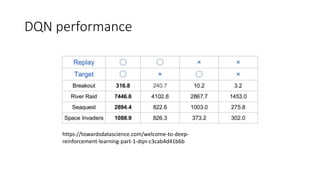

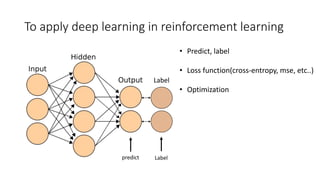

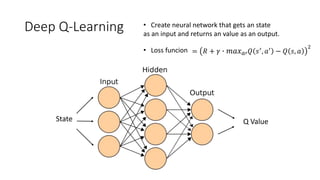

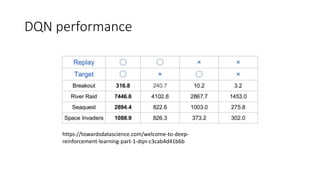

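Deep Sarsa and Deep Q-learning both use a neural network to estimate state-action values in reinforcement learning problems. They differ in the bootstrapped target. Sarsa uses the value of the action the agent actually takes in the next state (on-policy). Q-learning uses the maximum value over all actions in the next state (off-policy).

Basic Deep Q-learning is often unstable, and two additions improve its stability. Experience replay stores past transitions in a replay buffer and trains on random mini-batches drawn from it, which breaks the correlation between consecutive samples. A target network is a copy of the Q-network whose weights are synchronized only periodically. It computes the bootstrapped targets, so the targets do not shift with every gradient step. DeepMind's DQN algorithm combined Deep Q-learning with experience replay and a target network and performed strongly on complex tasks, such as playing Atari 2600 games from raw pixel input.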
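The different bootstrapped targets, the replay buffer, and the periodically synced target network can be sketched together in plain Python. This is a minimal illustration under stated assumptions, not DeepMind's implementation. A linear approximator over one-hot (state, action) features, which behaves like a lookup table, stands in for the neural network. The two-state toy problem, the hyperparameters, and every name (`ReplayBuffer`, `LinearQ`, `train_step`, `run`) are hypothetical.

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (s, a, r, s_next, a_next, done) transitions."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size):
        # Uniform random sampling breaks the correlation between consecutive transitions.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


class LinearQ:
    """Assumed stand-in for a neural network: one weight per (state, action) pair."""

    def __init__(self, n_states, n_actions):
        self.w = [[0.0] * n_actions for _ in range(n_states)]

    def values(self, s):
        return self.w[s]

    def update(self, s, a, target, lr):
        # Gradient descent step on 0.5 * (target - Q(s, a))^2 with respect to w[s][a].
        self.w[s][a] += lr * (target - self.w[s][a])


def sarsa_target(r, q_next, a_next, done, gamma):
    # On-policy: bootstrap from the action the agent actually took next.
    return r if done else r + gamma * q_next[a_next]


def q_learning_target(r, q_next, done, gamma):
    # Off-policy: bootstrap from the greedy (maximum-value) next action.
    return r if done else r + gamma * max(q_next)


def train_step(online, target_net, buffer, batch_size, gamma, lr, use_sarsa):
    for s, a, r, s_next, a_next, done in buffer.sample(batch_size):
        q_next = target_net.values(s_next)  # targets come from the frozen copy
        if use_sarsa:
            y = sarsa_target(r, q_next, a_next, done, gamma)
        else:
            y = q_learning_target(r, q_next, done, gamma)
        online.update(s, a, y, lr)


def run(use_sarsa, steps=200, sync_every=10, gamma=0.9, lr=0.5):
    # Hypothetical toy problem. Each tuple is (s, a, r, s_next, a_next, done).
    buffer = ReplayBuffer(capacity=100)
    for t in [(0, 0, 0.0, 1, 1, False),  # 0 --a0--> 1, where the agent then chose a1
              (0, 1, 0.0, 0, 0, True),
              (1, 0, 1.0, 1, 0, True),   # a0 in state 1 pays reward 1
              (1, 1, 0.0, 1, 0, True)]:
        buffer.push(t)
    online = LinearQ(n_states=2, n_actions=2)
    target_net = copy.deepcopy(online)
    for step in range(1, steps + 1):
        train_step(online, target_net, buffer, batch_size=4,
                   gamma=gamma, lr=lr, use_sarsa=use_sarsa)
        if step % sync_every == 0:
            target_net = copy.deepcopy(online)  # periodic hard update of the target network
    return online.w


q_dqn = run(use_sarsa=False)
q_sarsa = run(use_sarsa=True)
print(round(q_dqn[0][0], 3))    # 0.9
print(round(q_sarsa[0][0], 3))  # 0.0
```

In this toy problem the agent moved from state 0 to state 1 and then took the zero-reward action 1. Sarsa's on-policy target therefore values Q(0, a0) at 0. Q-learning's greedy target values it at 0.9 × max Q(1, ·) = 0.9 × 1.0.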