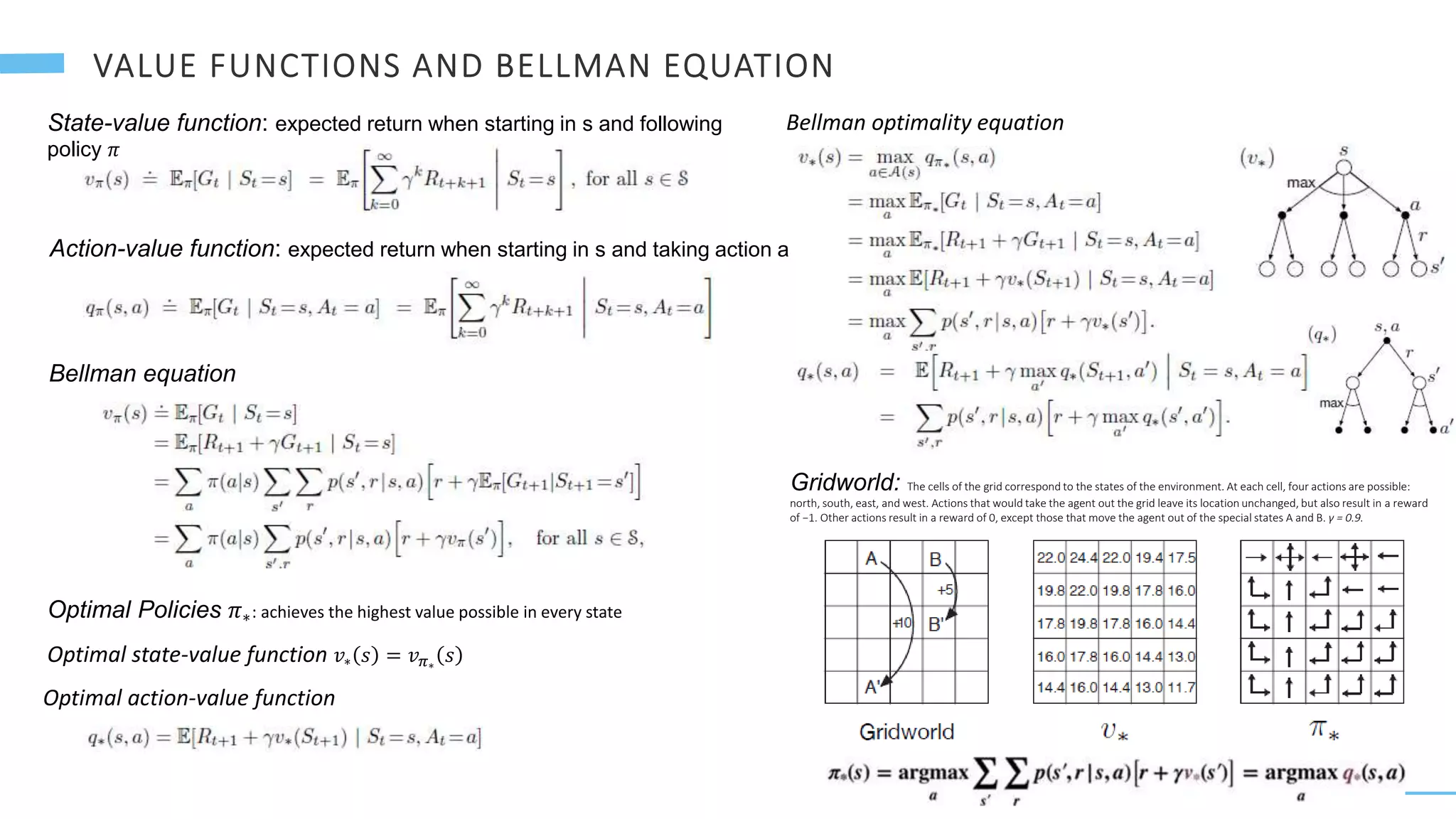

Here are the key steps to run a REINFORCE algorithm on the CartPole environment using SLM Lab:

1. Define the REINFORCE agent configuration in a spec file. This specifies things like the algorithm name, hyperparameters, network architecture, optimizer, etc.

2. Define the CartPole environment configuration.

3. Initialize SLM Lab and load the spec file:

```js

const slmLab = require('slm-lab');

slmLab.init();

const spec = require('./reinforce_cartpole.js');

```

4. Create an experiment with the spec:

```js

const experiment = new slmLab.Experiment(spec

![24

RAY: Distributed Computing for ML

Researchers in UC Berkeley created the framework

To speed up ML training with CPU cores or cluster nodes

API in Python, core in C++

High level libraries use Ray internally for distributed computation

Ray GitHub

RlLib: Scalable Reinforcement Learning

import ray

import ray.rllib.agents.ppo as ppo

ray.init()

SELECT_ENV = "CartPole-v1"

config = ppo.DEFAULT_CONFIG.copy()

config['num_workers'] = 8

config['model']['fcnet_hiddens'] = [40,20]

trainer = ppo.PPOTrainer(config, SELECT_ENV)

for n in range(20):

result = trainer.train()

print(result['episode_reward_mean'])

Ray Tune: Hyperparameter Tuning

import ray

from ray import tune

ray.init()

tune.run(

"PPO",

stop={"episode_reward_mean": 400},

config={

"env": "CartPole-v1",

"num_gpus": 0,

"num_workers": 6,

"model": {

'fcnet_hiddens': [

tune.grid_search([20, 40, 60, 80]),

tune.grid_search([20, 40, 60, 80])]},},)](https://image.slidesharecdn.com/reinforcementlearning-220212125446/75/Reinforcement-learning-24-2048.jpg)

![25

Policy: calculate action from state

Colab RlLib

Environment

import gym

env = gym.make('CartPole-v0’)

# Choose an action (either 0 or 1).

def sample_policy(state):

return 0 if state[0] < 0 else 1

def rollout_policy(env, policy):

state = env.reset()

done = False

cumulative_reward = 0

while not done:

action = policy(state)

# Take the action in the environment.

state, reward, done, _ = env.step(action)

cumulative_reward += reward

return cumulative_reward

reward = rollout_policy(env, sample_policy)

import gym, ray

from ray.rllib.agents import ppo

class MyEnv(gym.Env):

def __init__(self, env_config):

self.action_space = <gym.Space>

self.observation_space = <gym.Space>

def reset(self):

return <obs>

def step(self, action):

return <obs>, <reward: float>, <done: bool>, <info: dict>

ray.init()

trainer = ppo.PPOTrainer(env=MyEnv, config={

"env_config": {}, # config to pass to env class

})

while True:

print(trainer.train())

Algorithms](https://image.slidesharecdn.com/reinforcementlearning-220212125446/75/Reinforcement-learning-25-2048.jpg)

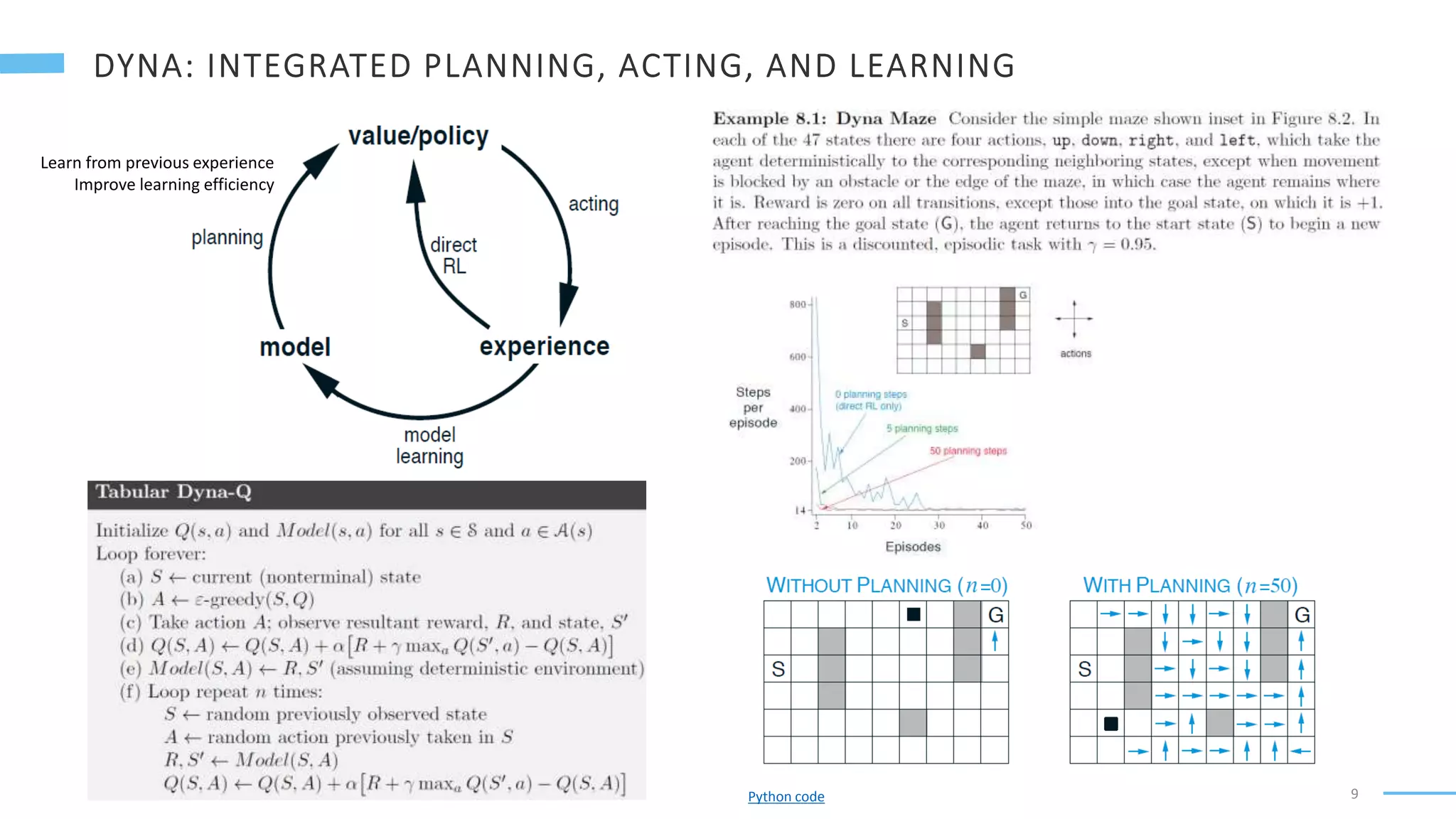

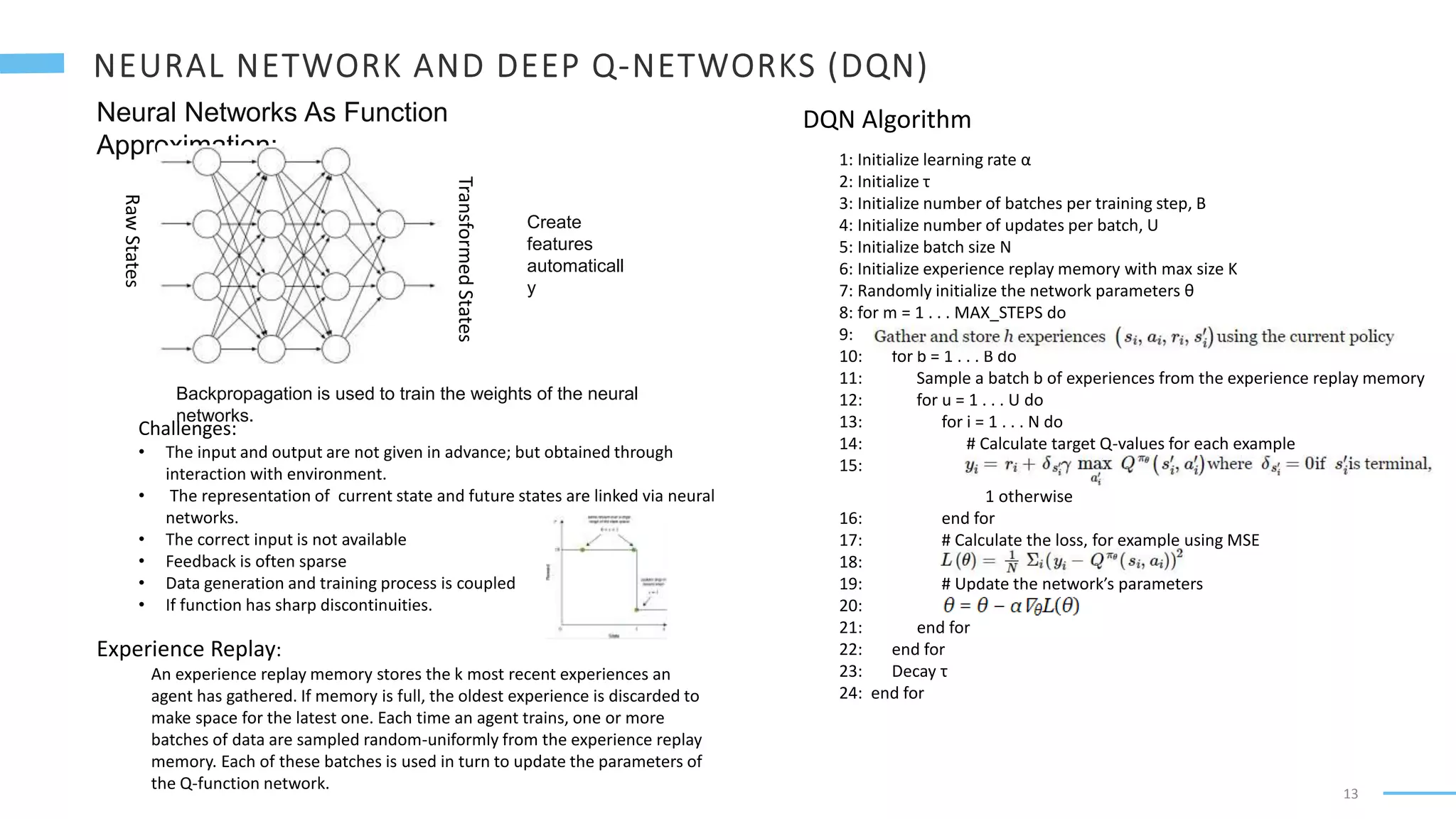

![27

A simple REINFORCE car pole spec file

1 #

slm_lab/spec/benchmark/reinforce/reinforce_cartpole.js

on

2

3 {

4 "reinforce_cartpole": {

5 "agent": [{

6 "name": "Reinforce",

7 "algorithm": {

8 "name": "Reinforce",

9 "action_pdtype": "default",

10 "action_policy": "default",

11 "center_return": true,

12 "explore_var_spec": null,

13 "gamma": 0.99,

14 "entropy_coef_spec": {

15 "name": "linear_decay",

16 "start_val": 0.01,

17 "end_val": 0.001,

18 "start_step": 0,

19 "end_step": 20000,

20 },

21 "training_frequency": 1

22 },

23 "memory": {

24 "name": "OnPolicyReplay"

25 },

26 "net": {

27 "type": "MLPNet",

28 "hid_layers": [64],

29 "hid_layers_activation": "selu",

30 "clip_grad_val": null,

31 "loss_spec": {

32 "name": "MSELoss"

33 },

34 "optim_spec": {

35 "name": "Adam",

36 "lr": 0.002

37 },

38 "lr_scheduler_spec": null

39 }

40 }],

41 "env": [{

42 "name": "CartPole-v0",

43 "max_t": null,

44 "max_frame": 100000,

45 }],

46 "body": {

47 "product": "outer",

48 "num": 1

49 },

50 "meta": {

51 "distributed": false,

52 "eval_frequency": 2000,

53 "max_session": 4,

54 "max_trial": 1,

55 },

56 ...

57 }

58 }

REINFORCE spec file with search spec for different gamma values

1 # slm_lab/spec/benchmark/reinforce/reinforce_cartpole.json

2

3 {

4 "reinforce_cartpole": {

5 ...

6 "meta": {

7 "distributed": false,

8 "eval_frequency": 2000,

9 "max_session": 4,

10 "max_trial": 1,

11 },

12 "search": {

13 "agent": [{

14 "algorithm": {

15 "gamma__grid_search": [0.1, 0.5, 0.7, 0.8, 0.90, 0.99, 0.999]

16 }

17 }]

18 }

19 }

20 }

γ values above 0.90 perform better,

with γ = 0.999 from trial 6 giving the

best result. When γ is too low, the

algorithm fails to learn a policy that

solves the problem, and the learning

curve stays flat.](https://image.slidesharecdn.com/reinforcementlearning-220212125446/75/Reinforcement-learning-27-2048.jpg)