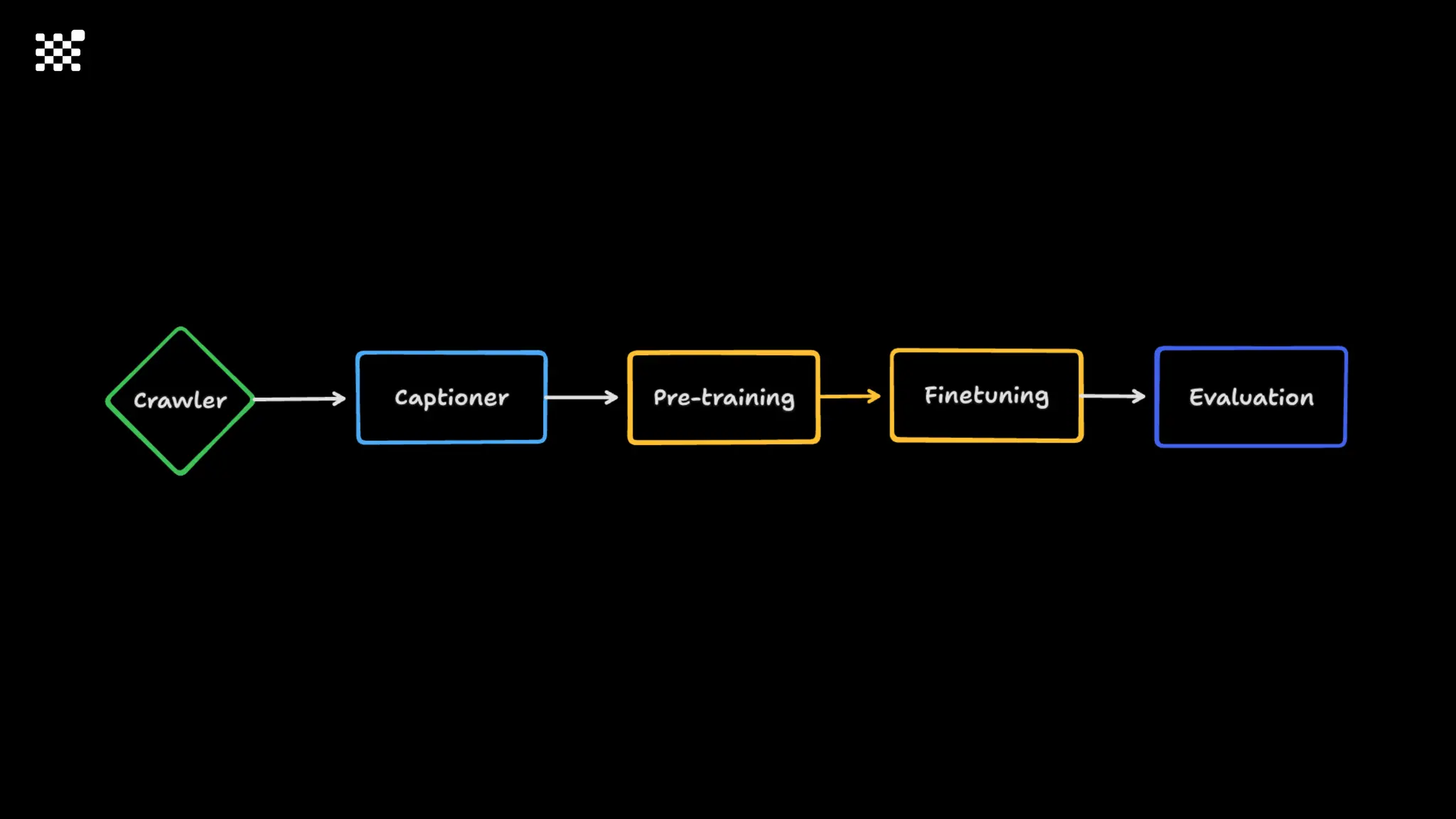

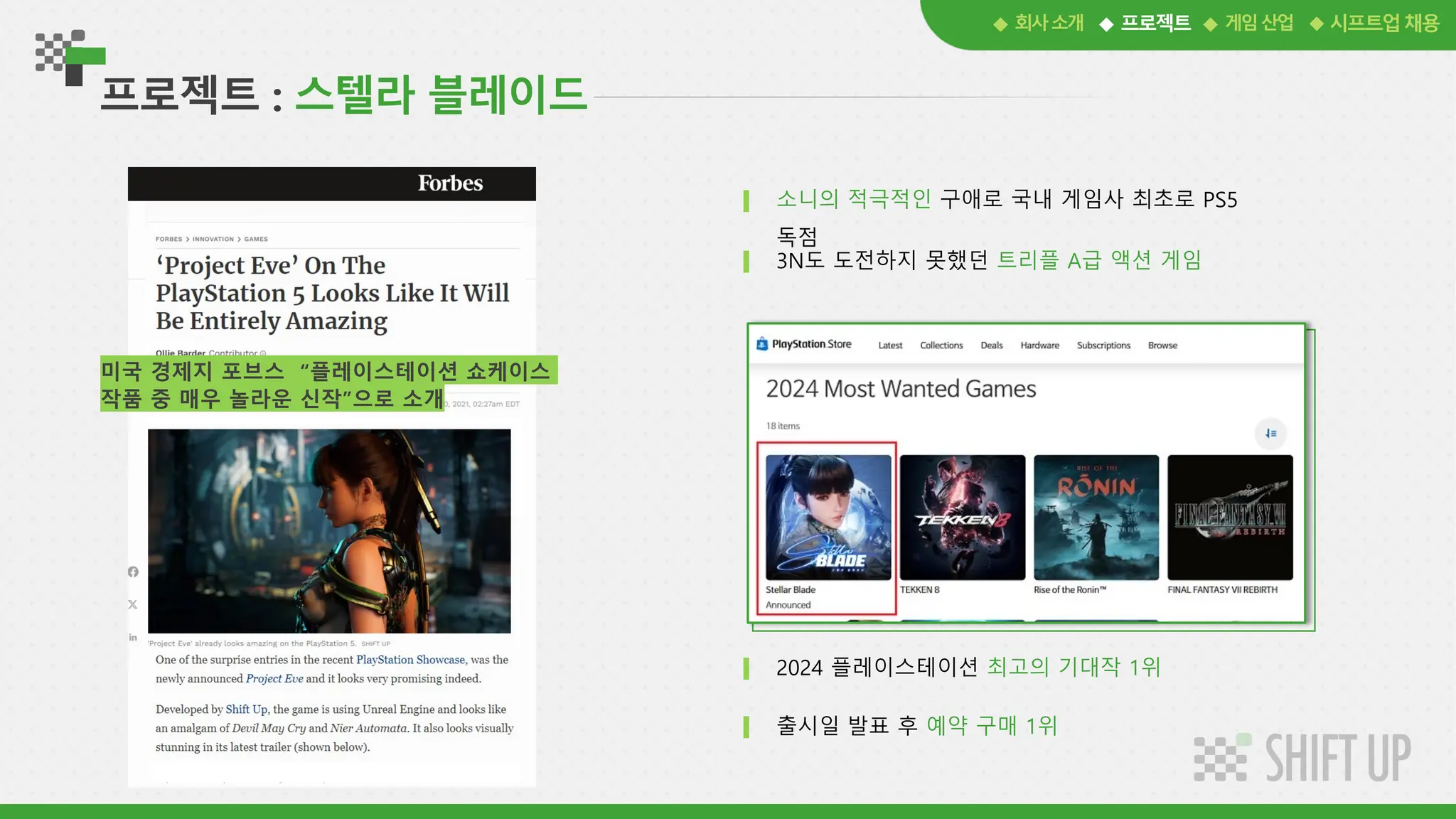

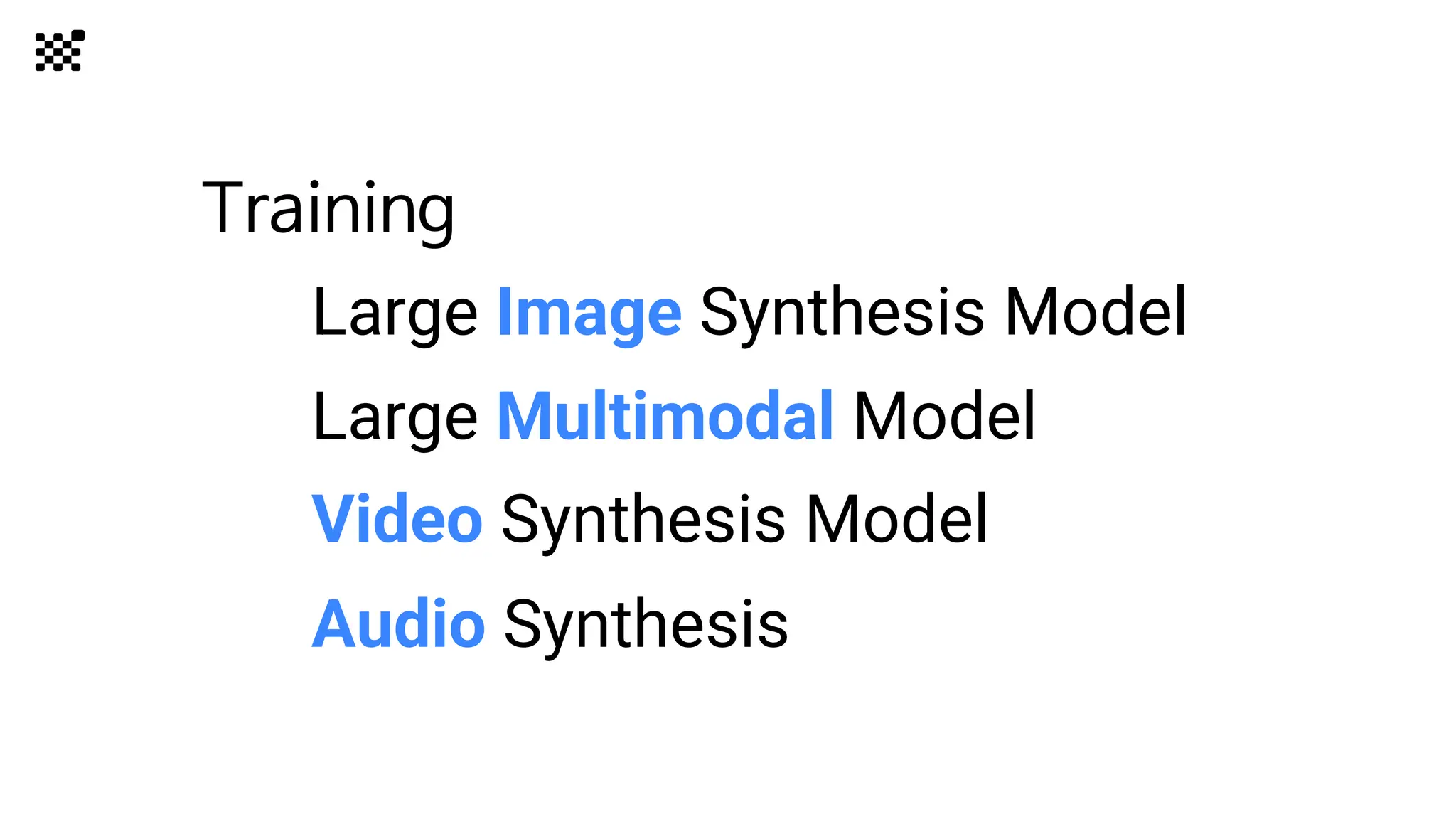

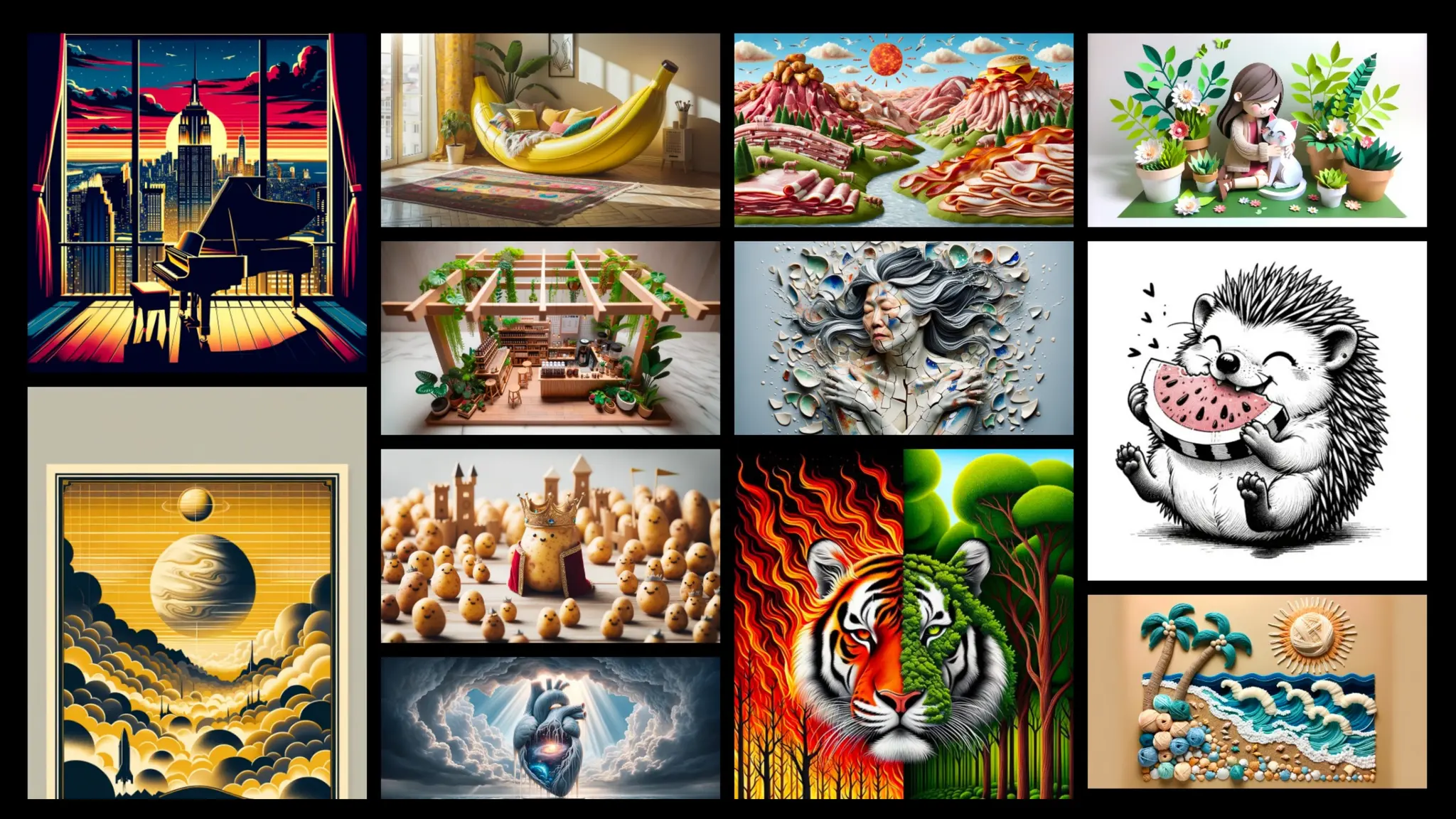

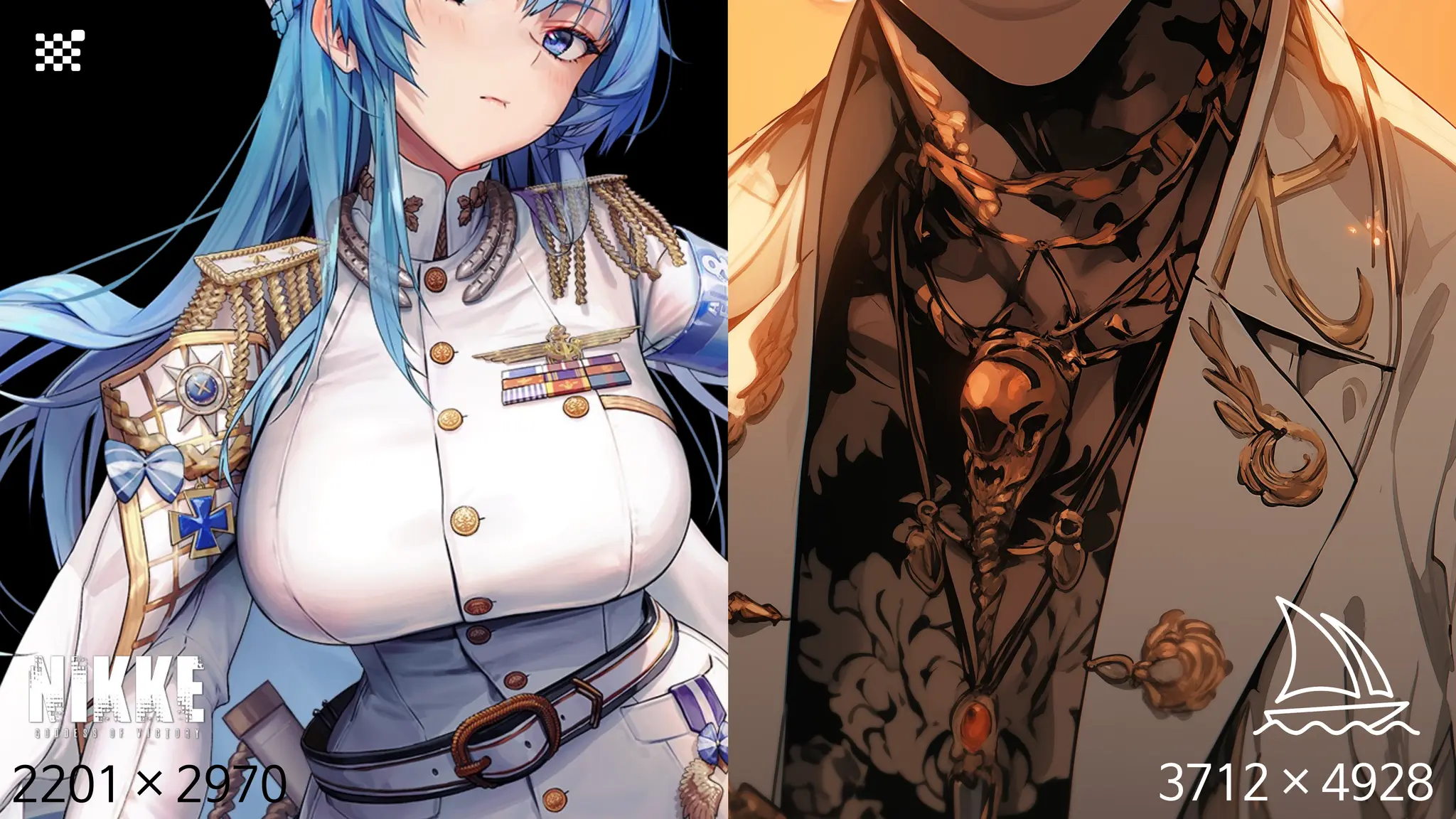

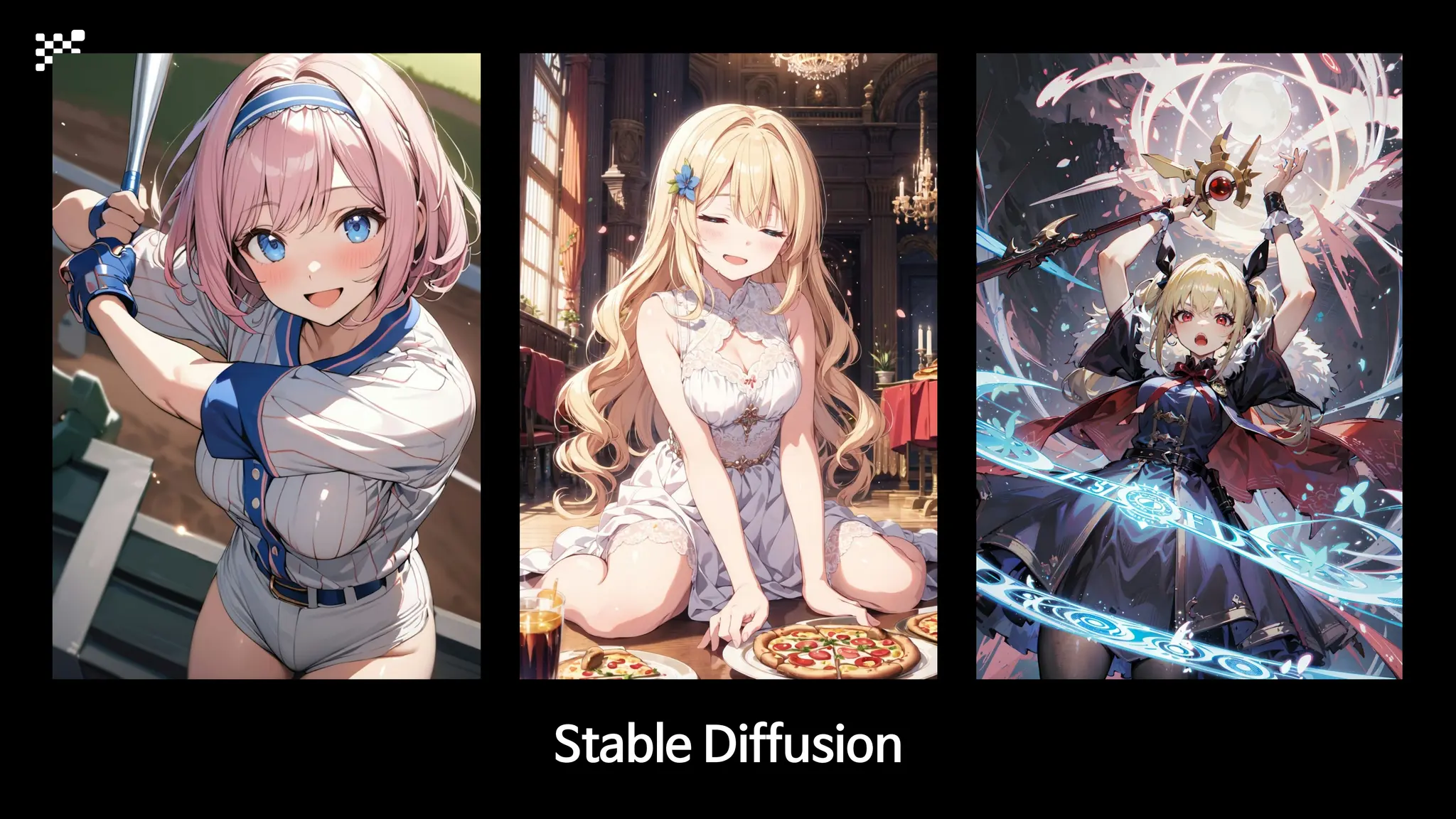

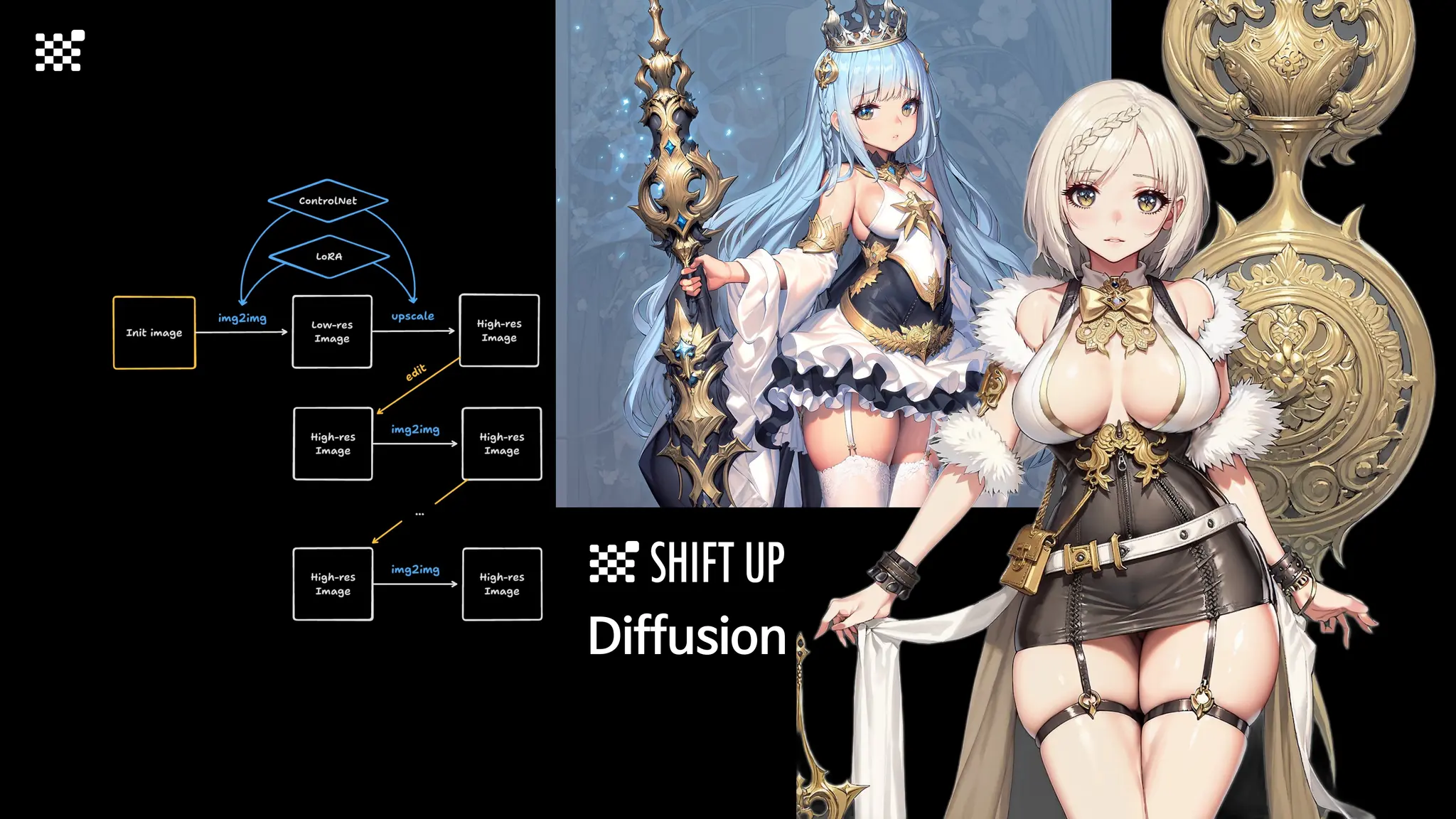

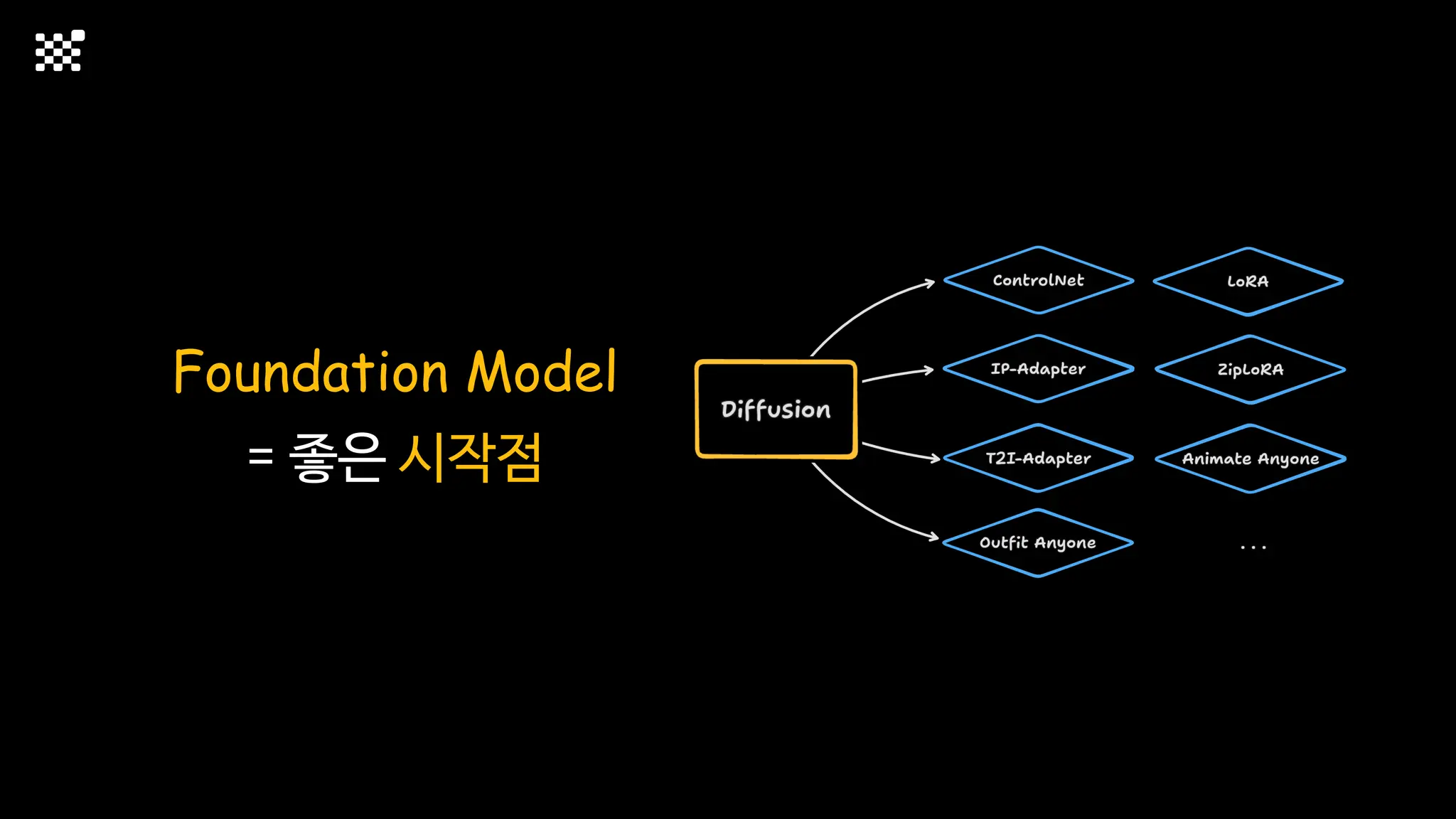

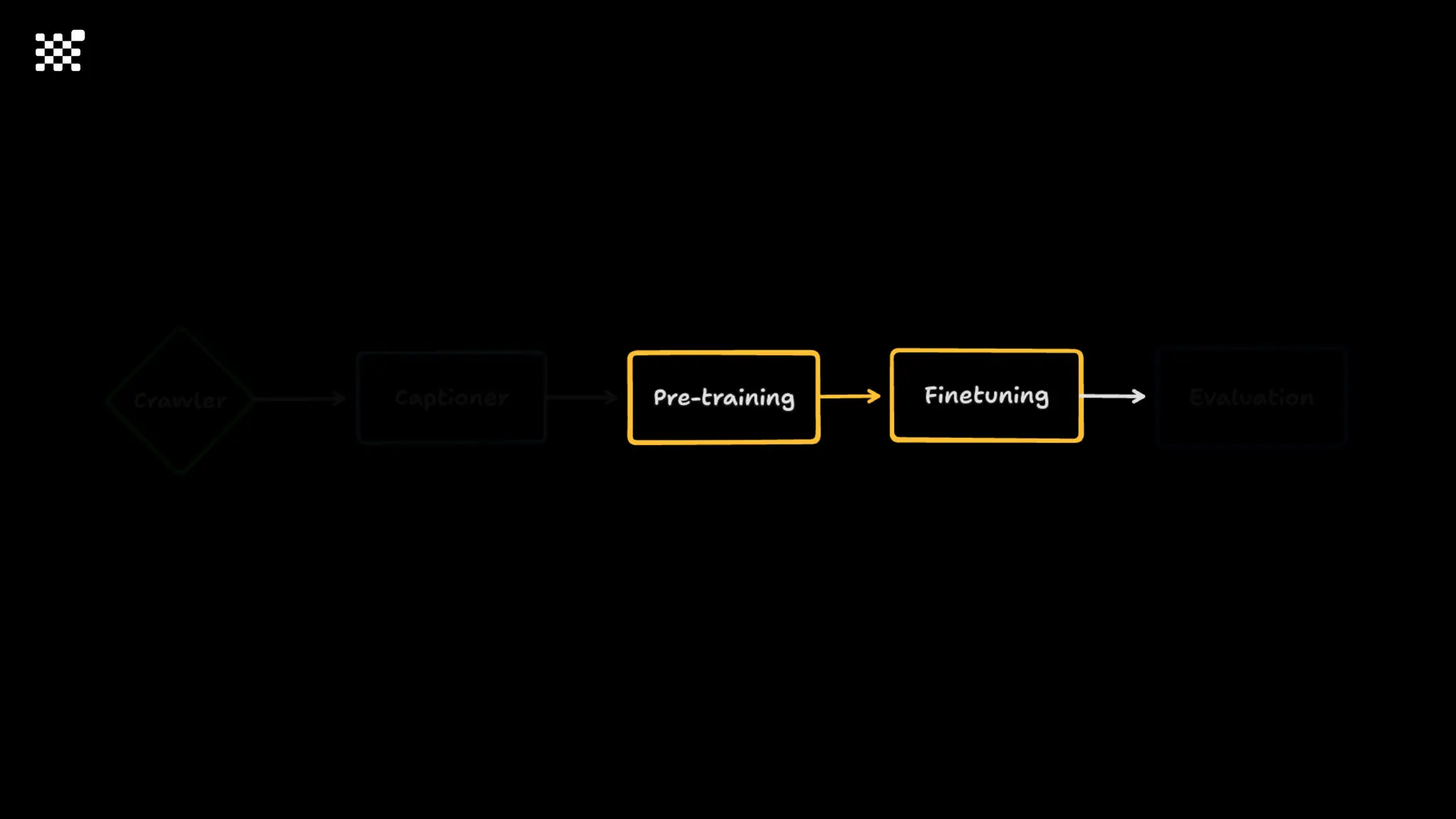

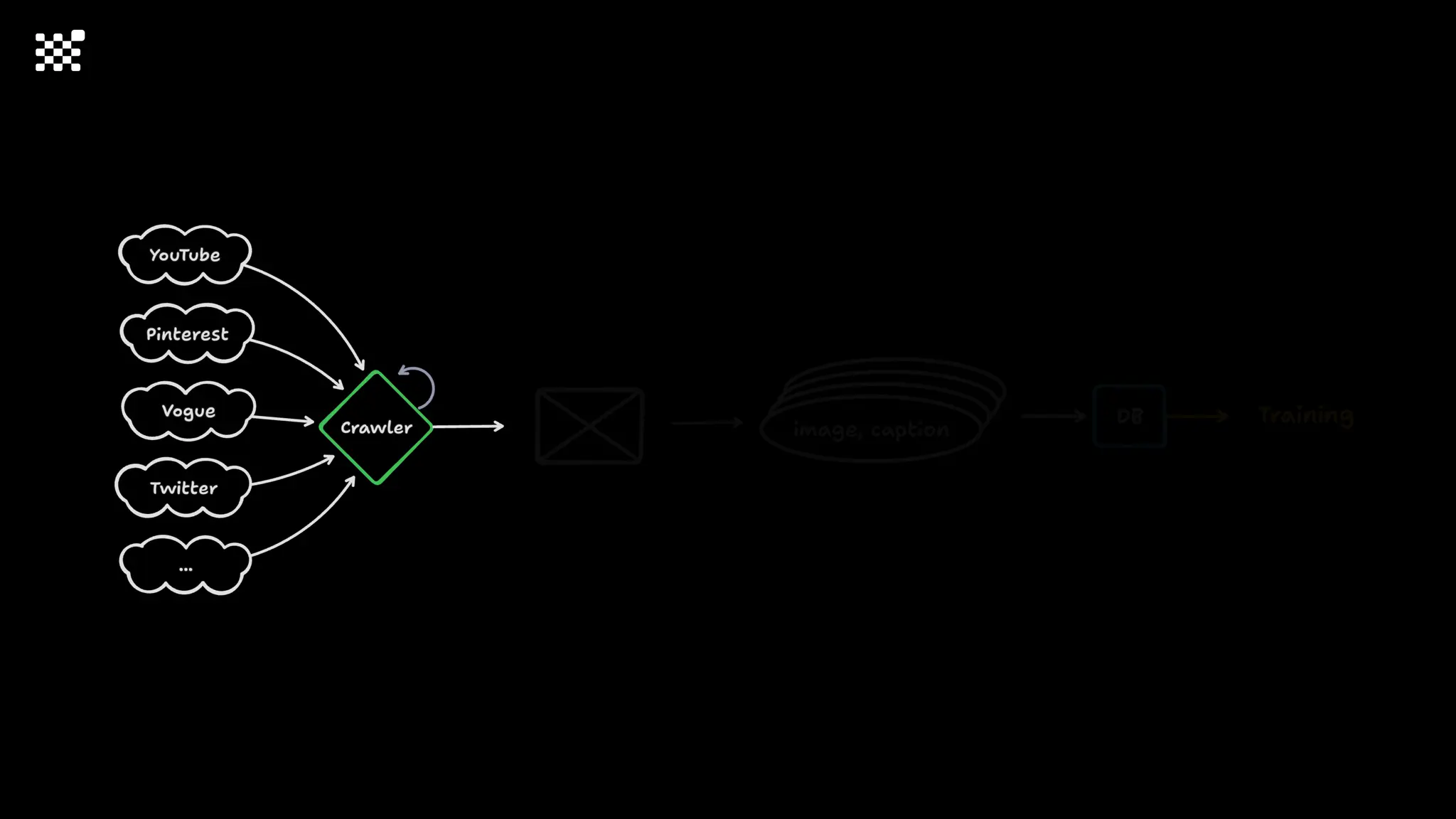

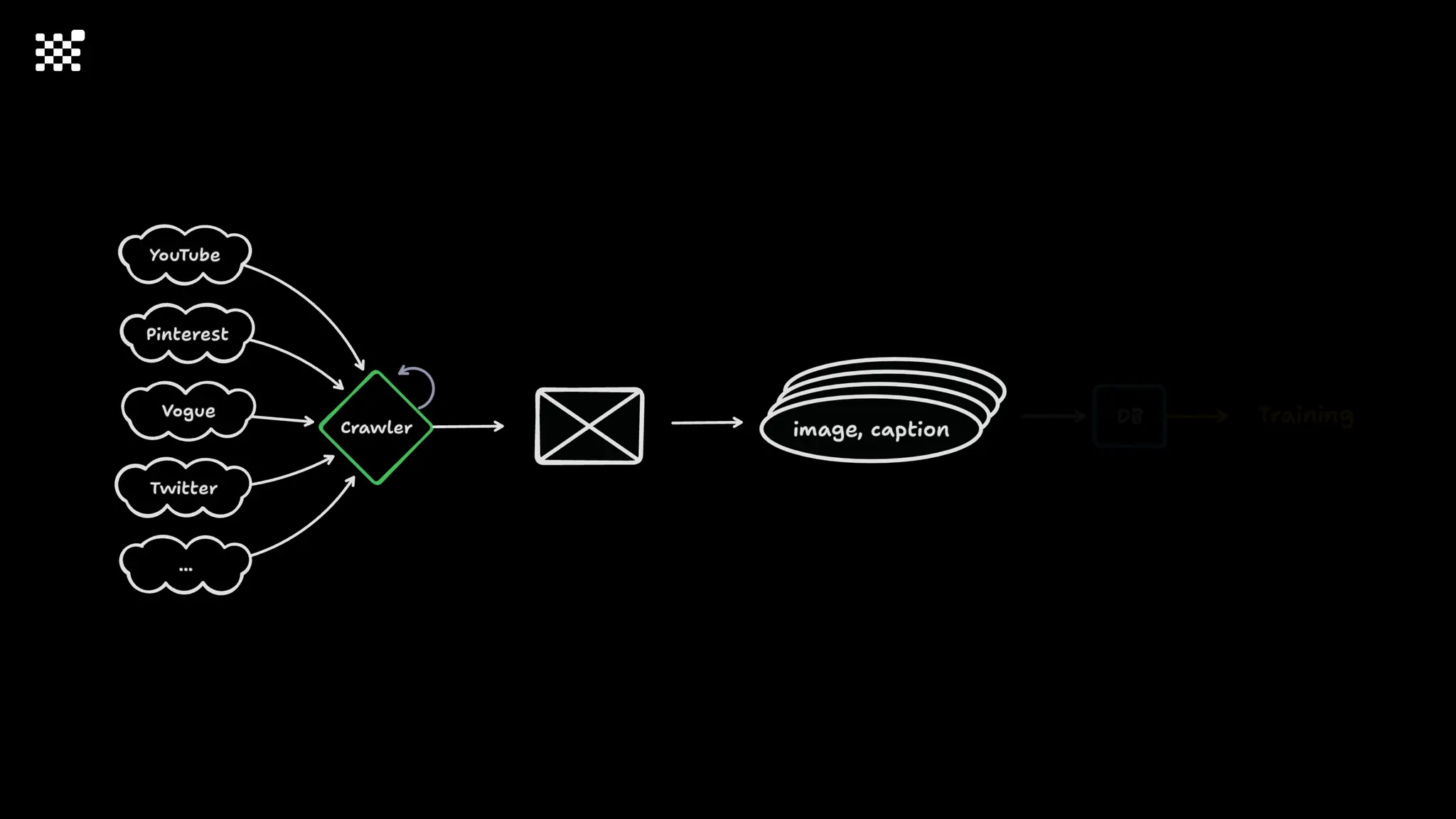

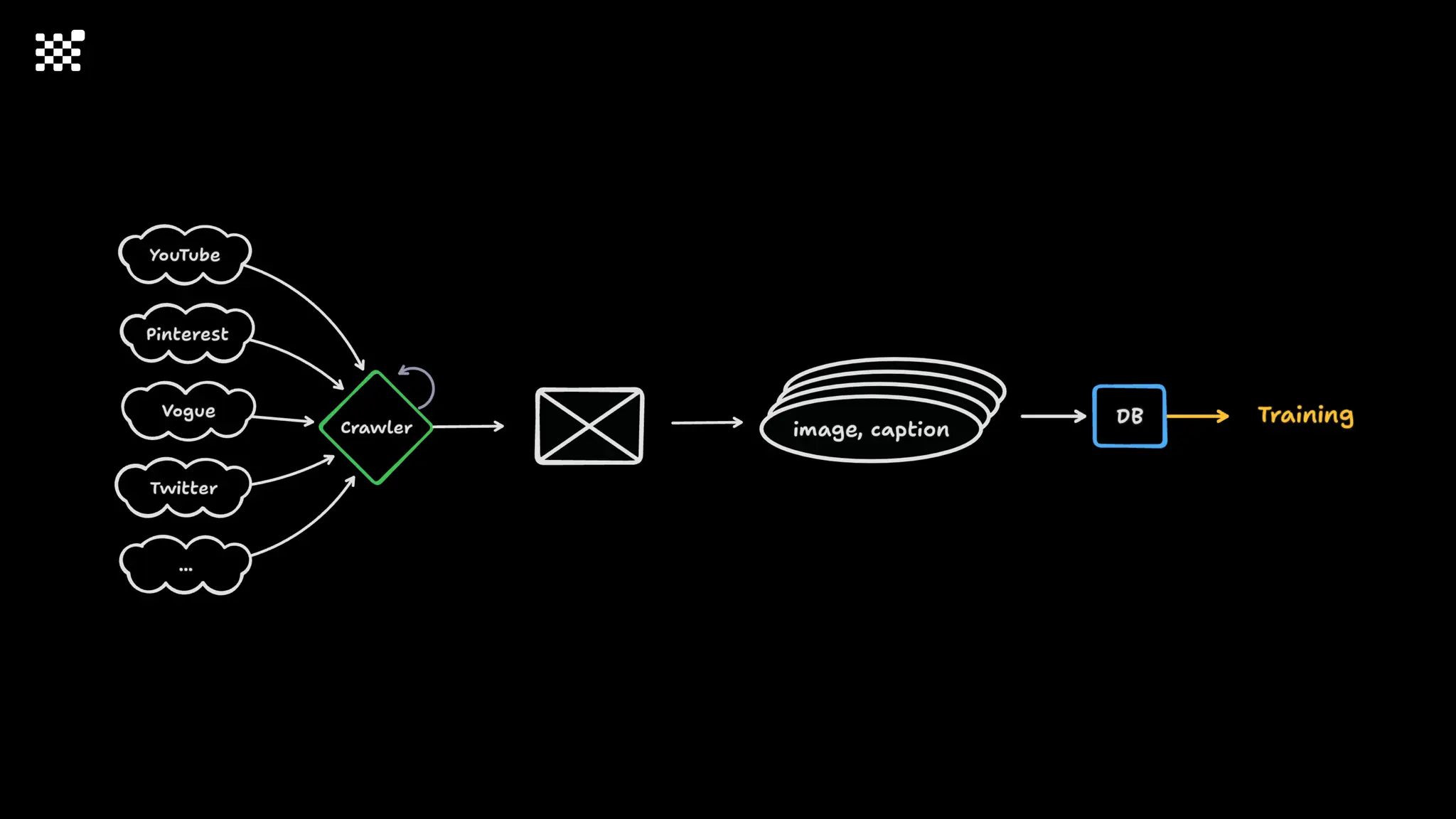

Three months ago, I started a project to train a diffusion model from scratch.

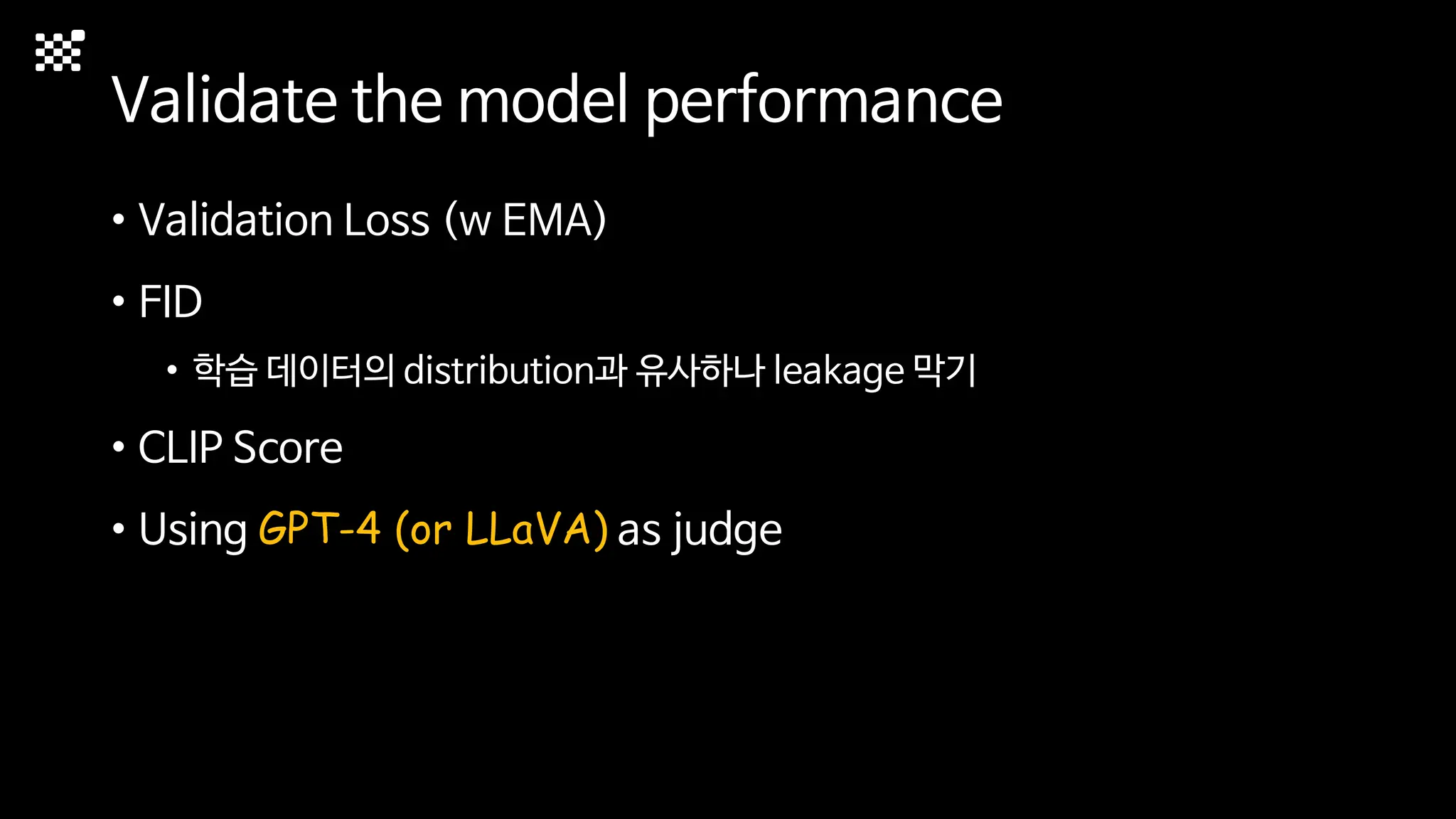

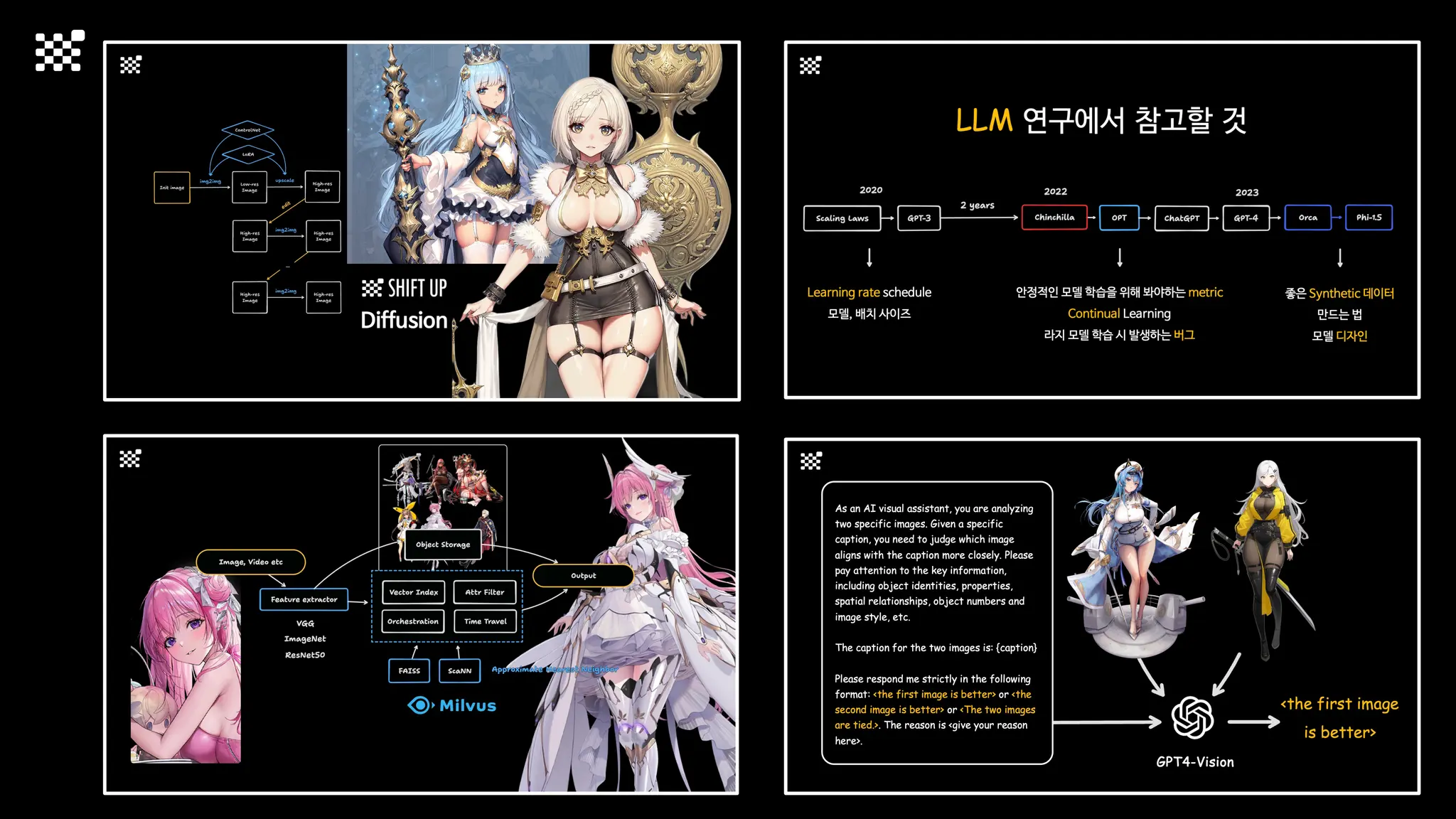

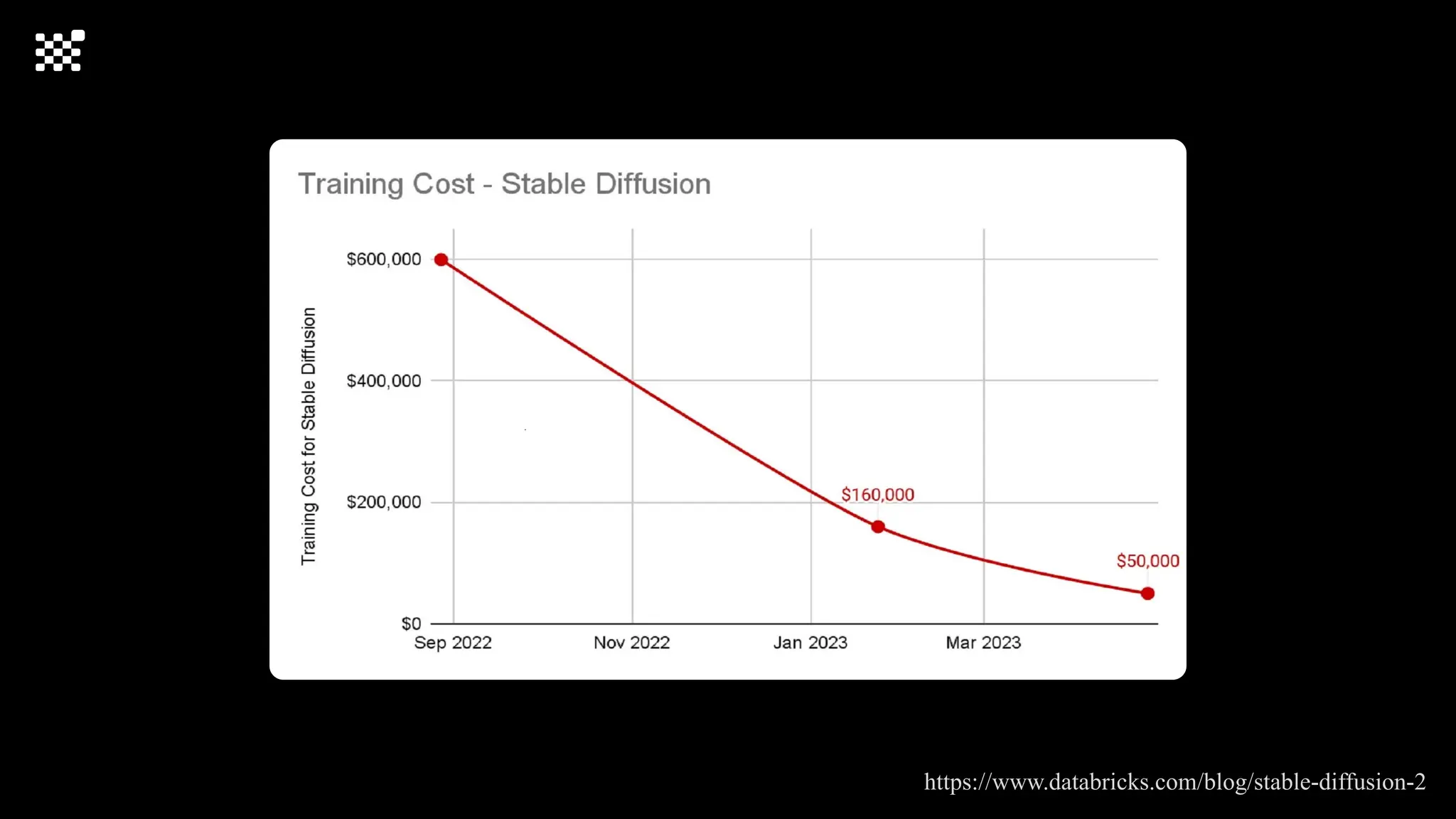

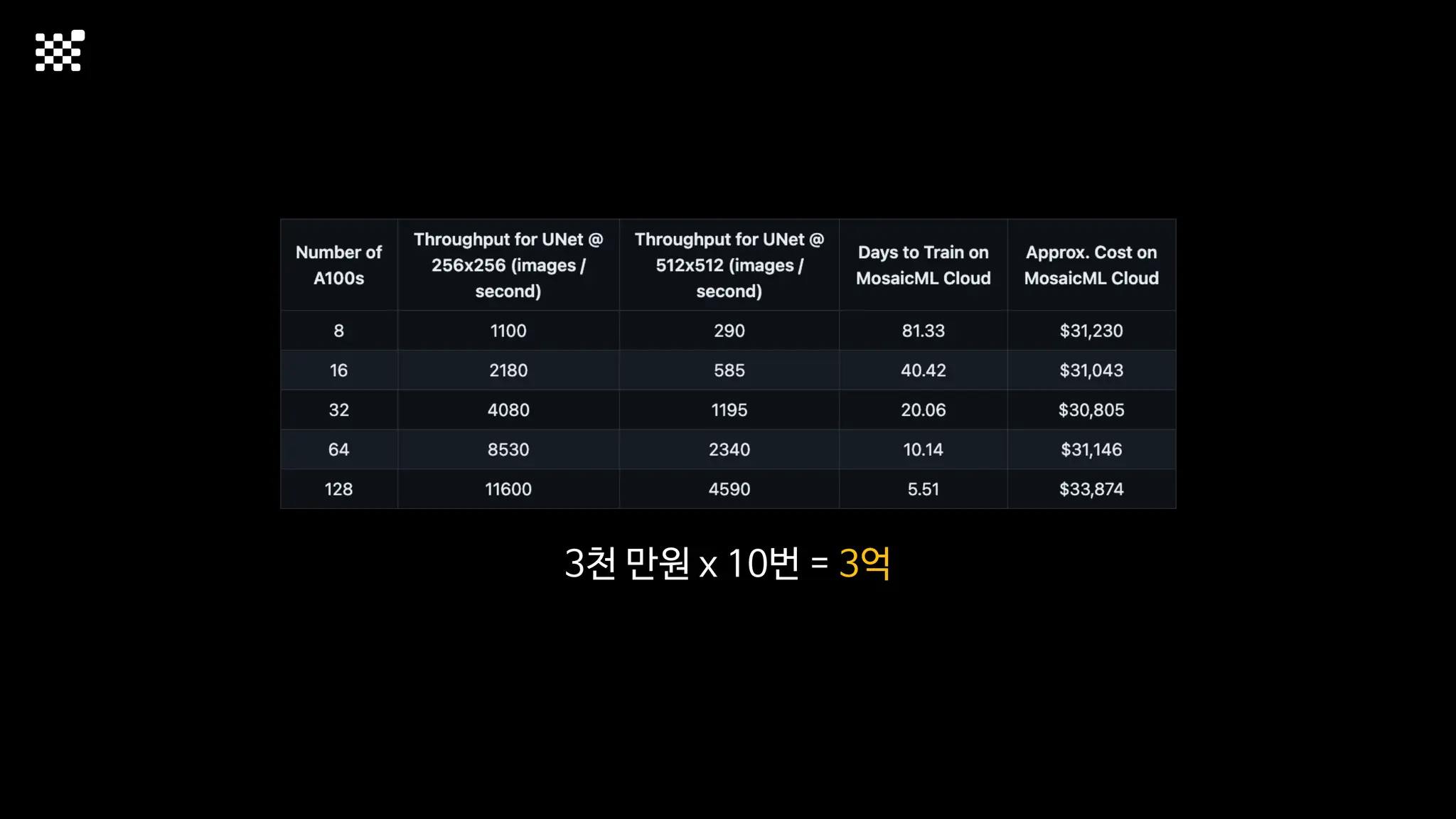

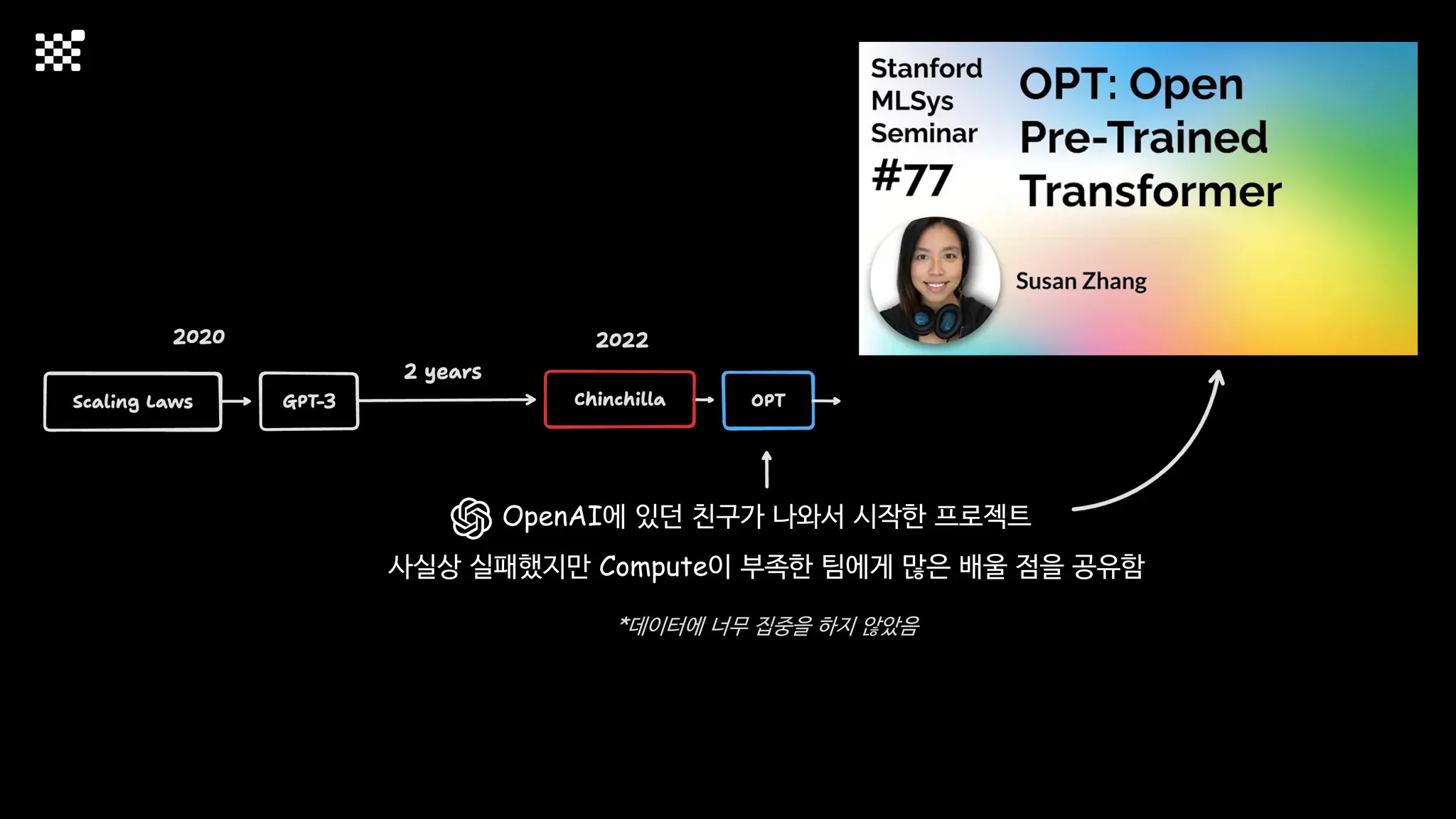

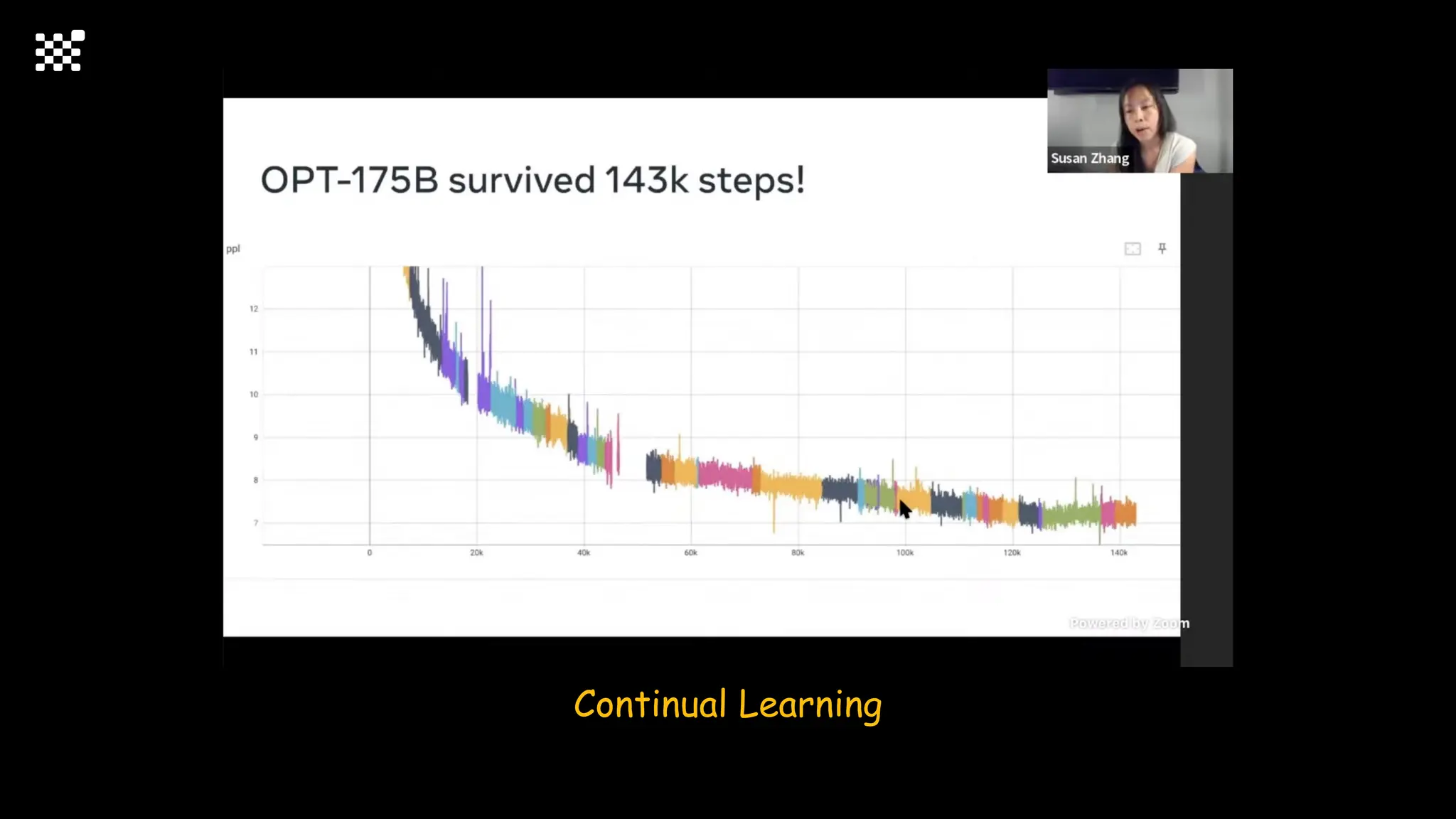

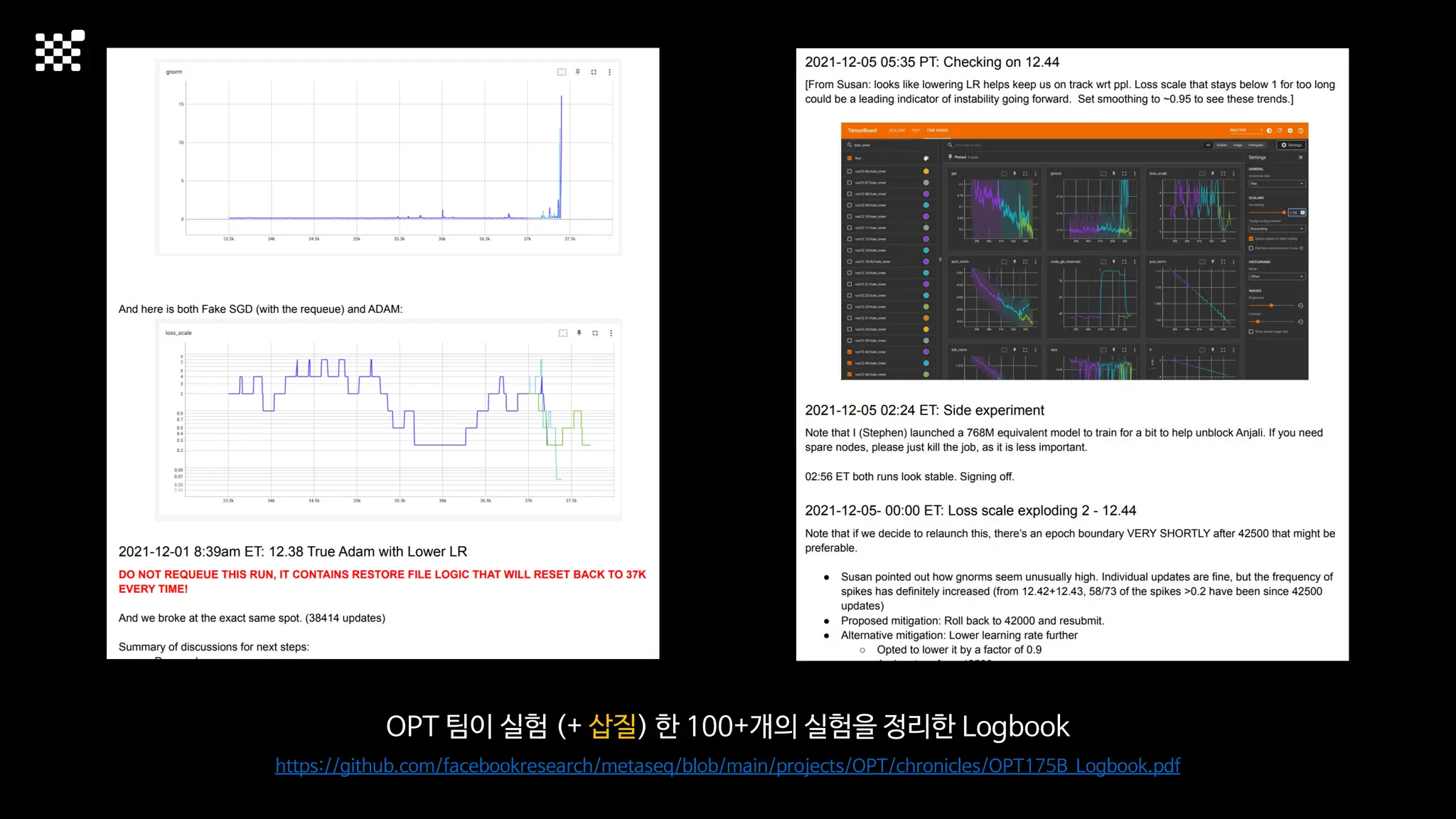

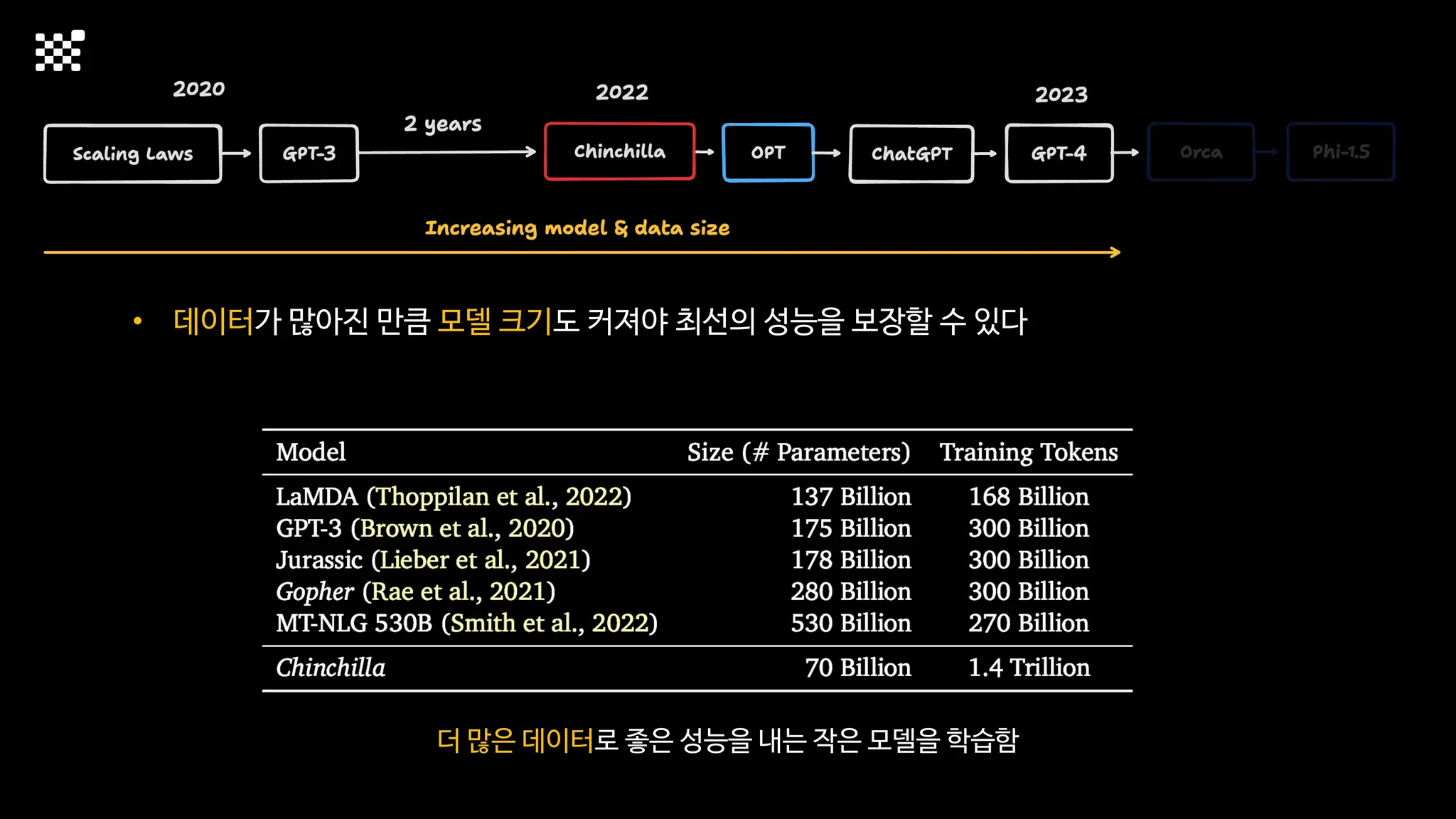

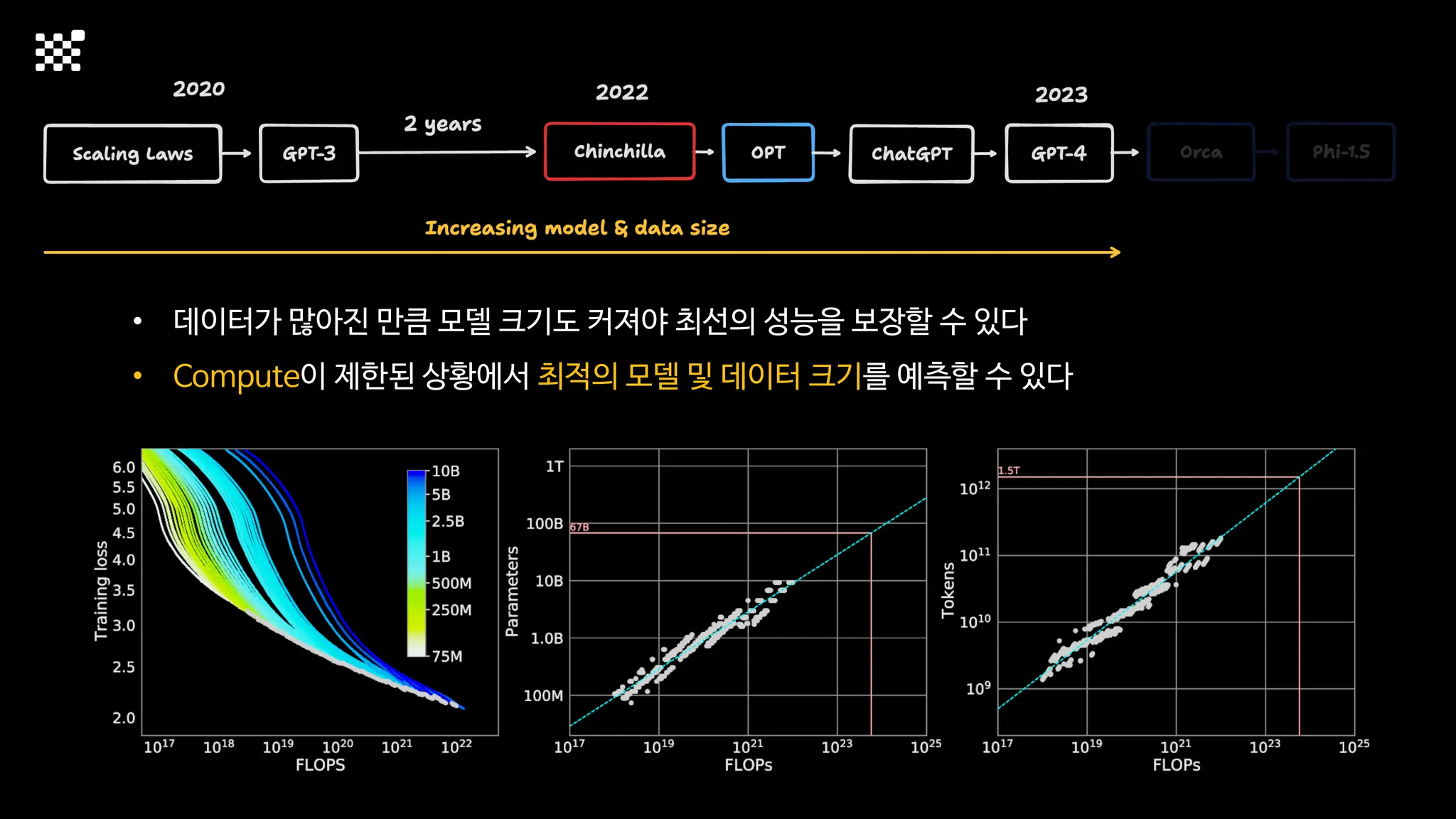

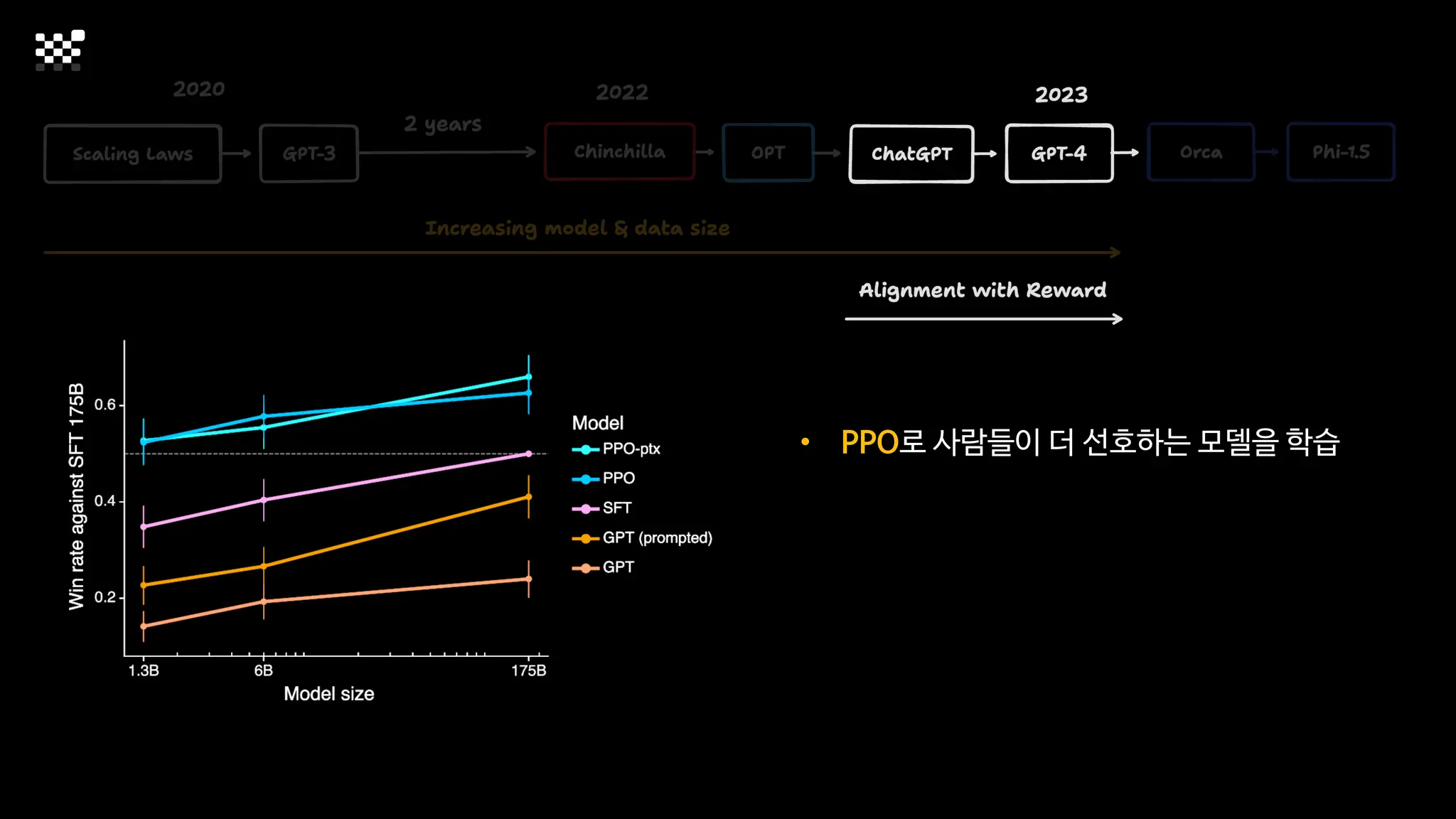

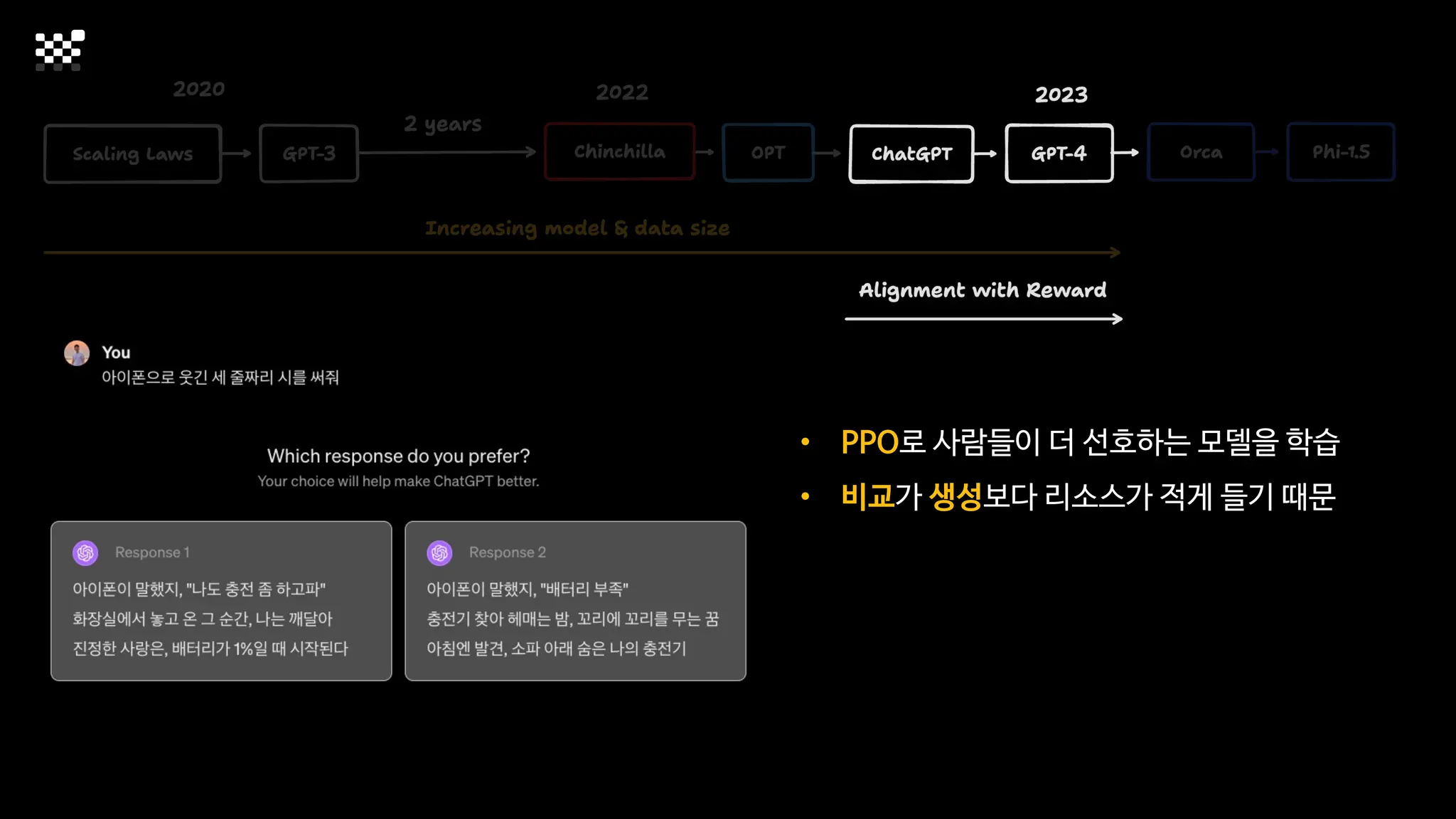

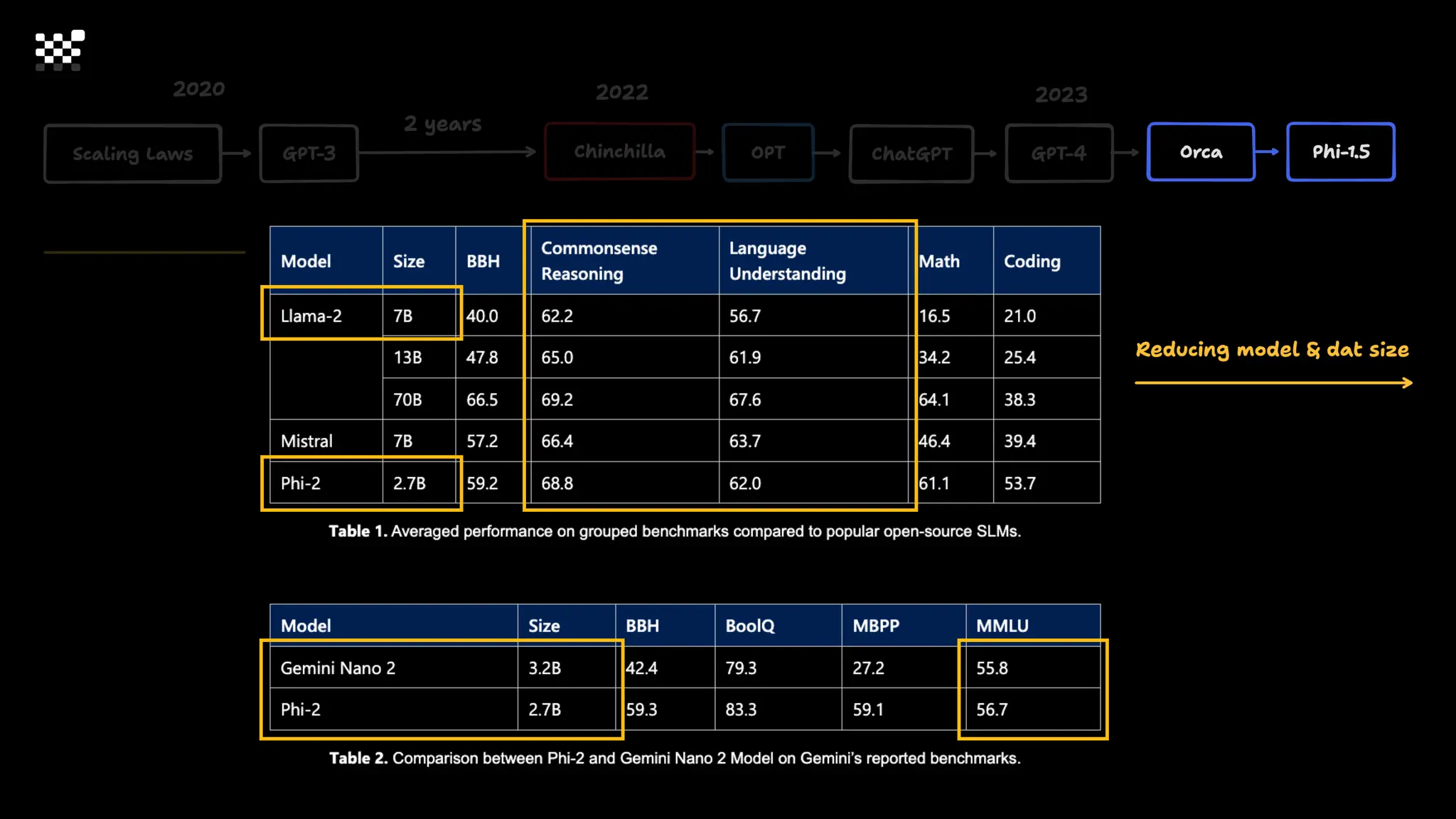

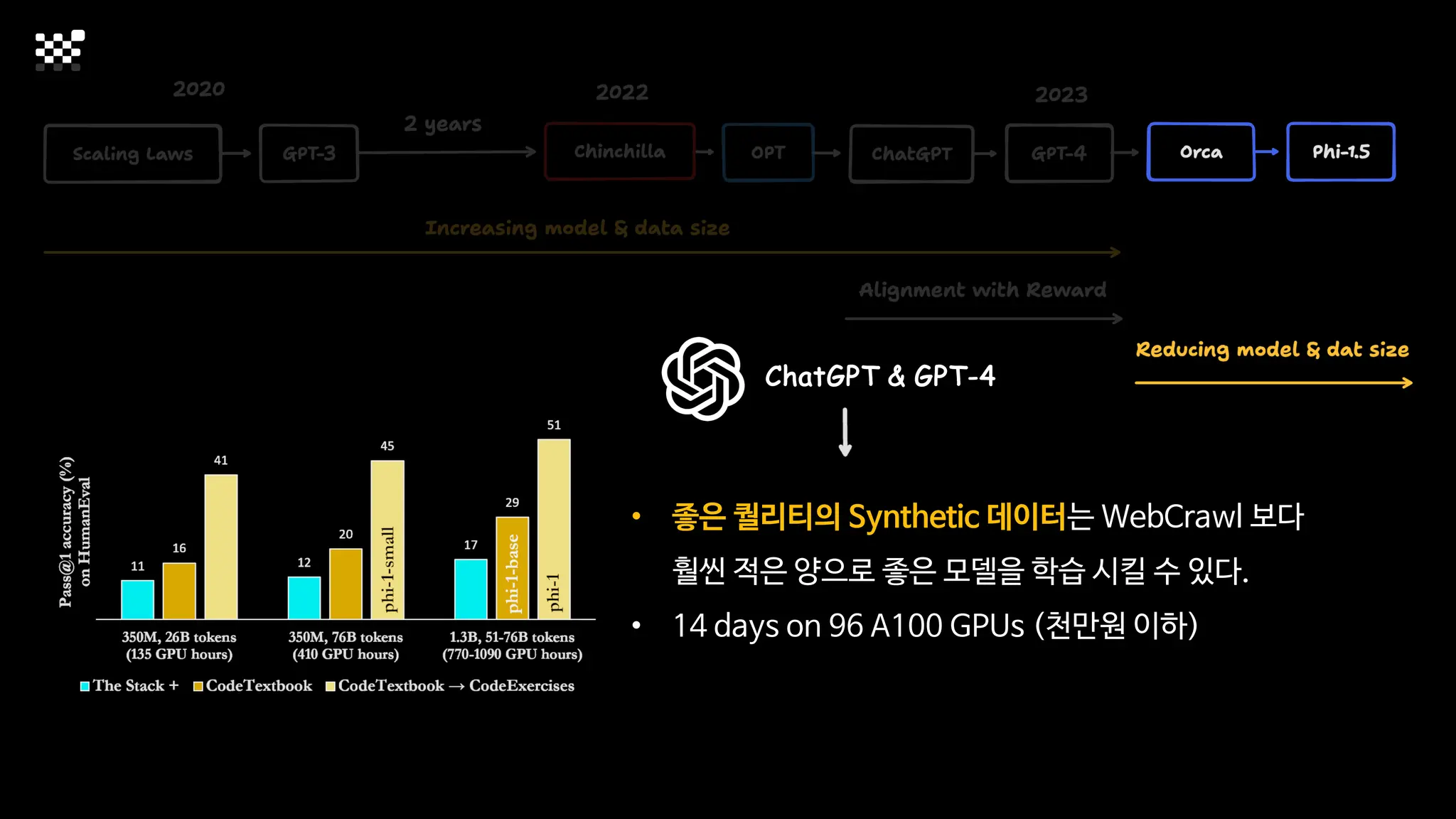

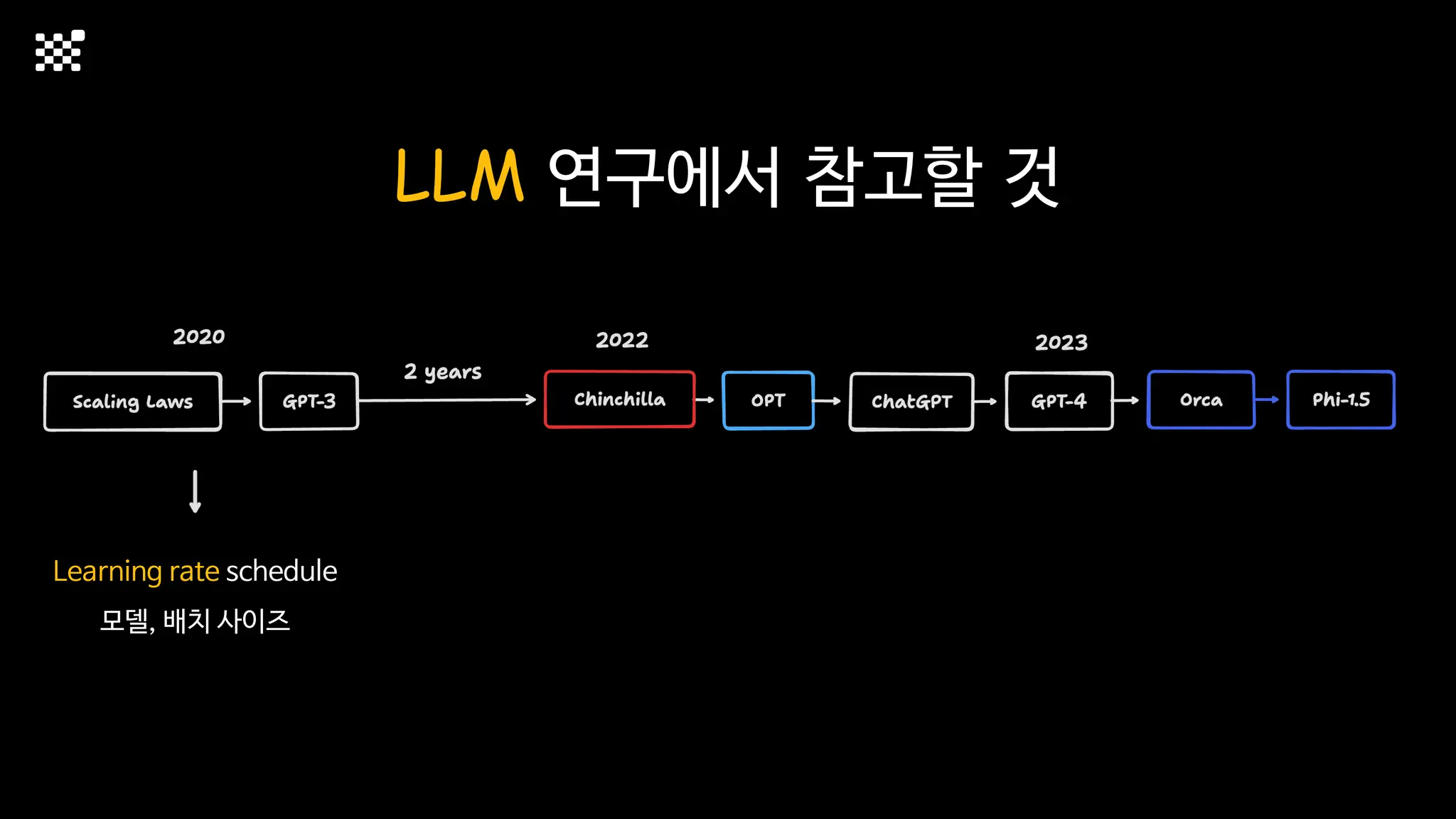

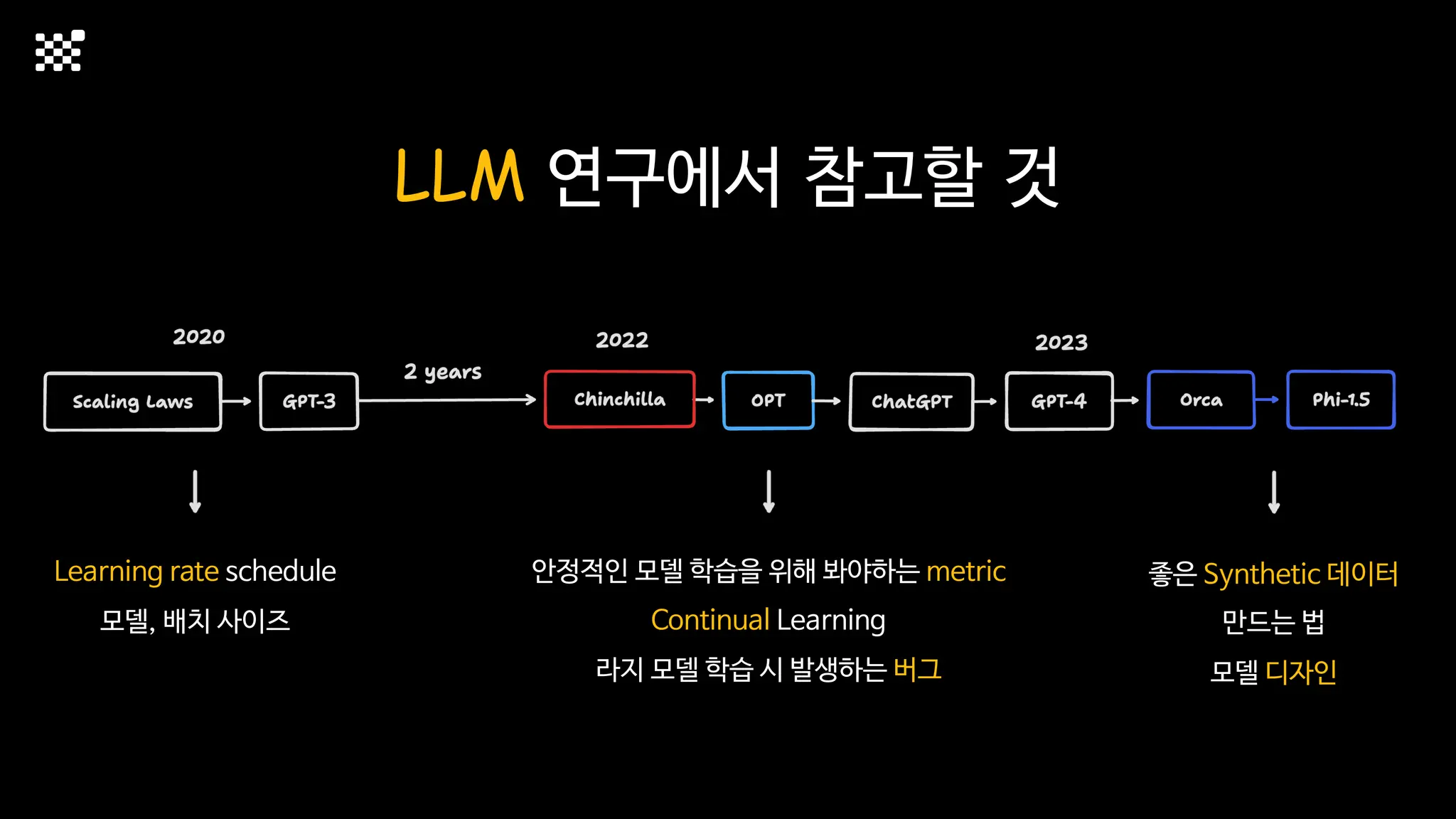

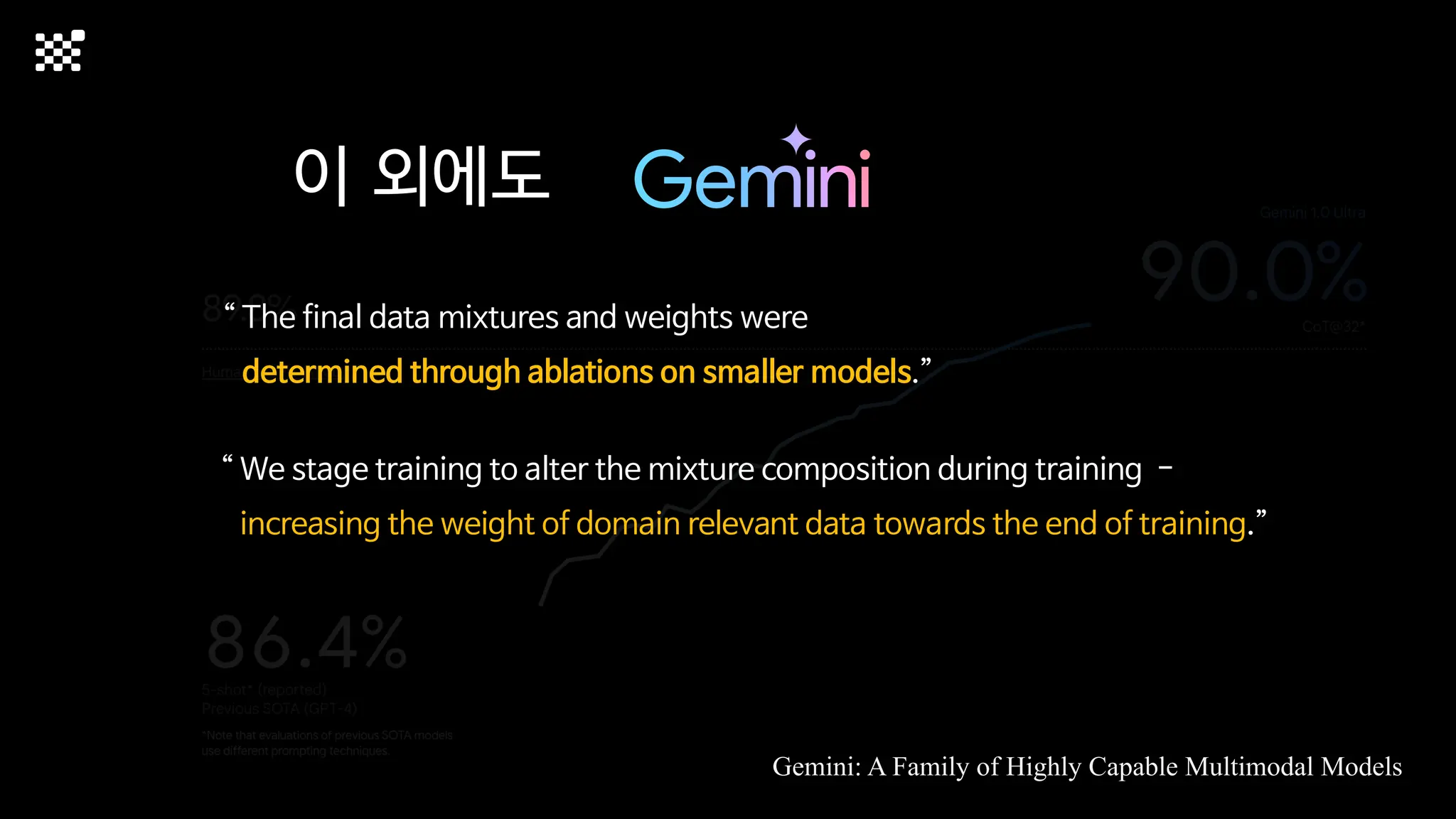

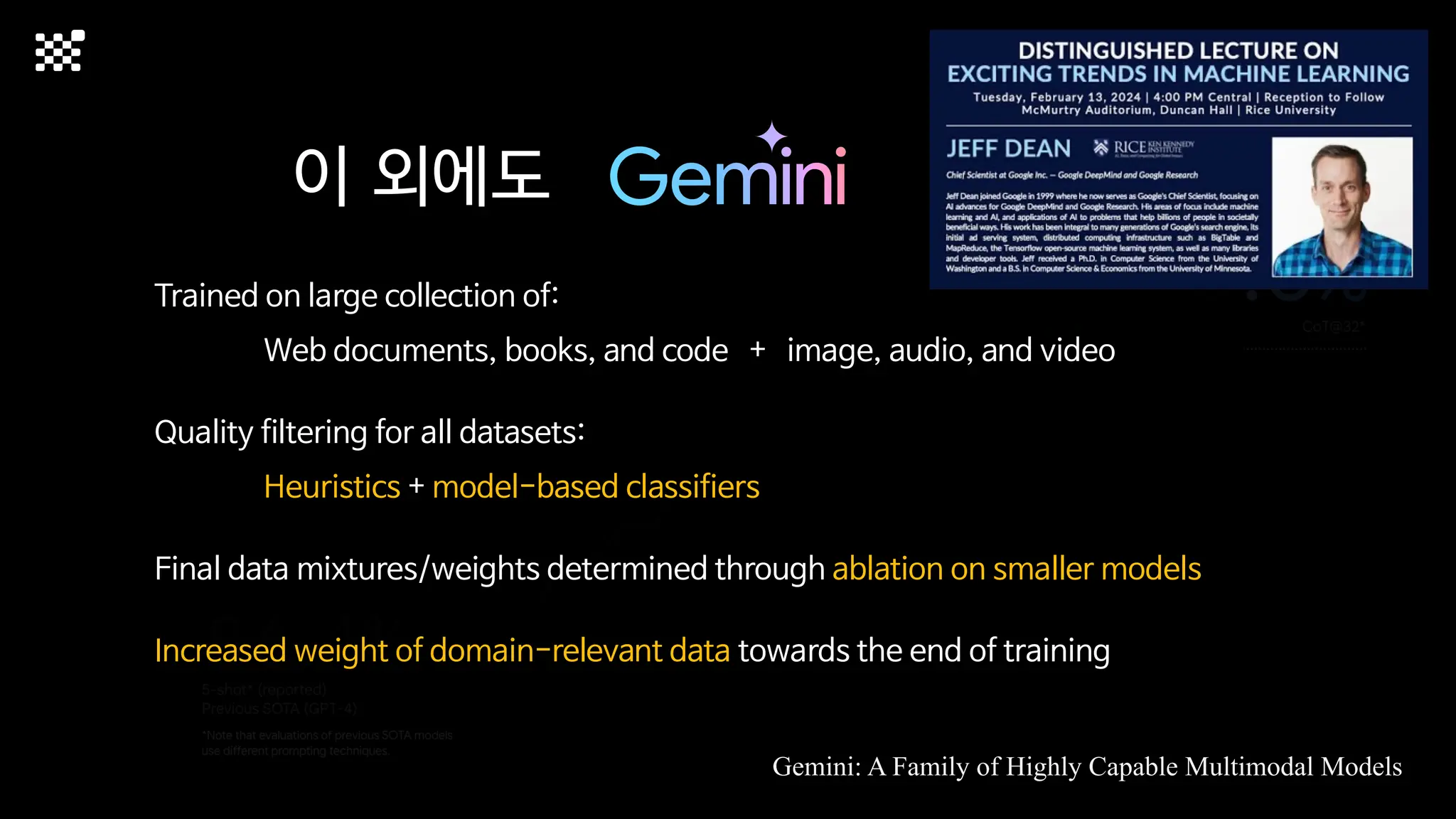

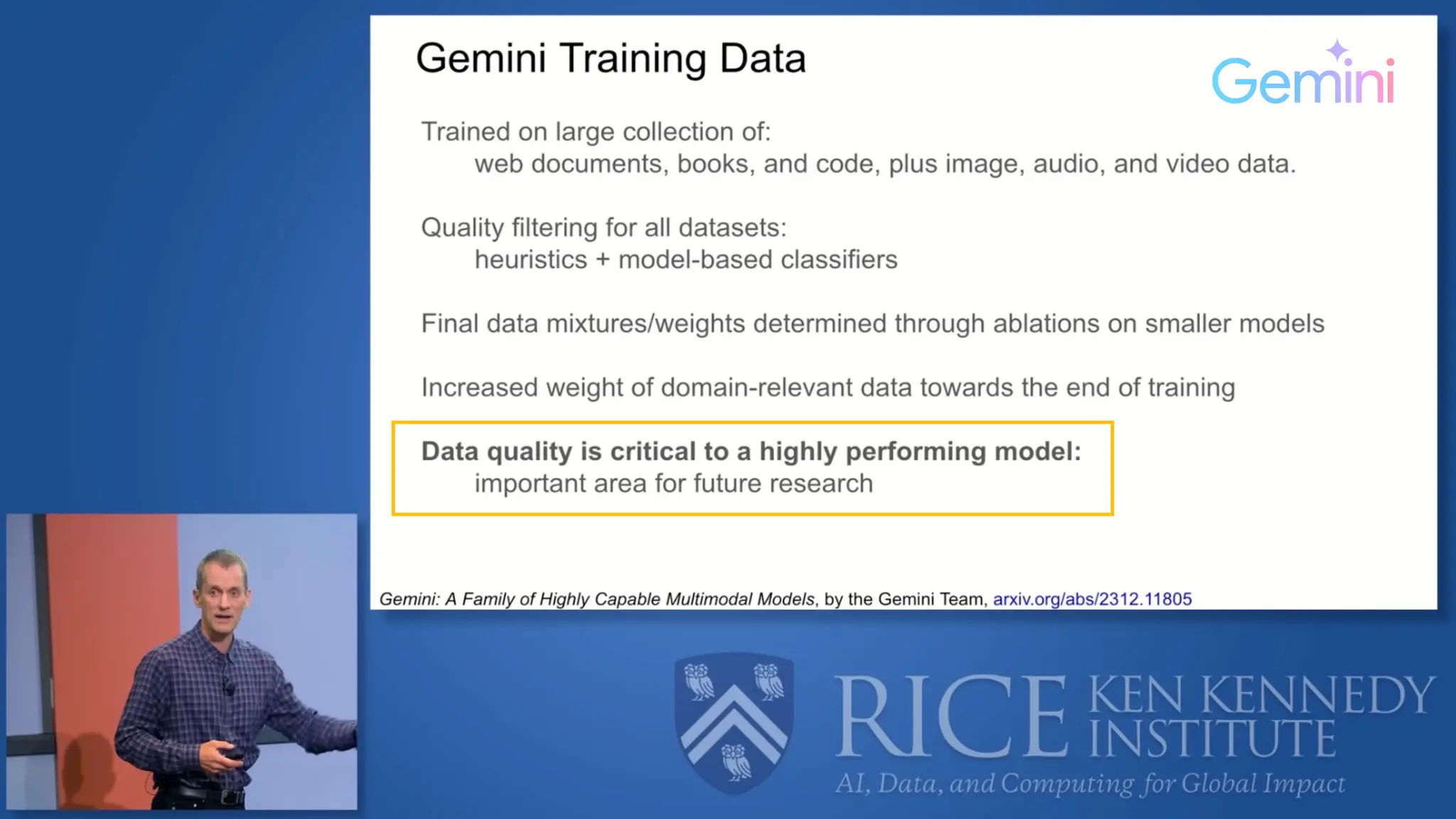

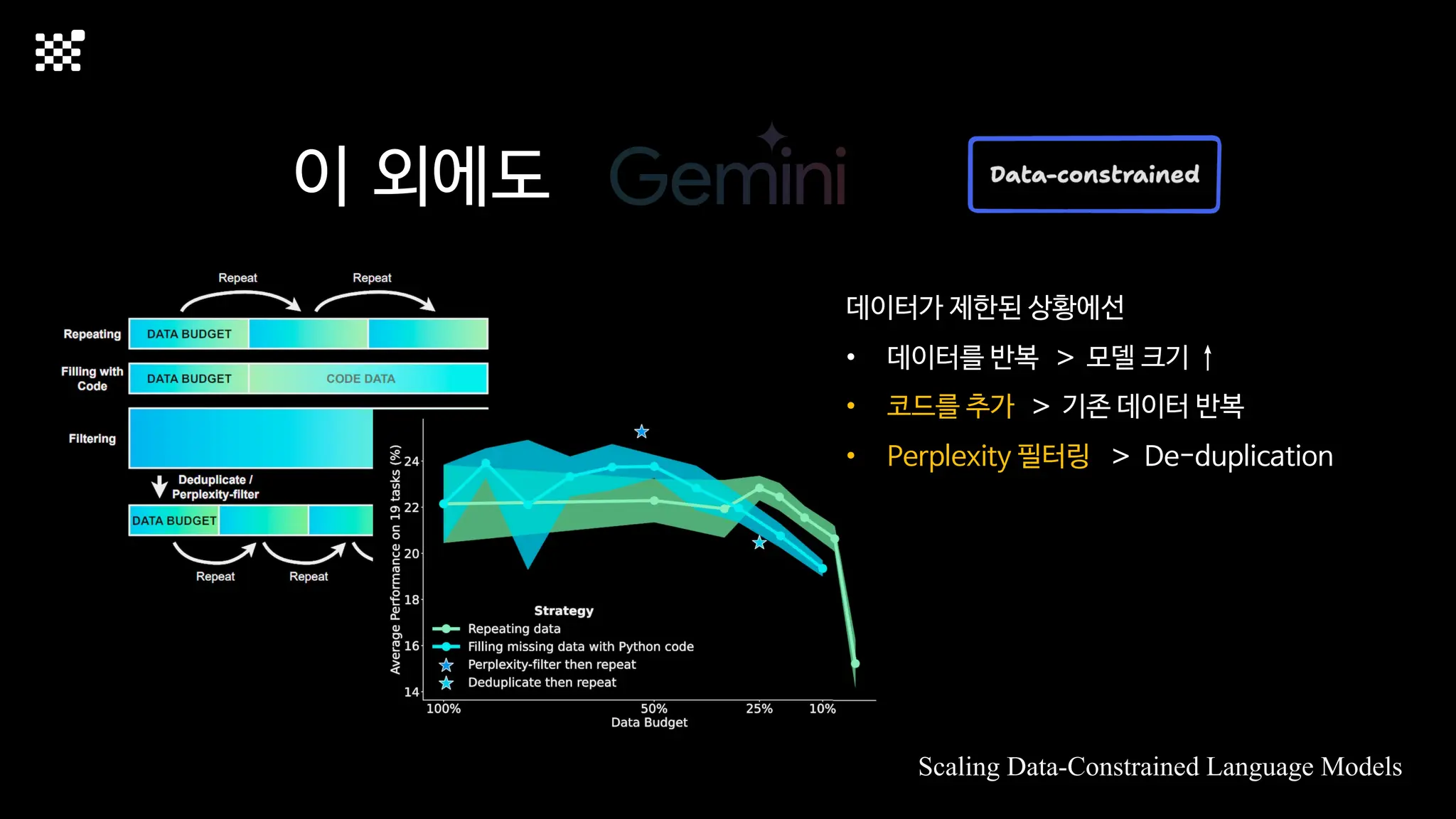

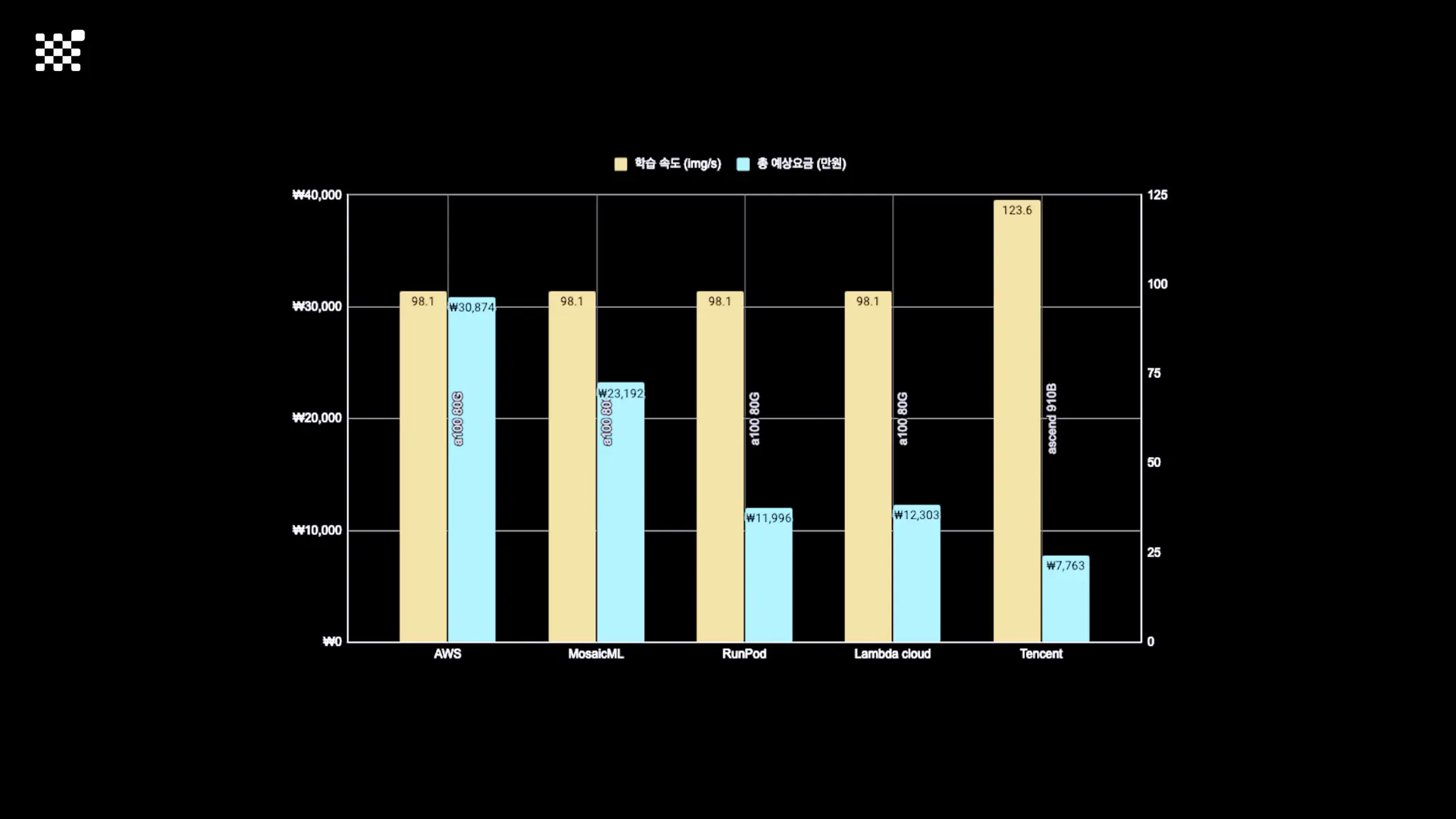

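It had been several years since I last ran experiments that each used more than 10 million KRW worth of GPU time, so I have relied heavily on LLM papers, where large-scale training processes are actively shared, while running these experiments.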

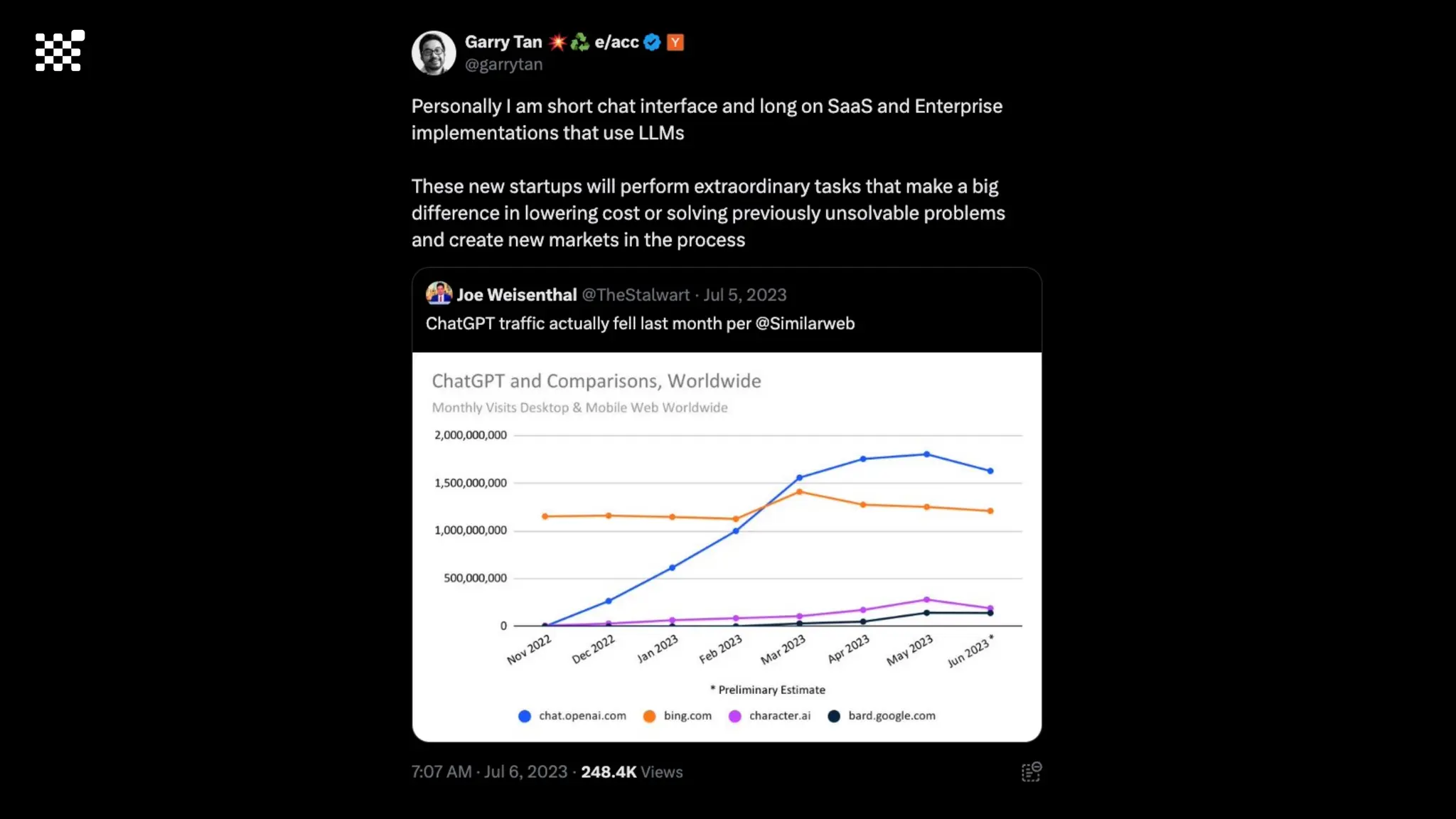

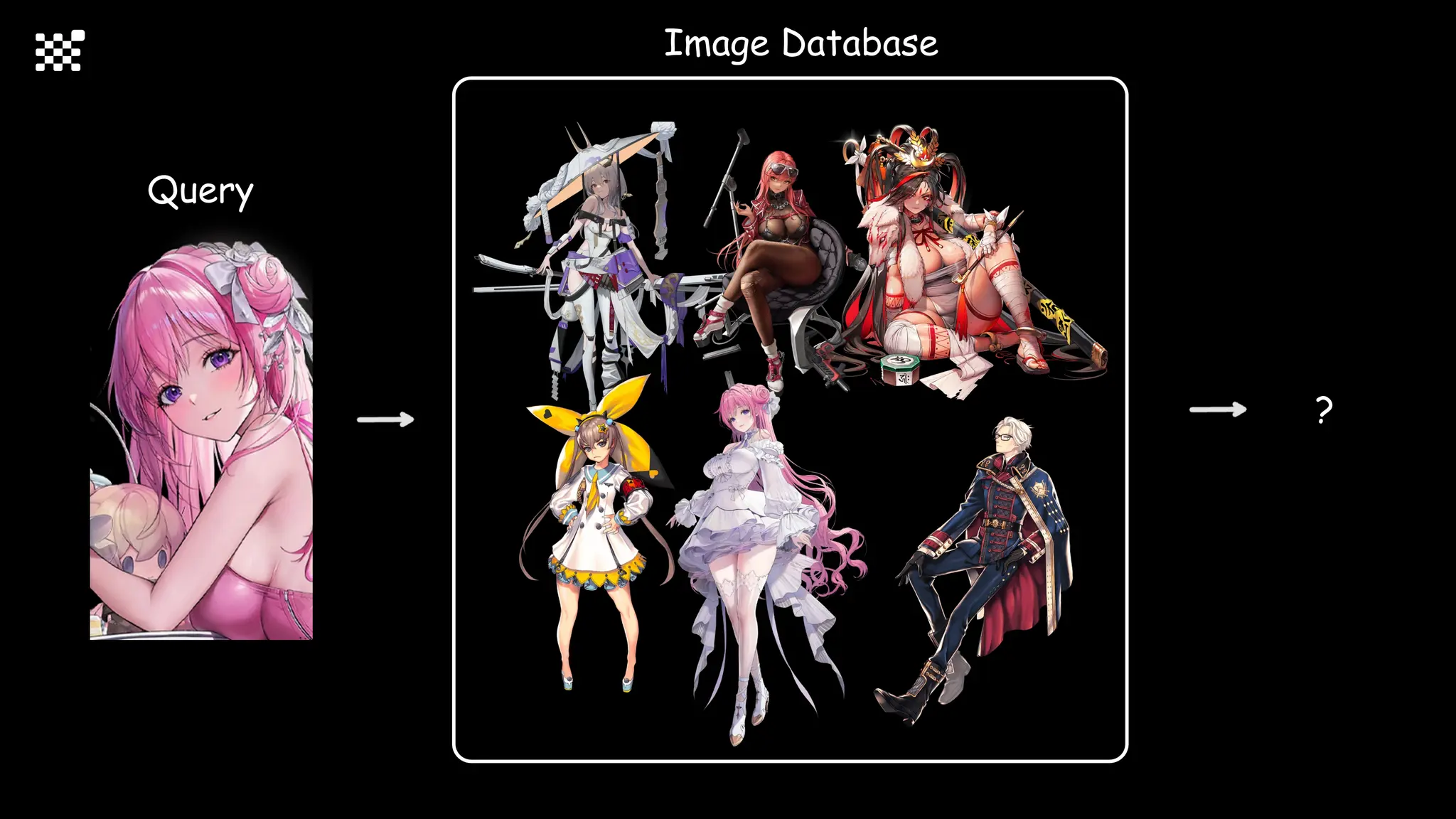

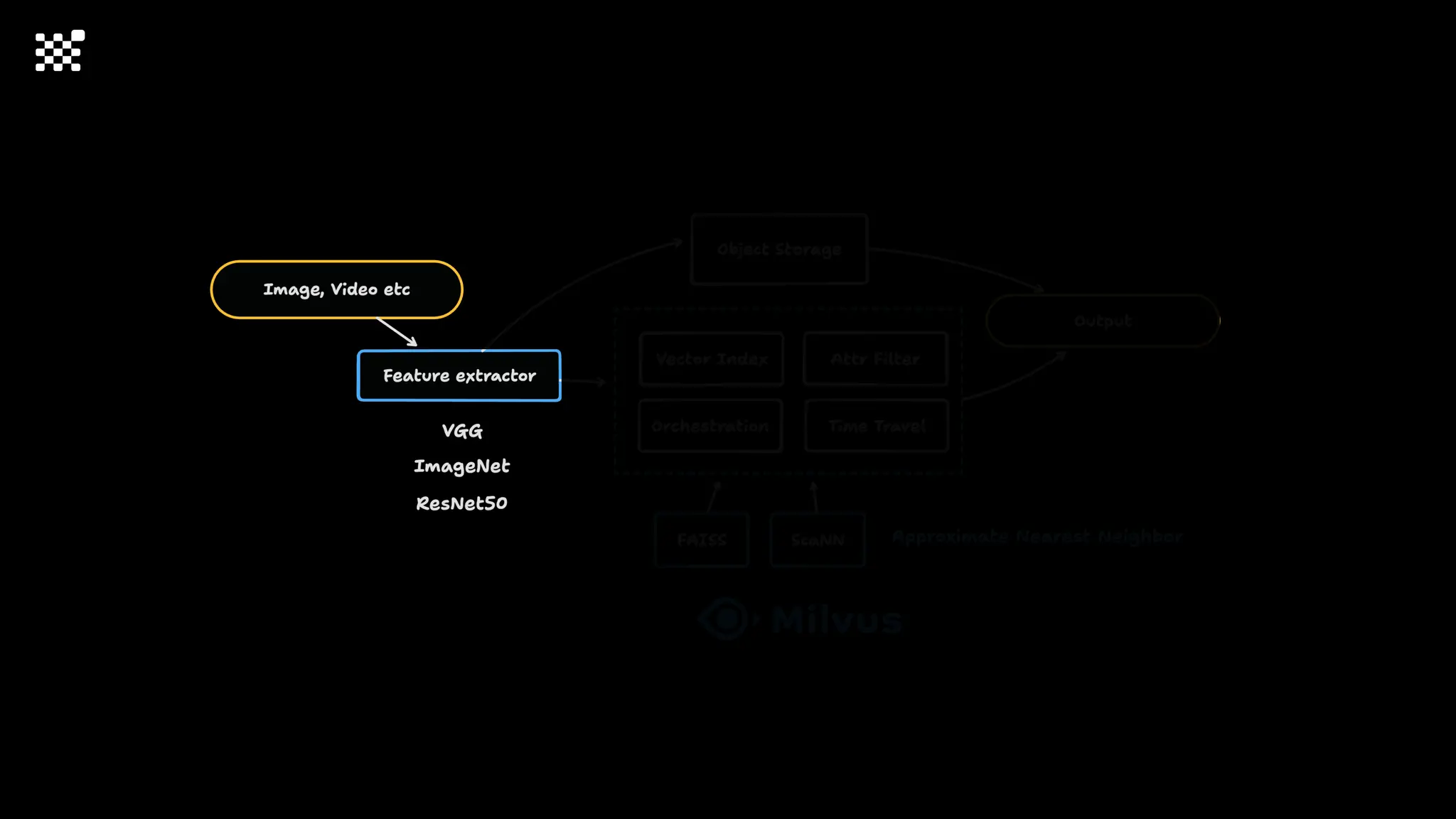

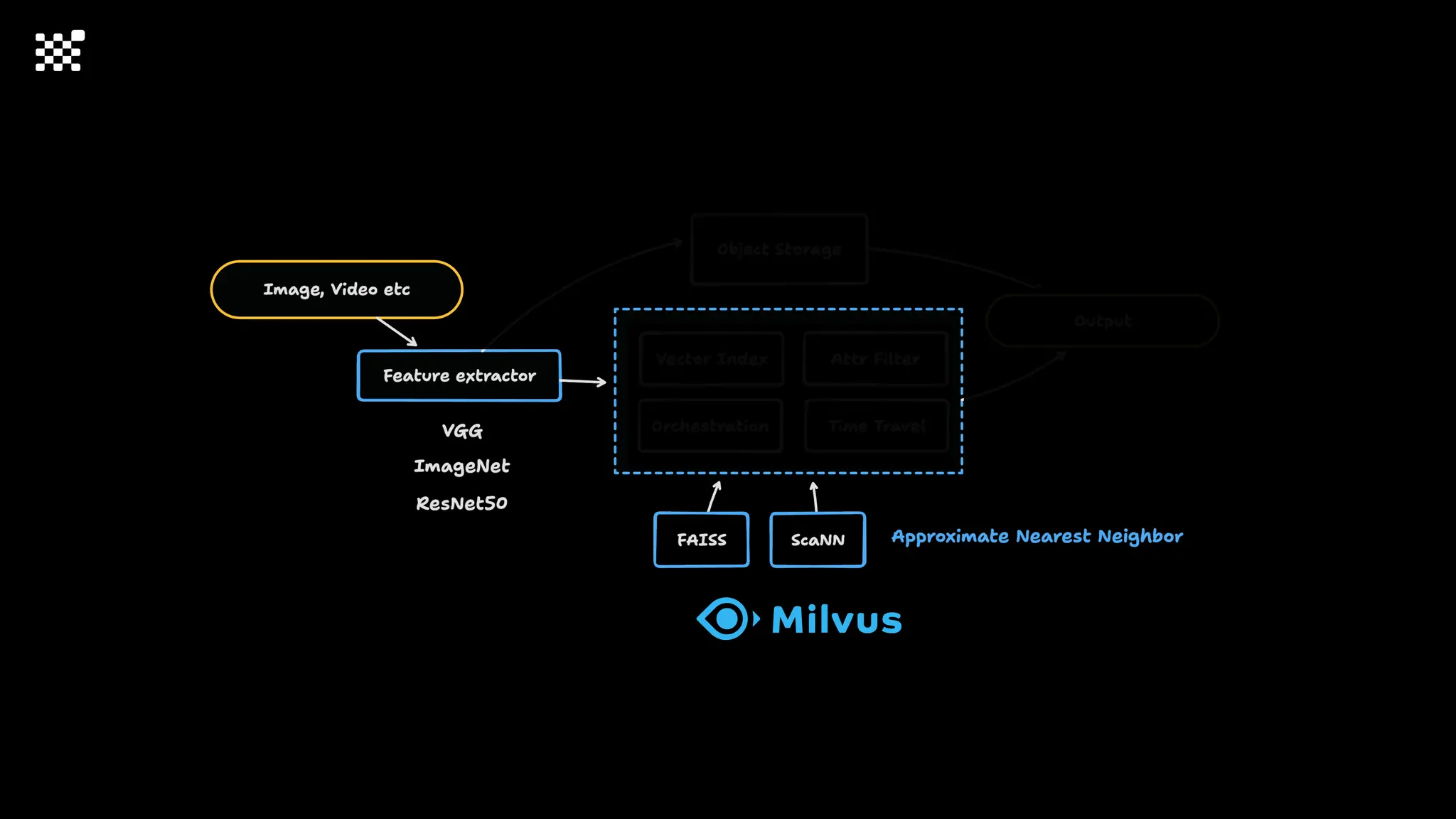

LLM에 많은 관심이 쏠린 지금, Large-scale diffusion model 학습은

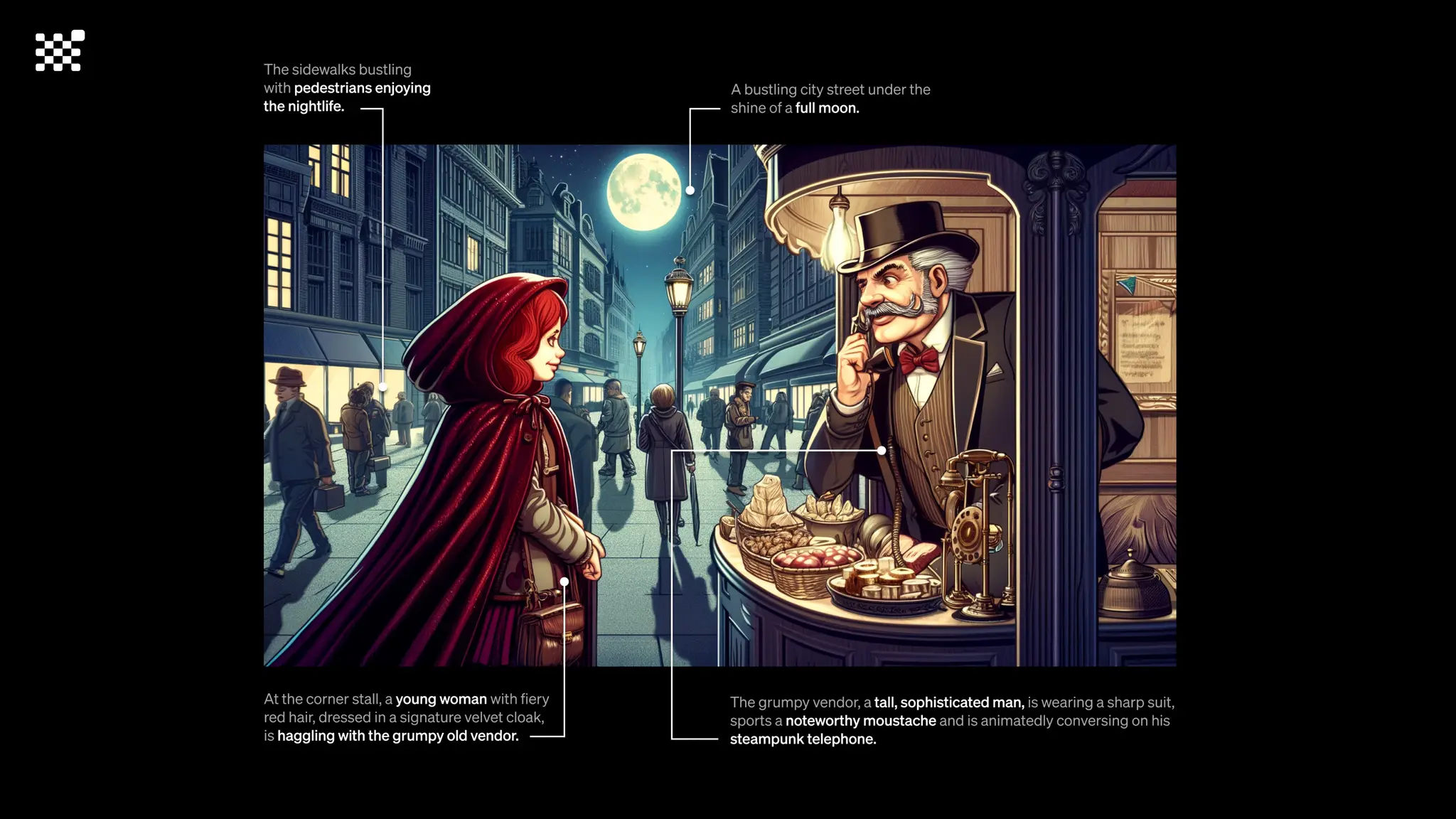

- 시각적이기 때문에 global scale이 용이하고

- 시장에 충분한 기회가 있으나 관심이 적고

- 큰 모델 학습에 관련된 경험이 거의 없기 때문에

그 과정에서 수많은 엔지니어링 문제를 푸는 것이 도전적이고 즐거운 것 같습니다!

If you would like to join us in building fine-grained image generation models that professionals can use, grounded in domain-specific knowledge, feel free to reach out anytime!

SHIFT UP AI Labs: https://bit.ly/shiftup-ai

* I am happy to give a talk anywhere there are people interested in discussing image generation models or in our team, so feel free to get in touch!

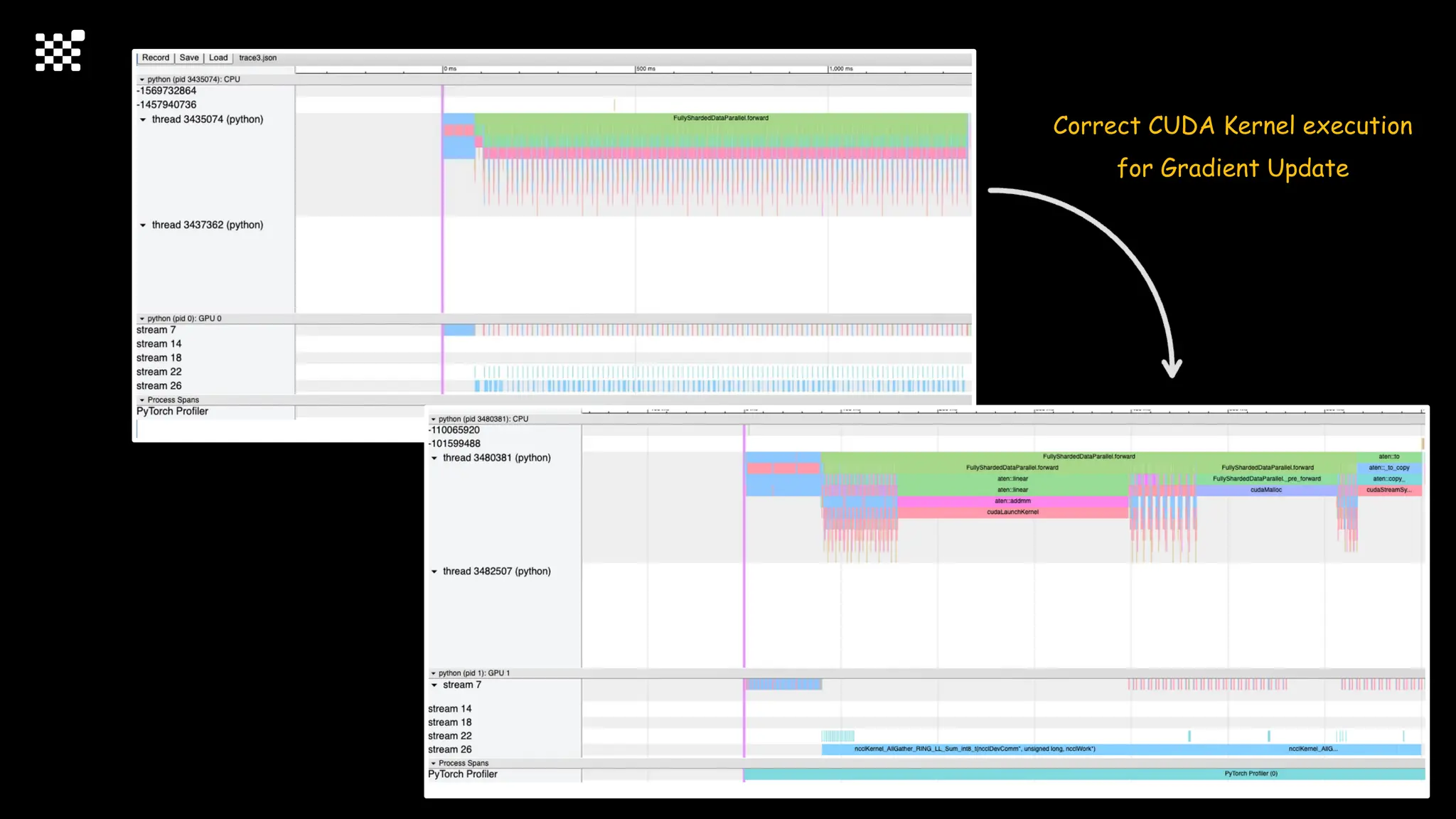

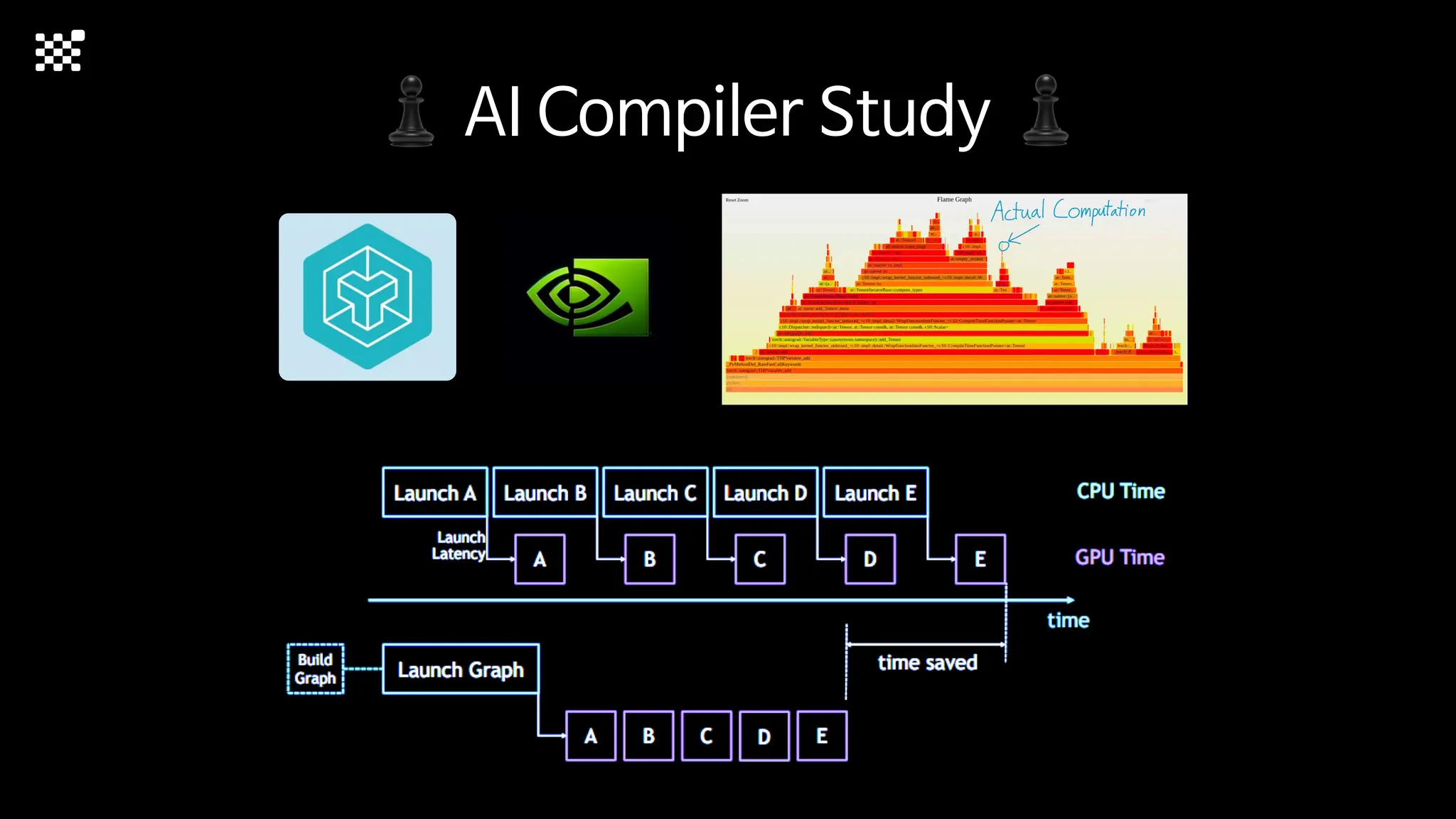

![Training Speed Error: the problematic optimizer.step() call wrapped in torch.profiler.profile (CPU and CUDA activities, record_shapes=True), with the trace exported to trace.json](https://image.slidesharecdn.com/2024attentionxpublic-240329071953-16f92dd7/75/LLM-ZERO-Training-Large-Scale-Diffusion-Model-from-Scratch-132-2048.jpg)
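The profiling setup on the slide above can be sketched as a small self-contained script. This is only an illustration and not the code from the actual training run: the toy `torch.nn.Linear` model, the `AdamW` optimizer, the helper name `profile_optimizer_step`, and the `record_function` labels are all assumptions made here. Only the `torch.profiler` calls (`profile`, `ProfilerActivity`, `record_shapes=True`, `export_chrome_trace`) come from the slide. The sketch falls back to CPU-only profiling when no GPU is available.

```python
import torch
from torch.profiler import profile, record_function, ProfilerActivity


def profile_optimizer_step(trace_path, steps=3):
    """Profile a few training steps and export a Chrome trace (hypothetical helper)."""
    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Toy stand-ins (assumptions): the real model and optimizer are not in the source.
    model = torch.nn.Linear(16, 16).to(device)
    optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

    # Always record CPU activity; add CUDA only when a GPU is present.
    activities = [ProfilerActivity.CPU]
    if device == "cuda":
        activities.append(ProfilerActivity.CUDA)

    with profile(activities=activities, record_shapes=True) as prof:
        for _ in range(steps):
            x = torch.randn(8, 16, device=device)

            # Label regions so they are easy to find in the trace viewer.
            with record_function("forward_backward"):
                loss = model(x).pow(2).mean()
                optimizer.zero_grad()
                loss.backward()

            # The suspect call from the slide, isolated in its own region.
            with record_function("optimizer_step"):
                optimizer.step()

    # Write a trace that can be opened in chrome://tracing or Perfetto.
    prof.export_chrome_trace(trace_path)
    return prof
```

Calling `profile_optimizer_step("trace.json")` writes the trace file, and `print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=10))` on the returned profiler gives a quick text summary. Labeling `optimizer.step()` separately from the forward and backward pass makes it easier to see whether the slowdown really sits in the optimizer.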